AIC JBOX (J5010-02): Some Fun PCIe Configurations

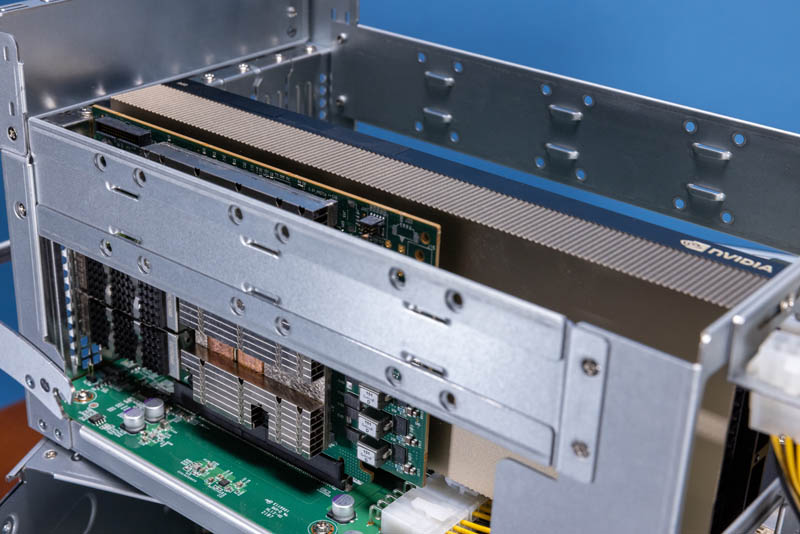

Continuing from the internal overview, here are the BlueField-2 DPU and the NVIDIA A40 GPU installed in the chassis. If you are thinking that this looks like a way to connect a GPU to a DPU through a PCIe switch, you are correct.

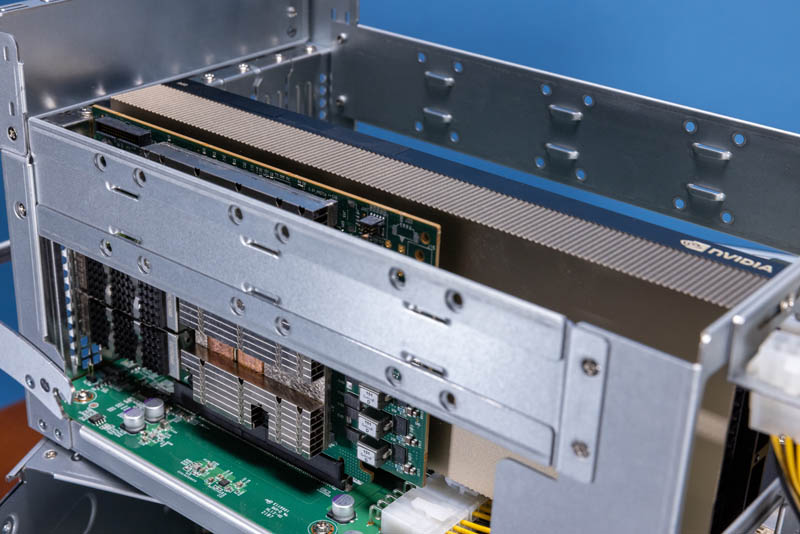

One small feature we did not know about until we started using larger cards in the JBOX is the movable power header assembly. Here is the double-width, full-height NVIDIA A40 connected via a data center power cable. You can see that there is plenty of room for cards with top-mounted power connectors. Still, we ran into a challenge: the GPU power connector interfered with the power cabling. The three screws between the four power headers can be loosened, and the entire mounting assembly for the power headers can then be moved. A quick shift and re-tightening, and everything worked perfectly without interference. This is a small feature, but it shows the level of thought that went into the JBOX.

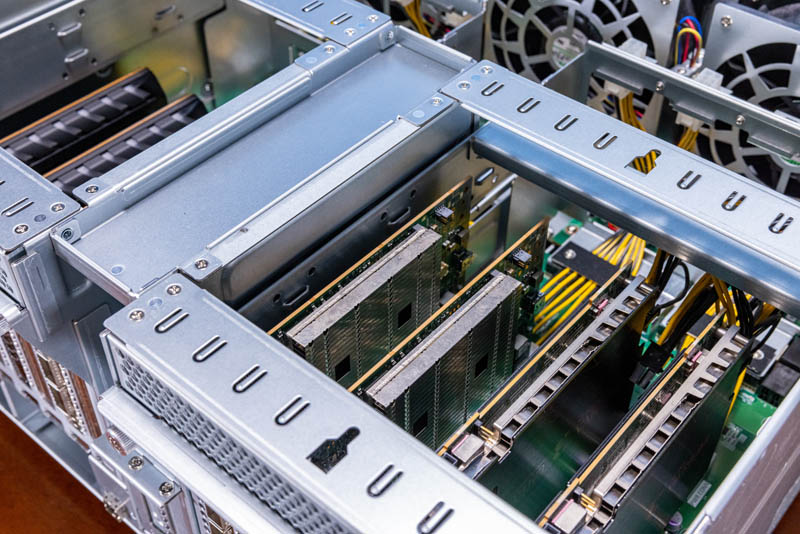

Here is another view with a BlueField-2 DPU and three low-profile add-in cards that are high-performance SSDs. This configuration does not use the entire width available. One could imagine AIC building a version of this chassis with 8x PCIe Gen4 x8 slots. That is really one of the concepts behind the JBOX: AIC has other versions it does not publicly list, and this chassis was designed to be flexible enough for custom customer designs.

We also used some Xilinx FPGA development boards loosely based on the Alveo U55 platform. These particular cards required the consumer/desktop-style 8-pin power connectors. Since they are shorter cards, we needed a bit more cable length, which is another advantage of the movable power header. We could both swap to the different power cables and gain a little more reach.

While one can use consumer GPUs in this chassis, we will quickly note that many of today's consumer GPUs are not standard sizes. This is an NVIDIA RTX 3060 Ti, and it was simply too long to fit in the system. Some GPUs are also wider than double-width (up to triple-width or more), which would not be ideal in this platform either, given the PCIe slot spacing.

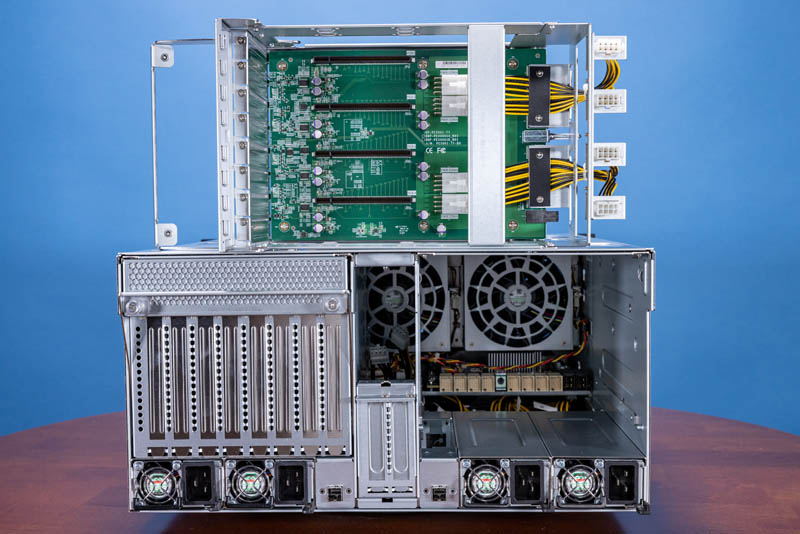

AIC bundles both the consumer GPU power cables (each with 2x 8-pin headers) and the data center accelerator PCIe power cables with the unit. It even comes with rubber feet for those who do not want to put these systems in traditional racks.

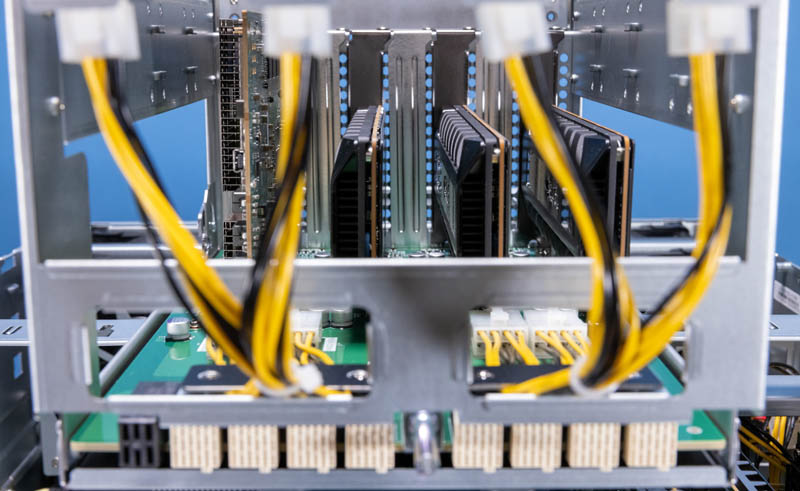

Here is a dual Xilinx FPGA and dual NVIDIA BlueField-2 configuration installed in the chassis.

Here is a version with storage, networking, FPGAs, and Arm CPU cores, and there is even a GPU installed on the far side of the system.

Here again is the heavier networking configuration. There are eight full-height PCIe cards in total, including three DPUs and two FPGAs that have their own HBM2 memory. We have a storage node on the left and a networking and accelerator node on the right. There is also room to add two more low-profile cards in the center section. That gives a sense of just how flexible this system is.

While we have SSDs for storage here, one could just as easily install SAS HBAs with external ports to attach even more storage.

Hopefully, by this point, our readers can see where this is heading on STH, as we will have a lot more content on it in the future.

Final Words

One thing we can say for certain is that not every server rack will need an AIC JBOX. If you are using traditional GPU servers, or if you do not need accelerators, then the AIC JBOX is not something you will need. Still, it offers an easy way to add PCIe devices to one system or to several. So much fits on the PCIe bus these days that simply having a chassis with PCIe switches and slots is awesome. The shorter depth also means that one can potentially use lower-power, short-depth 1U/2U servers and then use external cabling to a chassis like this to get massive PCIe expandability with plenty of power and cooling.

We will quickly note that AIC makes other variants of this JBOX for customers, but this one should be fascinating to many of our readers. AIC has a number of customers for the JBOX, and it shows. We first saw this system in November 2021, and it took a few months to actually get one because they are in such high demand. The easy-to-service, flexible design shows why AIC sells a lot of these JBOXes.

Later in 2022, we will enter the PCIe Gen5 and CXL era, and future versions of boxes like this JBOX will change computer architecture. For now, the AIC JBOX is simply an awesome way to add flexible PCIe expansion to a number of servers or to create exciting PCIe-based systems.

As you can probably tell from some of the photos, some of these setups are just for show, while others are ones we hope to show you more of in the coming weeks and months. Get ready.

What an interesting device! Is there any idea on pricing yet? It would be very interesting if they brought out a similar style of device but with U.3 NVMe slots on the front instead, so you could load it up with fast SSDs but only take up a single x16 PCIe slot on the host.

Excellent device, Patrick! Great review.

I wonder, though, what that power connector on the sled is rated for. Can it handle 1200W for four A40s or similar cards? It does not look very chunky.

Are there any public examples of builds using the AIC JBOX?