At OCP Summit 2025, we saw the Xsight Labs E1 in a different light. If you need a quick refresher on the E1, see our Xsight Labs E1 DPU Offers Up to 64 Arm Neoverse N2 Cores and 2x 400Gbps Networking piece. While some may think these are just slideware, I actually got to help open the first Xsight Labs E1 DPU PCIe cards to arrive in California a few weeks ago. At the show, in a hotel suite, we found a really neat AI storage solution from Hammerspace based on the E1 DPU.

Xsight Labs E1 800G 64-Core Arm DPU Shown for Hammerspace AI Storage

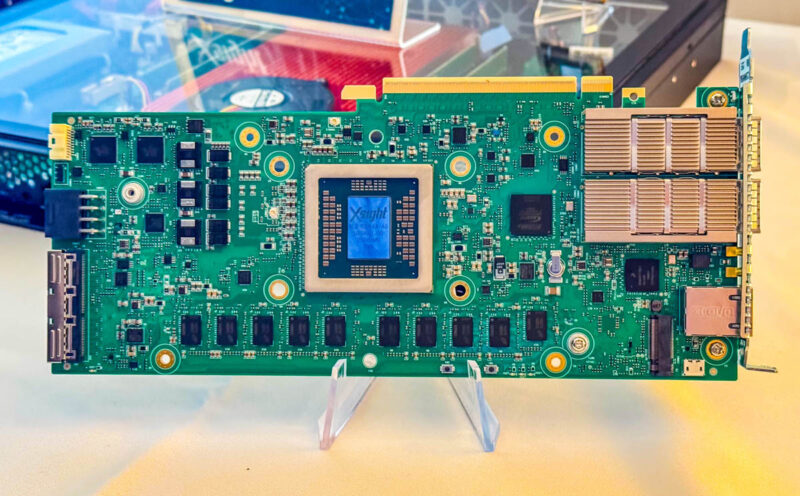

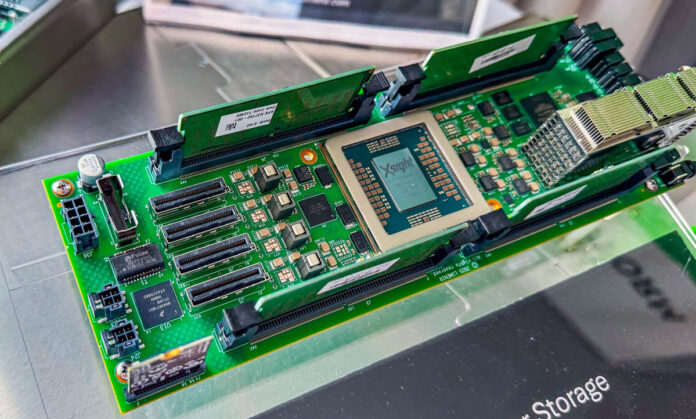

Here is the Xsight Labs E1 DPU, a 64-core Arm Neoverse N2 chip that can push two 400GbE ports' worth of traffic.

While the E1 is exciting as a networking device (it is one we are very excited about), there was something else in the suite: the Open Flash Platform.

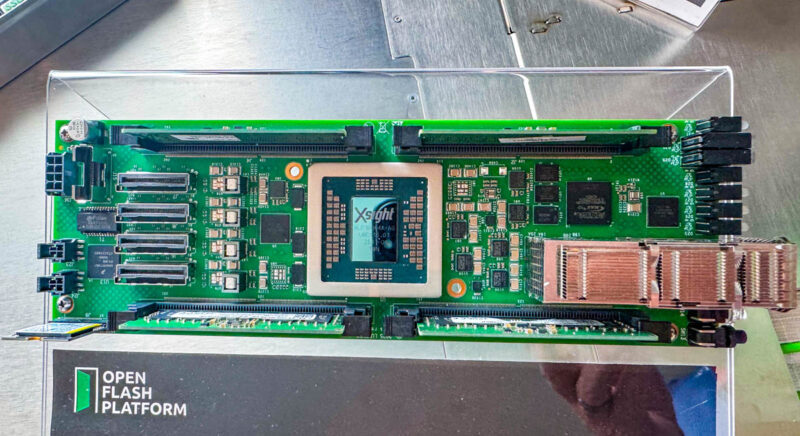

This is a small E1 card with four SODIMMs, PCIe connectivity on one end, and networking on the other.

On the PCIe side, we get MCIO connectors for cabled connectivity.

For the networking side, there are two 400GbE ports, providing 800G of networking connectivity.

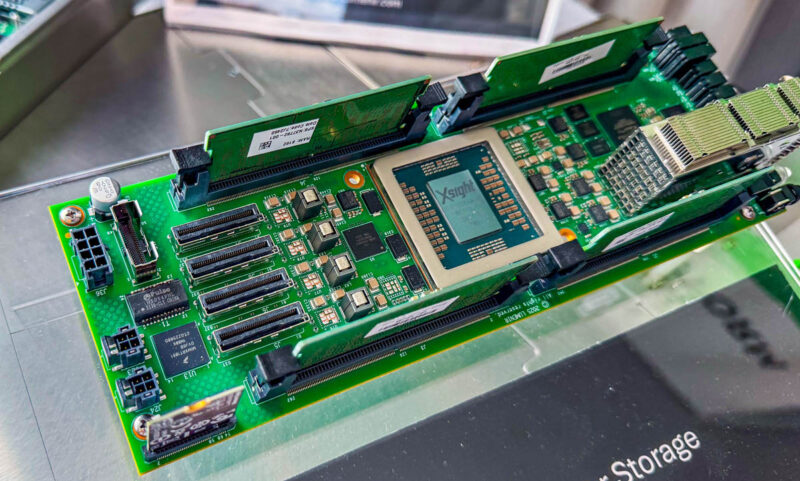

That compute module fits into a 1/5th-width OCP chassis that also has SSDs.

This one has eight ScaleFlux 2.5″ U.2 SSDs. Only four are visible, but the green pull tabs give access to a second layer of stacked drives, so this is actually four pairs of drives.

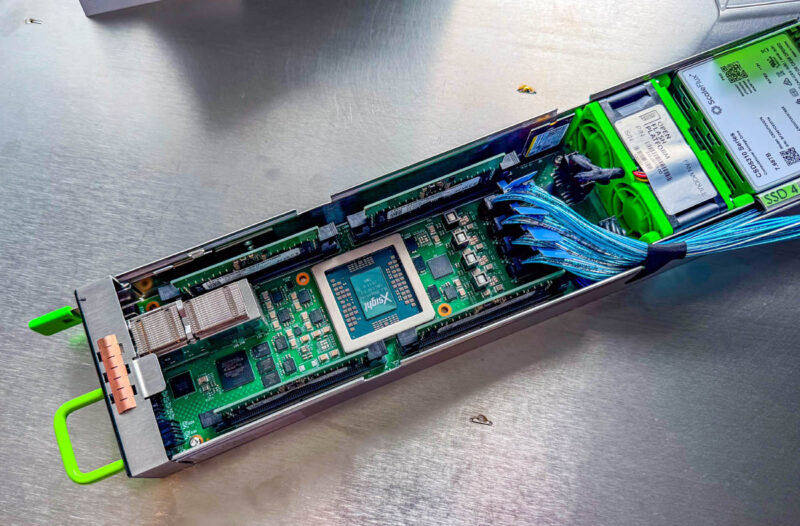

Here is the E1 compute node in the chassis, wired with x8 cables. You can also see the fans.

These deep storage nodes are then installed five across in the 1OU (one Open Compute rack unit) chassis.

Here are the five DPU storage nodes next to each other.

For some density figures, that is 320 Arm Neoverse N2 cores, 4Tbps of networking, and 40 U.2 SSDs in 1OU.
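The 1OU figures follow directly from the per-node specs. Here is a minimal Python sketch of that arithmetic, assuming one E1 DPU per node and five nodes per chassis as pictured; the constant and function names are hypothetical and only for illustration.

```python
# Per-node figures from the article: one E1 DPU per storage node.
CORES_PER_NODE = 64      # Arm Neoverse N2 cores per E1
PORTS_PER_NODE = 2       # 400GbE ports per E1
PORT_SPEED_GBPS = 400    # speed of each Ethernet port
SSDS_PER_NODE = 8        # ScaleFlux U.2 SSDs per node (four stacked pairs)
NODES_PER_1OU = 5        # five 1/5th-width nodes across one 1OU chassis


def chassis_density(nodes: int = NODES_PER_1OU) -> dict:
    """Aggregate cores, network bandwidth (Tbps), and SSD count for one chassis."""
    return {
        "cores": nodes * CORES_PER_NODE,
        "network_tbps": nodes * PORTS_PER_NODE * PORT_SPEED_GBPS / 1000,
        "ssds": nodes * SSDS_PER_NODE,
    }


print(chassis_density())
```

With the defaults this reports 320 cores, 4.0Tbps, and 40 SSDs, matching the figures above.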

Final Words

This is a really neat setup by Hammerspace because of its balance. The 800Gbps of networking per node roughly matches the bandwidth of the eight PCIe Gen5 x4 SSDs in each node. In a traditional storage server, you might need a high-speed NIC plus host CPU cores to run the storage stack and drive the PCIe root complex. Instead, this DPU is designed to do all of that without a host system. While NVIDIA BlueField-4 with 64 Arm Cores and 800G Networking was announced for 2026, we have already seen the Xsight Labs E1 cards, so it seems the startup is ahead in shipping. Xsight Labs is also an example of a different philosophy on building these DPUs, as we discussed in our Substack a few weeks ago.
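To put rough numbers on the balance argument, here is a back-of-the-envelope sketch comparing PCIe Gen5 x4 link bandwidth with two 400GbE ports. It assumes the standard Gen5 signaling rate of 32 GT/s per lane with 128b/130b encoding, and it ignores protocol overhead and the drives' own throughput limits. Treat the result as an upper bound on link capacity, not measured SSD performance. The names are hypothetical.

```python
GEN5_GT_PER_LANE = 32.0          # PCIe Gen5 signaling rate, GT/s per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line coding (PCIe Gen3 and later)


def pcie_link_gbps(lanes: int, gt_per_lane: float = GEN5_GT_PER_LANE) -> float:
    """Line rate of a PCIe link in Gbps, one direction, after 128b/130b encoding.

    Ignores TLP/DLLP protocol overhead, so real payload throughput is lower.
    """
    return lanes * gt_per_lane * ENCODING_EFFICIENCY


ssd_link = pcie_link_gbps(4)       # one Gen5 x4 SSD
storage_total = 8 * ssd_link       # eight SSDs per node
network_total = 2 * 400            # two 400GbE ports per node, in Gbps

print(f"per SSD: {ssd_link:.1f} Gbps")
print(f"8 SSDs:  {storage_total:.1f} Gbps vs network {network_total} Gbps")
print(f"ratio:   {storage_total / network_total:.2f}")
```

Eight Gen5 x4 links come to roughly 1,008Gbps of raw link capacity against 800Gbps of Ethernet. Once protocol overhead and real-world SSD throughput pull the PCIe figure down, the two sides land in the same ballpark.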

Another big takeaway is the storage density. If you could fit 40 of these 1OU systems into an ORv3 rack, that would be 12,800 Arm Neoverse N2 cores, 1,600 SSDs, and 400 400GbE ports per rack. Using today’s 122.88TB or 256TB SSDs, that is a massive amount of raw storage: roughly 197PB with 122.88TB drives or about 410PB with 256TB drives.
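The rack-level numbers scale linearly from one 1OU system. Here is a minimal sketch, assuming the hypothetical 40-systems-per-rack scenario above and counting raw decimal capacity before any RAID, erasure coding, or filesystem overhead. The names are illustrative only.

```python
SYSTEMS_PER_RACK = 40    # hypothetical: the "if you could fit 40" scenario
CORES_PER_SYSTEM = 320   # five nodes x 64 cores
SSDS_PER_SYSTEM = 40     # five nodes x 8 SSDs
PORTS_PER_SYSTEM = 10    # five nodes x two 400GbE ports


def rack_totals(ssd_tb: float, systems: int = SYSTEMS_PER_RACK) -> dict:
    """Per-rack cores, SSDs, 400GbE ports, and raw capacity in decimal PB."""
    ssds = systems * SSDS_PER_SYSTEM
    return {
        "cores": systems * CORES_PER_SYSTEM,
        "ssds": ssds,
        "ports_400gbe": systems * PORTS_PER_SYSTEM,
        # Raw capacity only: no RAID, erasure coding, or filesystem overhead.
        "raw_pb": round(ssds * ssd_tb / 1000, 2),
    }


for capacity_tb in (122.88, 256):
    print(capacity_tb, rack_totals(capacity_tb))
```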

At this point, you might be wondering, how does the Xsight Labs E1 work? Or perhaps, can it really pass traffic at 800Gbps speeds through a pair of 400GbE ports? There might be a reason we already tested the 800Gbps traffic generation capabilities in our MikroTik CRS812-8DS-2DQ-2DDQ-RM Review. Stay tuned for more on STH.
