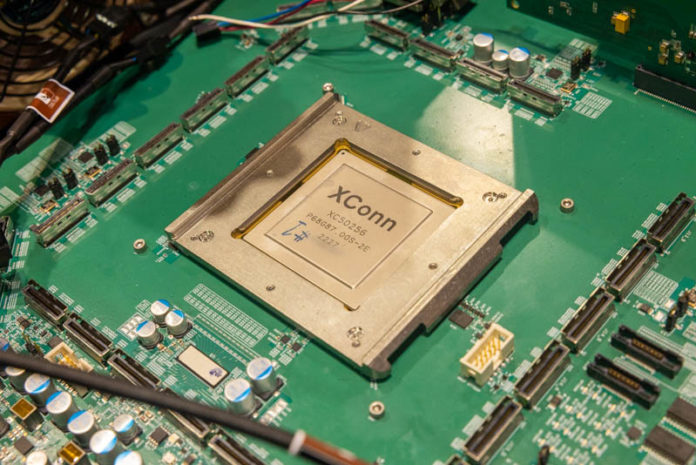

At Flash Memory Summit 2023, there was a really neat CXL demo. XConn had its XC50256 running on the show floor, linked to devices across multiple chassis. We have been tracking the XConn CXL 2.0 switch chip for some time, and this year the demo was greatly expanded.

XConn XC50256 CXL 2.0 Switch Chip Linked and Running at FMS 2023

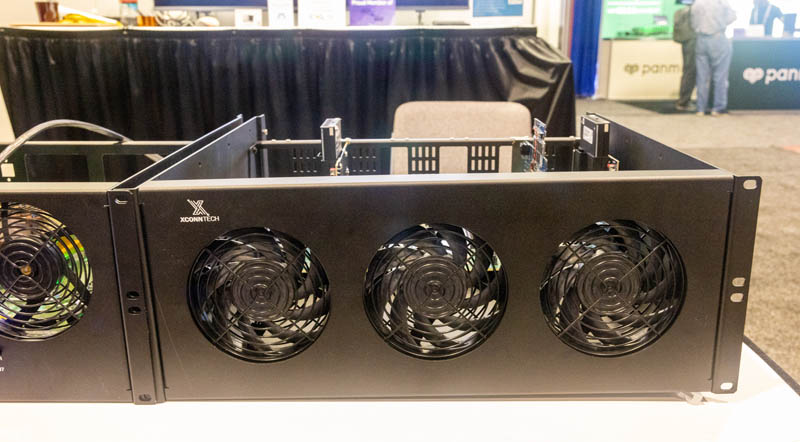

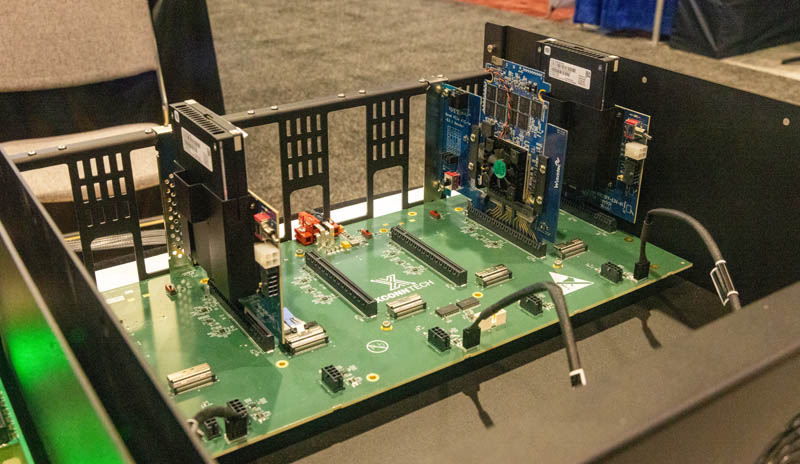

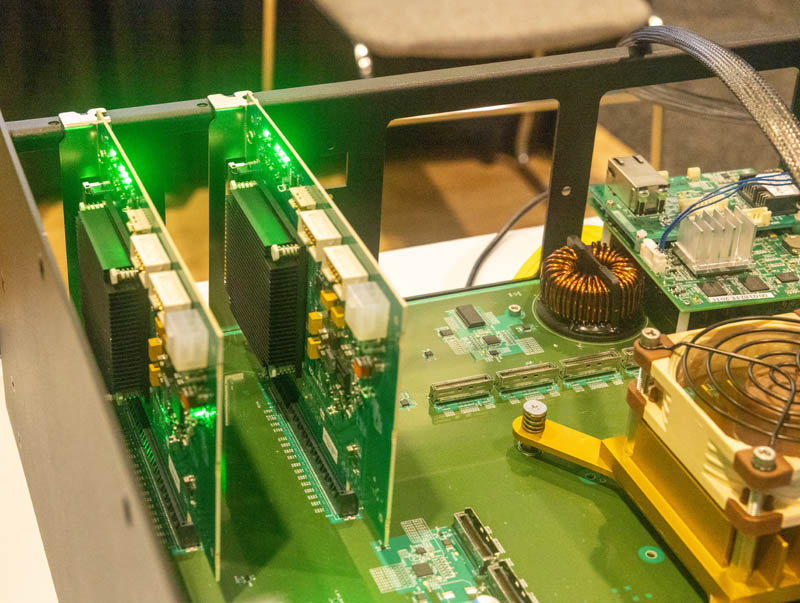

At FMS 2023, XConn had what are now familiar boxes with devices plugged in.

The company was showing off compatibility with a number of devices at the show. The big demo, though, combined Samsung 256GB CXL memory modules, H3 Platform's 2TB of CXL memory in 2U, and MemVerge's Memory Machine X software tying everything together over the XConn switch.

The XConn switch previously had large and loud coolers. The new design uses a quieter Noctua fan. We also saw a number of new devices in the booth.

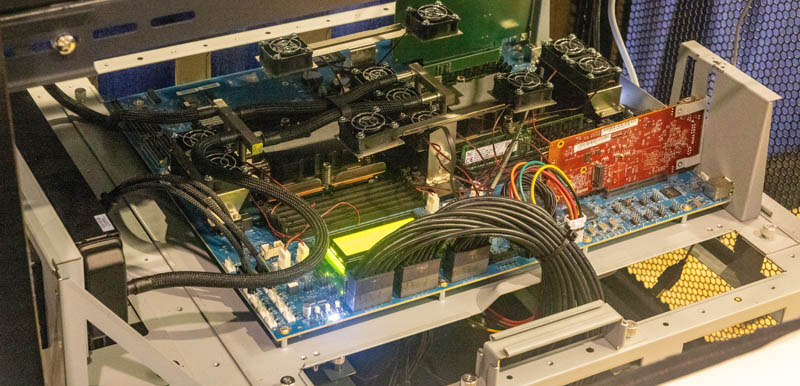

One of the fun parts: while most of the early CXL demos used Intel Xeon Sapphire Rapids, at the XConn booth we saw this crazy system. It may be hard to tell, but XConn was demonstrating not just with Intel Xeon, but also with AMD EPYC. That is a dual-socket SP5 12-channel memory platform.

A hallmark of most CXL demos is that the CPU memory slots are not filled since the focus is on CXL memory. This was in a rack tying a number of systems and CXL devices together over the XConn CXL 2.0 switch to show off pooling.

Final Words

It is amazing to see how XConn's CXL switch demos have progressed. When we first saw the chip, it looked like a static package. Next came a single CXL switch attached to an Intel Xeon Sapphire Rapids system with a single device. Now XConn is showing compatibility with multiple CXL devices across Intel and AMD platforms and running pooling with MemVerge.

Our guess is that in 2024 CXL is really going to start to pick up as these XConn CXL 2.0 switches hit production, CXL devices become more abundant, and CPU support encompasses CXL 2.0 across Type-3 devices as well.

"Amazing"? Really? Who will pay for a CXL 2.0 switch to use a CXL RAM pool that is 10 times slower and adds 10 times more latency than local CPU DRAM? Genuine question.

The truth is that CXL 2.0 bandwidth is too low to be really useful in this generation. Maybe CXL 3 will get a bit of traction, but for now it's just a tech demo without any real market until they solve the huge performance problem…

A minor limitation of the XC50256 seems to be that it only supports 32 ports for switching. Across its 256 lanes, that equates to 8 lanes per port, which is genuinely a lot of bandwidth for a single device endpoint. The gotcha comes when topologies expand to include multi-host and/or multi-pathing: those 8 lanes to a single device would be split into 4 lanes going to each of two different XC50256 switches to provide redundant paths to the host processor(s). The switch chip can operate a port using only 4 lanes, but the remaining 4 lanes available to that port go unused. The only case where this isn't a concern for multi-pathing/multi-host is x16 slots, which is fine for various high-end accelerators.
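The lane arithmetic in that comment can be checked with a quick sketch. It assumes a 256-lane switch with 32 ports, each port capped at 8 lanes, and that a multi-pathed device splits its lanes evenly with one port per switch; the function names are hypothetical and only illustrate the budget, not any XConn configuration interface.

```python
# Back-of-the-envelope lane budget for a switch with 256 lanes and 32 ports.
# Assumptions: lanes are divided evenly across ports, and a multi-pathed
# device splits its lanes evenly across `paths` switches (one port on each).
TOTAL_LANES = 256
MAX_PORTS = 32


def lanes_per_port(total_lanes: int = TOTAL_LANES, ports: int = MAX_PORTS) -> int:
    """Maximum lanes a single port can use when lanes are divided evenly."""
    return total_lanes // ports


def idle_lanes_per_port(device_width: int, paths: int) -> int:
    """Lanes left unused on each switch port when a device of `device_width`
    lanes is split evenly across `paths` switches."""
    per_path = device_width // paths
    per_port = lanes_per_port()
    if per_path > per_port:
        raise ValueError("each path is wider than one port can carry")
    return per_port - per_path


print(lanes_per_port())            # 8 lanes per port
print(idle_lanes_per_port(8, 2))   # x8 device split over two switches: 4 idle per port
print(idle_lanes_per_port(16, 2))  # x16 device split over two switches: 0 idle
```

Under these assumptions, an x8 device that is dual-pathed strands half of each port it touches, while an x16 device split as two x8 links fills its ports completely, which matches the commenter's point about x16 slots.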

@Xpea, it's not 10x slower. A CPU going to CXL memory sees about the same latency as going to a different NUMA node. Going through a switch is like being one more hop away. Bandwidth to a CXL port is similar to a DDR5 channel. There are quite a few applications that, today, can benefit from the increase in bandwidth over what DDR5 alone can provide.
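The "similar to a DDR5 channel" comparison can be roughed out with peak-rate arithmetic. This sketch assumes CXL 2.0 runs over the PCIe 5.0 physical layer (32 GT/s per lane, 128b/130b encoding) and compares an x8 link with one 64-bit DDR5-4800 channel. It ignores CXL flit and protocol overhead, and note that the link figure is per direction while the DDR5 figure is shared between reads and writes, so treat the numbers as ballpark only.

```python
# Rough peak-bandwidth comparison: CXL 2.0 (PCIe 5.0 PHY) link vs one DDR5 channel.
# Assumptions: only 128b/130b line encoding is counted (no CXL flit/protocol
# overhead), and the DDR5 channel is 64 bits (8 bytes) wide.


def pcie5_link_gbps(lanes: int) -> float:
    """Per-direction bandwidth in GB/s of a PCIe 5.0 / CXL 2.0 link."""
    transfers_per_s = 32e9   # 32 GT/s per lane
    encoding = 128 / 130     # 128b/130b encoding efficiency
    return lanes * transfers_per_s * encoding / 8 / 1e9  # bits -> bytes -> GB


def ddr5_channel_gbps(mt_per_s: int) -> float:
    """Peak bandwidth in GB/s of one 64-bit DDR5 channel."""
    return mt_per_s * 1e6 * 8 / 1e9  # transfers/s * 8 bytes per transfer


print(f"x8 CXL 2.0 link:   {pcie5_link_gbps(8):.1f} GB/s per direction")  # 31.5
print(f"DDR5-4800 channel: {ddr5_channel_gbps(4800):.1f} GB/s")           # 38.4
```

On these assumptions, an x8 CXL port lands in the same range as a single DDR5 channel, which is the commenter's point: each CXL port adds roughly a channel's worth of bandwidth rather than a tenth of one.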

And who else would be buying this? System and application developers that are planning to use the Gen6 version which will provide double bandwidth and lower latency.

You realize that devices need to exist before anyone can write software for them, right? So it’s not a demo, it’s market enablement. Chicken or egg, one had to come first.

Does CXL memory provide sync primitives? Like, can it be used to share the same RAM among multiple hosts? This is something I have never been clear about with CXL memory. It is a way to centralize RAM and build gigantic RAM pools, but is it also capable of providing a shared address space?