Step 5: Scaling the Dell Pro Max with GB10

That brought my thinking around to applying this to other companies. Frankly, many companies do not have the capital or facilities to buy and house even one $200K+ 8-GPU server. On the other hand, two of these are only around $8,100.

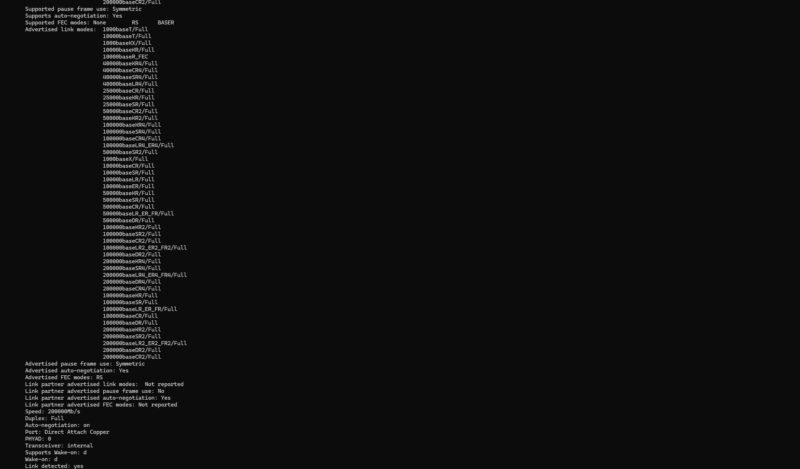

Recently we did an in-depth piece using these showing why The NVIDIA GB10 ConnectX-7 200GbE Networking is Really Different. The networking is actually similar to what we saw when we pushed bi-directional 800Gbps traffic through the NVIDIA ConnectX-8 NIC in our review. NVIDIA pairs its (fast) Arm processor with a Blackwell GPU, a lot of memory, and high-speed networking so that more than one of these can be used at a time. Doing that ConnectX-8 piece really helped give us the perspective that these are probably the best low-cost development tools for NVIDIA’s Arm, Blackwell, and RDMA networking stack. The RDMA networking is important because it provides a path to scale to larger models.

When I first saw the MikroTik CRS812-8DS-2DQ-2DDQ-RM and the upcoming MikroTik CRS804 DDQ in Latvia last summer, I told John Tully, MikroTik’s co-founder, that these were perfect for GB10 clusters. Indeed, if you are buying a few GB10 units, these switches have become the de facto standard for low-power and lower-cost networking.

In the video, we showed you how to connect these not just to those smaller switches, but also to a larger Dell Z9332F-ON 32-port 400GbE switch. We also tested these with the NVIDIA DGX Spark. These days we would strongly suggest getting a Dell Pro Max with GB10 over the DGX Spark because you can customize the storage and get Dell’s warranty and support services. That includes options for ProSupport Plus for drops and spills if you are planning to travel with these setups.

This is a somewhat strange way to think about it, but the thought I had while doing this was: what if we treated the Dell Pro Max with GB10 as an “employee”? Using that mental model, we could then have several of these as a team. That team can do individual tasks (hosting different models) or be used together via RDMA networking to accomplish a larger task: hosting larger models.

Final Words

There is certainly a lot of benefit to getting higher-end systems instead of multiple Dell Pro Max with GB10 units. At the same time, these allow you to scale to your business needs if you think of them as 2 hours per week of time savings at $40 per hour for a year. Many business owners, managers, and so forth may want a local AI solution, but coming up with a business case to host a super-fast 10kW server that costs $200,000 is difficult. On the other hand, everyone does reporting. Reporting is done on data that most organizations do not want to share with many cloud services, and there are likely countless organizations out there that have someone doing a 2-hour-per-week, $40-per-hour reporting role.
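To make that framing concrete, here is a rough back-of-the-envelope sketch of the arithmetic. The 2 hours per week, $40 per hour, and roughly $8,100 for two units come from this article. The 52-week year and the per-unit price (simply half of the two-unit figure) are assumptions for illustration, and the function names are made up for this example. It ignores power, networking, and setup time.

```python
# Back-of-the-envelope payback math for the "GB10 as an employee" framing.
# Figures from the article: 2 hours/week, $40/hour, ~$8,100 for two units.
# Assumptions (not from the article): a 52-week year, and a per-unit price
# estimated as half of the two-unit price.

HOURS_PER_WEEK = 2
HOURLY_RATE_USD = 40
WEEKS_PER_YEAR = 52          # assumption
TWO_UNIT_PRICE_USD = 8100    # approximate


def annual_labor_value(hours_per_week, hourly_rate, weeks=WEEKS_PER_YEAR):
    """Value of the time saved over one year, in dollars."""
    return hours_per_week * hourly_rate * weeks


def payback_weeks(price, hours_per_week, hourly_rate):
    """Weeks of saved time needed to equal the purchase price."""
    return price / (hours_per_week * hourly_rate)


annual = annual_labor_value(HOURS_PER_WEEK, HOURLY_RATE_USD)
per_unit = TWO_UNIT_PRICE_USD / 2  # assumption: simple split of the pair price

print(f"Annual value of time saved: ${annual:,}")
print(f"Estimated price per unit:   ${per_unit:,.0f}")
print(f"Payback for one unit:       {payback_weeks(per_unit, HOURS_PER_WEEK, HOURLY_RATE_USD):.1f} weeks")
print(f"Payback for two units:      {payback_weeks(TWO_UNIT_PRICE_USD, HOURS_PER_WEEK, HOURLY_RATE_USD):.1f} weeks")
```

Under those assumptions, one year of a 2-hour-per-week task at $40 per hour works out to $4,160, which is in the same range as the estimated price of a single unit.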

I know that many folks will read this and have ideas of what can be changed. Some may think “I could do this better with an NVIDIA RTX Pro 6000 Workstation” or “I would just use a cloud service to generate tokens faster.” All of these are true statements, but that is perhaps the point of this. It was a fairly easy workflow to set up, going from a flow chart on a notepad to a functioning AI-powered reporting tool a few hours later. Using this frees up my time without having to hire for an additional part-time role. Instead, we were able to get a well-known individual as STH’s new managing editor.

Our other key takeaway was a big one. What enabled this was not running a smaller model fast. It was running a larger model, with higher accuracy, even if it was much slower. We are already budgeting 2-4 hours per week for this, so I am not expecting, nor do we need, answers within five seconds. What I need is to be able to trust the result of the workflow. Ideally, I could have extremely fast results I could trust, but in a sub-$10,000 workflow these days that is hard to do using a different tool.