The Hardware Behind the Intel Developer Cloud

There was a ton of hardware installed, and frankly a lot more that was in the process of being installed. Our visit was in August 2023, and there were pallets of servers, storage nodes, switches, and more that had been unpacked and staged for installation on the floor. In the next photos, you will see where some of these systems will go.

In the data center, there are a few main components. First, there are the systems to test, including both already-released and pre-release hardware. Next, there is all of the infrastructure around them, such as storage and networking. Finally, there is an entire management plane that handles server provisioning as well as things like access to the environment.

In every respect, it was clear that this installation was growing rapidly. Here is a networking project that was underway on the day we visited.

Until Intel sold its server systems business to MiTAC, it had a division that made servers, including reference platforms. There was a huge number of these racks in the cage. These systems can operate as standard dual Xeon servers, but they have another purpose: they are designed to house up to four double-width accelerators.

These systems had various cards in them. That included Ponte Vecchio and, as we can see here, “ATS-M.” If you recall, Intel brands Arctic Sound-M as the Intel Data Center GPU Flex Series, so these systems also house Intel’s visualization and transcoding GPUs.

If these systems look familiar, we used one as part of our Intel Xeon Max review, as this is also the development platform for Xeon Max.


Intel had not just Xeon Max, but also standard Xeon servers with different SKUs.

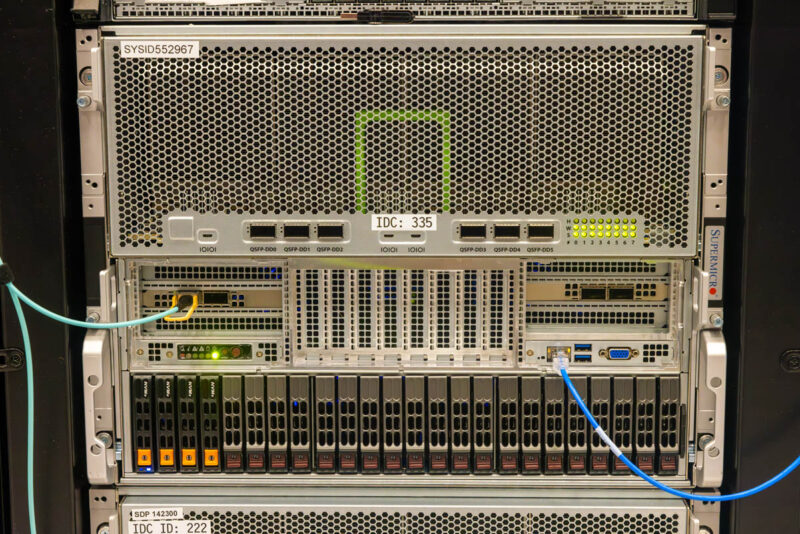

There were also racks of Supermicro SYS-521GE-TNRT. This is Supermicro’s class-leading PCIe GPU server. It is slightly taller at 5U, but it uses that extra space for additional cooling. We recently looked at this system configured with two other GPUs, but here we can see a unique Intel configuration.

Here we can see eight Intel Data Center GPU Max 1100 series PCIe cards installed as two sets of four, with a 4-way bridge on top of each set.

The system itself uses PCIe Gen5 switches in a dual-root configuration, so in addition to the high-speed bridge on top of each set of cards, there is a direct PCIe switch connection between the two sets.

That gives Intel the flexibility to provision one to eight GPUs to a user, and it is straightforward to assign a user one CPU and four interconnected GPUs. A developer might be extra excited about this type of configuration, as NVIDIA’s current PCIe enterprise training and inferencing GPU, the L40S, does not have NVLink. The NVIDIA H100 NVL is NVIDIA’s dual-card PCIe solution, but it connects only two GPUs. Intel has four connected in a standard PCIe server. Without the Intel Developer Cloud, this type of configuration would be harder to get access to, which is why I was very excited to find this one.

There is more, however. As an example, here we can see both Supermicro and Wiwynn OAM AI servers. OAM (OCP Accelerator Module) is the open accelerator form factor that Intel uses for both its Data Center GPU Max 1500 series and its Habana-lineage AI accelerators, Gaudi and Gaudi 2.

The hottest systems today are the Gaudi and Gaudi 2 systems. These have 100GbE links coming directly off the accelerators and use Ethernet instead of more proprietary communications standards like NVLink or off-accelerator InfiniBand.

Intel let us pull out a system that had arrived but was not yet installed.

The Gaudi 2 line is in such demand right now that the team let it slip that they need to bring each system up as soon as it arrives, or they get calls from executives asking when it will be online.

The OAM platforms were split between Supermicro and Wiwynn. Supermicro has the top 19″ AI servers in the industry at the moment, and Wiwynn probably has the top OCP rack acceleration platforms. Along with QCT, another top-tier AI hardware vendor, these have generally been the big three in AI hardware for some time. It is great to see Intel use class-leading hardware here.

Overall, there were just racks and racks of systems with Intel Xeon and Xeon Max CPUs, Data Center GPU Flex Series and Max Series GPUs, and Gaudi/Gaudi 2 accelerators. At this location, as of August, there were 20 Gaudi 2 systems installed. We were told many more were incoming.

Next, let us show some of the other parts of the infrastructure.
