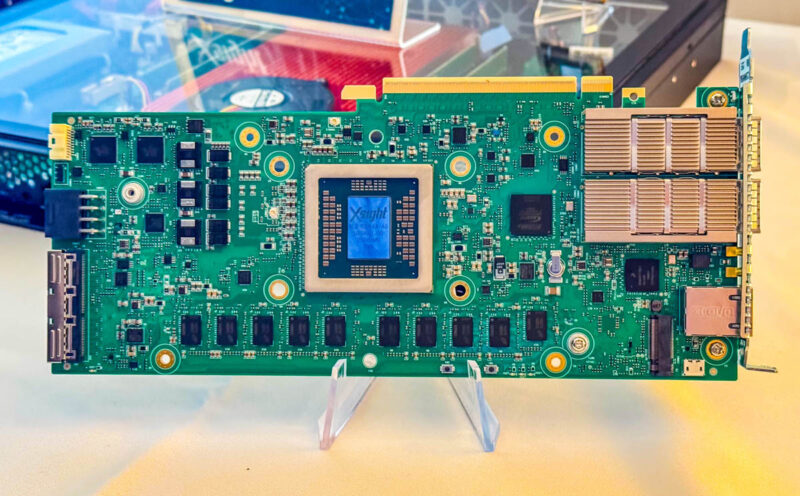

Today we have a fun one. A few months ago, I was at Xsight Labs in California and saw the company’s E1 DPU PCIe card. A few weeks after that, a 1U chassis arrived. We wanted to start our series with a quick look at this really neat DPU, since it is very different from the DPUs we covered a few years ago. If you have not heard of Xsight Labs, we first covered the company in 2020, and its X2 switch chip just scored a major milestone win powering SpaceX Starlink V3 networking.

If you want to see a few more angles, we have a short.

The Xsight Labs E1 DPU Overview

What Xsight Labs is building is not a NIC, or a NIC with a few compute cores attached. Instead, it is more of a miniature server.

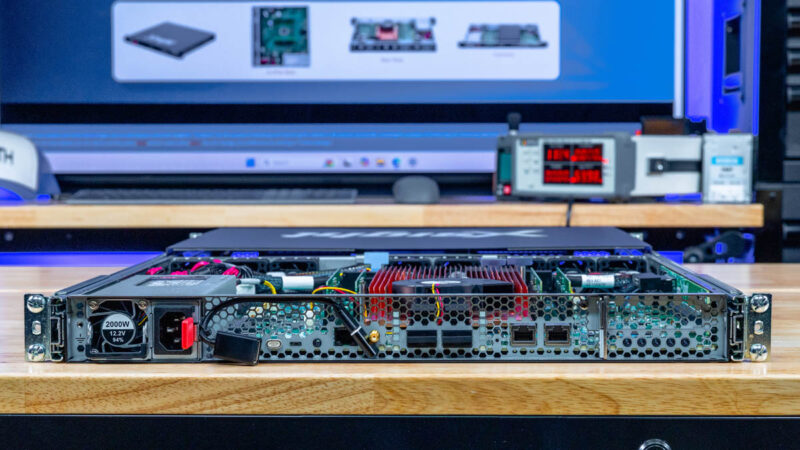

The Xsight Labs E1 1U platform is much larger than the PCIe card, but that is really the point. The platform we are using is not designed to be just a network offload in a server. Instead, it is built to be flexible, so you can build more around the architecture.

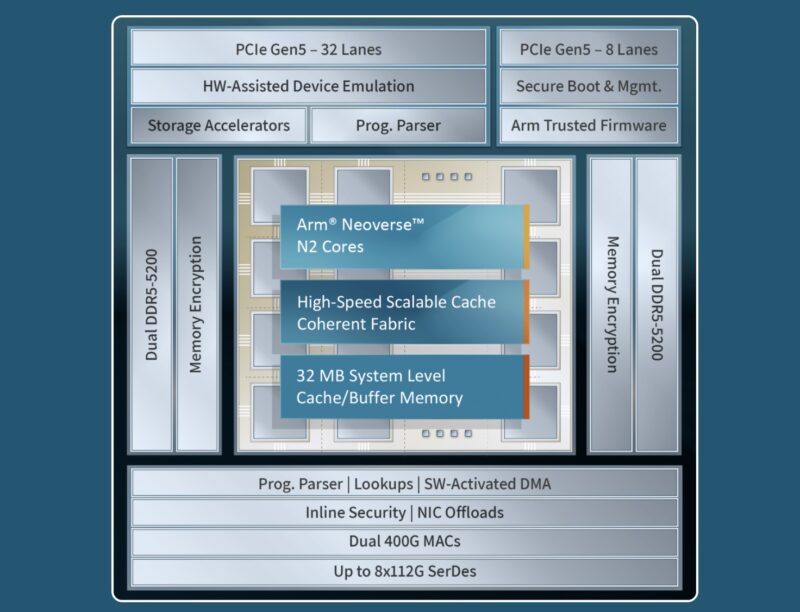

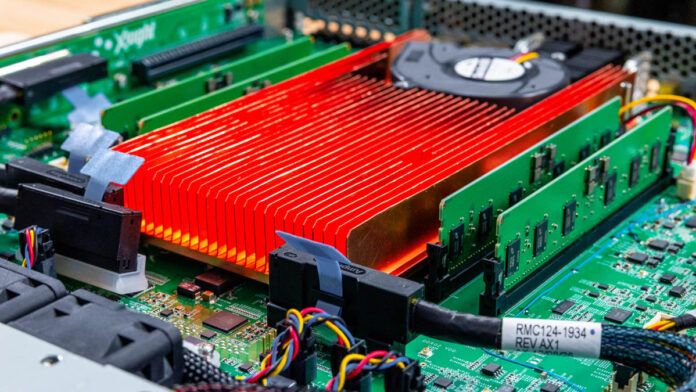

Starting with the E1 DPU, this is a 64-core Arm Neoverse N2 part built in TSMC 5nm, and you can stick four DDR5-5200 ECC RDIMMs alongside it in the 1RU server.
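To put those four RDIMMs in perspective, here is a quick theoretical peak-bandwidth calculation. It is a sketch that assumes each DIMM sits on its own 64-bit DDR5 channel, which the article does not state; the function name is ours, for illustration only.

```python
# Sketch: theoretical peak DRAM bandwidth for the E1 platform's memory.
# Assumption (not from the article): each of the four RDIMMs sits on its own
# 64-bit DDR5 channel. ECC bits are excluded because they carry no user data.

DATA_BYTES_PER_TRANSFER = 8  # 64 data bits per DDR5 DIMM (two 32-bit subchannels)


def peak_dram_gbytes_per_s(mt_per_s: int, channels: int) -> float:
    """Peak bandwidth in GB/s: transfers/s x bytes per transfer x channels."""
    return mt_per_s * 1e6 * DATA_BYTES_PER_TRANSFER * channels / 1e9


per_dimm = peak_dram_gbytes_per_s(5200, 1)
total = peak_dram_gbytes_per_s(5200, 4)
print(f"per DIMM: {per_dimm:.1f} GB/s, four DIMMs: {total:.1f} GB/s "
      f"({total * 8:.0f} Gbps)")
```

Under that assumption, the platform has roughly 166 GB/s (about 1.3Tbps) of theoretical peak memory bandwidth to sit behind 800Gbps of networking. Sustained bandwidth will be lower in practice.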

Just to give you some sense, here is the block diagram for the part:

Beyond the DDR5 memory we showed, there is a lot more going on. Our unit is still more of a development platform, which you can see from the USB headers. Still, there is something neat.

Onboard, there are two 400G MACs for a total of 800Gbps of networking. What sets this platform apart is that Xsight Labs has validated it with the SONiC-DASH Hero 800G test. That may not sound exciting, but SONiC is the open-source network operating system (NOS) that many organizations run today. The Hero 800G test validates that the device can keep 800Gbps of traffic moving with 120 million connections and 12 million new connections per second, with zero dropped packets. I believe they used Keysight CyPerf for this (I need to double-check), which is the same tool you see on STH in everything from the NVIDIA ConnectX-8 C8240 800G Dual 400G NIC Review to lower-end 10G gateway devices.
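To get a feel for how demanding those Hero 800G targets are for a design that networks on general-purpose cores, here is a back-of-envelope per-core budget. It assumes the load spreads evenly across all 64 Neoverse N2 cores, which the article does not claim; real deployments may reserve some cores for other work.

```python
# Back-of-envelope per-core budget for the SONiC-DASH Hero 800G targets.
# Assumption (not from the article): load is spread evenly across all
# 64 Neoverse N2 cores; real deployments may reserve some cores.

LINE_RATE_GBPS = 800
ACTIVE_CONNECTIONS = 120_000_000
NEW_CONNECTIONS_PER_S = 12_000_000
CORES = 64


def per_core_budget(cores: int) -> dict[str, float]:
    """Split the test's aggregate targets evenly across `cores`."""
    cps_per_core = NEW_CONNECTIONS_PER_S / cores
    return {
        "gbps_per_core": LINE_RATE_GBPS / cores,
        "connections_per_core": ACTIVE_CONNECTIONS / cores,
        "new_connections_per_s_per_core": cps_per_core,
        # Upper bound: time per setup if a core did nothing but set up connections.
        "ns_per_new_connection": 1e9 / cps_per_core,
    }


budget = per_core_budget(CORES)
for name, value in budget.items():
    print(f"{name}: {value:,.1f}")
```

Even under that generous even-split assumption, each core gets only about 5.3 microseconds per new connection while also tracking nearly 1.9 million active connections, and that is before any per-packet forwarding work.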

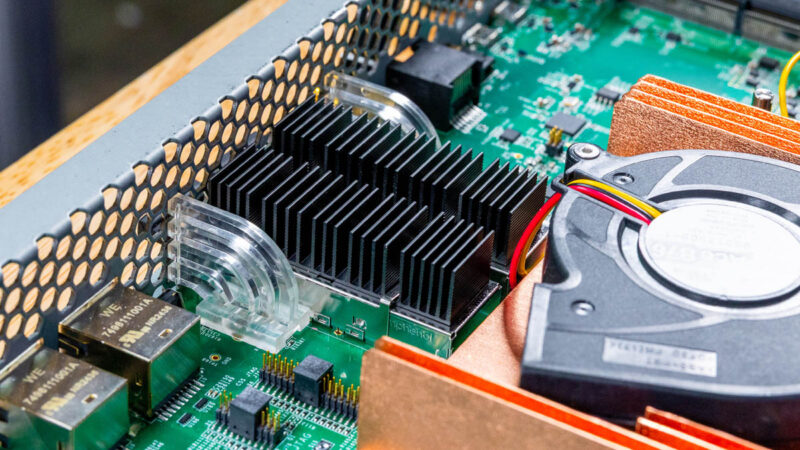

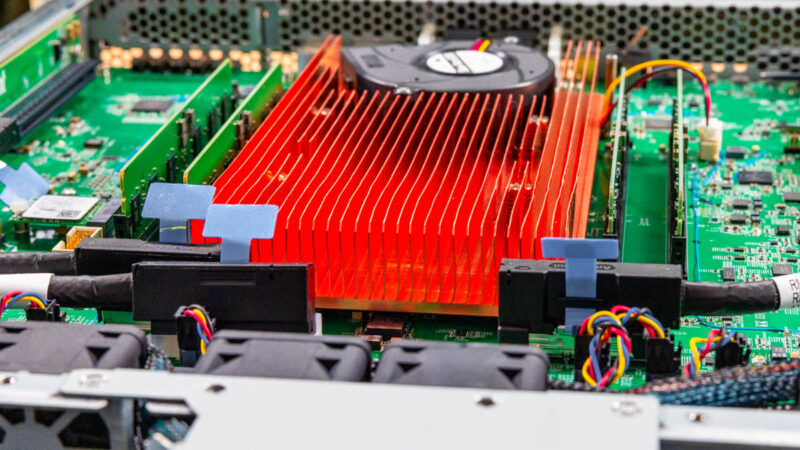

Just as a fun one, here are the heatsinks for the two QSFP112 400Gbps ports:

The company says that it passed the SONiC-DASH 800G Hero test with 19% headroom and room for further optimization. That is important for a few reasons. First, the E1 does not pair its compute cores with a dedicated NIC IP block. It actually uses the Arm Neoverse N2 cores to do the networking, with software such as DPDK. That is very different from many of the other DPUs on the market that we discussed a few months ago in the Substack.

Second, the extra headroom means that CPU cycles are free to do other tasks. That is where the next set of features comes in.
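How many cores' worth of cycles might 19% headroom free up? The article does not define how headroom was measured, so this sketch shows two plausible readings side by side; both are assumptions.

```python
# How many "free" cores might 19% headroom represent?
# The article does not define headroom, so both readings below are assumptions.

CORES = 64
HEADROOM = 0.19

# Reading A: 19% of total core capacity sits idle at the 800G target.
idle_cores_a = CORES * HEADROOM

# Reading B: capacity is 1.19x what the test needs, so utilization is 1/1.19.
idle_cores_b = CORES * (1 - 1 / (1 + HEADROOM))

print(f"Reading A: ~{idle_cores_a:.1f} idle cores")
print(f"Reading B: ~{idle_cores_b:.1f} idle cores")
```

Either way, if the headroom is measured in CPU time, that is on the order of ten cores left over for other tasks.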

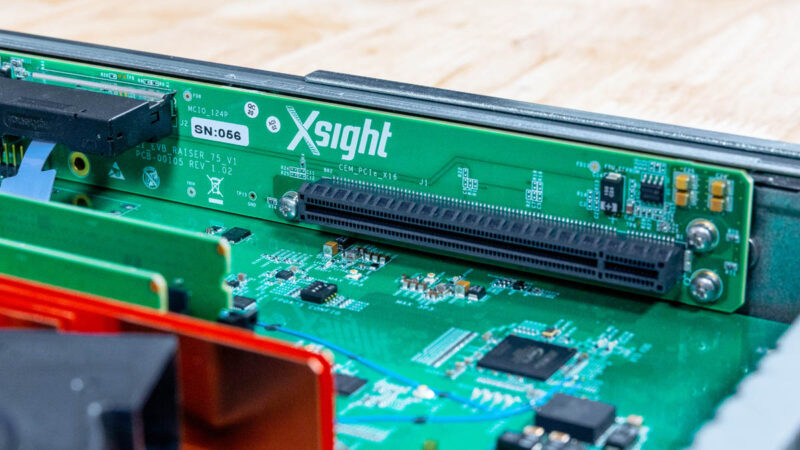

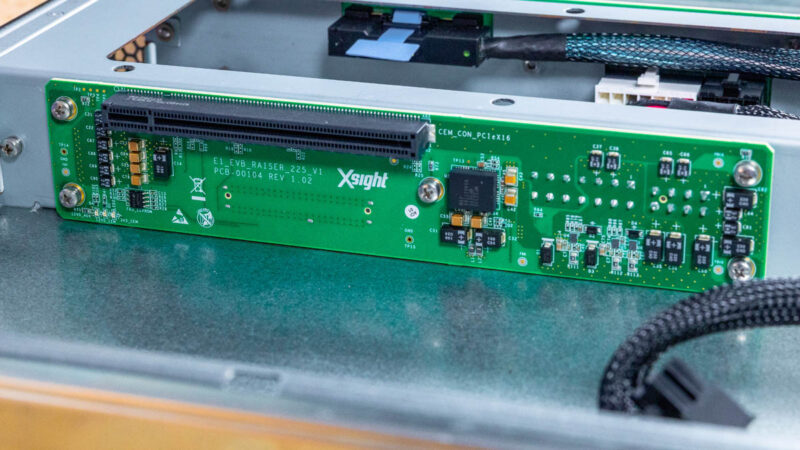

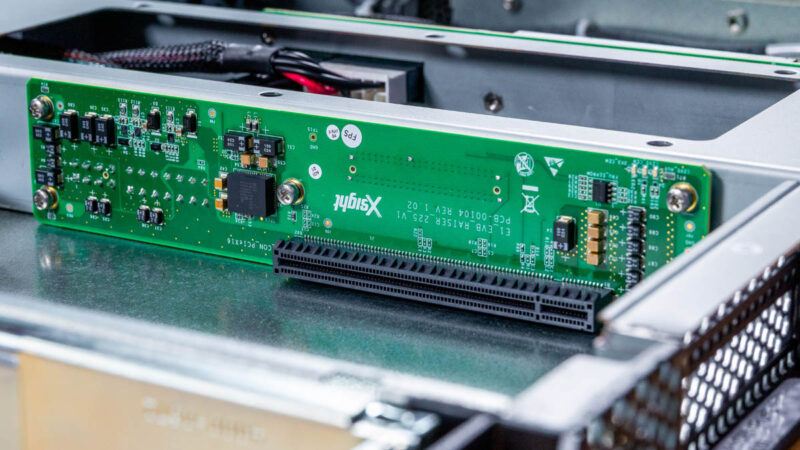

If you look around the chassis, you will see a number of PCIe slots that expose the DPU's PCIe lanes, which you can see in the block diagram.

Those include two PCIe Gen5 x16 slots at the front.

Unlike the NVIDIA BlueField-3 DPU, which comes in explicit versions for use as a NIC and for acting as the PCIe root in a “Self-Hosted” DPU configuration, the Xsight Labs E1 can do both. Its slots can also run at x16 or bifurcate to x4/x4/x4/x4.

With the ability to push traffic through two 400Gbps ports (800Gbps) and 32 PCIe Gen5 lanes (roughly 800Gbps), the idea is that those free CPU cycles can do other work. For example, we showed this in Xsight Labs E1 800G 64-Core Arm DPU Shown for Hammerspace AI Storage.
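A quick check of the PCIe side, using the Gen5 signaling rate of 32 GT/s per lane and 128b/130b line encoding. The function name is ours, for illustration; it ignores packet-level protocol overhead, which is why it lands above the roughly 800Gbps figure.

```python
# PCIe Gen5 signals at 32 GT/s per lane and uses 128b/130b encoding.
GEN5_GT_PER_S = 32
ENCODING_EFFICIENCY = 128 / 130


def pcie_gen5_gbps(lanes: int) -> float:
    """Per-direction bandwidth after line encoding, before TLP/DLLP overhead."""
    return GEN5_GT_PER_S * ENCODING_EFFICIENCY * lanes


for label, lanes in [("x4 (bifurcated)", 4), ("x16 slot", 16), ("all 32 lanes", 32)]:
    print(f"{label}: {pcie_gen5_gbps(lanes):.1f} Gbps")

# Share of the 32-lane figure that "roughly 800Gbps" represents.
print(f"800 / {pcie_gen5_gbps(32):.1f} = {800 / pcie_gen5_gbps(32):.0%}")
```

Encoding alone leaves about 1Tbps per direction across 32 lanes. Transaction-layer headers and flow control eat into that, so roughly 800Gbps of usable bandwidth seems a reasonable round number. The same math gives about 126Gbps per link when a slot is bifurcated x4/x4/x4/x4.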

If you look at the chassis above, it houses five sleds in a 1OU (that is O for Open, not 0) server. As a result, we get 320 Arm Neoverse N2 cores and 40 SSDs. Each sled has eight SSDs, providing roughly 800Gbps of storage bandwidth alongside 800Gbps of networking, so it is almost like putting forty PCIe Gen5 SSDs directly on the network, with the storage stack running on the DPU between the SSDs and the network ports. While that example uses SSDs, one could easily see PCIe GPUs or other accelerators attached to the E1 through those card slots.
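The chassis totals follow directly from the per-sled numbers. This sketch also assumes, without confirmation from the article, that each sled's roughly 800Gbps of SSD bandwidth is shared evenly by its eight drives (32 lanes over eight SSDs suggests a Gen5 x4 link each).

```python
# Totals for the five-sled 1OU chassis described above.
# Assumption (not from the article): each sled's ~800Gbps of SSD bandwidth
# is shared evenly by its eight SSDs, i.e. each SSD gets a Gen5 x4 link.

SLEDS = 5
CORES_PER_SLED = 64
SSDS_PER_SLED = 8
NET_GBPS_PER_SLED = 800
STORAGE_GBPS_PER_SLED = 800


def chassis_totals(sleds: int) -> dict[str, float]:
    """Aggregate the per-sled figures across the whole chassis."""
    return {
        "cores": sleds * CORES_PER_SLED,
        "ssds": sleds * SSDS_PER_SLED,
        "network_gbps": sleds * NET_GBPS_PER_SLED,
        "gbps_per_ssd": STORAGE_GBPS_PER_SLED / SSDS_PER_SLED,
        "gbytes_per_s_per_ssd": STORAGE_GBPS_PER_SLED / SSDS_PER_SLED / 8,
    }


totals = chassis_totals(SLEDS)
print(totals)
```

So the chassis pairs 320 cores with 4Tbps of aggregate networking, and under the even-split assumption each SSD needs about 12.5 GB/s, within the roughly 15.75 GB/s an x4 Gen5 link carries after encoding.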

Final Words

Another neat point about this DPU, aside from the architecture, is its timing. NVIDIA BlueField-4 will also use 64 Arm cores and 800G networking, but we had the E1 DPU in our studio before BlueField-4's formal GA launch, which is expected in 2026.

As you may have surmised, this piece kicks off a series on the DPU. In the next piece, we are going to do something simple, yet profound. If you saw this week’s Lenovo ThinkCentre neo 50q Tiny QC Review, that consumer Arm system could not install Ubuntu Linux from an ISO, which was very different from our experience with the ASRock Rack AMPONED8-2T/BCM with the Ampere AmpereOne A192-32X Arm CPU. The next piece in this series is simply getting the OS running on the E1 from an ISO. That is an important server milestone, yet one that other DPUs do not reach. For example, our Intel E2100 DPU does not support this, which makes it very difficult to get running outside of narrow use cases. The E1 is an exciting platform, so we have a lot to cover.
