This weekend, Andrej Karpathy, AI guru at Tesla, showed off Tesla’s current supercomputer for developing autonomous driving. For a bit of fun, we wanted to take a quick guess at what systems Tesla is using.

Tesla Supercomputer with NVIDIA A100 80GB

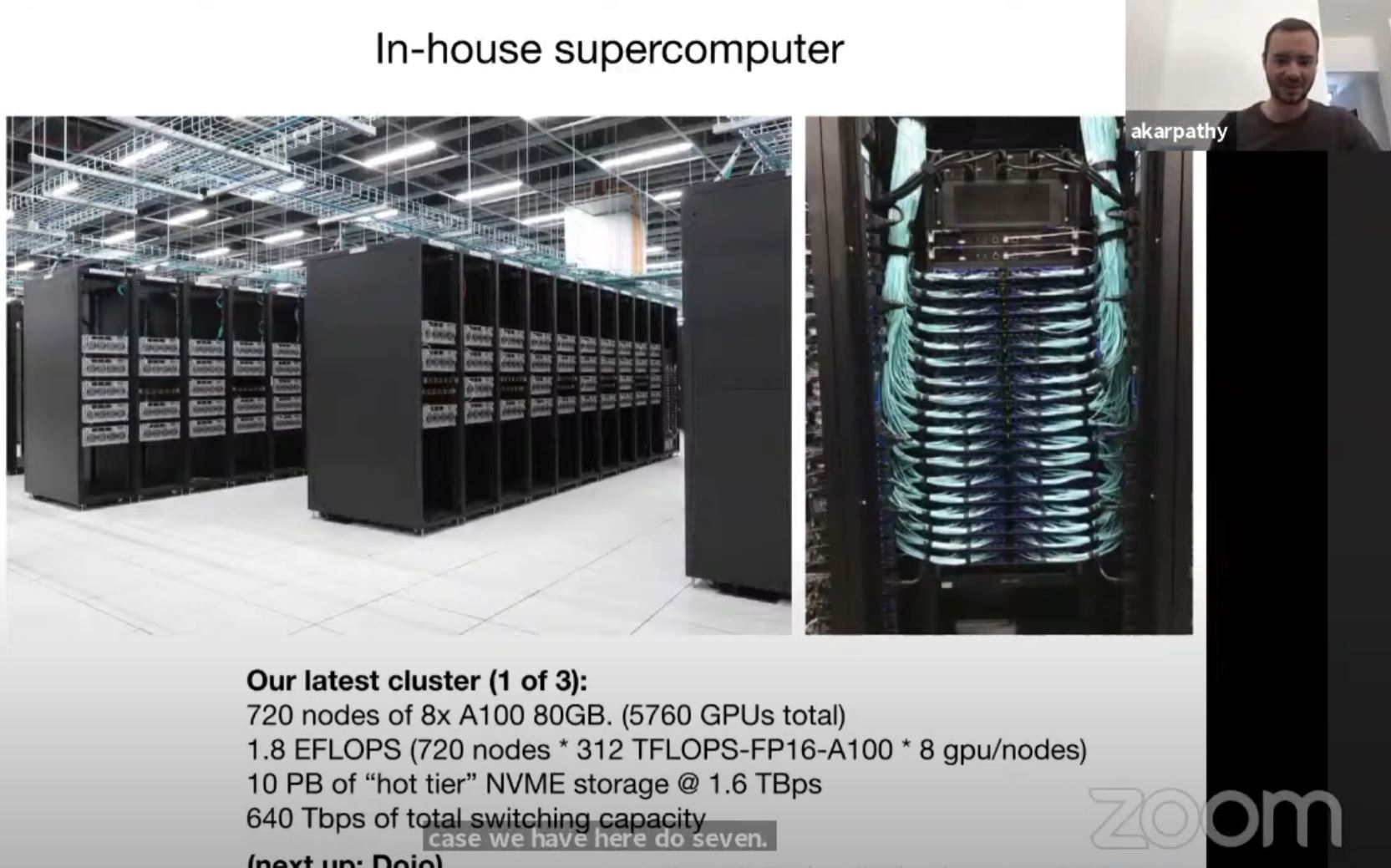

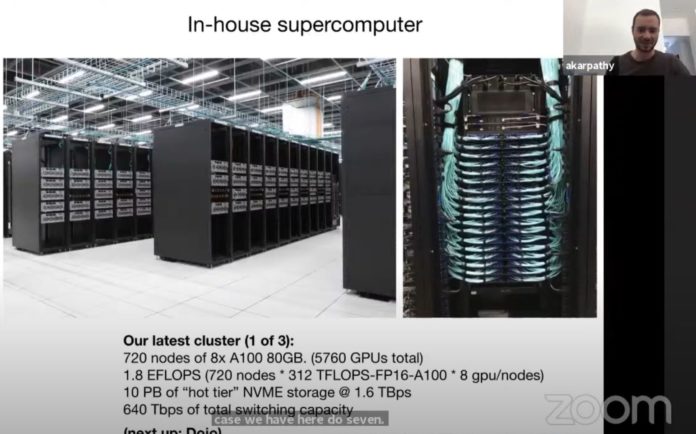

During the latest talk, Andrej said that the supercomputer Tesla operates is massive. Scale-wise, he says it would rank as a top 5 supercomputer despite not being on the Top500. This shows that there are a lot of large-scale systems not listed on the Top500 these days.

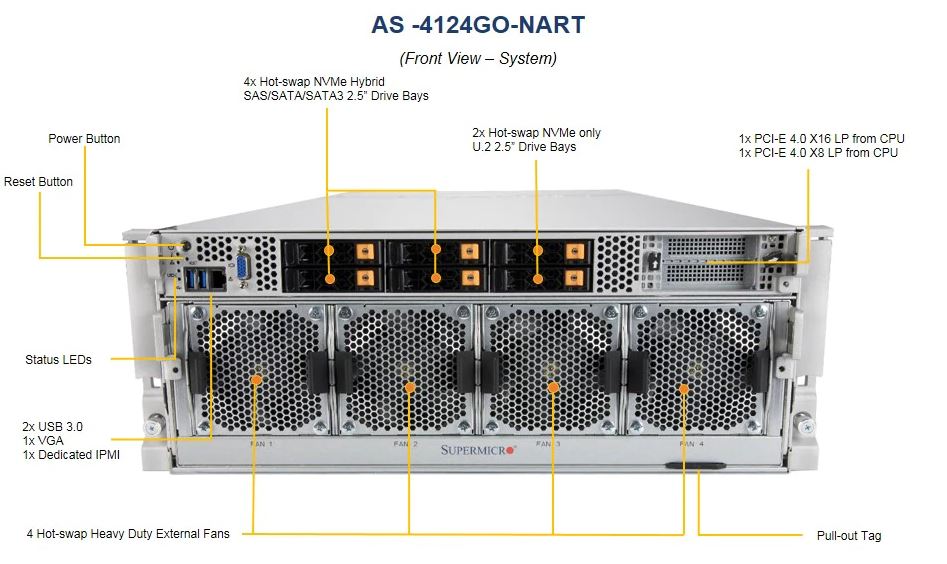

There was no mention of which vendor Tesla is using. I asked our Editor-in-Chief Patrick for his best guess as to which systems were used. We did not confirm this, but based on the photo it appears that Tesla is using 720x Supermicro nodes. The nodes look like either SYS-420GP-TNAR(+) (Intel Xeon Ice Lake) or AS-4124GO-NART (AMD EPYC) systems, although it is very hard to tell from the view shown. The Intel and AMD systems share the same chassis, which is all we can see (in a blurry version). Here is what the front of one of those systems looks like:

Tesla’s current supercomputer consists of:

- 720 nodes of 8x A100 80GB (5760 GPUs total)

- 1.8 ExaFLOPS (720 nodes * 8 GPUs/node * 312 TFLOPS FP16 per A100)

- 10 petabytes of “hot tier” NVMe storage @ 1.6TBps

- 640Tbps of total switching capacity
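As a quick sanity check on the list above, the GPU count and the 1.8 ExaFLOPS figure can be reproduced with simple arithmetic. This is only a sketch of the peak number: it uses the 312 TFLOPS FP16 per-A100 figure quoted in the list and ignores real-world scaling efficiency. The function name `cluster_peak` is ours, purely for illustration.

```python
# Figures taken from the list above.
NODES = 720
GPUS_PER_NODE = 8
TFLOPS_FP16_PER_GPU = 312  # per-A100 peak FP16 figure quoted in the list


def cluster_peak(nodes, gpus_per_node, tflops_per_gpu):
    """Return (total GPUs, peak ExaFLOPS) for a homogeneous GPU cluster."""
    total_gpus = nodes * gpus_per_node
    total_tflops = total_gpus * tflops_per_gpu
    # 1 ExaFLOPS = 1,000,000 TFLOPS
    return total_gpus, total_tflops / 1_000_000


total_gpus, exaflops = cluster_peak(NODES, GPUS_PER_NODE, TFLOPS_FP16_PER_GPU)
print(f"{total_gpus} GPUs, {exaflops:.2f} ExaFLOPS peak FP16")
```

This is a theoretical peak, so it is not directly comparable to the measured Linpack results used for Top500 rankings.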

Training for autonomous driving requires pulling in video data, so the hot tier of NVMe storage needs to deliver 1.6TB/s of performance. One can also see some of the 640Tbps of switching capacity (our guess is NVIDIA/Mellanox). Just note that some who have seen this presentation have referred to this figure as “terabytes” rather than “terabits” per second.
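To show why the bits-versus-bytes distinction matters, here is a rough back-of-the-envelope conversion. It assumes decimal units (1 TB = 10^12 bytes) and, for the per-GPU number, that storage throughput is spread evenly across all 5760 GPUs, which the talk does not state. The variable and function names are ours.

```python
BITS_PER_BYTE = 8

SWITCHING_TBITS_PER_S = 640   # switching capacity read as terabits per second
STORAGE_TBYTES_PER_S = 1.6    # hot-tier NVMe throughput in terabytes per second
TOTAL_GPUS = 720 * 8          # node count times GPUs per node


def terabits_to_terabytes(terabits_per_s):
    """Convert a rate in terabits/s to terabytes/s."""
    return terabits_per_s / BITS_PER_BYTE


switching_tbytes_per_s = terabits_to_terabytes(SWITCHING_TBITS_PER_S)

# Assumption: storage bandwidth divided evenly across every GPU.
# 1 TB/s = 1,000,000 MB/s in decimal units.
storage_mb_per_s_per_gpu = STORAGE_TBYTES_PER_S * 1_000_000 / TOTAL_GPUS

print(f"Switching: {switching_tbytes_per_s:.0f} TB/s if the figure is in terabits")
print(f"Storage: about {storage_mb_per_s_per_gpu:.0f} MB/s per GPU")
```

Read as terabits, the switching figure is an eighth of what it would be if read as terabytes, which is why the unit is worth pinning down.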

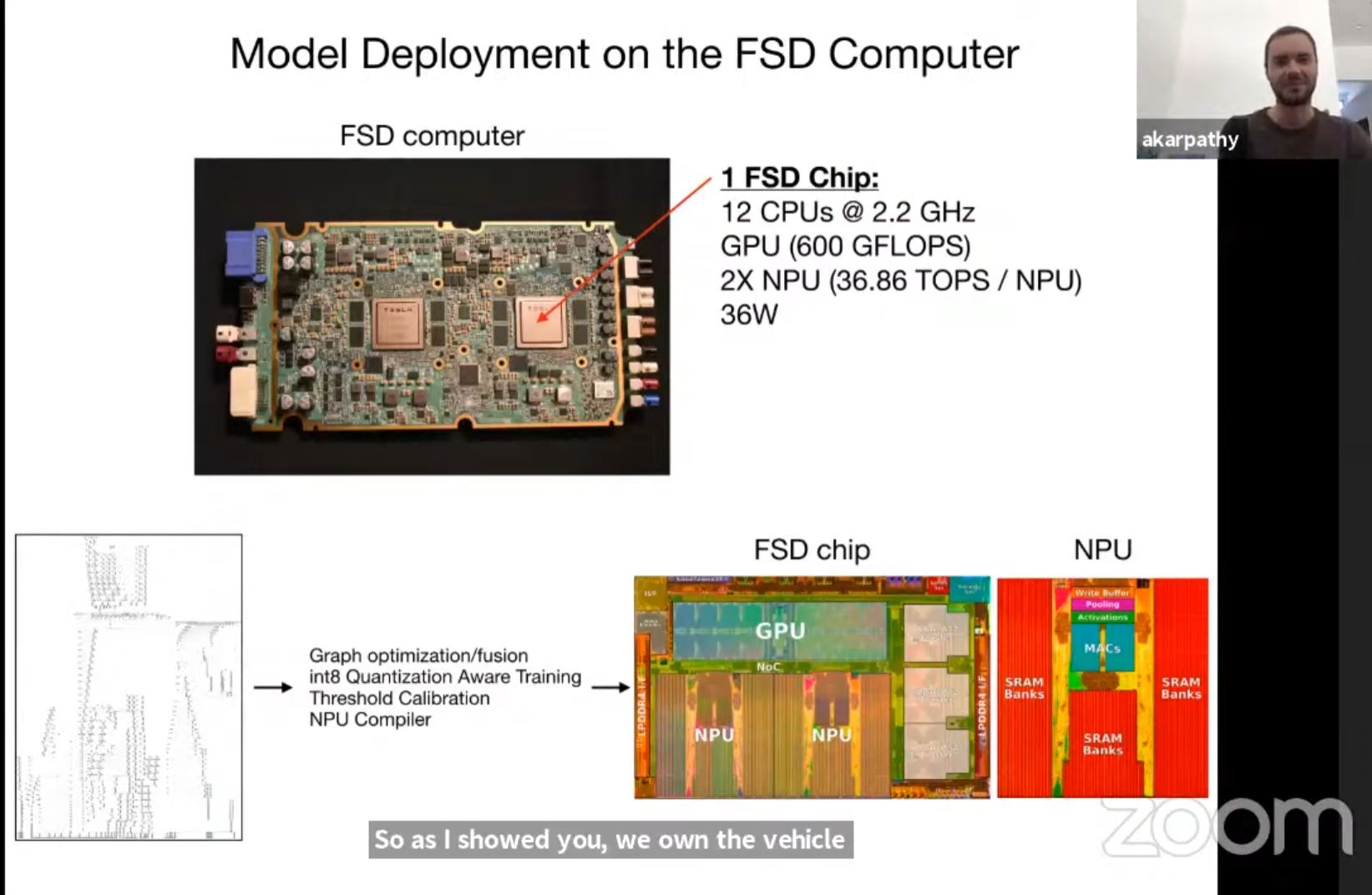

Tesla may use NVIDIA GPUs to train, but it ultimately deploys on its own silicon, not on the NVIDIA Drive platform.

Final Words

Autonomous driving is perhaps the no-brainer area where it makes sense to operate a cluster at this scale. Still, this gives some sense of what it takes to get ahead in the field: currently something around the level of a top 5 supercomputer, and going up from here. Hopefully, we get more soon on Project Dojo, which is the next generation.

What is very interesting here is that this seems to have evolved a lot over time. Years ago search engine companies like Baidu and autonomous driving startups like Zoox (now Amazon) were using NVIDIA GTX 1080 Ti’s, and Andrej commented on the DeepLearning11: 10x NVIDIA GTX 1080 Ti Single Root Deep Learning Server noting the payback versus renting time on AWS via Twitter:

"DeepLearning11: 10x NVIDIA GTX 1080 Ti Single Root Deep Learning Server." That's a lot of firepower for $16K https://t.co/f5v7HdevXJ

— Andrej Karpathy (@karpathy) October 28, 2017

It seems that if Tesla was using 1080 Ti’s years ago, it is now fully embracing data center GPUs for its compute, even though the GeForce line remains very popular with some of Tesla’s China-based competitors.

That is what you get when you dump BTC – decent AI/ML rig.

@baukukas:

Yeah, no shit. I would rather see AI engineers use GPUs to train and inference instead of stupid ass miners who only know how to “insert PCIe cards” into bitcoin motherboards with lots of PCIe x1 slots that can’t run 3060s.

ASICs for BitCoin. GPUs for Ethereum.

Not sure how that all fits in the trunk….

Nice try tesla but 5760 air cooled A100 are not going to beat 6200 water cooled A100 in Perlmutter.

Given that Selene with 4480 A100 is 6th it would take their spot