Every June 8 since 2009 I get to write one of my favorite posts of the year, the status update. Last year’s post was particularly exciting. We announced DemoEval, our platform that allows our readers to try out the newest technology gear using their own workloads. This year involved a lot of hard work on scaling out our capabilities. STH has one of the best, if not the best, data center hardware evaluation facilities of any editorial site. While STH may look similar, we have had several notable areas of growth. I wanted to take this opportunity to give the STH community a glimpse into how STH is changing.

HTTP/2 and a Facelift De-emphasizing Display Ads

At STH we adopted Google’s SPDY for our forums when we switched over to HTTPS years ago. Since SPDY was succeeded by HTTP/2, we did behind-the-scenes work to upgrade the forums to the new standard. The forums are where we allow users to log into STH, so they received HTTPS first.

This year, we gave STH its first major facelift in many years. We needed to add a few new capabilities for content that is coming. As part of this facelift, STH mobile usability went up significantly.

If you read our STH facelift piece titled STH Q2 2017 Refresh is Live: Let’s Talk Ad Space you will notice we spent a lot of time during our re-design to limit the number of display ads. We actually keep an eye on how much space we are using for ads versus other tech sites.

In 2016 we launched a project to reduce the number of ads shown. After almost a year of the reduction, we are now hovering at an 11% decrease in display ads per page versus Q1 2016. For those not in the “free” editorial publishing industry, that is an awesome result. The general industry trend is to use more ad space, not less, and to use more aggressive ad types.

Beyond bucking the trend by decreasing display ads, we are also not using sponsored content. Because we do not accept sponsored content, we have one of the most editorially sound processes in the industry, if not the most. We also maintain an editorial conflict list so detailed that it even includes a pizza technology company due to an indirect investment.

Our Lab Capabilities

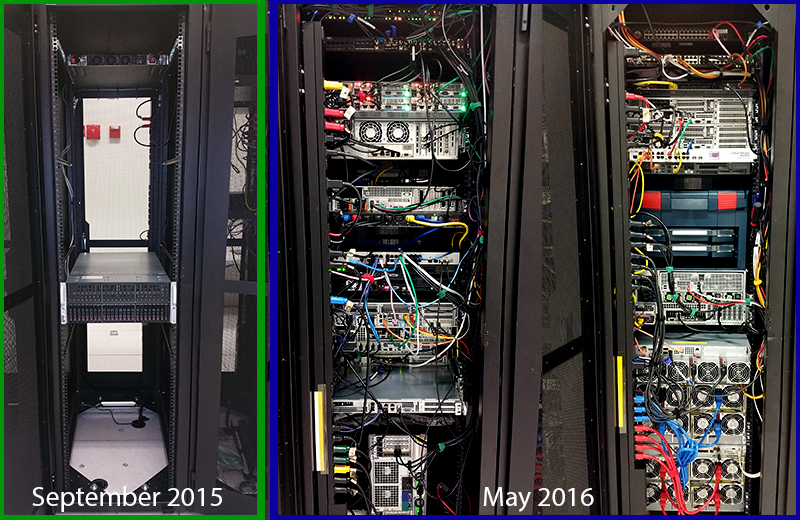

I still use this illustration from last year, but it is completely outdated. We now have multiple racks in multiple data centers, but it is fun to look back at a snapshot of last year’s setup.

Unfortunately, a simple shot like that is impossible today, as the racks are no longer side by side.

Our static load (e.g. weekends without tests running) is now hovering around 20kW with peaks significantly higher. We are likely going to be adding another 10kW over the next few weeks as we prepare for all of the new server releases. That increment was more power than we had in the entire main lab infrastructure a year ago.

One of the drivers for this is that systems are using more power. NVMe drives can use 25W each, up from less than half that for typical SAS/SATA SSDs. Next-generation chips and platforms are using significantly more power. Finally, we are doing more GPU-related work for the deep learning/AI boom cycle. An example of this is the DeepLearning10 8x GPU system that has now peaked at around 3.1kW, making it our largest single node (not chassis) system in the lab to date. In terms of scale, that box alone at 2.5kW costs us around $600/month to power.
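As a rough sanity check on those figures, the sketch below backs out what a 2.5kW box costing around $600 per month implies per kW and per kWh. The 730 hours per month (8,760 hours divided by 12) and the function names are assumptions for illustration. The post does not state a billing rate, and colocation power is often priced per circuit or per kW rather than per kWh.

```python
# Assumption: an "average" month of 8760 / 12 = 730 hours.
HOURS_PER_MONTH = 8760 / 12


def monthly_energy_kwh(load_kw, hours=HOURS_PER_MONTH):
    """Energy used in a month by a constant load, in kWh."""
    return load_kw * hours


def cost_per_kw_month(monthly_cost_usd, load_kw):
    """Implied all-in monthly cost for each kW of constant load."""
    return monthly_cost_usd / load_kw


def implied_rate_per_kwh(monthly_cost_usd, load_kw):
    """Implied all-in cost per kWh for a constant load."""
    return monthly_cost_usd / monthly_energy_kwh(load_kw)


if __name__ == "__main__":
    # Figures taken from the text: 2.5kW and roughly $600 per month.
    load_kw, cost_usd = 2.5, 600
    print(f"Energy per month: {monthly_energy_kwh(load_kw):.0f} kWh")
    print(f"Implied cost per kW-month: ${cost_per_kw_month(cost_usd, load_kw):.0f}")
    print(f"Implied cost per kWh: ${implied_rate_per_kwh(cost_usd, load_kw):.3f}")
```

The implied rate is an all-in number, so it would likely fold in cooling, space, and redundancy on top of the raw electricity price.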

We moved the lab from 10GbE to 40GbE as our standard. We will be adding 100Gbps Intel Omni-Path to the lab over the next few days.

Enterprise gear has been reviewed in homes and small offices for many years. At STH, we are changing the game by doing industry-leading in-datacenter reviews. If a node has an issue, our remote monitoring needs to pick it up and we need to use remote management tools to fix it. That allows us to live a day in the life of an operations team when we do our reviews.

Further, the massive lab is being used for more than just STH reviews. Last year we announced DemoEval. Over the next few months we will announce our next service, which will allow additional groups to utilize our facilities.

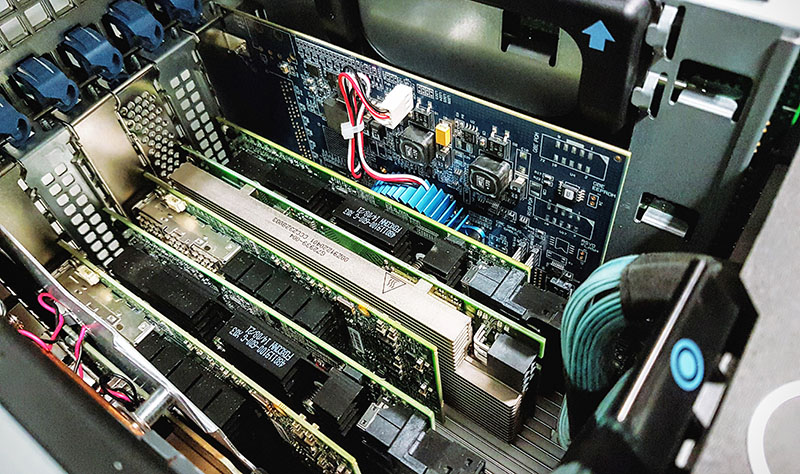

Staying Ahead of the Curve

We are using these capabilities in an attempt to stay ahead of the curve with our content. We are the only major site to have tested platforms such as the Intel Xeon Phi x200 codenamed “Knights Landing”, Intel Xeon E3-1200 V6 (every meaningful SKU), Intel Xeon E3-1500 series, real-world AI/deep learning training machines like the 8x NVIDIA GTX 1080 Ti machine with TensorFlow, Intel QuickAssist Technology, Cavium ThunderX, Intel Optane datacenter systems (plural) and M.2 Optane in servers, Intel Atom C3000 series “Denverton” benchmarks, Ryzen 5 and 7 Linux benchmarks (every SKU), QCT “white box” switches, and the list goes on. We have also developed industry-leading test methodologies such as the 2U 4-node “sandwich” that lets us get the most realistic look at server power consumption.

Many of these pieces are not easy. For example, the Intel QAT and Xeon Phi x200 article series each required 80+ hours of behind-the-scenes, hands-on work to complete. We have been making investments even in these niche technologies just to ensure we are ready as they become mainstream.

The Note Driving STH

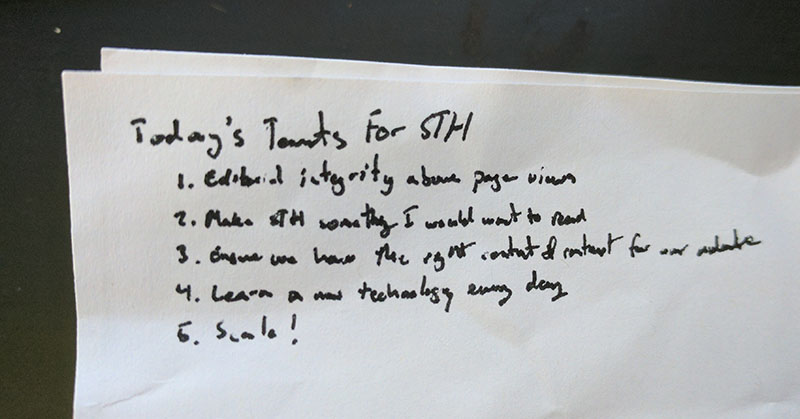

Every morning I look at a handwritten note that has key principles behind STH.

Since my handwriting is terrible, here is the list transcribed:

- Editorial integrity above page views

- Make STH something I would want to use and visit

- Ensure we have the right content and context for our audience

- Learn a new technology area each day

- Scale

That is not a perfect list, but at 4:45 AM each morning it starts my day (along with coffee). Over the last eight years, that list has morphed, but the direction has been the same. It has also led to annual growth in the 75-100% Y/Y range, even as fewer technology sites spend editorial resources on datacenter products.

A Thank You to Our Community and Final Words

I just wanted to say thank you to our awesome community both on the main site and on the STH Forums. The STH team and I learn a lot from the experts we have in our community. The strength of the STH Forum community is bolstered by our forum moderators, who set an exemplary tone.

To those that I have met in person on five continents over the past few years, thank you for taking the time out of your day to give live feedback. Our user feedback sessions covered eight countries and eleven US states last year alone. Those interactions help us shape STH immensely.

Thank you to our industry (hardware and software) community that helps get reviews done on STH. Without your support, STH could not grow.

I did want to take a special moment to thank William Harmon for his great reviews. Thanks also to both Rohit and Cliff for picking up coverage in areas such as networking and storage where we needed help this year.

There is certainly more to come for STH so stay tuned!

Congrats! Keep up the great work.

Recently found this website and bookmarked it after visiting two of your posts. Great info here!

Happy Birthday, STH!

Congratulations Patrick:

you’re a gentleman and a scholar too!

I don’t remember the article which led me to this site. The reason is clear, though… Accuracy, clarity, and the integrity you mention above have provided countless go-to articles and guides. For me, this is where I start. Thank you!

An excellent ethos, congratulations are in order for keeping this valuable resource going for 8 years strong. Good stuff.