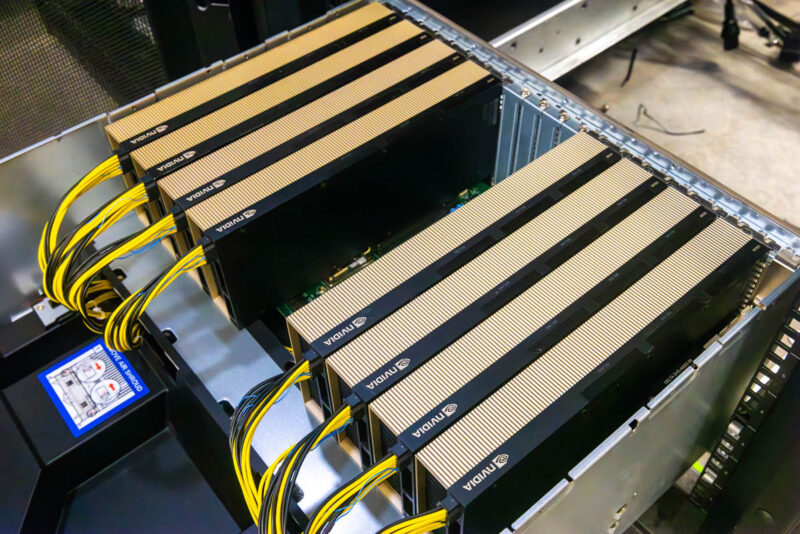

At the end of October 2023, we published "NVIDIA L40S is the NVIDIA H100 AI Alternative with a Big Benefit." That article has been passed around in many circles, so we recorded a video version using footage from our visit to Supermicro. The NVIDIA L40S has been one of the most interesting GPU launches in recent history because it offers a very different price, performance, and capability set compared to the NVIDIA A100 and H100 GPUs.

NVIDIA L40S vs H100 vs A100: The Video

If you want to check out the video, you can find it here:

As always, you may want to watch the video in its own browser, tab, or app for a better viewing experience.

From our initial piece, there are a few points worth revisiting:

- The L40S is a massively improved card for AI training and inference versus the L40, but the common heritage is easy to see.

- The L40 and L40S are not the cards to choose if you need maximum memory capacity, memory bandwidth, or FP64 performance. Given how much share AI workloads have taken from traditional FP64 compute these days, most folks will be more than OK with this trade-off.

- The L40S may look like it has significantly less memory than the NVIDIA A100, and physically it does, but that is not the whole story. The NVIDIA L40S supports the NVIDIA Transformer Engine and FP8. An FP8 value is half the size of an FP16 value (one byte versus two), so it uses less memory and requires less memory bandwidth to move. NVIDIA is pushing the Transformer Engine because the H100 also supports it, and it helps lower the cost or increase the performance of NVIDIA's AI parts.

- The L40S has a more visualization-heavy set of video encode/decode engines, while the H100 focuses on the decoding side.

- The NVIDIA H100 is faster, but it also costs a lot more. To give a sense of scale, on CDW, which lists public prices, the H100 is around 2.6x the price of the L40S at the time of writing.

- Another big factor is availability. Getting an NVIDIA L40S is much faster these days than waiting in line for an NVIDIA H100.
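The FP8 point in the list above comes down to simple byte arithmetic: an FP8 value takes one byte and an FP16 value takes two, so the same tensor stored in FP8 needs half the memory and half the bandwidth to move. The short Python sketch below illustrates this for model weights only. The 13-billion-parameter model size and the helper names `weight_footprint_gb` and `bytes_moved_ratio` are hypothetical, chosen just for illustration. The sketch ignores activations, KV caches, and framework overhead, and it does not model how the Transformer Engine decides when to use FP8 versus higher precision.

```python
# Minimal sketch: weight-storage footprint of a model at different precisions.
# Hypothetical illustration only: counts weights alone and ignores activations,
# KV cache, optimizer state, and framework overhead.

# Storage size of one value for each numeric format, in bytes.
BYTES_PER_VALUE = {
    "fp32": 4,
    "fp16": 2,
    "fp8": 1,
}


def weight_footprint_gb(num_params: int, dtype: str) -> float:
    """Return weight storage in decimal gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_VALUE[dtype] / 1e9


def bytes_moved_ratio(dtype_a: str, dtype_b: str) -> float:
    """How many times more bytes dtype_a needs than dtype_b for the same tensor."""
    return BYTES_PER_VALUE[dtype_a] / BYTES_PER_VALUE[dtype_b]


if __name__ == "__main__":
    params = 13_000_000_000  # hypothetical 13B-parameter model
    for dtype in ("fp16", "fp8"):
        print(f"{dtype}: {weight_footprint_gb(params, dtype):.1f} GB")
    print(f"fp16 moves {bytes_moved_ratio('fp16', 'fp8'):.0f}x the bytes of fp8")
```

Running it prints 26.0 GB for FP16 and 13.0 GB for FP8. Real mixed-precision deployments may keep some layers in higher precision, so the practical savings can be somewhat less than a clean 2x.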

Since we published the original piece, we have heard some interesting feedback. For example, folks are using these cards not just for AI clusters but also for visualization and vGPU clusters. Since the L40S has video pipelines and RT cores, the cards can serve vGPU workloads during the day and then switch to AI workloads in the evening, when vGPU demand is lower.

That is one of the key use cases for systems like the Supermicro SYS-521GE-TNRT that we used for these cards.

Final Words

The L40S is a really interesting card, and it is easier to get these days than the H100. Beyond ease of acquisition, the L40S also has a few features that the H100 and A100 lack. It may not be the card for those who also need FP64 compute, but for those who do not need that precision, it is a good alternative GPU.

Again, thanks to Supermicro and NVIDIA for getting us all of the hardware needed for this project.

The L40S is a fanless variant of the RTX 6000 Ada, and it runs DirectX, OpenGL, and Vulkan, but the H100 and H200 do not run graphics APIs at all.

I hope NVIDIA releases a DirectX-capable GPU with 80GB+ of memory to run pixel shaders.