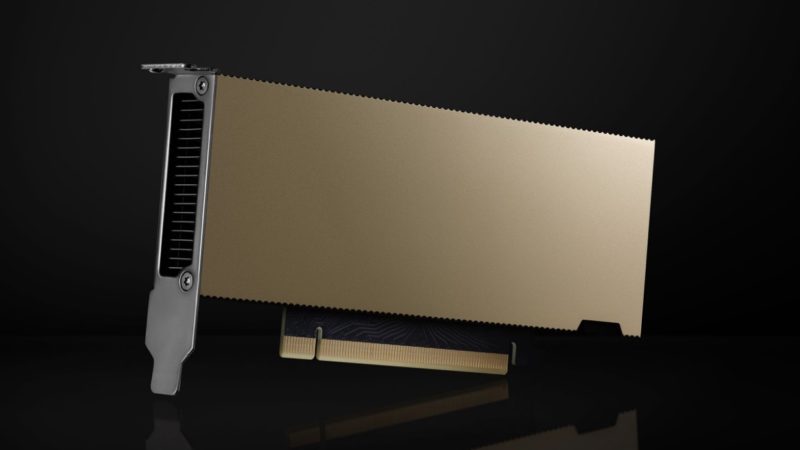

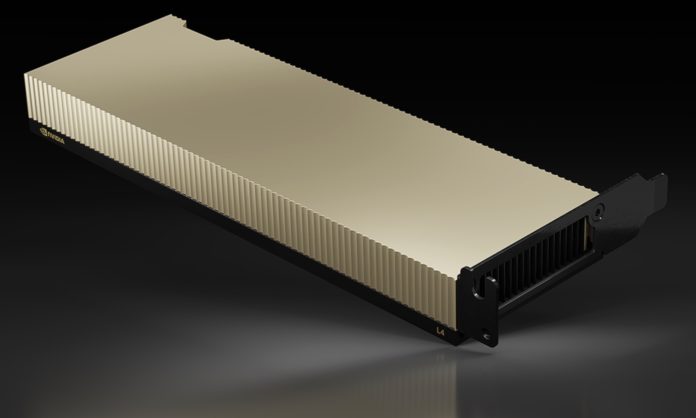

The NVIDIA L4 is going to be an ultra-popular GPU for one simple reason: its form factor pedigree. The NVIDIA T4 was a hit when it arrived, offering the company’s Tensor Cores and solid memory capacity, but the real reason for its success was the form factor. The NVIDIA T4 was a low-profile PCIe card that did not require an auxiliary power cable, which made it extremely easy to integrate into most servers. With this generation, the NVIDIA L4 looks to follow that recipe for success.

NVIDIA L4 Released with 4x NVIDIA T4 Performance in a Similar Form Factor

The NVIDIA L4 is a data center GPU from NVIDIA, but it is far from the company’s fastest. Here are the key specs of the new GPU:

| Specification | L4 |
|---|---|
| FP32 | 30.3 TFLOPS |
| TF32 Tensor Core | 120 TFLOPS* |
| FP16 Tensor Core | 242 TFLOPS* |
| BFLOAT16 Tensor Core | 242 TFLOPS* |
| FP8 Tensor Core | 485 TFLOPS* |
| INT8 Tensor Core | 485 TOPS* |
| GPU memory | 24GB |
| GPU memory bandwidth | 300GB/s |
| NVENC / NVDEC / JPEG decoders | 2 / 4 / 4 |
| Max thermal design power (TDP) | 72W |
| Form factor | 1-slot low-profile, PCIe |
| Interconnect | PCIe Gen4 x16, 64GB/s |

\* With sparsity.
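A quick way to put the table in perspective is to convert the headline figures into dense throughput and per-watt efficiency. The sketch below assumes the asterisked Tensor Core figures are peaks with 2:4 structured sparsity (so dense throughput is half) and divides by the 72W TDP rather than by measured power draw; the constant and function names are illustrative only.

```python
# Figures copied from the L4 spec table. Treating the asterisked Tensor Core
# numbers as sparse peaks (dense = half) is an assumption of this sketch.
TDP_W = 72
FP32_TFLOPS = 30.3
FP16_TENSOR_SPARSE_TFLOPS = 242


def dense_from_sparse(sparse_tflops: float) -> float:
    """Structured sparsity doubles the quoted peak, so dense is half of it."""
    return sparse_tflops / 2


def tflops_per_watt(tflops: float, watts: float) -> float:
    """Peak throughput divided by TDP (not measured power)."""
    return tflops / watts


fp16_dense = dense_from_sparse(FP16_TENSOR_SPARSE_TFLOPS)
print(f"FP16 Tensor Core, dense: {fp16_dense:.0f} TFLOPS")
print(f"FP32 per watt: {tflops_per_watt(FP32_TFLOPS, TDP_W):.3f} TFLOPS/W")
print(f"FP16 dense per watt: {tflops_per_watt(fp16_dense, TDP_W):.2f} TFLOPS/W")
```

Peak-per-TDP is a rough upper bound; real efficiency depends on clocks the card can sustain within its 72W limit.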

The 72W TDP is very important because a PCIe x16 slot can supply up to 75W, so the card can be powered from the PCIe Gen4 x16 slot alone, without an auxiliary power cable. Servers that provide auxiliary GPU power are easy to find, but what made the T4 successful was not requiring that support.
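The slot-power argument is simple arithmetic against the 75W a standard x16 slot can deliver. This minimal sketch assumes that 75W figure as the only budget and uses TDP as a stand-in for peak draw (real cards can have brief excursions); the T4's 70W TDP is included for comparison, and the helper names are hypothetical.

```python
# Assumption: a standard PCIe x16 slot supplies up to 75 W to an add-in card
# that has no auxiliary power connector. TDP is used as a proxy for peak draw.
SLOT_POWER_LIMIT_W = 75


def slot_power_headroom(tdp_w: float, limit_w: float = SLOT_POWER_LIMIT_W) -> float:
    """Watts left under the slot limit; negative means the slot alone is not enough."""
    return limit_w - tdp_w


def needs_aux_power(tdp_w: float, limit_w: float = SLOT_POWER_LIMIT_W) -> bool:
    """True if the card's TDP exceeds what the slot can provide."""
    return slot_power_headroom(tdp_w, limit_w) < 0


cards = {"NVIDIA T4": 70, "NVIDIA L4": 72}
for name, tdp in cards.items():
    print(f"{name}: {tdp} W TDP, headroom {slot_power_headroom(tdp)} W, "
          f"aux power needed: {needs_aux_power(tdp)}")
```

Both cards land just under the limit, which is exactly the property that lets them drop into almost any server with a free low-profile slot.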

NVIDIA is also building on the T4’s NVENC/NVDEC capabilities, and per the keynote, this generation adds support for features like AV1. That will be important in workflows where video is uploaded, decoded, transformed by an AI model, and then re-encoded. Another main benefit of the NVIDIA T4 was that it offered a relatively easy way to add video encode/decode acceleration to a server.
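As an illustration of the decode and re-encode ends of such a pipeline, the sketch below builds (but does not run) an FFmpeg command that decodes on NVDEC and encodes AV1 on NVENC. It assumes an FFmpeg build compiled with CUDA/NVENC support and a GPU whose NVENC supports AV1 via the `av1_nvenc` encoder; the AI transformation step would sit between decode and encode and is not shown. The function name and file names are hypothetical.

```python
import shlex


def nvidia_av1_transcode_cmd(src: str, dst: str) -> list[str]:
    """Build an FFmpeg argument list: NVDEC decode, AV1 encode on NVENC.

    Assumes an FFmpeg build with CUDA/NVENC support and an AV1-capable NVENC.
    """
    return [
        "ffmpeg",
        "-hwaccel", "cuda",                # decode on the GPU (NVDEC)
        "-hwaccel_output_format", "cuda",  # keep decoded frames in GPU memory
        "-i", src,
        "-c:v", "av1_nvenc",               # re-encode video as AV1 on NVENC
        "-c:a", "copy",                    # pass the audio stream through
        dst,
    ]


cmd = nvidia_av1_transcode_cmd("input.mp4", "output.mkv")
print(shlex.join(cmd))
```

Keeping frames in GPU memory between decode and encode avoids round trips over PCIe, which matters on a card whose host link is a Gen4 x16 slot.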

Final Words

While this may not be NVIDIA’s biggest headline GPU, since it uses a fraction of the power and delivers a fraction of the performance of something like an NVIDIA H100, it is still an important announcement for the company: this is NVIDIA’s go-anywhere GPU offering.

Everybody forgot about the poor ol’ A2, huh?

These will be the go-to GPUs for VDI density in rack servers, which is one of the reasons the T4 was so popular. You can stick 8 of these in a 2U server and run 96 2GB vPC profiles, a perfect pairing with the new 96-core AMD processors. Crazy density.
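The density claim above is straightforward division. This sketch assumes 8 cards per server, 24GB per card, and fixed 2GB profiles that cannot span cards, and it ignores any framebuffer the vGPU software may reserve; the single-socket 96-core pairing is the commenter's scenario, and the function name is hypothetical.

```python
def vgpu_profiles(cards: int, mem_per_card_gb: int, profile_gb: int) -> int:
    """Count fixed-size vGPU profiles; each profile must fit on a single card."""
    return cards * (mem_per_card_gb // profile_gb)


profiles = vgpu_profiles(cards=8, mem_per_card_gb=24, profile_gb=2)
cpu_cores = 96  # assumed single 96-core CPU, per the comment above
print(f"{profiles} profiles, {cpu_cores / profiles:.1f} CPU cores per desktop")
```

Integer division per card is deliberate: leftover memory on one card cannot be combined with leftover memory on another to form an extra profile.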

A 75W card without video-out. If it’s for AI/ML tasks, why is it so power-constrained? If it’s for vGPU or video transcoding, why upgrade from any card made since 2015, when these tasks don’t need much power?

If you are looking for something stackable in double-width slots, I would rather go with an A5000/A6000.

I never understood why providers (AWS, for instance) offered the T4 when it was weak and not really cheap in its time.

Why is NVIDIA not publishing basic specs on the L4, like base/boost clocks and SM count?

It wins the FP32 TF/watt crown. In. One. Slot. I like it.

Patrick, can you verify whether it plays nice with NCCL P2P over PCIe, or is it gimped like the RTX 4000-series gaming and Ada workstation cards?
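One first check anyone with two or more cards can run is whether the CUDA driver reports peer-to-peer access between them. The sketch below assumes PyTorch is installed and uses its `torch.cuda.can_device_access_peer`; it returns an empty list when PyTorch or at least two CUDA GPUs are unavailable. Driver-level P2P support is a prerequisite, not proof that NCCL will use its P2P transport, so this is a quick probe rather than a benchmark.

```python
def peer_access_matrix() -> list[tuple[int, int, bool]]:
    """Report CUDA peer-to-peer access for every ordered pair of visible GPUs.

    Returns an empty list if PyTorch is missing or fewer than two GPUs exist.
    """
    try:
        import torch
    except ImportError:
        return []
    n = torch.cuda.device_count()  # 0 on CPU-only builds or machines
    results = []
    for i in range(n):
        for j in range(n):
            if i != j:  # only ask about distinct device pairs
                results.append((i, j, torch.cuda.can_device_access_peer(i, j)))
    return results


if __name__ == "__main__":
    pairs = peer_access_matrix()
    if not pairs:
        print("Need PyTorch and at least two CUDA GPUs.")
    for src, dst, ok in pairs:
        print(f"GPU{src} -> GPU{dst}: P2P {'yes' if ok else 'no'}")
```

To see which transport NCCL actually picks, running a collective with the `NCCL_DEBUG=INFO` environment variable set prints its channel and transport choices in the log.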

@altmind, think about where they get installed. I have never seen server room HVAC installed atop a telephone pole or inside a kerbside utility box. Have you?

Very poor bandwidth/TFLOPS ratios (for both GPU memory and PCIe bus bandwidth) will make it suboptimal for many use cases. A high price per TFLOP (relative to, e.g., the A4000) wouldn’t help either.
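The bandwidth complaint can be quantified with a roofline-style "ridge point": peak compute divided by bandwidth, i.e. how many FLOPs a kernel must do per byte moved before it stops being bandwidth-bound. This sketch uses only the L4 table values (30.3 TFLOPS FP32, 300GB/s memory) and assumes the quoted 64GB/s PCIe Gen4 x16 figure counts both directions, so one direction is about 32GB/s. It does not compare against the A4000, since the source gives no figures for it.

```python
# L4 figures from the spec table; halving the 64 GB/s PCIe number to get a
# per-direction rate is an assumption (the quoted figure is bidirectional).
FP32_TFLOPS = 30.3
MEM_BW_GBS = 300
PCIE_BW_GBS_PER_DIRECTION = 64 / 2


def ridge_point(tflops: float, bw_gbs: float) -> float:
    """FLOPs per byte needed to be compute-bound rather than bandwidth-bound."""
    return (tflops * 1e12) / (bw_gbs * 1e9)


print(f"vs. GPU memory: {ridge_point(FP32_TFLOPS, MEM_BW_GBS):.0f} FLOP/byte")
print(f"vs. PCIe, one direction: "
      f"{ridge_point(FP32_TFLOPS, PCIE_BW_GBS_PER_DIRECTION):.0f} FLOP/byte")
```

A higher ridge point means fewer workloads can keep the compute units busy; kernels with low arithmetic intensity will be limited by the 300GB/s memory long before they reach the FP32 peak.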