The NVIDIA ConnectX-8 SuperNIC offers Next-Generation 800G Performance

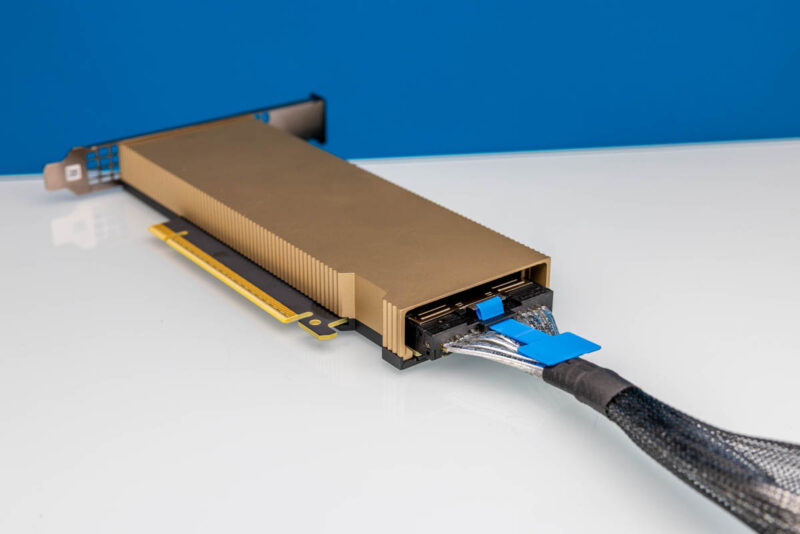

Now that we have everything connected, there are a number of other steps. You do not get 800Gbps out of these NICs simply by plugging them in and getting connectivity. Steps like optimizing the device queues, mapping each port to the correct NUMA node, and placing workloads on the cores closest to the PCIe controllers on the 128-core Intel Xeon 6980P CPUs are essential. Since we have two 400GbE ports and two PCIe Gen5 x16 links to the CPUs, the configuration looks a lot like what we showed in our NVIDIA GB10 ConnectX-7 200GbE Networking is Really Different piece. With that tuning done, we have two ConnectX-8 NICs installed in our Keysight CyPerf testing box.
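As a rough illustration of the NUMA placement step, here is a minimal Python sketch, assuming a Linux host that exposes the standard sysfs files `/sys/class/net/<iface>/device/numa_node` and `/sys/devices/system/node/node<N>/cpulist`. It is not necessarily how we tuned our box, and the helper names are hypothetical.

```python
import os
from pathlib import Path


def parse_cpulist(text: str) -> list[int]:
    """Expand a Linux cpulist string such as '0-3,8,10-11' into a list of CPU IDs."""
    cpus: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue  # empty file or trailing comma
        if "-" in part:
            start, end = part.split("-")
            cpus.extend(range(int(start), int(end) + 1))
        else:
            cpus.append(int(part))
    return cpus


def nic_numa_node(iface: str, sysfs: Path = Path("/sys")) -> int:
    """NUMA node the kernel reports for the NIC's PCIe device (-1 means unknown)."""
    return int((sysfs / "class/net" / iface / "device/numa_node").read_text())


def cores_near_nic(iface: str, sysfs: Path = Path("/sys")) -> list[int]:
    """CPUs on the same NUMA node as the NIC, or all online CPUs if none is reported."""
    node = nic_numa_node(iface, sysfs)
    if node < 0:
        return parse_cpulist((sysfs / "devices/system/cpu/online").read_text())
    return parse_cpulist((sysfs / f"devices/system/node/node{node}/cpulist").read_text())


def pin_current_process_near(iface: str) -> list[int]:
    """Restrict the calling process (e.g. a load generator) to cores local to iface."""
    cpus = cores_near_nic(iface)
    os.sched_setaffinity(0, cpus)  # Linux-only; 0 means "the calling process"
    return cpus
```

Calling `pin_current_process_near("ens1f0np0")` (a hypothetical interface name) before starting a traffic generator keeps it on the socket whose PCIe root serves that port. Queue counts and IRQ affinity still need separate tuning, for example with `ethtool -L`.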

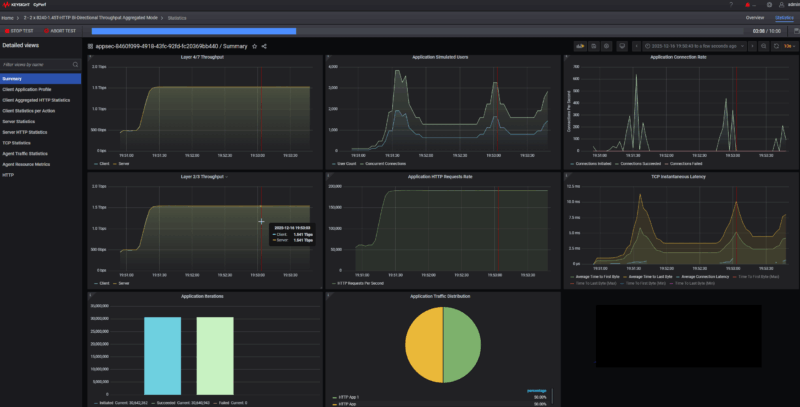

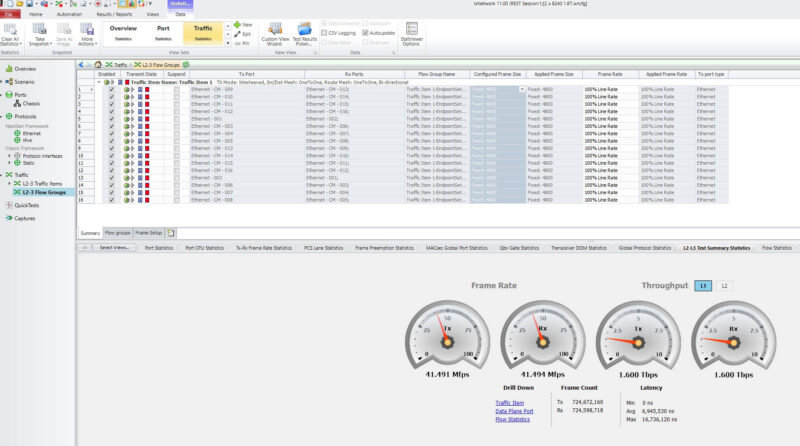

Many will know Ixia from its long lineage of network testing devices. Ixia is now part of Keysight, and since we do not have the IxNetwork test suite, especially at these speeds, Keysight assisted us in running this test with it. IxNetwork allows for high-accuracy, low-layer testing of network devices. Note that our CyPerf results above measure at L2/3 and L4/7; here is what we got at L1:

That is the ConnectX-8 delivering 1.6Tbps of L1 bandwidth, and on PCIe Gen5 servers no less, since PCIe Gen6 servers are still some time away. One reason we wanted to show this is that achieving full speed on a PCIe Gen5 server takes a lot of work. It is far different from a 10GbE or 100GbE NIC, where full performance comes online without much effort. Here, it came down to picking the right cores and getting the internal cabling right.
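For a rough sense of why 800GbE is so much harder on PCIe Gen5, the sketch below does the back-of-the-envelope link math. It is a simplified model: it counts only line encoding (128b/130b for Gen3 through Gen5) and ignores TLP/DLLP protocol overhead, so real throughput is lower. The Gen6 entry uses the raw 64 GT/s rate and does not model FLIT-mode FEC/CRC overhead. The function names are our own, for illustration.

```python
import math

# (transfer rate in GT/s per lane, line-encoding efficiency) per PCIe generation.
# Gen6 uses FLIT mode without 128b/130b encoding; its FEC/CRC overhead is NOT
# modeled here, so the Gen6 figure is an upper bound.
PCIE_GENERATIONS = {
    3: (8.0, 128 / 130),
    4: (16.0, 128 / 130),
    5: (32.0, 128 / 130),
    6: (64.0, 1.0),
}


def pcie_link_gbps(gen: int, lanes: int = 16) -> float:
    """Approximate one-direction link bandwidth in Gbps after line encoding only."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[gen]
    return rate_gt_s * lanes * efficiency


def links_needed(port_gbps: float, gen: int, lanes: int = 16) -> int:
    """Minimum number of identical PCIe links needed to carry port_gbps in one direction."""
    return math.ceil(port_gbps / pcie_link_gbps(gen, lanes))


for gen in (4, 5, 6):
    print(f"Gen{gen} x16: ~{pcie_link_gbps(gen):.0f} Gbps per direction; "
          f"400GbE needs {links_needed(400, gen)} link(s), "
          f"800GbE needs {links_needed(800, gen)}")
```

A Gen5 x16 link works out to roughly 504 Gbps per direction before protocol overhead, so one link can carry 400GbE but 800GbE needs two, which is why this setup uses two PCIe Gen5 x16 links.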

As you might imagine, the goal is to use our testing tool, which can generate loads exceeding 1.6Tbps, to test today’s 800GbE 51.2T switches. Being able to send and receive at 800GbE speeds is table stakes for testing these devices. If you were wondering why we have the Massive NVIDIA 800G OSFP to 2x 400G QSFP112 Passive Splitter DAC Cable on hand, stay tuned in the new year for that testing.

Final Words

This is nothing short of amazing. In January 2026, we hope to bring you more ConnectX-8 content, including how NVIDIA is using it alongside its latest B300 Blackwell Ultra GPUs. While the B300 supports PCIe Gen6, current servers are limited to PCIe Gen5. The ConnectX-8 is a key part of enabling next-generation GPU-to-network communication while we wait for new servers later in 2026. In the meantime, through a lot of work, we managed to get these running at 800Gbps even in today’s PCIe Gen5 servers, albeit in a high-end Supermicro Hyper SuperServer SYS-222HA-TN, which we have reviewed. Unfortunately, this configuration does not let us use PCIe Gen6 speeds, but hopefully we will get to do that in 2026.

Hopefully, our STH readers enjoyed this look at what is being dubbed a “SuperNIC.” What is certain is that it has capabilities not seen in other NICs, and we have brought 800GbE NIC speeds to current-generation servers, which are typically limited to 400GbE per NIC by their PCIe Gen5 x16 links. That makes this one of the more exciting reviews we have done in recent memory.

$300 switch $50 switch $3000 NIC. Talkin’ about the gamut

Too bad there isn’t much about the actual power consumption in the article.

Out of curiosity: I assume this would mostly not be preferred in dual-socket systems, since CPUs have purpose-built interconnects for chatting with one another. But if you are using one of these in a multi-node chassis or similar, can the two hosts connected to the NIC communicate directly, host-to-host, across the PCIe switch? Or is it purely a mechanism for exposing the NIC to more than one root complex?

I expect that you would want it to be very obvious whether or not that was enabled, to avoid unexpected lateral movement; but conceivably, not having to go out to the switch and back could be handy. There have been HPC systems that used PCIe as a node interconnect in the past.

@fuzzyfuzzyfungus Yes and no. The two sockets cannot communicate across the ConnectX-8’s PCIe switch as PCIe endpoints per se because each socket does not see the other as an endpoint. What does happen, however, is that the two network interfaces (one for each node) can communicate as network nodes via the embedded e-switch without going out to the external network switch.

Very useful article; I have recently been researching traffic testing of 800G OSFP.