Direct-to-Chip Liquid Cooling

While immersion cooling is always fun to look at, for many facilities, vertical racks are going to be the standard. For that, there is direct-to-chip liquid cooling. This method utilizes cold plates on hot components and removes heat by running liquid through the cold plates.

There are a few different ways for this to work. One can use chilled facility water as an input. Other options use an in-rack heat exchanger.

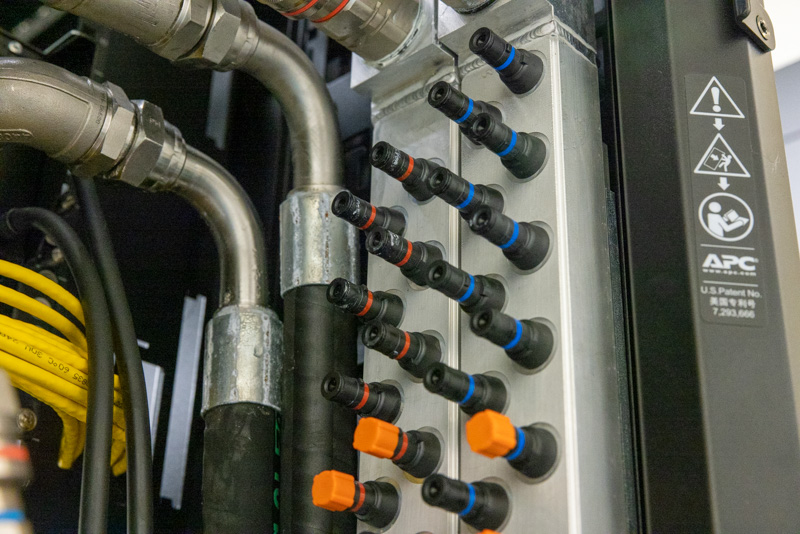

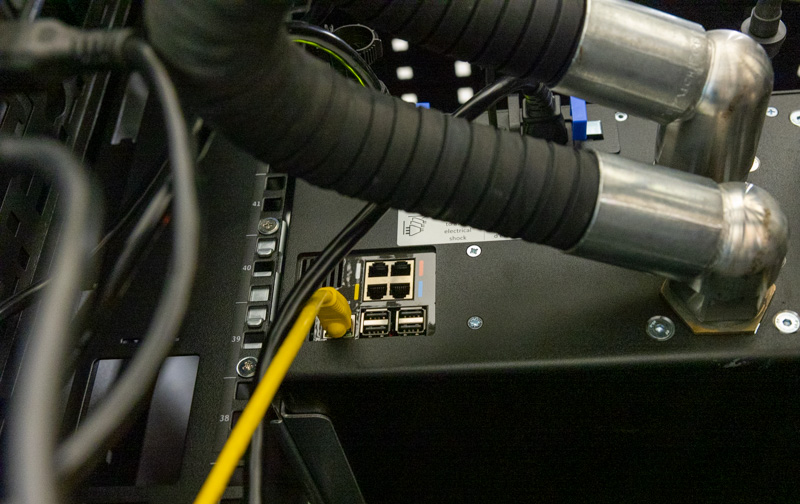

While we tend to focus on the water blocks that sit atop chips, in liquid cooling systems each server often has one or more loops. In the GPU server we are looking at there are five loops. With five loops per system, one needs a water distribution block and a pump infrastructure for the rack. This sits on the side of the rack opposite the PDUs. There are quick-connect fittings that pipe cool liquid into the server and warmed water away from the server.

Some of these solutions have heat exchangers that sit in racks that exhaust into the data center. Others exchange liquid with facility feeds.

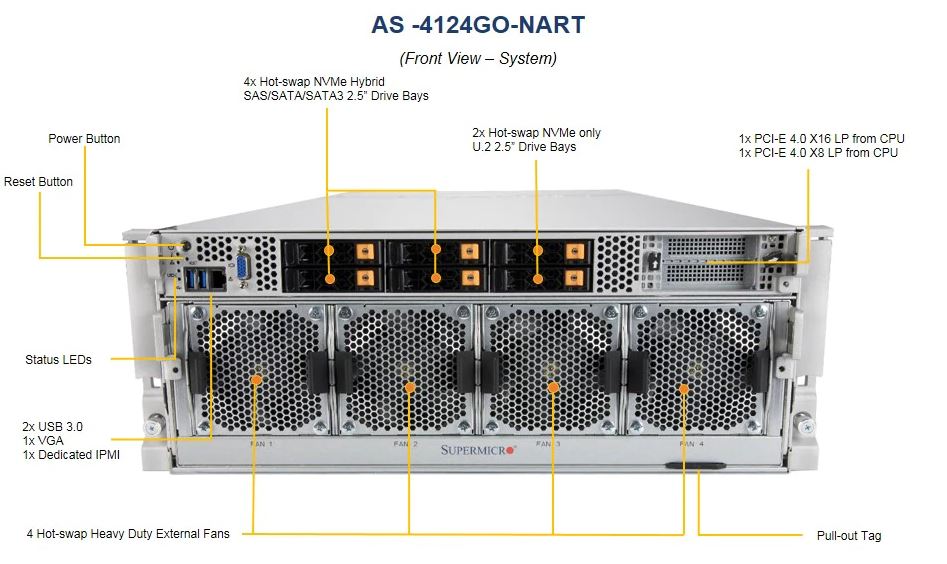

This, though, is the purpose of our visit. There are two servers, both the Supermicro AS-4124GO-NART that you may have seen in our Tesla Supercomputer piece.

One of these systems is an air-cooled 8x NVIDIA A100 40GB 400W system.

The other system is a liquid-cooled 8x NVIDIA A100 80GB 500W system.

With the 8x 400W NVIDIA A100 modules, as we will see, this system can pull over 5kW of power. In a 4U chassis, there are thermal limits to what can be cooled, so to move to the 500W modules, one needs liquid cooling.
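As a rough illustration of the jump in GPU module power between the two configurations, here is a minimal sketch. It counts only the rated power of the GPU modules (8x 400W versus 8x 500W from the text) and ignores CPUs, NVSwitches, fans, and power supply losses, so it is not a measurement of system power. The function name `gpu_power_budget` is hypothetical.

```python
def gpu_power_budget(num_gpus: int, watts_per_gpu: int) -> int:
    """Return the combined rated power of the GPU modules in watts."""
    return num_gpus * watts_per_gpu


# Figures from the article: 8x 400W (air-cooled) and 8x 500W (liquid-cooled).
air_cooled_w = gpu_power_budget(8, 400)
liquid_cooled_w = gpu_power_budget(8, 500)

# Relative increase in GPU module power when moving from 400W to 500W parts.
increase_pct = (liquid_cooled_w - air_cooled_w) / air_cooled_w * 100

print(f"Air-cooled GPU modules:    {air_cooled_w} W")
print(f"Liquid-cooled GPU modules: {liquid_cooled_w} W")
print(f"Increase: {increase_pct:.0f}%")
```

The extra 800W of GPU power has to be removed from the same 4U chassis, which is the heart of the cooling problem.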

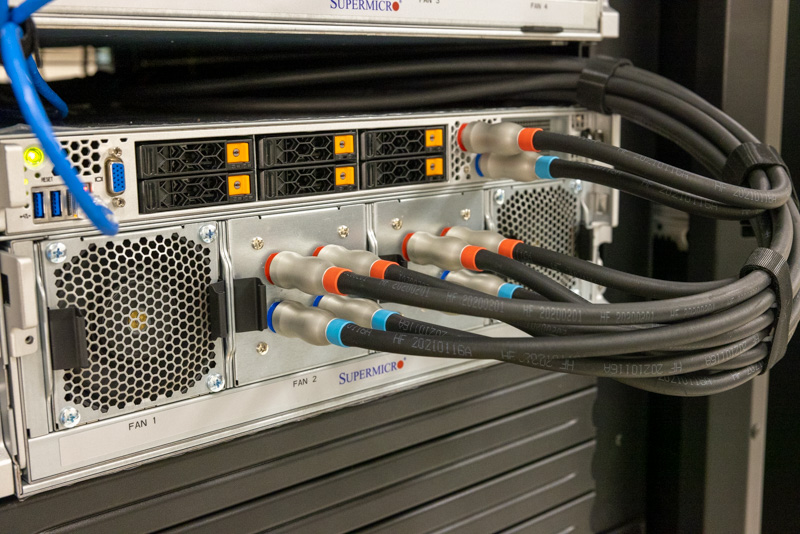

That means that the chassis with all of the quick connect fittings is the liquid-cooled unit. This has 8x 500W NVIDIA A100 modules.

These 500W units are important. In 2022 we will start to see 600W accelerators become more common. If you recall, a key challenge for server designers is being able to remove heat from a chip package’s surface quickly enough to prevent the chip from overheating. Given the larger package sizes for GPUs, a 400W GPU is still possible to cool via a heatsink and air. To get to this 400W figure, accelerators cannot be in PCIe form factors because they do not provide enough airflow.

At 600W, we expect everything other than perhaps more exotic, less-dense solutions to be liquid-cooled. With the 500W NVIDIA A100 80GB available today, some can cool 4x 500W GPUs in a 4U chassis if data center ambient temperatures are low enough. For most though, and especially with 8x GPU configurations, we see these being liquid-cooled.

One can see that the 500W server is missing two of the external fans in the center of the chassis, where the four GPU loops enter and exit. Since the CPUs, GPUs, and NVSwitches are being cooled by water, the fan load goes down significantly, which reduces power consumption.

Also, the solution had an in-rack Asetek InRackCDU installed. This is a 4U unit that handles the liquid-to-liquid heat exchange.

This unit manages the flow of water from the facility as well as a second loop of liquid in the rack. Heat is exchanged between the two loops so that the system is not constantly cycling the in-rack liquid. Also, because the exchange happens in a CDU, one can use water on the facility loop and a different liquid on the in-rack loop.
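To get a feel for how much coolant a loop like this has to move, the standard heat balance Q = ṁ · c_p · ΔT can be turned around to estimate flow. The sketch below is a back-of-the-envelope estimate, not a description of how the Asetek unit is sized. It assumes plain water (specific heat of about 4186 J/(kg·K), about 1 kg per liter), a heat load equal to the 8x 500W GPU modules only, and a hypothetical 10°C rise between supply and return; none of those operating values come from the article.

```python
WATER_SPECIFIC_HEAT_J_PER_KG_K = 4186.0  # approximate value for liquid water
WATER_DENSITY_KG_PER_L = 1.0             # approximate value for liquid water


def required_flow_lpm(heat_load_w: float, delta_t_k: float) -> float:
    """Estimate the water flow (liters/minute) needed to carry away heat_load_w
    with a supply-to-return temperature rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    mass_flow_kg_per_s = heat_load_w / (WATER_SPECIFIC_HEAT_J_PER_KG_K * delta_t_k)
    return mass_flow_kg_per_s / WATER_DENSITY_KG_PER_L * 60.0


# 8x 500W GPU modules; the 10 K temperature rise is an assumed value.
flow = required_flow_lpm(heat_load_w=8 * 500, delta_t_k=10.0)
print(f"Estimated flow: {flow:.1f} L/min")
```

Under these assumptions the estimate comes out to roughly 5.7 L/min for the GPU modules of one server. A smaller temperature rise or a coolant with a lower specific heat would require proportionally more flow.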

The Asetek unit also has all of the monitoring and alerting features for this rack.

It is important to keep in mind that since all three of these solutions are being used in data centers, monitoring and control are key capabilities for liquid cooling servers.
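To make the monitoring point concrete, here is a generic sketch of the kind of threshold checks a rack-level cooling monitor might run. It is not the Asetek interface. The `LoopReading` fields, the `check_loop` function, and the threshold values are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LoopReading:
    """One hypothetical sample from a rack coolant loop."""
    supply_temp_c: float
    return_temp_c: float
    flow_lpm: float
    leak_detected: bool


def check_loop(reading: LoopReading,
               max_return_temp_c: float = 60.0,  # assumed threshold
               min_flow_lpm: float = 4.0) -> List[str]:  # assumed threshold
    """Return a list of alert messages for a single reading (empty if healthy)."""
    alerts = []
    if reading.leak_detected:
        alerts.append("leak detected")
    if reading.flow_lpm < min_flow_lpm:
        alerts.append("low flow")
    if reading.return_temp_c > max_return_temp_c:
        alerts.append("return temperature high")
    return alerts


healthy = check_loop(LoopReading(30.0, 45.0, 6.0, False))
degraded = check_loop(LoopReading(30.0, 65.0, 2.0, False))
print(healthy)
print(degraded)
```

In practice, alerts like these would feed whatever alerting system the data center already uses, so that a leak or pump failure is caught before the servers throttle or shut down.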

So the purpose of the visit was to test the 400W versus the 500W top end in the same server platform to see what the impacts are.

The kiddies on desktop showed the way.

If data centers want their systems to be cool, just add RGB all over.

I’m surprised to see the Fluorinert® is still being used, due to its GWP and direct health issues.

There are also likely indirect health issues for any coolant that relies on non-native halogen compounds. They really need to be checked for long-term thyroid effects (and not just via TSH), as well as both short-term and long term human microbiome effects (and the human race doesn’t presently know enough to have confidence).

Photonics can’t get here soon enough, otherwise, plan on a cooling tower for your cooling tower.

Going from 80TFLOP/s to 100TFLOP/s is actually a 25% increase, rather than the 20% mentioned several times. So it seems the Linpack performance scales perfectly with power consumption.

Very nice report.

Data center transition for us would be limited to the compute cluster, which is already managed by a separate team. But still, the procedures for monitoring a compute server liquid loop are going to take a bit of time before we become comfortable with them.

Great video. Your next-gen server series is just fire.

Would be interesting to know the temperature of the inflow vs. outflow. Is the warmed water used for something?

Hello

I am a tailor who sews traditional pagnes (wrap cloths), etc.

I want to create a workshop for the children of tomorrow.

Thank you very much.

Hi Patrick.

Liked your video Liquid Cooling High-End Servers Direct to Chip.

Have you seen ZutaCore’s innovative direct-on-chip, waterless, two-phase liquid cooling?

I will be happy to meet at the OCP Summit and give you the two-phase story.

regards,