Kioxia CM6 Performance

We are first going to go into some unique testing we had to do before we could even get to results, and then we will discuss those results.

PCIe Gen4 NVMe Performance is Different

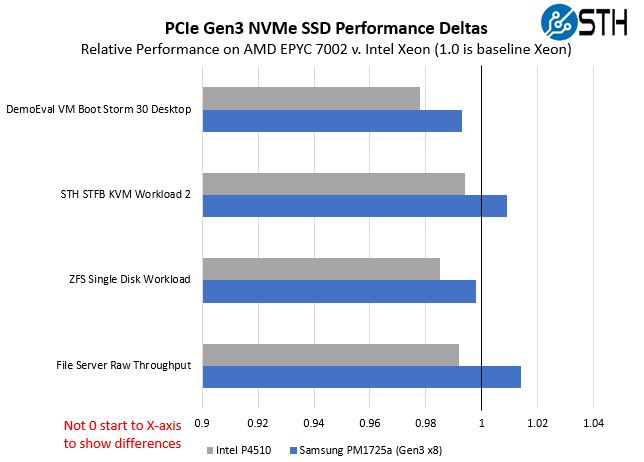

Something that was slightly unexpected, but perhaps should have been, is that PCIe Gen3 performance is not exactly the same on AMD EPYC 7002 "Rome" as on the Intel Xeon Scalable family. It is close, but there are differences. We even went as far as taking the tests we had run on 2-socket systems and repeating them on single-socket Intel Xeon platforms. While we could get consistent Intel-to-Intel performance on the same machine, performance was not as consistent between Intel and AMD.

As a result, we realized that we needed to re-test the comparison drives on an all-AMD EPYC 7002 platform. These deltas are small, and in terms of real-world impact, most would consider them completely irrelevant. They are generally within 2%, which one could attribute to test variation. However, since they are not a consistent 2% offset across the board but more of a +/- 2% spread, we made the call not to use legacy data in this review, since we strive for consistency. To that end, we also had to investigate another aspect of AMD EPYC performance: PCIe placement.
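The difference between a uniform offset and a +/- spread is what made legacy data unusable, and it is easy to check mechanically. The sketch below is only an illustration: the scores are made up, and `relative_deltas` and `is_uniform_offset` are hypothetical helpers, not part of STH's tooling. If every per-test delta shares a sign, a single correction factor could at least be considered; mixed signs rule that out.

```python
def relative_deltas(baseline: list[float], candidate: list[float]) -> list[float]:
    """Percent change of each candidate result versus the matching baseline result."""
    if len(baseline) != len(candidate):
        raise ValueError("result lists must be the same length")
    return [100.0 * (c - b) / b for b, c in zip(baseline, candidate)]

def is_uniform_offset(deltas: list[float]) -> bool:
    """True if every delta has the same sign, i.e. one platform is consistently
    ahead, so a single correction factor could at least be considered."""
    return all(d > 0 for d in deltas) or all(d < 0 for d in deltas)

# Hypothetical scores (higher is better) for the same drive on two platforms.
intel_scores = [100.0, 200.0, 50.0, 400.0]
amd_scores = [101.5, 197.0, 50.8, 394.0]

deltas = relative_deltas(intel_scores, amd_scores)
print("deltas (%):", [round(d, 2) for d in deltas])
print("largest swing (%):", round(max(abs(d) for d in deltas), 2))
print("uniform offset:", is_uniform_offset(deltas))
```

With these made-up numbers, every delta stays under 2%. However, the signs alternate, so no single scaling factor would reconcile the two platforms.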

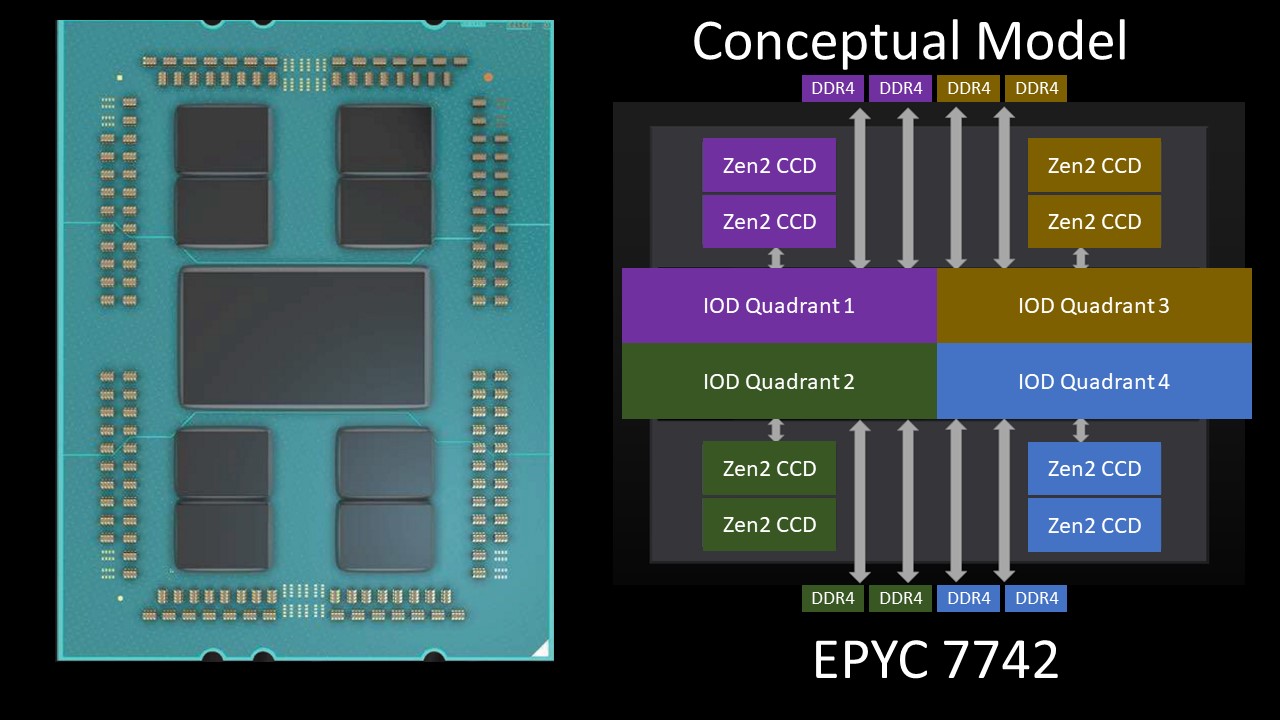

Even within the AMD EPYC 7002 series, one needs to be cognizant of die placement and capabilities. A great example is the AMD EPYC 7002 Rome CPUs with half memory bandwidth. In our piece on those CPUs, we go into the affected SKUs and why they are designed with fewer memory channels.

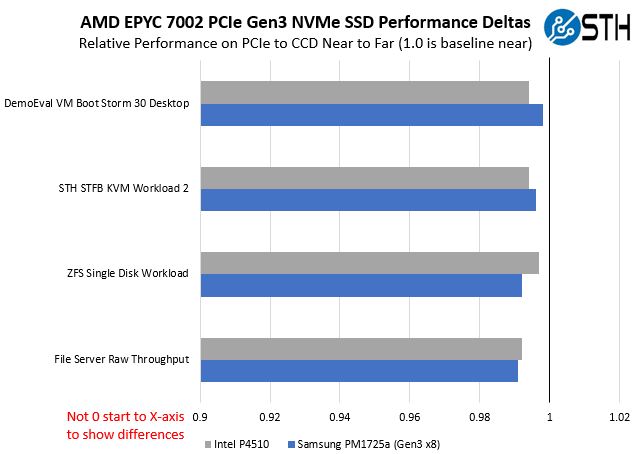

It turns out that when testing PCIe Gen4 devices on AMD EPYC SKUs, the placement of the workload on cores (and therefore on a specific AMD CCD) relative to the location of the PCIe lanes actually matters. As you can see from the diagrams above, the CCDs, RAM, and PCIe lanes can be far apart from one another on the massive I/O die (IOD). The impact on our workloads is very small (<1%), but we could measure it.
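On Linux, a first approximation of "which cores are close to this PCIe device" is the kernel's per-device `local_cpulist` file in sysfs. The sketch below reads that file and pins the current process to those cores. It assumes a Linux host, and the PCIe address is hypothetical. Also note that with EPYC in the default one-NUMA-node-per-socket (NPS1) BIOS setting, this list may simply contain every core on the socket. In that case, finer quadrant-level placement would need a different NPS setting or the platform's topology documentation. This is not necessarily how STH mapped its workloads.

```python
import os
from pathlib import Path

SYSFS_PCI = Path("/sys/bus/pci/devices")

def parse_cpulist(text: str) -> set[int]:
    """Parse a Linux cpulist string such as '0-3,8,10-11' into a set of CPU ids."""
    cpus: set[int] = set()
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            start, end = part.split("-")
            cpus.update(range(int(start), int(end) + 1))
        else:
            cpus.add(int(part))
    return cpus

def device_local_cpus(pci_address: str) -> set[int]:
    """CPUs the kernel reports as local to a PCIe device (its NUMA node's CPUs)."""
    return parse_cpulist((SYSFS_PCI / pci_address / "local_cpulist").read_text())

if __name__ == "__main__":
    pci_address = "0000:41:00.0"  # hypothetical address; look yours up with lspci
    if (SYSFS_PCI / pci_address).exists():
        os.sched_setaffinity(0, device_local_cpus(pci_address))  # Linux only
        print("pinned to CPUs:", sorted(os.sched_getaffinity(0)))
    else:
        print(f"{pci_address} not found; adjust the address for this system")
```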

Further, we found that in some of our latency testing, the 48-core (6 CCD) SKUs and lower-clocked SKUs were less consistent than the higher-clocked 4 or 8 CCD SKUs. Even with a single PCIe Gen4 device, not all AMD EPYC 7002 SKUs are created equal. This is less pronounced with a single drive, as we are testing here. However, it becomes a much bigger challenge when moving to an array of drives, something one can see even with PCIe Gen3 SSDs on EPYC platforms.
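One simple way to put a number on "less consistent" is the coefficient of variation (standard deviation divided by mean) across repeated runs of the same test. The sketch below is our illustration, not STH's published metric. The run results and the `cpu_a`/`cpu_b` labels are hypothetical, not measurements of real SKUs.

```python
from statistics import mean, stdev

def coefficient_of_variation(samples: list[float]) -> float:
    """Sample standard deviation divided by the mean; lower means more consistent."""
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    return stdev(samples) / mean(samples)

# Hypothetical average-latency results (microseconds) from repeated runs of one test.
runs_by_cpu = {
    "cpu_a": [81.0, 82.0, 80.5, 81.5, 81.0],
    "cpu_b": [79.0, 86.0, 80.0, 91.0, 78.5],
}
for cpu, runs in runs_by_cpu.items():
    print(f"{cpu}: mean={mean(runs):.1f} us, CV={coefficient_of_variation(runs):.3%}")
```

The two hypothetical CPUs have similar mean latency, but one is far less repeatable. That kind of difference is hard to see from averages alone.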

This review took so long to publish simply because it took a long time to validate this behavior and then decide upon a workaround. The mapping of PCIe slots to parts of the I/O die is not easy to trace on many systems. We also needed a slot that could host both the PCIe Gen4 SSDs and a Gen3-era x8 SSD (the PM1725a). As a result, we ended up building our test setup around a single x16 slot in our Tyan EPYC Rome test system and then mapping workloads to AMD's CCDs around that slot. We also used the AMD EPYC 7F52 because it has all eight CCDs enabled with 256MB of L3 cache while also running at high clock speeds, so we did not end up single-thread limited in our tests.
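Mapping workloads to CCDs starts with knowing which cores share a cache. On Zen 2, each L3 is shared by one CCX, and each CCD contains two CCXs, so L3 domains are a CCX-level view of the topology. The sketch below groups CPUs by the L3 `shared_cpu_list` that Linux exposes in sysfs. It is an illustrative helper (`read_l3_sharing` and `group_by_l3` are hypothetical names), and it assumes the standard `/sys/devices/system/cpu/cpuN/cache/indexM/` layout.

```python
from collections import defaultdict
from pathlib import Path

def read_l3_sharing(cpu_root: Path = Path("/sys/devices/system/cpu")) -> dict[int, str]:
    """Map each CPU id to the shared_cpu_list string of its level-3 cache."""
    sharing: dict[int, str] = {}
    if not cpu_root.is_dir():
        return sharing
    for cpu_dir in cpu_root.glob("cpu[0-9]*"):
        cache_dir = cpu_dir / "cache"
        if not cache_dir.is_dir():
            continue  # e.g. offline CPUs may not expose cache info
        for index_dir in cache_dir.glob("index[0-9]*"):
            level_file = index_dir / "level"
            if level_file.exists() and level_file.read_text().strip() == "3":
                shared = (index_dir / "shared_cpu_list").read_text().strip()
                sharing[int(cpu_dir.name[3:])] = shared
                break
    return sharing

def group_by_l3(sharing: dict[int, str]) -> list[list[int]]:
    """Group CPUs that report the same L3 sharing list into one cache domain."""
    groups: dict[str, list[int]] = defaultdict(list)
    for cpu, shared in sharing.items():
        groups[shared].append(cpu)
    return sorted(sorted(cpus) for cpus in groups.values())

if __name__ == "__main__":
    for domain in group_by_l3(read_l3_sharing()):
        print(",".join(str(c) for c in domain))
```

Each printed group could then be handed to a pinning tool such as `taskset -c` so that a benchmark stays within one cache domain near the slot under test.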

Again, these are extremely small deltas, but they are important. They will also vary when one looks at other architectures. Examples include Arm players such as Ampere (Altra), Huawei (Kunpeng 920), Annapurna Labs/Amazon AWS (Graviton 2), NVIDIA-Mellanox BlueField, and soon Marvell with its ThunderX3, as well as IBM with Power9/10. The bottom line is that once we move out of a situation where Intel Xeon has 97-98%+ market share, and we have NVMe SSDs, this all matters. This is something we have been testing for years, including in our Cavium ThunderX2 review. Differences in PCIe controllers and chip capabilities are well known in the industry.

A downside of this approach is that, by building around a single PCIe Gen4 slot, our testing became serial rather than parallel. That was painful, given that we also could not use historical data for the comparison. Still, to test the Kioxia CM6 (and soon CD6), we had to get to this level of detail to have a valid comparison to other drives. Using our Xeon-based PCIe Gen3 test results would have been untenable.

Testing a full set of 24 SSDs, which is a more common deployment scenario, would mitigate the need for the above work. However, since we were looking at a single drive, this became important, especially for application-level testing.

Traditional “Four Corners” Testing

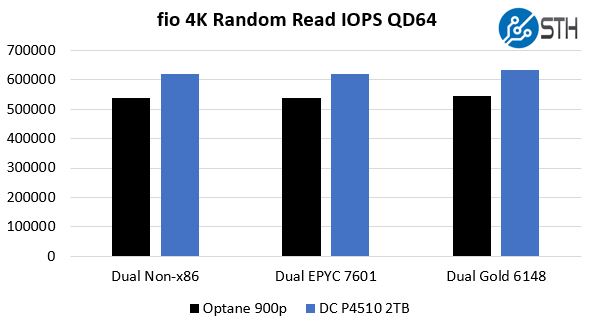

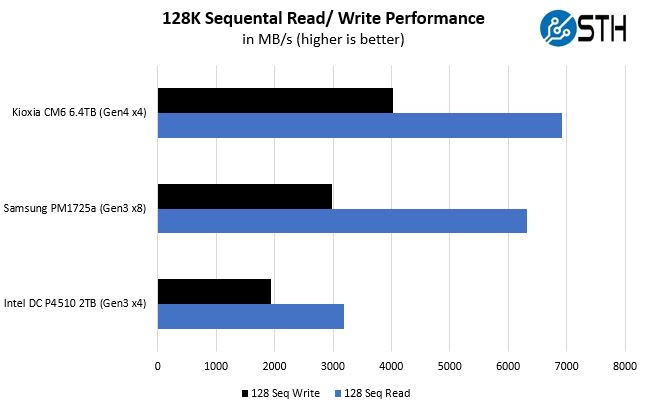

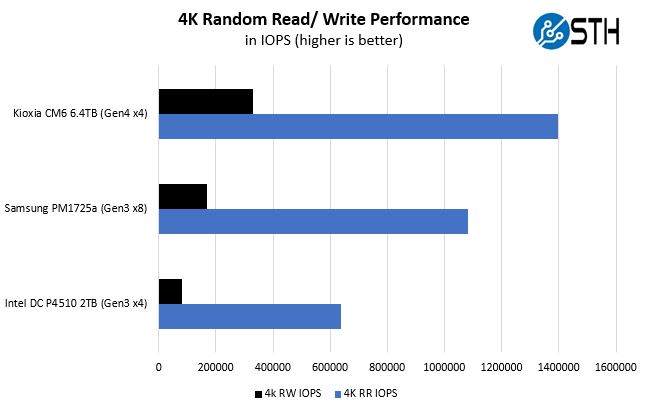

Our first test looks at sequential transfer rates and 4K random IOPS for the Kioxia CM6 6.4TB SSD. Please excuse the smaller-than-normal comparison set; see above for why we are not using legacy Xeon Scalable platform results.
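For readers who want to run something similar, "four corners" testing is commonly done with fio, covering sequential read/write and 4K random read/write. The sketch below only builds and prints fio command lines. The block sizes, queue depth, job count, runtime, and device path are assumptions, not STH's published settings. The optional `--cpus_allowed` flag pins fio's workers to chosen cores, which ties into the CCD placement issue above.

```python
import shlex
from typing import Optional

# Classic "four corners": large-block sequential and 4K random, read and write.
# Block sizes, queue depth, job count, and runtime below are assumptions.
FOUR_CORNERS = {
    "seq_read": ("read", "128k"),
    "seq_write": ("write", "128k"),
    "rand_read": ("randread", "4k"),
    "rand_write": ("randwrite", "4k"),
}

def fio_command(corner: str, device: str, iodepth: int = 32, numjobs: int = 4,
                runtime_s: int = 60, cpus: Optional[str] = None) -> list[str]:
    """Build an fio argument list for one corner against a raw block device."""
    rw, bs = FOUR_CORNERS[corner]
    cmd = [
        "fio", f"--name={corner}", f"--filename={device}",
        f"--rw={rw}", f"--bs={bs}", "--ioengine=libaio", "--direct=1",
        f"--iodepth={iodepth}", f"--numjobs={numjobs}",
        f"--runtime={runtime_s}", "--time_based", "--group_reporting",
    ]
    if cpus is not None:
        cmd.append(f"--cpus_allowed={cpus}")  # pin fio workers to chosen cores
    return cmd

if __name__ == "__main__":
    # Printing only: the write corners destroy data on the target device.
    for corner in FOUR_CORNERS:
        print(shlex.join(fio_command(corner, "/dev/nvme0n1", cpus="0-7")))
```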

Overall, we saw performance on par with the spec sheet. We did not quite hit Kioxia's rated ~7GB/s 128K sequential read figure, but this is still very good. If nothing else, validating our test platforms showed us that we should expect some deltas.

The key takeaway here is that we are seeing the Kioxia CM6 perform better than traditional PCIe Gen3 x4 SSDs where we have been using the Intel DC P4510 as our reference in this class of ~1-3 DWPD drives. Not only that, but we are actually getting better performance than PCIe Gen3 x8 devices such as the Samsung PM1725a 6.4TB AIC. Using eight lanes to get this level of performance is not preferable as it limits the number of devices that can be used in a system. Changing to PCIe Gen4 x4 means that we can double the number of drives that can potentially be connected to a server versus PCIe x8 devices.
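The lane math behind the x4-versus-x8 point can be worked out directly. PCIe Gen3 signals at 8 GT/s per lane and Gen4 at 16 GT/s, and both use 128b/130b encoding. A Gen4 x4 link therefore has the same raw bandwidth as a Gen3 x8 link while using half the lanes. The sketch below ignores packet-level protocol overhead, so real drives top out somewhat lower, and the lane budget is a hypothetical figure.

```python
import math

def lane_gb_per_s(gt_per_s: float) -> float:
    """Approximate payload GB/s for one PCIe lane using 128b/130b encoding.

    Ignores TLP/DLLP protocol overhead, so real drives top out somewhat lower.
    """
    return gt_per_s * (128 / 130) / 8  # GT/s -> usable Gb/s -> GB/s

def link_gb_per_s(gt_per_s: float, lanes: int) -> float:
    return lane_gb_per_s(gt_per_s) * lanes

GEN3_GT_S = 8.0   # PCIe 3.0 signaling rate per lane
GEN4_GT_S = 16.0  # PCIe 4.0 signaling rate per lane

gen3_x8 = link_gb_per_s(GEN3_GT_S, 8)
gen4_x4 = link_gb_per_s(GEN4_GT_S, 4)
print(f"Gen3 x8: {gen3_x8:.2f} GB/s, Gen4 x4: {gen4_x4:.2f} GB/s")
print("same link bandwidth:", math.isclose(gen3_x8, gen4_x4))

lane_budget = 64  # hypothetical number of lanes set aside for storage
print(f"{lane_budget} lanes -> {lane_budget // 8} x8 drives or {lane_budget // 4} x4 drives")
```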

STH Application Testing

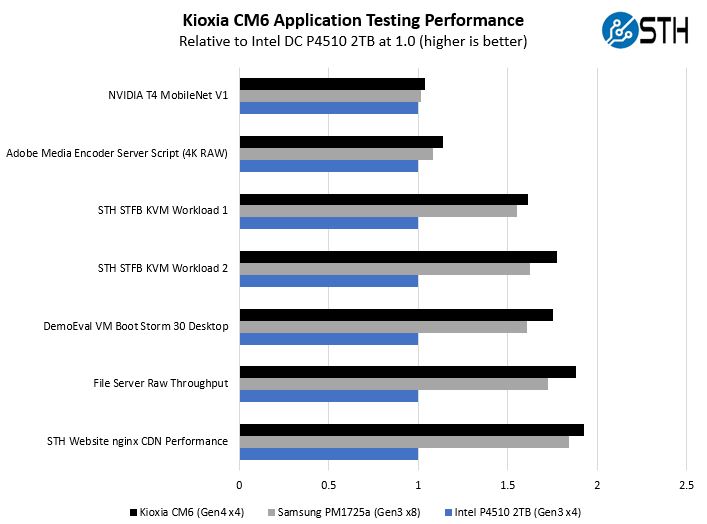

Here is a quick look at real-world application testing versus our PCIe 3.0 x4 and x8 reference drives:

As you can see, there is a lot of variability in how much impact the Kioxia CM6 and PCIe Gen4 have. Let us go through and discuss the performance drivers.

On the NVIDIA T4 MobileNet V1 script, we see only a small performance impact. The key here is that we are mostly limited by the performance of the NVIDIA T4, so storage is not the bottleneck, although we did see what looks like a latency-based benefit with the CM6. Likewise, our Adobe Media Encoder script times the copy to the drive, then the transcoding of the video file, followed by the transfer off of the drive. Here, we see a bigger impact because larger sequential reads/writes are involved, but the primary performance driver is still encoding speed. The key takeaway from these tests is that if you are compute-limited but still need to go to storage for some parts of a workflow, there is an appreciable impact. That impact is not as big as getting more compute. In other words, applications can benefit from a Kioxia CM6 PCIe Gen4 SSD over a PCIe Gen3 SSD, but will not necessarily see a 2x speedup.
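The reason a faster drive does not translate into a 2x application speedup is essentially Amdahl's law: only the storage-bound share of the runtime gets faster. The sketch below applies that general formula as an illustration, not as STH's analysis method. The storage fractions are hypothetical, and storage is assumed to get exactly 2x faster.

```python
def overall_speedup(storage_fraction: float, storage_speedup: float) -> float:
    """Amdahl's law: only the storage-bound share of runtime gets faster."""
    if not 0.0 <= storage_fraction <= 1.0:
        raise ValueError("storage_fraction must be between 0 and 1")
    if storage_speedup <= 0:
        raise ValueError("storage_speedup must be positive")
    return 1.0 / ((1.0 - storage_fraction) + storage_fraction / storage_speedup)

# Hypothetical workloads that spend 5% to 90% of their time waiting on storage.
for fraction in (0.05, 0.25, 0.5, 0.9):
    print(f"{fraction:.0%} storage-bound -> {overall_speedup(fraction, 2.0):.2f}x overall")
```

A workload that spends half its time on storage gains only about 1.33x from storage that is twice as fast. A GPU-bound inference job gains far less.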

On the KVM virtualization testing, we see a heavier reliance on storage. KVM Virtualization Workload 1 is more CPU-limited than Workload 2 or the VM Boot Storm workload. So while we see strong performance, the gains are not as large as in the other two. These are KVM virtualization-based workloads where our client tests how many VMs it can have online at a given time while completing work under the target SLA. Each VM is a self-contained worker. We know from our performance profiling that Workload 2, due to the databases it uses, scales better with fast storage and Optane PMem. At the same time, if the dataset is larger, PMem does not have the capacity to scale. This profiling is also why we use Workload 1 in our CPU reviews.
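The "how many VMs can stay under the SLA" metric amounts to searching for the largest VM count whose measured completion time still meets a target. STH has not described its client harness here, so the sketch below is generic. `measure` stands in for a real benchmark run, and `fake_measure` is a hypothetical model. The binary search is our choice and assumes completion time only rises as VMs are added.

```python
from typing import Callable

def max_vms_within_sla(measure: Callable[[int], float], sla_seconds: float,
                       max_vms: int) -> int:
    """Largest VM count whose measured completion time meets the SLA.

    Assumes completion time rises monotonically with VM count, so a binary
    search over 1..max_vms is valid. Returns 0 if even one VM misses the SLA.
    """
    lo, hi, best = 1, max_vms, 0
    while lo <= hi:
        mid = (lo + hi) // 2
        if measure(mid) <= sla_seconds:
            best, lo = mid, mid + 1
        else:
            hi = mid - 1
    return best

# Hypothetical stand-in for a real benchmark run: time grows with VM count.
def fake_measure(vms: int) -> float:
    return 10.0 + 0.5 * vms

print(max_vms_within_sla(fake_measure, sla_seconds=30.0, max_vms=100))
```

Faster storage would show up here as a flatter `measure` curve, letting more VMs fit under the same SLA.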

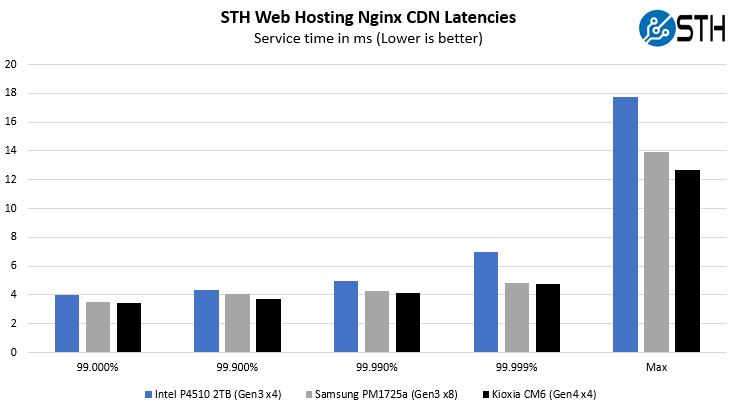

Moving to the file server and nginx CDN we see much better QoS from the new CM6 versus the PCIe Gen3 drives. Perhaps this makes sense if we think of a SSD on PCIe Gen4 as having a lower-latency link as well. On the nginx CDN test, we are using an old snapshot and access patterns from the STH website, with caching disabled, to show what the performance looks like in that case. Here is a quick look at the distribution:

Overall, we saw a few outliers, but this is excellent performance. Much of this workload would normally be cached for web hosting, but it at least gives us a quality of service data point for a real-world usage scenario.
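QoS comparisons like this one usually come down to the tail of the latency distribution rather than the average. The sketch below summarizes a set of request latencies with nearest-rank percentiles, the worst case, and a count of samples above an outlier threshold. The latencies and the 5 ms threshold are hypothetical, and the helper names are ours.

```python
import math

def percentile(sorted_samples: list[float], pct: float) -> float:
    """Nearest-rank percentile of an ascending list; pct is in (0, 100]."""
    if not sorted_samples:
        raise ValueError("no samples")
    rank = math.ceil(pct * len(sorted_samples) / 100)
    return sorted_samples[max(rank, 1) - 1]

def qos_summary(latencies_ms: list[float], outlier_ms: float) -> dict:
    """Median, tail percentiles, worst case, and count of samples above a threshold."""
    data = sorted(latencies_ms)
    return {
        "p50": percentile(data, 50),
        "p99": percentile(data, 99),
        "p99.9": percentile(data, 99.9),
        "max": data[-1],
        "outliers": sum(1 for x in data if x > outlier_ms),
    }

# Hypothetical request latencies (ms) replayed against the drive under test.
samples = [0.4, 0.5, 0.5, 0.6, 0.7, 0.5, 0.4, 12.0, 0.6, 0.5]
print(qos_summary(samples, outlier_ms=5.0))
```

A single slow request barely moves the median but dominates the maximum and the high percentiles. That is why a distribution plot says more about QoS than an average does.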

Since this section is already long, we have simplified the results. The key takeaways are:

- If you are mostly limited by CPU/GPU performance, the CM6 will likely still provide some benefit, but to a lesser extent.

- The more your workload is focused on raw data-movement performance, as in our virtualization, file server, and nginx CDN testing, the larger the performance gains the Kioxia CM6 can offer.

- PCIe Gen3 x8 SSDs can be competitive with the CM6, but a system can host only half as many x8 drives as x4 drives. From a serviceability standpoint, the x8 drive here is an add-in card and is not hot-swappable, while the U.3 CM6 can be swapped easily. That makes the CM6 a much more serviceable option for hitting this performance range.

Next, we are going to give some market perspective before moving to our final words.
