3rd Generation Intel Xeon Scalable Ice Lake Initial Performance Observations

Many of our readers simply want to know performance, but the platform is extremely important here. Unlike when we did our AMD EPYC 7003 launch piece, we are going to use the dual-socket comparisons here. We are also going to put a few single-socket comparison points into the mix just to discuss consolidation ratios.

As always, we will have our formal chip reviews after this, but we wanted to give some sense of performance outside of Intel’s AVX-512 and accelerator heavy comparisons. Effectively, we are looking at how software one is running on existing hardware performs, not highly optimized workloads.

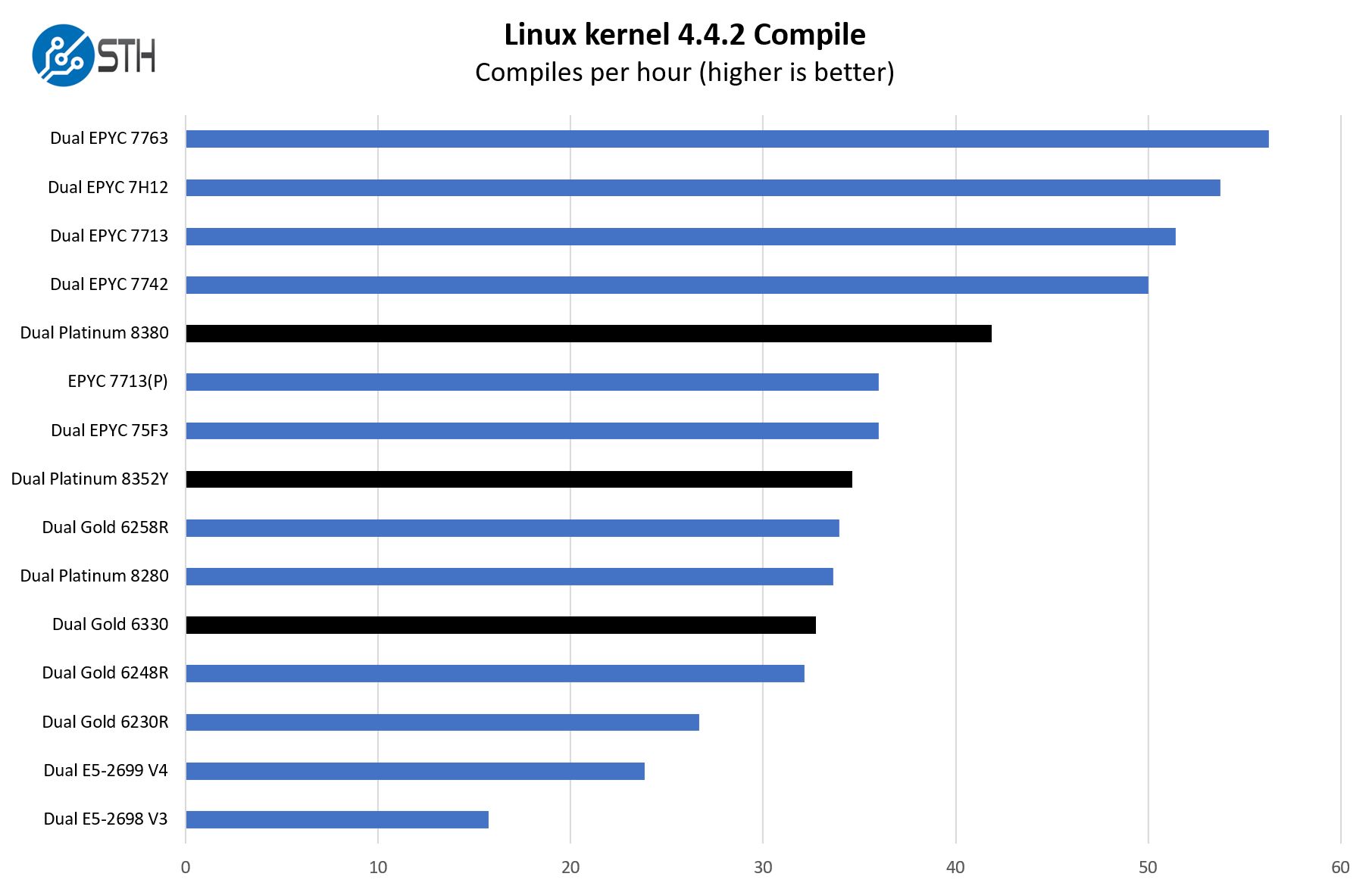

Python Linux 4.4.2 Kernel Compile Benchmark

This is one of the most requested benchmarks for STH over the past few years. The task was simple, we have a standard configuration file, the Linux 4.4.2 kernel from kernel.org, and make the standard auto-generated configuration utilizing every thread in the system. We are expressing results in terms of compiles per hour to make the results easier to read:

Overall, Intel is showing some massive performance gains. Intel simply does not have the core count to compete in many benchmarks that do not use its accelerators versus AMD. At the same time, a major story is simply that the dual Platinum 8380 bar is above the EPYC 7713(P) by some margin. This is important since it nullifies AMD’s ability to claim its chips can consolidate two of Intel’s highest-end chips into a single socket.

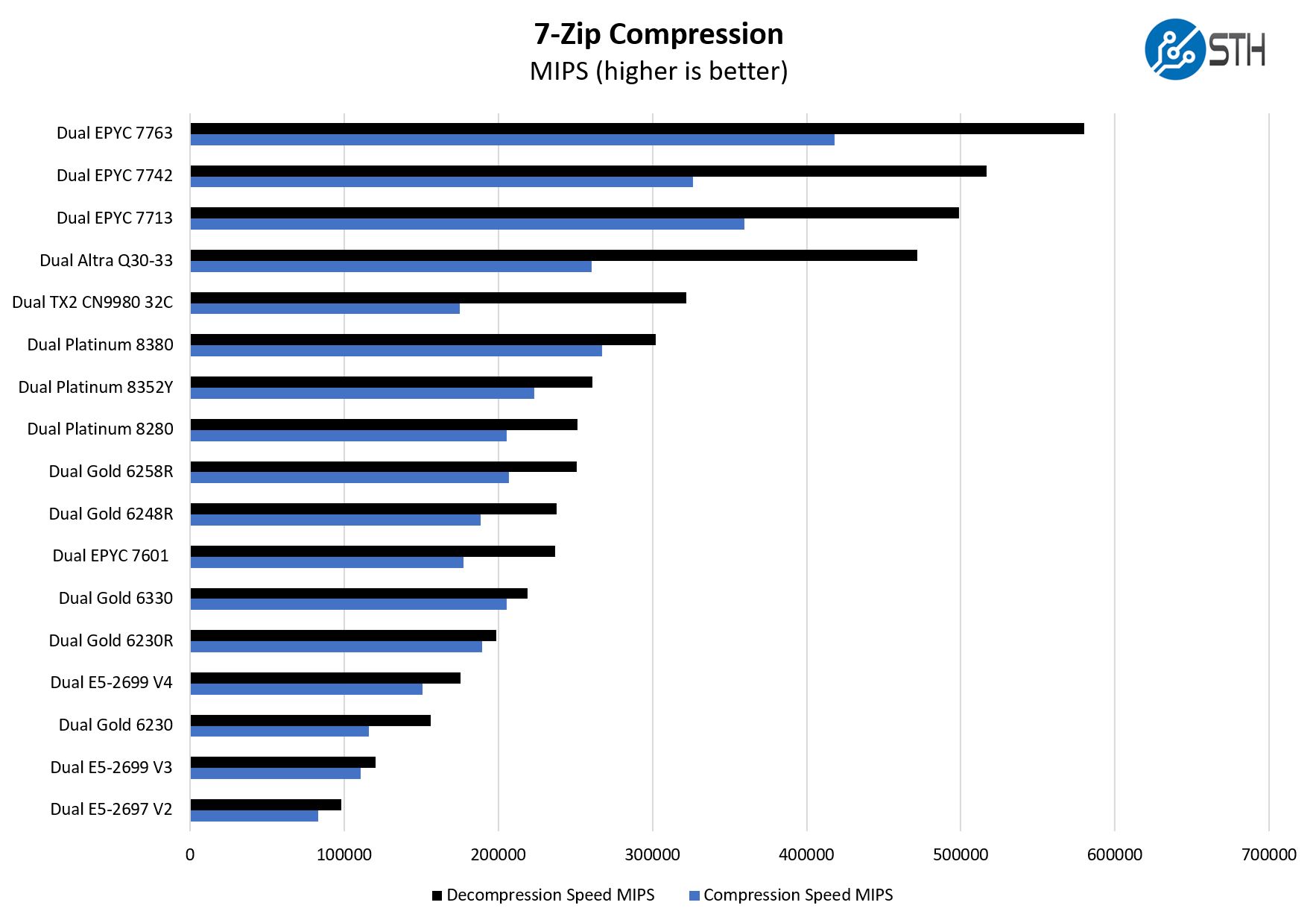

7-zip Compression Performance

7-zip is a widely used compression/ decompression program that works cross-platform. We started using the program during our early days with Windows testing. It is now part of Linux-Bench.

On the compression side, the dual Platinum 8380 would move up a bit, but we made the decision around a decade ago when we started running this benchmark on Windows that we would sort by decompression. Still, Intel is showing some very nice generational performance gains here. The high-end Xeon E5 V2/ V3 are 2:1 or better consolidation ratio to the Gold 6330.

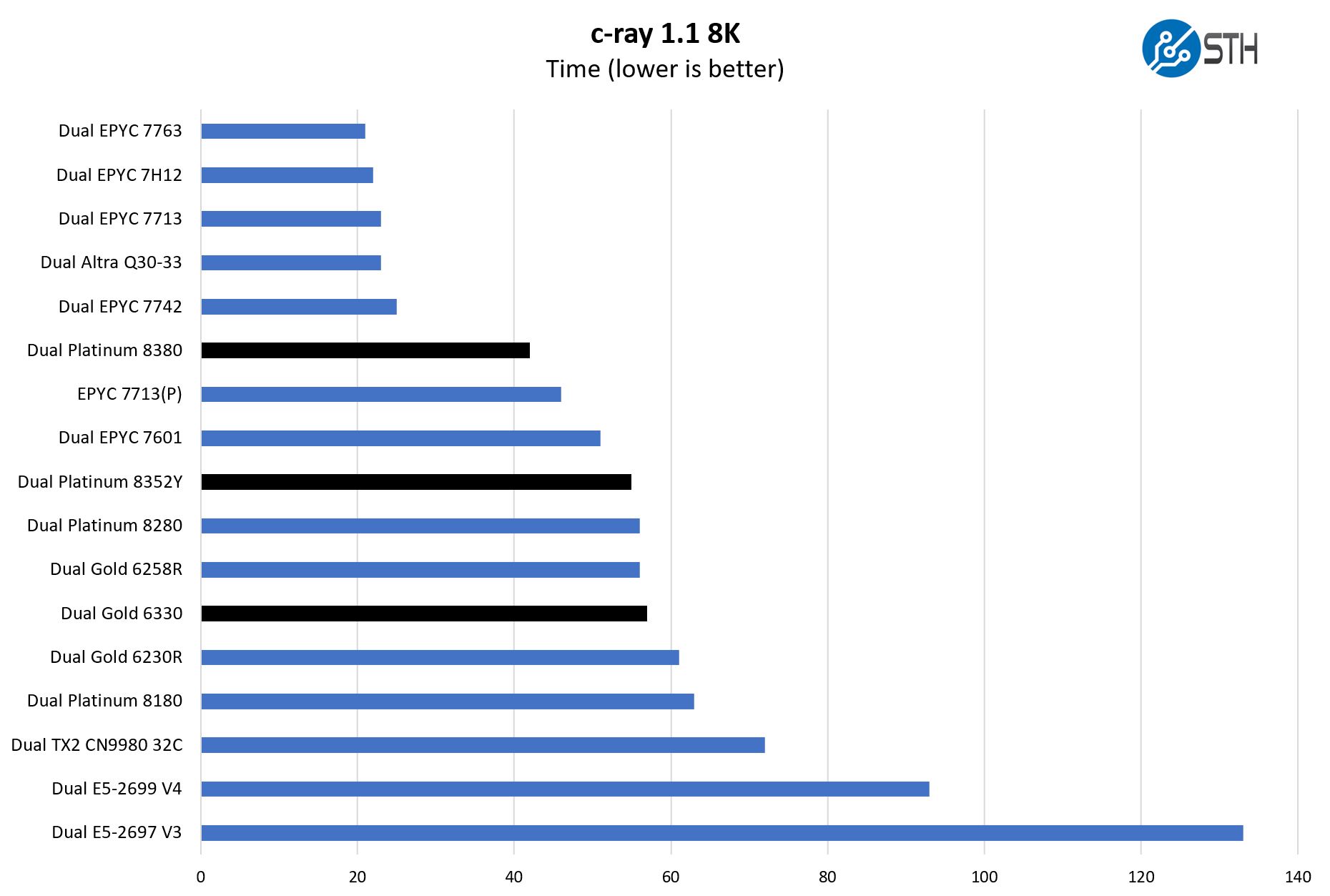

c-ray 8K Performance

Although not the intended job for these, we also just wanted to get something that is very simple and scales extremely well to more cores.

This is a test where AMD typically does extremely well, as does the Arm-based Ampere Altra. The Altra has 80 physical cores per socket instead of 40 cores and 80 threads. Still, we get nice gains on the Intel side and it is notable that the Platinum 8380’s are able to get ahead of the single EPYC 7713(P) here. Previously, AMD had a big enough advantage Intel could not have this good of a showing.

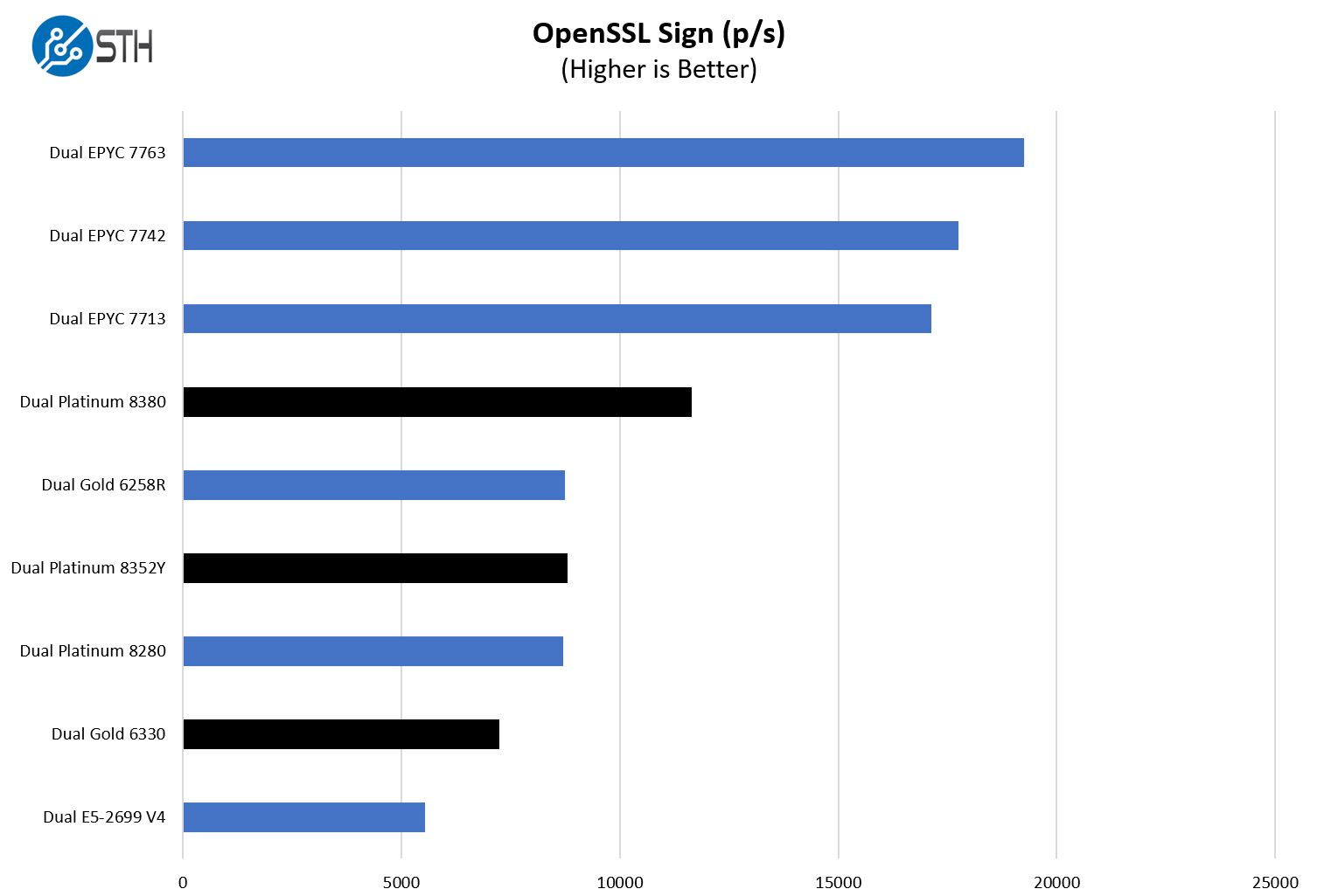

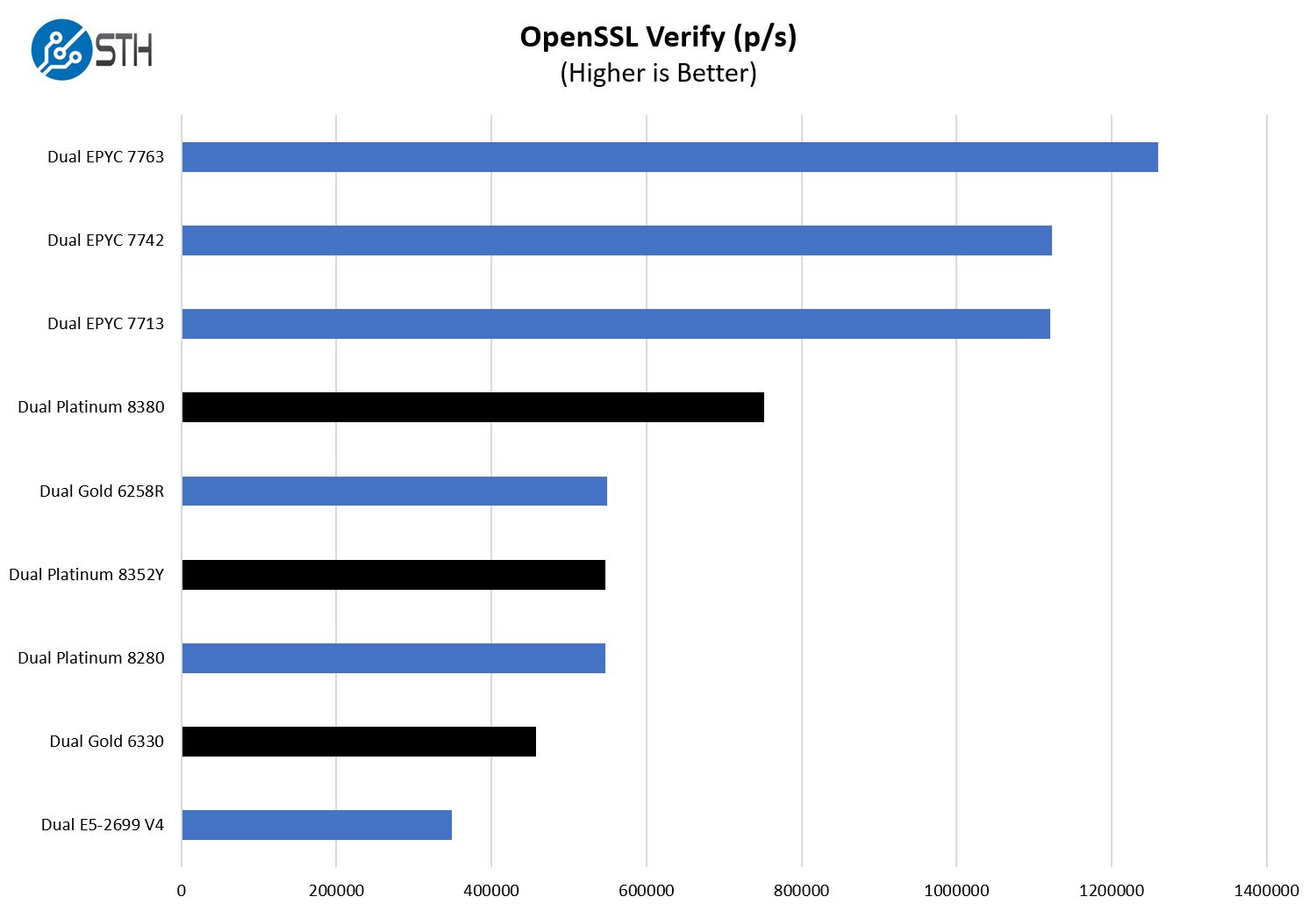

OpenSSL Performance

OpenSSL is widely used to secure communications between servers. This is an important protocol in many server stacks. We first look at our sign tests:

Here are the verify results.

Something that we want to point out here is that if one needed high-end OpenSSL acceleration, investing in a QAT enabled Lewisburg Refresh PCH, card, or getting a NIC/ DPU that could do offloading would be worthwhile. It is a bit hard to say one can pay more to upgrade the PCH and use a x16 PCIe link to the CPU to have QAT offload here, but then make a comparison to a Xeon E5-2699 V4 without an accelerator.

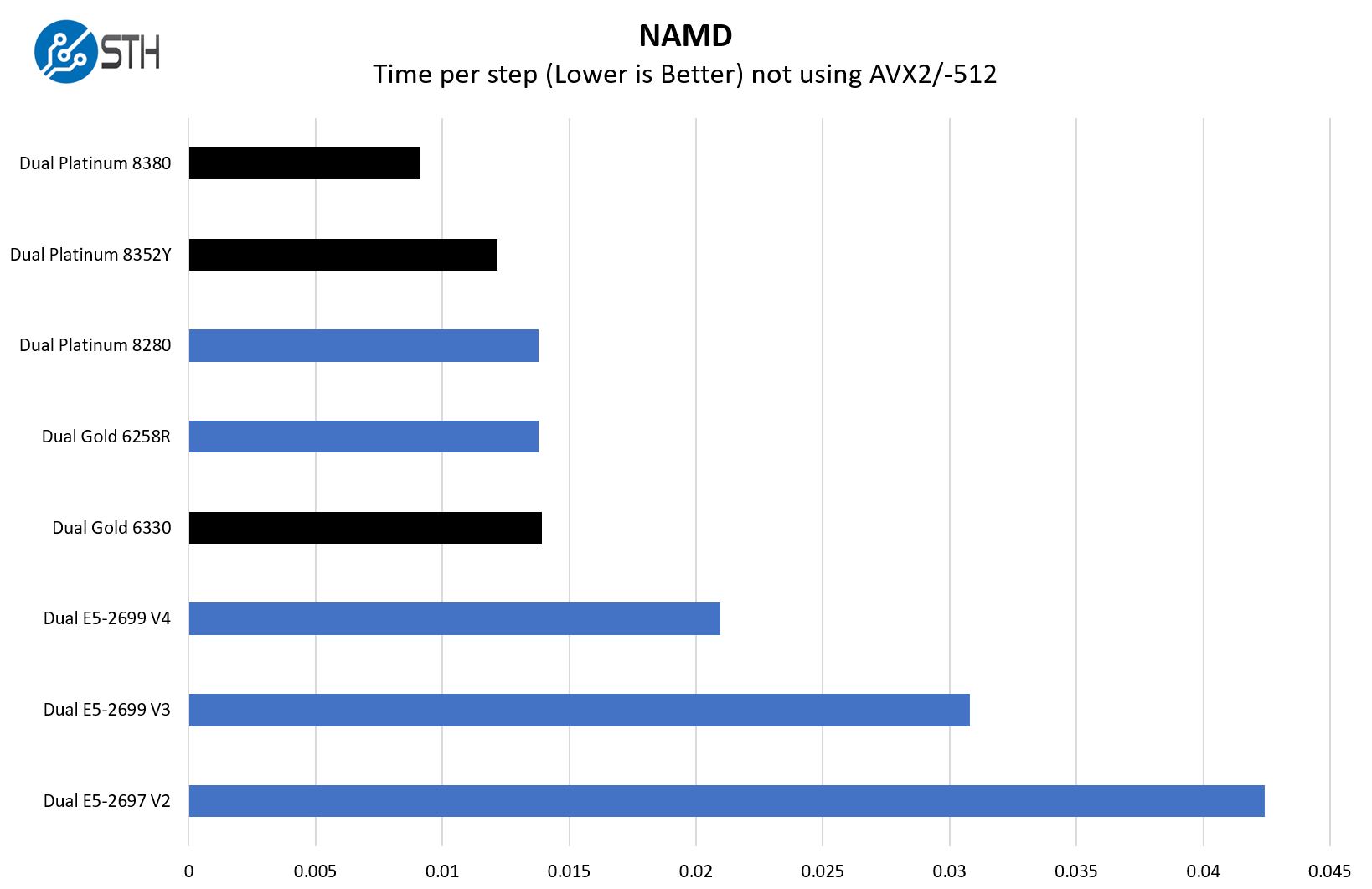

NAMD Performance

NAMD is a molecular modeling benchmark developed by the Theoretical and Computational Biophysics Group in the Beckman Institute for Advanced Science and Technology at the University of Illinois at Urbana-Champaign. More information on the benchmark can be found here. We are pulling out AVX2/ AVX-512 support here to give some sense of generational performance:

Since Intel went over-the-top showing AVX-512 enabled workloads, we are going the other direction. Here is an Intel SKU progression on NAMD without using AVX2/ AVX-512 so we can see raw compute power increase from Ivy Bridge to Ice Lake.

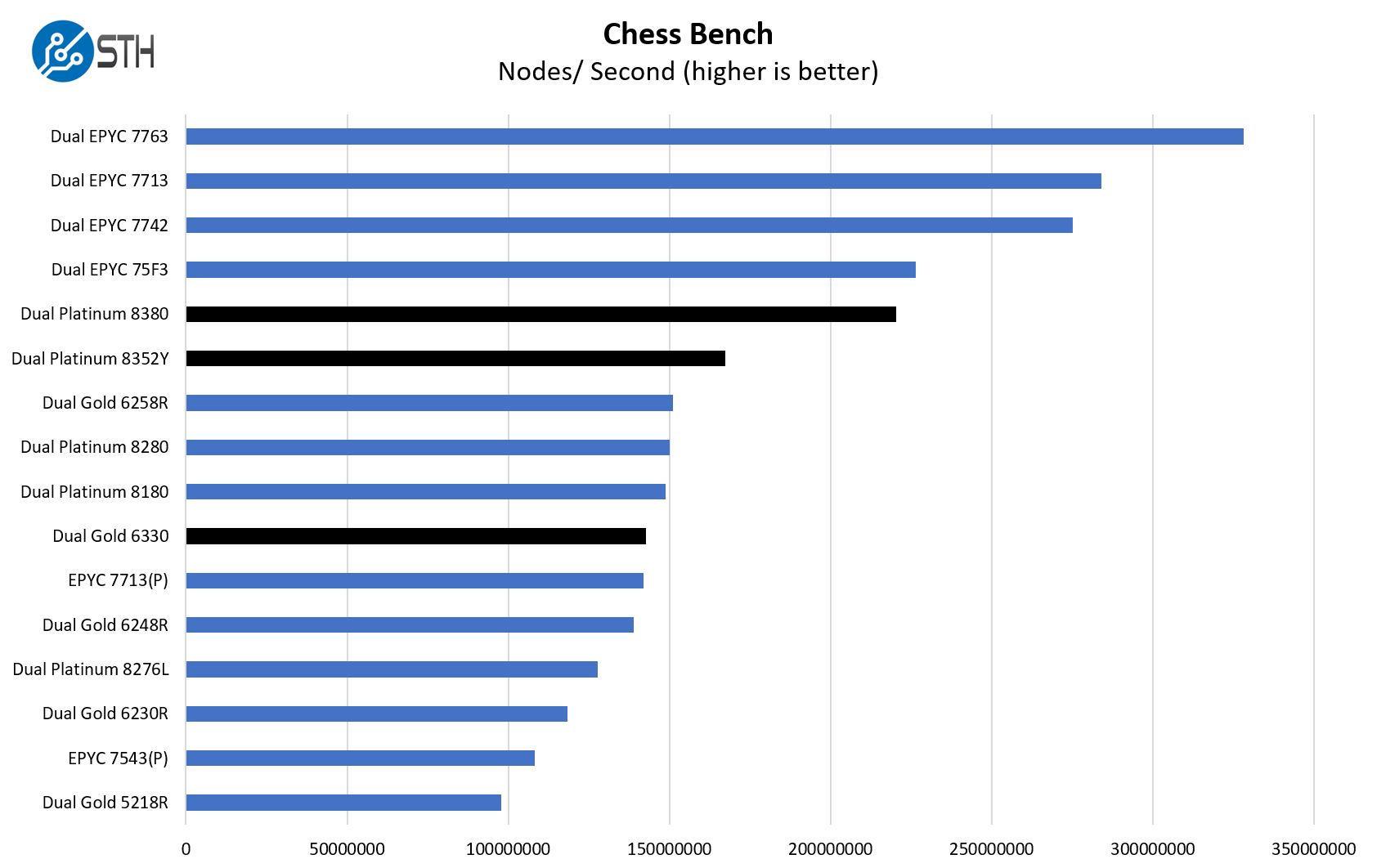

Chess Benchmarking

Chess is an interesting use case since it has almost unlimited complexity. Over the years, we have received a number of requests to bring back chess benchmarking. We have been profiling systems and now use the results in our mainstream reviews:

This is one where the Xeon Gold 6330 it appears fell victim to having higher IPC, but lower clocks than some older 28 core chips. On the other extreme, the Platinum 8352Y showed a lot more performance than the previous top-end 28 core parts.

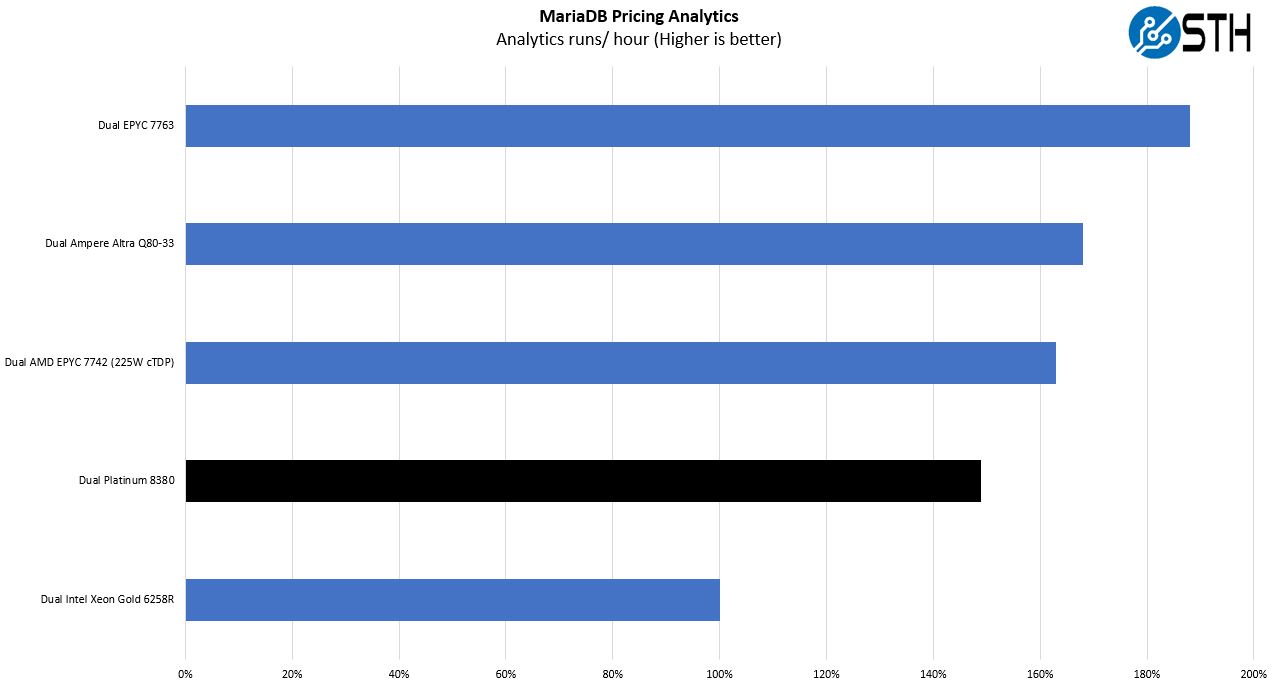

MariaDB Pricing Analytics

This is a personally very interesting one for me. The origin of this test is that we have a workload that runs deal management pricing analytics on a set of data that has been anonymized from a major data center OEM. The application effectively is looking for pricing trends across product lines, regions, and channels to determine good deal/ bad deal guidance based on market trends to inform real-time BOM configurations. If this seems very specific, the big difference between this and something deployed at a major vendor is the data we are using. This is the kind of application that has moved to AI inference methodologies, but it is a great real-world example of something a business may run in the cloud.

We are moving to top-bin SKUs here and are using the Gold 6258R to represent the Cascade Lake 28-core top-end. The performance was very close between it and the Platinum 8280 so instead of having the Platinum 8280 at 101% we simply made this chart less busy. Intel is doing very well here. One can see that if Ice Lake launched in 2019 alongside AMD EPYC 7002 “Rome” the Platinum 8380 would have been very close to AMD’s top-end.

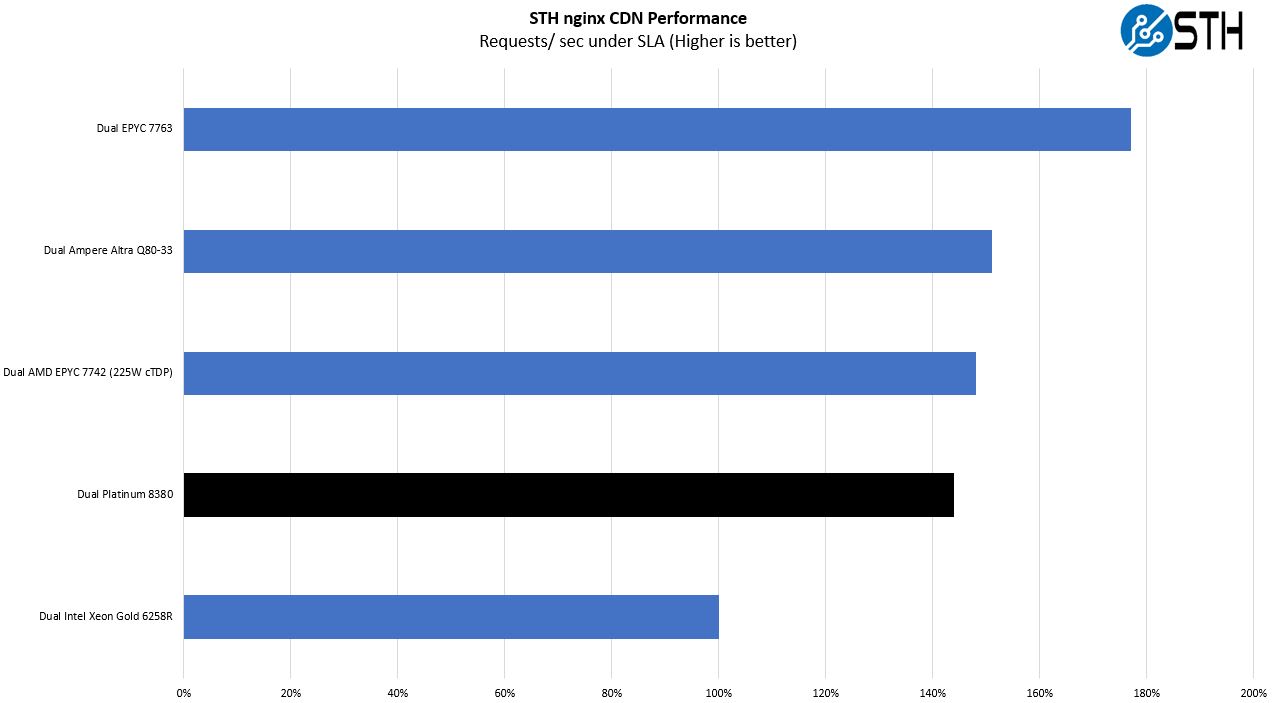

STH nginx CDN Performance

On the nginx CDN test, we are using an old snapshot and access patterns from the STH website, with DRAM caching disabled, to show what the performance looks like fetching data from disks. This requires low latency nginx operation but an additional step of low-latency I/O access which makes it interesting at a server-level. Here is a quick look at the distribution:

Usually, we think of this as scaling with cores, but here Intel is actually doing very well. Again, if this CPU was launched in 2019 against Rome, it would have been a very close fight. Ampere is a bit ahead, but the delta is not going to make many switch architectures. There are a lot of synthetic benchmarks out there, but this is one we use for our own internal purchasing.

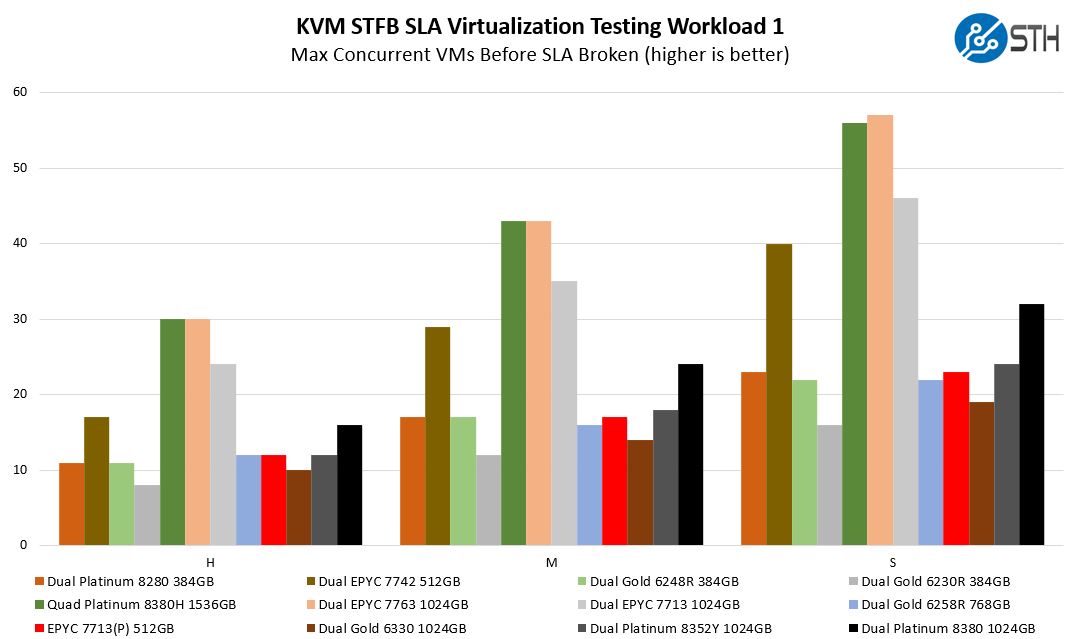

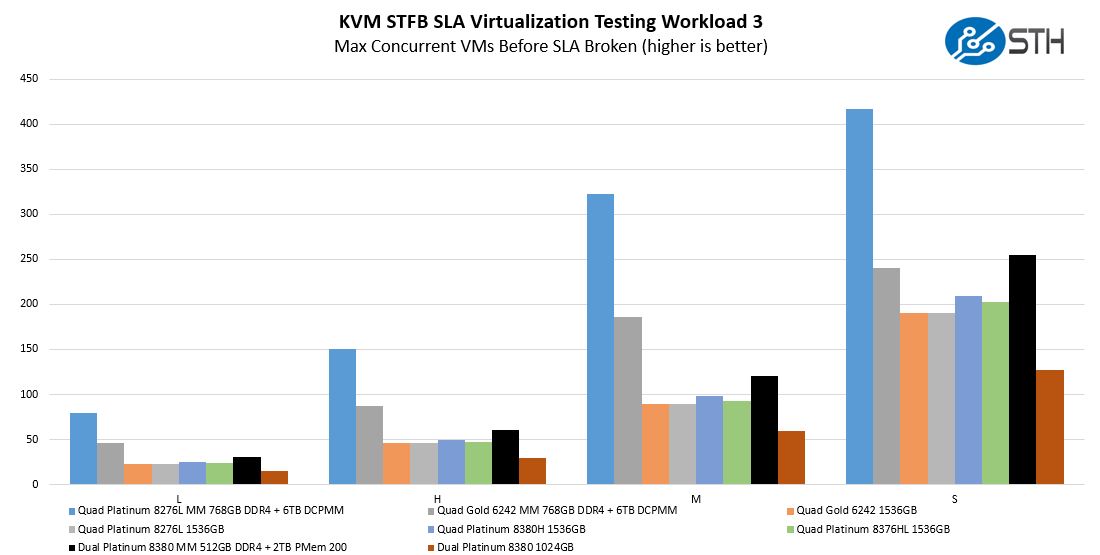

STH STFB KVM Virtualization Testing

One of the other workloads we wanted to share is from one of our DemoEval customers. We have permission to publish the results, but the application itself being tested is closed source. This is a KVM virtualization-based workload where our client is testing how many VMs it can have online at a given time while completing work under the target SLA. Each VM is a self-contained worker.

Workload 1 is more CPU bound than memory bound. While AMD still holds the lead here, what is absolutely clear is that Intel is making huge headway into the virtualization space.

Here we can see the impact of adding Intel Optane PMem 200 to a system. We get noticeably better results from the larger memory footprint. While Workload 1 is largely CPU bound, this is more memory capacity bound so using PMem 200 in Memory mode is helping here.

As a reminder, PMem 200 works with Cooper Lake, but it does not operate in Memory Mode and only works at DDR4-2666 speeds. With Ice Lake we get both Memory Mode and App Direct which is a big bonus. Given this, it makes recommending Cooper Lake harder for higher memory footprints, especially given the need for “L” SKUs even though it is a 3rd generation Intel Xeon Scalable.

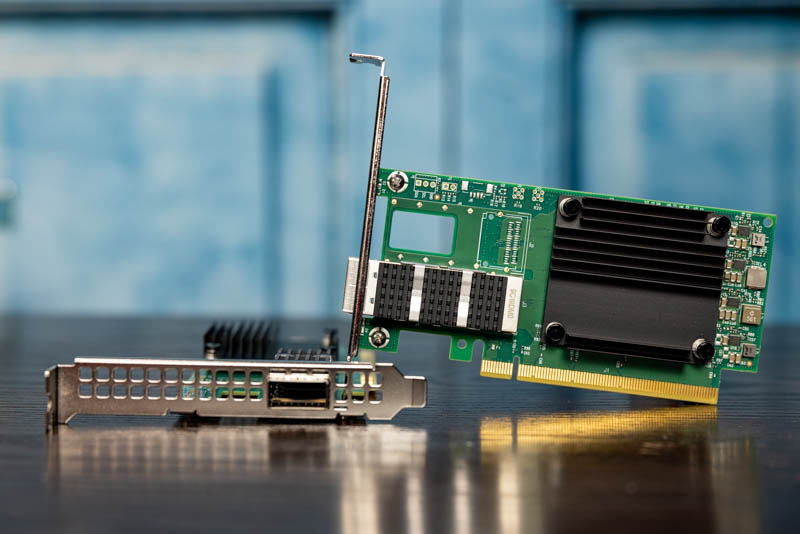

Networking Performance Check 200GbE is Here

When we looked at previous generation systems, we have used 100GbE as our basis to see how fast the networking out of a system can get. Now, we have 200GbE NVIDIA Mellanox ConnectX-6 NICs capable of 200GbE per port.

We hooked these into two Intel Xeon Platinum 8380 and Gold 6330 platforms and we saw excellent performance of around 194Gbps. That is very close to what we got on the AMD EPYC systems. We have a new Columbiaville adapter, but we wanted to maintain parity with what we used for our Milan launch.

With some of the older Arm solutions, we noticed that they were struggling to keep line rate. Now we just need to figure out how to get a 400GbE switch for the lab like the Inside an Innovium Teralynx 7-based 32x 400GbE Switch we just got hands-on time with:

Hitting this amount of traffic is no easy feat. 200GbE is not something that can be driven out of a single PCIe Gen3 x16 slot (although there are multi-host adapters) so this is an area where Intel caught up to AMD.

Next, we are going to look at the power and a critical look at one of Intel’s performance claims.

@Patrick

What you never mention:

The competitor to ICL HPC AVX512 and AI inference workloads are not CPUs, they are GPUs like the A100, Intinct100 or T4. That’s the reason why next to no one is using AVX512 or

DL boost.

Dedicated accelerators offer much better performance and price/perf for these tasks.

BTW: Still, nothing new on the Optane roadmap.it’s obvious that Optane is dead.

Intel will say that they are “committed” to the technology but in the end they are as commited as they have been to Itanium CPUs as a zombie platform.

Lasertoe – the inference side can do well on the CPU. One does not incur the cost to go over a PCIe hop.

On the HPC side, acceleration is big, but not every system is accelerated.

Intel, being fair, is targeting having chips that have a higher threshold before a system would use an accelerator. It is a strange way to think about it, but the goal is not to take on the real dedicated accelerators, but it is to make the threshold for adding a dedicated accelerator higher.

“not every system is accelerated”

Yes, but every system where everything needs to be rewritten and optimized to make real use of AVX-512 fares better with accelerators.

——————

“the inference side can do well on the CPU”

I acknowledge the threshold argument for desktops (even though smartphones are showing how well small on-die inference accelerators work and winML will probably bring that to x86) but who is running a server where you just have very small inference tasks and then go back to other things?

Servers that do inference jobs are usually dedicated inference machines for speech recognition, image detection, translation etc.. Why would I run those tasks on the same server I run a web server or a DB server? The threshold doesn’t seem to be pushed high enough to make that a viable option. Real-world scenarios seem very rare.

You have connections to so many companies. Have you heard of real intentions to use inference on a server CPU?

Even Facebook is doing distributed inference/ training on CPUs. Organizations 100% do inferencing on non-dedicated servers, and that is the dominant model.

Hmmm… the real issue with using AVX-512 is the down clock and latency switching between modes when you’re running different things on the same machine. It’s why we abandoned it.

I’m not really clear on the STH conclusion here tbh. Unless I need Optane PMem, why wouldn’t I buy the more mature platform that’s been proven in the market and has more lanes/cores/cache/speed?

What am I missing?

Ahh okay, the list prices on the Ice Lake SKUs are (comparatively) really low.

Will be nice when they bring down the Milan prices. :)

@Patrick (2) We’ll buy Ice Lake to keep live migration on VMware. But YOU can buy whatever you want. I think that’s exactly the distinction STH is trying to show

I meant for new server application, not legacy like fb.

Facebook is trying to get to dedicated inference accelerators, like you reported before with their Habana/Intel nervana partnerships, or this:

https://engineering.fb.com/2019/03/14/data-center-engineering/accelerating-infrastructure/

Regarding the threshold: Fb is probably using dedicated inference machines, so the inference performance threshold is not about this scenario.

So the default is a single Lewisburg Refresh PCH connected to 1 socket? Dual is optional? Is there anything significant remaining attached to the PCH to worry about non-uniform access, given anything high-bandwidth will be PCIe 4.0?

Would be great if 1P 7763 was tested to show if EPYC can still provide the same or more performance for half the server and TCO cost :D

Sapphire Rapids is supposed to be coming later this year, so Intel is going 28c->40c->64c within a few months after 4 years of stagnation.

Does it make much sense for the industry to buy ice lake en masse with this roadmap?

“… a major story is simply that the dual Platinum 8380 bar is above the EPYC 7713(P) by some margin. This is important since it nullifies AMD’s ability to claim its chips can consolidate two of Intel’s highest-end chips into a single socket.”

I would be leery of buying an Intel sound bite. I may distract them from focusing on MY interests.

Y0s – mostly just SATA and the BMC, not a big deal really unless there is the QAT accelerated PCH.

Steffen – We have data, but I want to get the chips into a second platform before we publish.

Thomas – my guess is Sapphire really is shipping 2022 at this point. But that is a concern that people have.

Peter – Intel actually never said this on the pre-briefs, just extrapolating what their marketing message will be. AMD has been having a field day with that detail and Cascade Lake.

I dont recall any mention of HCI, which I gather is a major trend.

A vital metric for HCI is interhost link speeds, & afaik, amd have a big edge?

Patrick, did you notice the on package FPGA on the Sapphire Rapids demo?

Patrick, great work as always! Regarding the SKU stack: call me cynical but it looks like a case of “If you can’t dazzle them with brilliance then baffle them with …”.

@Thomas

Gelsinger said: “We have customers testing ‘Sapphire Rapids’ now, and we’ll look to reach production around the end of the year, ramping in the first half of 2022.”

That doesn’t sound like the average joe can buy SPR in 2021, maybe not even in Q1 22.

Is the 8380 actually a single die? That would be quite a feat of engineering getting 40 cores on a single NUMA node.

I was wondering about the single die, too. fuse.wikichip has a mesh layout for the 40 cores.

https://fuse.wikichip.org/news/4734/intel-launches-3rd-gen-ice-lake-xeon-scalable/

What on earth is this sentence supposed to be saying?

“Intel used STH to confirm it canceled which we covered in…”