Testing Intel QAT Encryption: nginx Performance

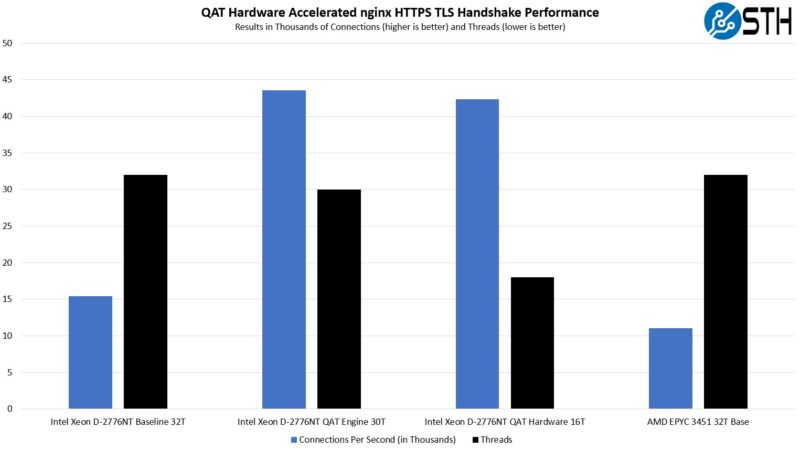

Here we are going to look at something that goes beyond QAT hardware acceleration alone: nginx HTTPS TLS handshake performance using the QAT Engine. This covers both the hardware offload and the IPP path, which uses the onboard Ice Lake instruction set extensions. Performance varies a lot across our test cases, so we targeted roughly 43,000 connections per second. We tuned each configuration to land near that mark by dialing threads up and down and by testing different placements of the threads on the chips.

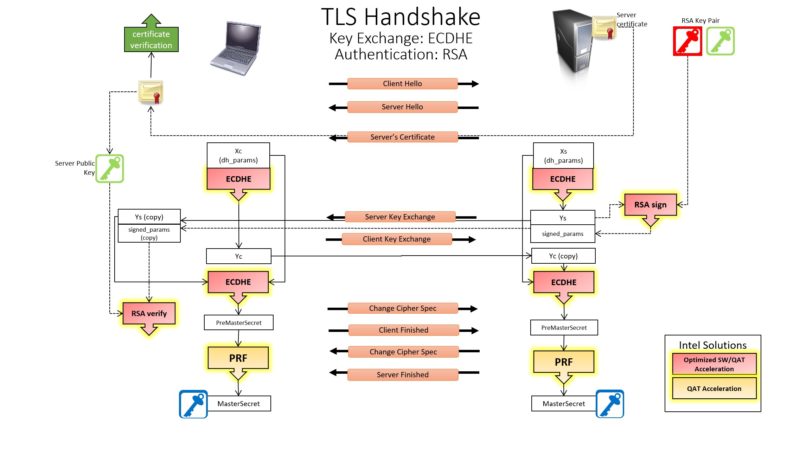

To give some sense of where the acceleration is happening, Intel has this diagram that shows where it accelerates the HTTPS TLS handshake:

Unlike in our tests of the larger CPUs, here we let these processors run wide open, using every available thread in the baseline case. Even so, these chips could not match the performance of the hardware-accelerated configurations.

AMD at 32 threads performed much worse than what we saw on the Milan chips last time, where 32 Milan cores can reach the 44K connections per second range (44,200 cps). In the embedded space, the EPYC 3451 uses 16 cores plus SMT for 32 threads. It also has lower clock speeds and an architecture that is two generations older. All of this leads to worse performance in the embedded space, even though AMD was actually faster in our base case when we tested the mainstream parts.
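The thread-tuning approach described above can be sketched with simple arithmetic, using the Milan figure from this paragraph (44,200 cps on 32 cores). This is a minimal sketch that assumes handshake throughput scales linearly with thread count. Real systems only approximate that, which is why we tuned and measured rather than calculated. The function names are hypothetical.

```python
import math


def cps_per_thread(total_cps: float, threads: int) -> float:
    """Average TLS handshakes per second contributed by each thread in a run."""
    if threads <= 0:
        raise ValueError("threads must be positive")
    return total_cps / threads


def threads_for_target(target_cps: float, per_thread_cps: float) -> int:
    """Threads needed to reach target_cps, ASSUMING linear scaling per thread."""
    return math.ceil(target_cps / per_thread_cps)


# 32 Milan cores at 44,200 cps, aiming for the ~43,000 cps target used here
milan = cps_per_thread(44_200, 32)
print(milan, threads_for_target(43_000, milan))
```

Under the linear assumption, each Milan core contributes about 1,381 cps. That means 31 cores fall just short of 43,000 cps and 32 cores clear it.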

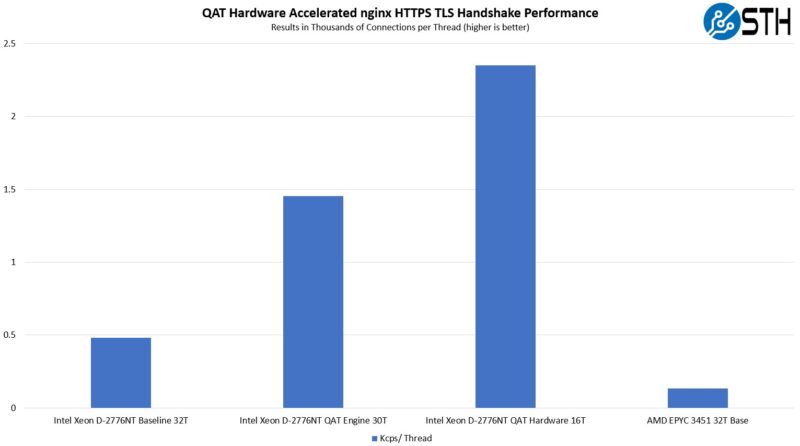

Here, the QAT Engine with VAES let us drop from 32 threads to 30 on the Intel Xeon D-2776NT while almost tripling performance. That has a huge impact on performance per thread, since we get more connections per second from fewer threads.
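The per-thread effect follows from simple arithmetic. The per-thread rate is connections per second divided by threads, so the per-thread gain equals the throughput ratio multiplied by 32/30. This sketch treats "almost tripling" as exactly 3.0, which is an assumption. The real ratio is slightly lower, so the result is an upper bound. The helper name is hypothetical.

```python
def per_thread_gain(throughput_ratio: float, threads_before: int, threads_after: int) -> float:
    """Relative change in connections/s per thread.

    throughput_ratio is new_cps / old_cps. 3.0 is an ASSUMED stand-in for
    "almost tripling", so the result is an upper bound.
    """
    if threads_before <= 0 or threads_after <= 0:
        raise ValueError("thread counts must be positive")
    # (new_cps / threads_after) / (old_cps / threads_before)
    return throughput_ratio * threads_before / threads_after


gain = per_thread_gain(3.0, 32, 30)
print(f"{gain:.2f}x per thread")
```

The thread reduction alone contributes a factor of only about 1.07. Nearly all of the per-thread improvement comes from the throughput increase.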

As we can see, VAES is really helping here, as is QAT. Remember, the VAES path does not even use the QAT hardware accelerator, yet we still get a large performance gain. Many people run nginx web servers without the QAT Engine/IPP offload for Ice Lake and are not seeing this performance. For AMD, we really just need an updated architecture; running on essentially 2017-era Zen cores is not helping in this market.

Taking a step back, this is what makes servers notoriously difficult to benchmark. Companies with other architectures, such as Arm CPU vendors, often discuss nginx performance. What they often miss is that many of their advantages disappear when encryption and compression are turned on and the proper accelerators are used. Using IPP through the QAT Engine did not require the QAT accelerator hardware, and even that path offers massive performance gains.

Next, let us discuss the “gotchas” of QAT, Sapphire Rapids, and our final words.

You are probably under NDA, but did you learn anything about the D-2700 Ethernet switching capabilities? For example, dataplane pipeline programmability like the Mount Evans/E2000 network building block? THAT would be a game changer for enterprise edge use!!!

Hi Patrik, also a follow-up question: did you try to leverage the CCP (crypto co-processor) on the AMD EPYC 3451 for offloading cipher and HMAC?

Hi Patrik, thanks for the review. A couple of pointers and a query:

1. Here we are getting better performance with two cores instead of using the entire chip for less performance.

– A physical CPU core is a combination of a front end (fetch, decode, opcode scheduling), a back end (ALU, SIMD, load, store), and other features. When SMT or HT is enabled, the physical core is basically split into two streams at the front end, while the back end remains shared. With the help of the scheduler, out-of-order execution, and register reordering, the opcodes are dispatched to the various back-end ports. So ideally, we put to use the ALU and SIMD capacity that was not fully leveraged when running without HT or SMT. However, an application that already keeps all ports busy (ALU, load, store, SIMD) will not benefit from SMT and will instead see per-thread performance roughly halve. Such applications are very rare and usually rely on highly customized functions.

2. Isn't the Intel D-2700 an Atom (Tremont) based SoC? https://www.intel.com/content/www/us/en/products/sku/59683/intel-atom-processor-d2700-1m-cache-2-13-ghz/specifications.html If so, these cores use SSE rather than AVX or AVX-512. Maybe I misread the crypto and compression numbers with ISA-L and IPsec-MB, since the D-2700 would use SSE, unlike the AMD EPYC 3451. If that is the case, shouldn't the CPU software (ISA-L and IPsec-MB) numbers be higher on the AMD EPYC 3451 than on the D-2700?

3. Did you try to leverage the CCP (crypto co-processor) on the AMD EPYC 3451 for offloading cipher and HMAC?

People don't use the CCP on Zen 1 because the software integration sucks, and it's a different class of accelerator than this. QAT is used in the real world, even down to pfSense VPNs.

The D-2700 uses Ice Lake cores, not Tremont. They're the same cores as in the big Xeons. I'd also say that if they're testing thread placement, like which CCD they're using, they know about SMT. SMT doesn't halve performance in workloads like these.

I’ve learned AMD just needs new chips in this market. They’re still selling Zen 1 here.

@nobo, if you are talking about the CCP on Zen 1 under Linux, this could be true. But have you tried it through DPDK, the same way as ISA-L with DPDK?

@AdmininNYC, thank you for confirming it uses Ice Lake-D and not Tremont cores, which means it has AVX-512. The nginx HTTPS and compression performance comparisons with software-accelerated libraries show the AMD EPYC 3451 (AVX2) on par with the Ice Lake Xeon D (AVX-512). There is a leap in software-library performance only in the test cases that use VAES (AVX-512). That sounds really odd, right?
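On Linux, you can check which of these extensions a chip exposes by reading the `flags` line in `/proc/cpuinfo`. This minimal sketch assumes an x86 Linux host, because Arm kernels report a `Features` line instead. The flag names (`aes`, `avx2`, `avx512f`, `vaes`, `vpclmulqdq`) are the kernel's own. The helper functions are hypothetical and not part of any tool. On a Zen 1 part such as the EPYC 3451, you would expect `aes` and `avx2` but not `vaes` or `avx512f`.

```python
from pathlib import Path

# Kernel flag names for the ISA extensions discussed in this thread
FEATURES = {
    "aes": "AES-NI",
    "avx2": "AVX2",
    "avx512f": "AVX-512 Foundation",
    "vaes": "Vector AES (VAES)",
    "vpclmulqdq": "Vector carry-less multiply",
}


def cpu_flags(cpuinfo_text: str) -> set[str]:
    """Return the flags from the first 'flags' line of /proc/cpuinfo text."""
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "flags":
            return set(value.split())
    return set()  # no x86 'flags' line found (e.g. an Arm host)


def report(flags: set[str]) -> dict[str, bool]:
    """Map each feature label to whether its kernel flag is present."""
    return {label: name in flags for name, label in FEATURES.items()}


if __name__ == "__main__":
    path = Path("/proc/cpuinfo")
    if path.exists():
        for label, present in report(cpu_flags(path.read_text())).items():
            print(f"{label:28} {'yes' if present else 'no'}")
```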

Running ISA-L inflate/deflate microbenchmarks on SMT threads clearly shows per-thread throughput halving on AMD EPYC. I agree that in real use cases, not every core will be fed 100% compression work, since it also has to handle other threads, interrupts, and context switches.
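The two positions in this thread can be reconciled with a simple model of two SMT threads sharing one core. Per-thread throughput is the core's total output divided by two. It halves only when the second thread adds no extra core throughput, which happens when the back-end ports are already saturated. The uplift values below are hypothetical illustrations, not measurements from this review.

```python
def smt_per_thread(single_thread_rate: float, smt_uplift: float) -> float:
    """Per-thread throughput when two SMT threads share one core.

    smt_uplift is the extra core throughput gained from the second thread:
    0.0 if the back-end ports are already saturated, 0.3 for a hypothetical
    30% gain when one thread leaves ports idle.
    """
    if smt_uplift < 0:
        raise ValueError("uplift must be non-negative")
    core_rate = single_thread_rate * (1.0 + smt_uplift)
    return core_rate / 2  # both hardware threads split the core's output


for uplift in (0.0, 0.3):
    print(uplift, smt_per_thread(100.0, uplift))
```

A saturating microbenchmark sits at the zero-uplift end of this model. A mixed workload like TLS handshakes plus interrupts and context switches can sit further from it.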

Something is wrong with this sentence fragment: “… quarter of the performance of AMD’s mainstream Xeons.”