Last week a number of journalists had the opportunity to tour Intel’s data center facilities in Santa Clara, California. Intel uses over 100,000 dual Intel Xeon servers worldwide for the design of its future generation chips. The company has more than 135,000 servers installed in total and experiences 25% annual growth. The company decommissioned a fab between the Robert Noyce Building and Highway 101 around 2008 and has been converting that space into successively more efficient data center facilities. We were told their latest designs were operating in the 1.06 PUE range, down from around 2.0 a few generations ago. PUE (Power Usage Effectiveness) is a standard measure of data center efficiency: total facility power divided by the power consumed by the IT equipment (e.g. servers, storage and networking). A PUE of 1.0 represents a data center where all of the power going in is consumed by the IT equipment. Numbers above 1.0 represent how much of the input power is being used for non-IT equipment such as cooling. The lower the number, the more efficient the data center. We took a few photos where we could and have a few notes on the Intel data center that we can share.
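To make the PUE definition concrete, here is a minimal sketch in Python. The function names and the meter readings are hypothetical and chosen only to reproduce the quoted 1.06 figure; they are not Intel's measurements.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT power."""
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    return total_facility_kw / it_equipment_kw


def overhead_share(pue_value: float) -> float:
    """Fraction of input power that goes to non-IT loads such as cooling."""
    if pue_value < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return 1.0 - 1.0 / pue_value


if __name__ == "__main__":
    # Hypothetical readings: 1,060 kW at the utility feed, 1,000 kW at the IT gear.
    print(f"PUE: {pue(1060.0, 1000.0):.2f}")
    for value in (2.0, 1.06):
        share = overhead_share(value)
        print(f"PUE {value:.2f}: {share:.1%} of input power is overhead")
```

Read this way, a PUE of 2.0 means half of the incoming power never reaches a server, while 1.06 leaves under 6% for everything else.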

Where the Data Center is Located

The Santa Clara data center is in the heart of Silicon Valley, connected via an elevated walkway to the main Robert Noyce Building, home of the Intel Museum. Here is a map of where we were:

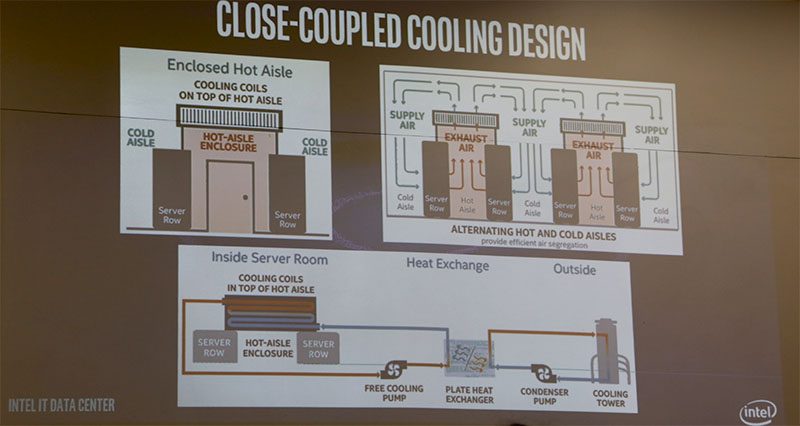

Intel converted the old fab in Silicon Valley to house its data center, which was a massive cost savings opportunity for the company because it was re-purposing an existing facility. Intel is using the Bay Area’s temperate climate to cool the data center at outside temperatures between roughly 60F and 91F without using chillers. We were told that even in the California sun, Intel only needs to use chillers for around 40 hours per year. Here is an example of what Intel is using for cooling:

Intel is also using recycled water to minimize environmental impact.

Older Generation Intel Datacenter

The first data center chamber we were allowed to visit housed a number of servers and also a Top 100 supercomputer. During the entire tour we had specific places we could take photos from, which is why you do not see any machine close-ups. Also, apologies in advance as I was shooting handheld without a tripod in the data center, which is not easy to do. Here is the basic layout. Key points are that we could see blade servers from HP and Dell and microservers (9 nodes in 3U) from Quanta. Switching was 10GbE and appeared to be provided by Extreme Networks.

As you might notice, Intel is using non-standard racks. First off, they are minimally wide. The posts for each server chassis line up directly next to one another and there is a minimum amount of space between the servers. One will also notice the racks seem tall. These are 60U racks, not the industry standard 42U, and Intel claims higher efficiency as a result. You can also see (on the left above) braces on the racks. The racks are large and heavy at the top, so in Santa Clara, California, where earthquakes are a potential hazard, Intel has added cross bracing to support the weight of the racks during an earthquake.

Moving to the hot aisle, one can see that Intel has PDUs mounted away from the rear of the racks. To increase density, Intel’s racks leave no room inside for a zero U PDU, so the PDUs are mounted on the vertical pillars behind the rack. Remember, the chassis Intel uses house at minimum 2-3 servers per U, and the racks are 60U tall and packed closer together than average, so these PDUs are delivering a lot of power.

While this was the older data center area we toured, there was one more unique feature here, a Top 500 Supercomputer.

Intel SC D2P4 Supercomputer

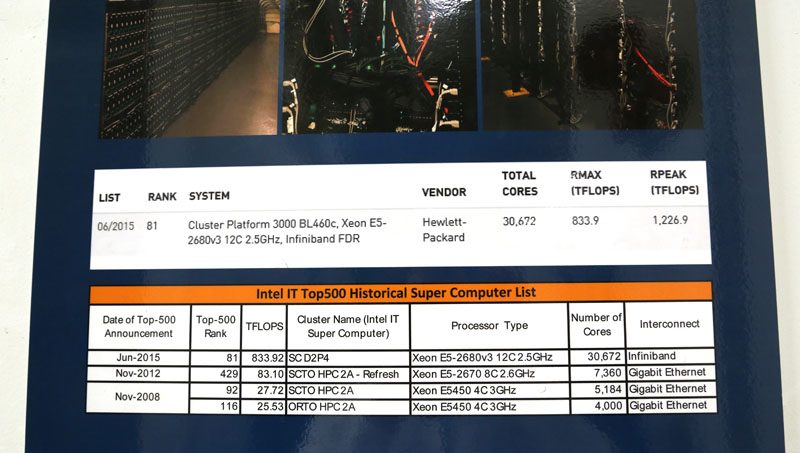

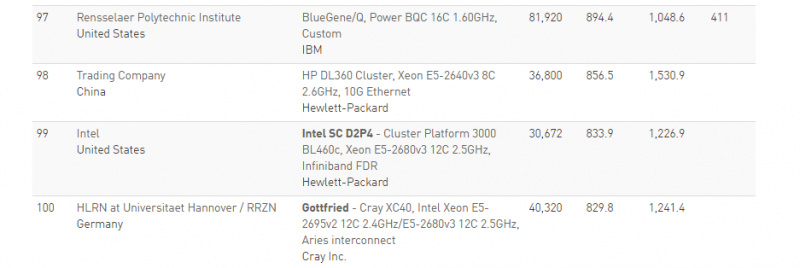

We saw placards about this machine at quite a few points and we were able to take a photo from a designated spot. The machine was made up of HP BL460c blades and Intel Xeon E5-2680 v3 CPUs connected via InfiniBand.

Someone did ask, and this is an all-CPU supercomputer with no GPUs involved. We saw it listed as #81 on the June 2015 Top500 list and decided to double-check the November 2015 list. It is down to #99 but still quite high there.

Here is the Intel SC D2P4 supercomputer. Each chassis holds 16 server sleds and there are a maximum of 6 HP blade chassis per rack. Although we saw plenty of Dell and Quanta hardware elsewhere, this is purely an HP machine. FDR InfiniBand networking is aggregated in the center of the supercomputer where those teal wires rise vertically.

We also asked if this was using Xeon Phi/Knights Landing and the answer was “not yet” but it is being considered for a next-generation machine.

New Data Center

Intel next took us to their new section, which is a much denser area that we were told can handle 5MW of power in 5,000 square feet. It is designed for up to 43kW per 60U rack, with a PUE somewhere in the 1.06 to 1.07 range.
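A quick back-of-the-envelope check helps put those density numbers in perspective. The sketch below uses only the figures quoted on the tour; the assumption that all 5MW is available to racks running at their 43kW design maximum is ours, not Intel's.

```python
# Figures quoted on the tour.
ROOM_POWER_W = 5_000_000   # 5 MW
ROOM_AREA_SQFT = 5_000     # 5,000 square feet
RACK_POWER_W = 43_000      # up to 43 kW per rack
RACK_HEIGHT_U = 60         # 60U racks


def density_summary() -> dict[str, float]:
    """Derive density figures from the quoted room and rack numbers."""
    return {
        "watts_per_sqft": ROOM_POWER_W / ROOM_AREA_SQFT,
        "watts_per_rack_unit": RACK_POWER_W / RACK_HEIGHT_U,
        # Assumption: the full 5 MW feeds racks drawing their 43 kW maximum.
        "racks_at_full_power": ROOM_POWER_W // RACK_POWER_W,
    }


if __name__ == "__main__":
    for name, value in density_summary().items():
        print(f"{name}: {value:,.0f}")
```

That works out to 1,000 watts per square foot, roughly 717 watts per rack unit, and 116 racks at full design power under the stated assumption.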

Here you can see a wall of Quanta Stratos S910 chassis. These chassis have nine nodes in 3U and an internal switch, from which Intel uses two 10GbE SFP+ ports as uplinks to the rest of the data center. Using chassis-level switching, Intel is able to reduce the cabling and aggregation switch port count.
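To get a feel for how much cabling chassis-level switching saves, here is a rough sketch. It assumes a 60U rack filled entirely with these 3U chassis, and a baseline where each node would otherwise need one link to an aggregation switch. Both are our assumptions for illustration, and the `Chassis` type and function name are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chassis:
    nodes: int = 9      # nine nodes per chassis (from the tour)
    height_u: int = 3   # 3U chassis (from the tour)
    uplinks: int = 2    # two 10GbE SFP+ uplinks (from the tour)


def rack_port_counts(chassis: Chassis, rack_units: int = 60,
                     links_per_node: int = 1) -> dict[str, int]:
    """Compare aggregation ports needed with and without chassis switching."""
    chassis_per_rack = rack_units // chassis.height_u
    nodes = chassis_per_rack * chassis.nodes
    return {
        "chassis_per_rack": chassis_per_rack,
        "nodes_per_rack": nodes,
        # Assumed baseline: every node cabled straight to an aggregation switch.
        "ports_without_chassis_switch": nodes * links_per_node,
        "ports_with_chassis_switch": chassis_per_rack * chassis.uplinks,
    }


if __name__ == "__main__":
    for name, value in rack_port_counts(Chassis()).items():
        print(f"{name}: {value}")
```

Under those assumptions a full rack holds 20 chassis and 180 nodes, and the aggregation layer sees 40 uplinks instead of 180 individual node links.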

We were able to take a snapshot of another “cold” aisle. It was around 60F outside and since air is being pumped in directly from outside it was a bit chilly in here.

Between each set of racks there is a small pod design that contains the hot air and forces it through ceiling heat exchangers. We were able to take a photo of a new section that is more than twice as long as the finished sections. One can see the heat exchanger fans running above the racks and the pipes carrying the cool and warm water through the data center. Those giant red things hanging are actually the massive power connectors that feed the vertically mounted PDUs.

Final Words

Intel’s new facilities achieve some excellent power figures as the company has started to innovate in its data center designs. Some key trends include customized racks (narrower and taller than standard), water and free cooling techniques, and chassis-level network aggregation to build more efficient data centers. These techniques are quite interesting because Intel is using standard 19″ rack mount gear and not a custom form factor as we see with the Open Compute racks. At the same time, Intel is pushing a fairly low PUE of 1.06-1.07 using a re-purposed fab, not even a purpose-built facility. Just for context, Google’s data centers reportedly are down to an average of 1.11-1.12 PUE, which is still higher than what Intel is currently getting. Intel’s data center is a Tier 1 facility, so it does not have a lot of the redundancy, or the loss due to that redundancy, that we see in other facilities, so a Google data center versus an Intel data center is not the best comparison. It does show that Intel’s IT team is driving efforts, starting with a 2.00 PUE years ago and arriving at something that is almost twice as efficient at a data center level.
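As a sanity check on the "almost twice as efficient" claim, the sketch below compares total facility power for the same IT load at the old and new PUE values. The 1,000 kW IT load is a hypothetical round number and the function names are ours.

```python
IT_LOAD_KW = 1_000.0  # hypothetical IT load, used only for comparison


def facility_power_kw(it_load_kw: float, pue: float) -> float:
    """Total facility power implied by an IT load and a PUE value."""
    return it_load_kw * pue


def compare(old_pue: float = 2.00, new_pue: float = 1.06) -> tuple[float, float]:
    """Return (old/new power ratio, fractional power saving) for the same IT load."""
    old_kw = facility_power_kw(IT_LOAD_KW, old_pue)
    new_kw = facility_power_kw(IT_LOAD_KW, new_pue)
    return old_kw / new_kw, 1.0 - new_kw / old_kw


if __name__ == "__main__":
    ratio, saving = compare()
    print(f"Old facility draws {ratio:.2f}x the power of the new one")
    print(f"Power saved for the same IT load: {saving:.0%}")
```

For the same IT load, the 2.00 PUE design draws about 1.89 times the power of the 1.06 design, a saving of roughly 47%.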

Edit 1 – Post-publication I was told by folks who work at a large social media property that Facebook is in a similar 1.06 PUE range (see here.)