The HPE Superdome Flex 280 is the company’s next-generation 2- to 8-socket server. While most of its competition is focused only on the 4-socket market, HPE is going bigger. Lenovo, for example, said that it was not planning to release an 8-socket server based on the new 3rd Generation Intel Xeon Scalable platform codenamed Cooper Lake/Cedar Island.

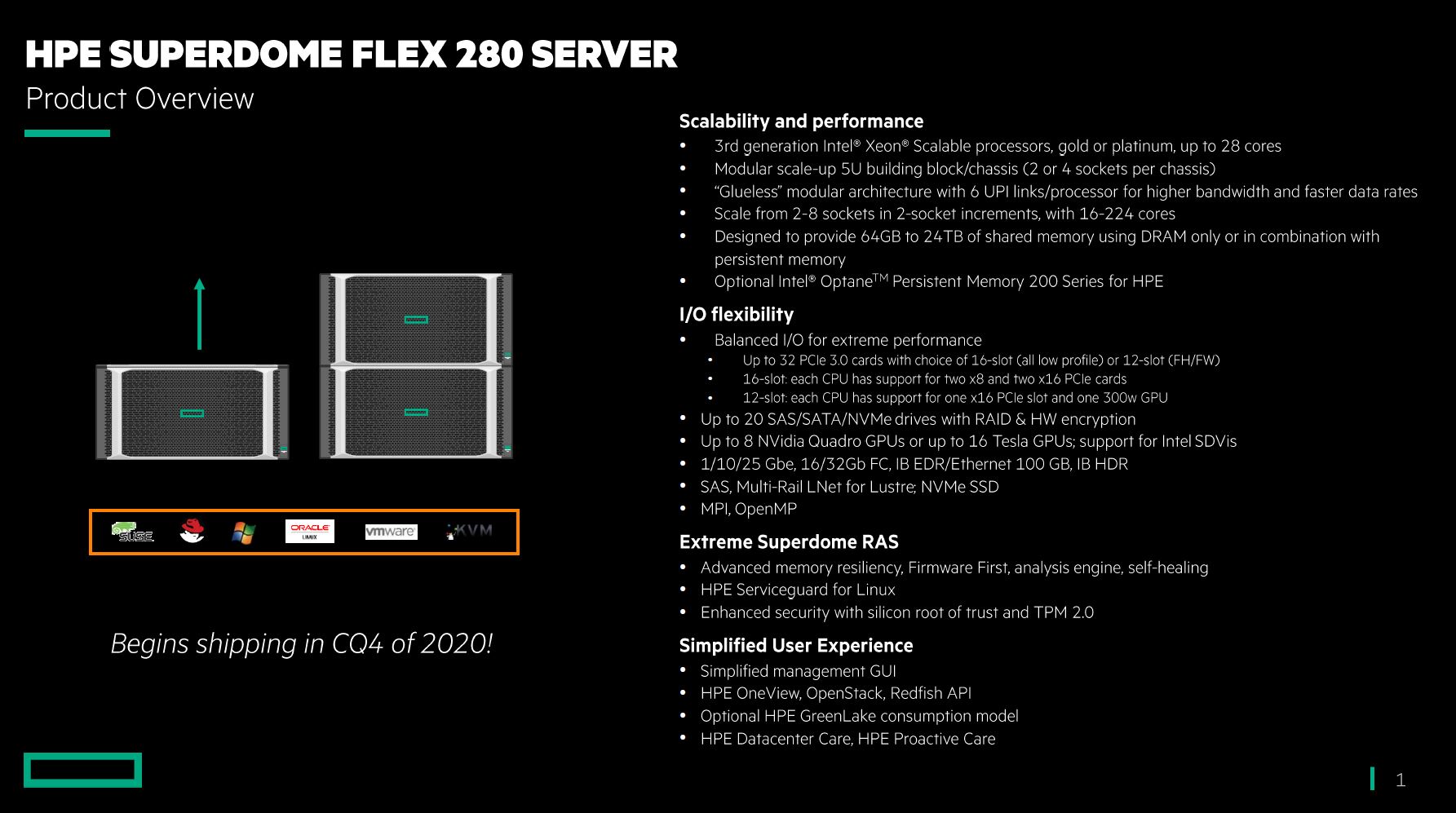

HPE Superdome Flex 280 Overview

As a 5U solution, the HPE Superdome Flex 280 can be used in either single- or dual-chassis configurations. Each chassis can support either two or four Cooper Lake generation CPUs. That means one can scale from 16 to 224 cores and get up to 24TB of memory in the server. (Optane PMem 200 on Cooper Lake is only supported by Intel in configurations of up to four sockets.)
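As a rough sanity check on the top-end figures, here is a minimal Python sketch of the arithmetic. The per-socket values used (28 cores, 12 DIMM slots, and 256GB DIMMs) are our assumptions for a fully populated Cooper Lake system and are not stated in HPE's announcement as quoted here; the function names are purely illustrative.

```python
# Back-of-the-envelope scaling math for a fully populated system.
# ASSUMPTIONS (not from the announcement text): 28 cores per socket,
# 12 DIMM slots per socket, and 256GB DIMMs.

def max_cores(sockets: int, cores_per_socket: int = 28) -> int:
    """Total cores across all sockets."""
    return sockets * cores_per_socket


def max_memory_tb(sockets: int, dimms_per_socket: int = 12, dimm_gb: int = 256) -> float:
    """Total memory in TB, using 1TB = 1024GB."""
    return sockets * dimms_per_socket * dimm_gb / 1024


if __name__ == "__main__":
    # One chassis holds up to 4 sockets, two chassis hold up to 8.
    for sockets in (2, 4, 8):
        print(f"{sockets} sockets: {max_cores(sockets)} cores, "
              f"{max_memory_tb(sockets):g}TB")
```

Under those assumptions, the 8-socket case lines up with the 224-core and 24TB figures quoted above.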

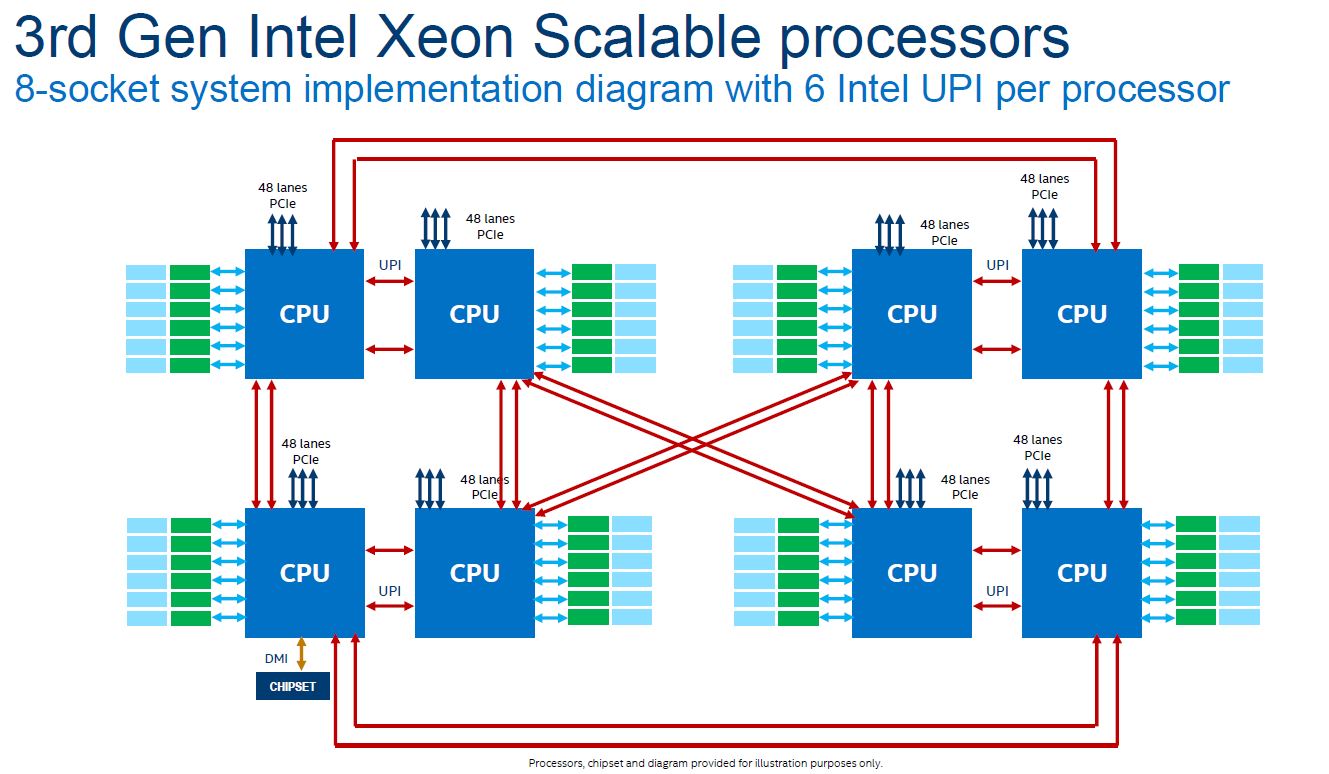

For those wondering, here is what the official Intel diagram looks like for an 8-socket Cooper Lake generation server:

HPE can take all of those PCIe lanes and direct them to storage, networking, GPUs (including Quadro and the line formerly known as NVIDIA “Tesla”), or other PCIe expansion cards.

HPE is also highlighting its Superdome RAS and security features, along with the standard management solutions that integrate with the rest of HPE’s portfolio.

Final Words

What makes the HPE Superdome Flex 280 stand out is that HPE designed the solution to scale across almost the entire range of what the 3rd Gen Xeon Scalable processors are capable of. (Facebook has a single-socket version in Yosemite V3.) It is one of the few 8-socket capable machines that have been announced thus far.

Shipping is expected in Q4 2020. These systems have longer qualification and validation cycles given their scale and the applications they run. HPE will offer the Superdome Flex 280 on its GreenLake consumption program. At Discover 2019, HPE’s mantra was GreenLake Everything by 2022. It makes sense that this new platform follows that model. We should be hearing more about HPE GreenLake as the company’s virtual Discover 2020 event kicks off this week.

Are these strictly limited to only 8 sockets? The previous generation of HPE’s Superdome scaled all the way up to 32 sockets, as it leveraged the glue logic chips initially developed by SGI. With Cooper Lake doubling the number of UPI links, those glue chips wouldn’t inherently have to be redesigned: just the number of sockets per drawer (i.e. quad socket would become dual socket with Cooper Lake). HPE could also have simply doubled the number of glue chips per drawer and called it good.

Pardon my ignorance here, but what is the target audience for this sort of configuration?

8 sockets in 10U clearly isn’t targeting the density enthusiasts, and while it looks like PCIe and storage are given lots of flexibility, I’m guessing that the price on these is going to push it well out of the range of either the people who just need as many GPUs in the rack as they can get or the people buying the basic 2-3U all-rounder-generalist type systems.

I’m assuming that there are particular applications that strictly depend on all cores being part of the same system and scale poorly, or not at all, across multiple nodes?

An 8 socket x64 superdome.

Does it run HPUX?

Kevin G

I loved most of the things Silicon Graphics put out – from the Challenge, to the O2, Origin 200 and 2000 and later the Origin 300 and 3000s.

They were so far ahead of Dell, HP, and whoever else.

It would take much more than a simple redesign of the chipset. Back then clustering was still hit or miss, and while IB had super low latency, the line speeds were not that great, and then there was the complexity and expense of a clustering FS. I still have an SGI TP16000 array, about 2PB atm, with 8 x 40Gb IB instead of Fibre Channel. That unit was bought as a spare, and at the time ran CXFS to support our cluster. Today, clustering is not a problem, and even the biggest systems like the current Crays are clusters. The move to GPU compute made the pure CPU plays irrelevant and made a high GPU count much more important, which is easiest to do with dual sockets. So while the 32-CPU systems were incredible in their day, their day has passed.

Most of the 8 Socket boards will be custom pieces for OCP for Facebook and other hyperscalers – can’t see them taking on an HP Superdome into an almost completely pure OCP world.

I guess we shouldn’t wait to see an AMD 4 or 8 socket – since their poor design limits them to 2 sockets. They probably thought they were smart by not using dedicated bandwidth like QPI/UPI and using PCIe lanes.

Fuzzy

An 8-socket system is much more expensive per socket than either a 4S or 2S. If you need 8S, you are going to pay dearly for 8 sockets.

How are the two chassis linked together? Is it some UPI interconnect cabling between them, or something else? Or is it borrowing some of the interconnect tech from their 32-socket Superdome rack?

fuzzyfuzzyfungus: clearly the machine is not aimed at GPU computing. IMHO the price would be too prohibitive in comparison with a cluster of 1-2 socket boxes. It’s mainly meant to compete with higher-end POWER and SPARC boxes and in the in-memory DB domain, e.g. SAP HANA, Oracle etc.

I was going to ask about the use case as well. Where I’m from (HPC for scientific computing), pretty much every HPC system uses 2-socket blade servers because the cost per FLOPS is lower.

I guess databases, as mentioned by KarelG, are a good case. It is great to have many threads share the same enormous amount (TBs) of RAM, for example to access a database without taking the trip over InfiniBand.

But I guess a 2-socket EPYC solution is getting competitive with some of these cases as well.

If someone uses quad or 8-socket systems on a daily basis I’d be happy to become less ignorant and learn more about your use case.