HPE ProLiant RL300 Gen11 Internal Hardware Overview

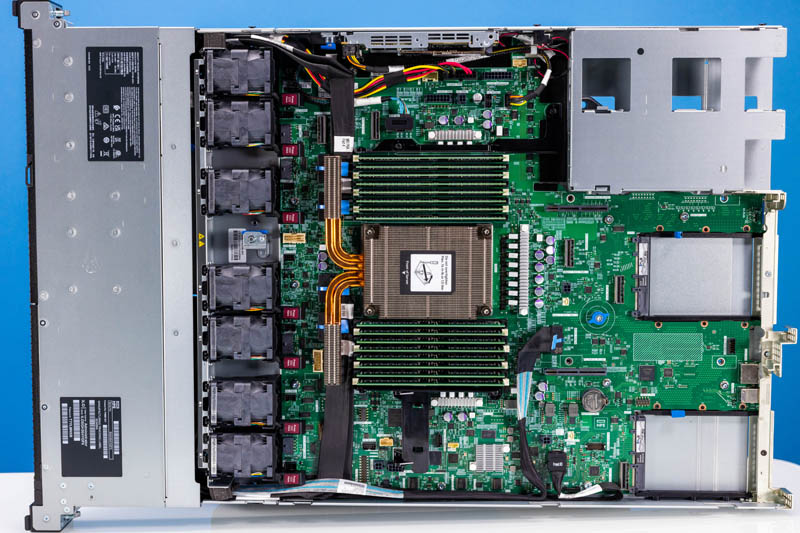

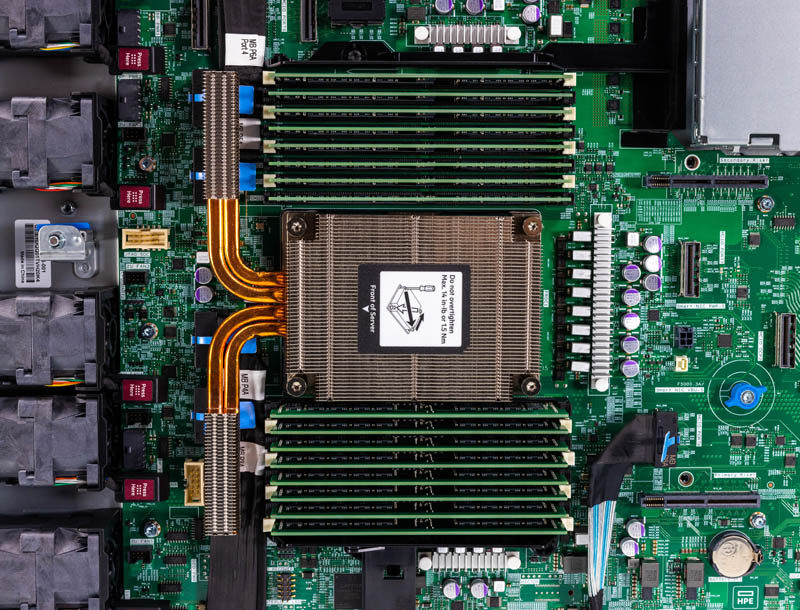

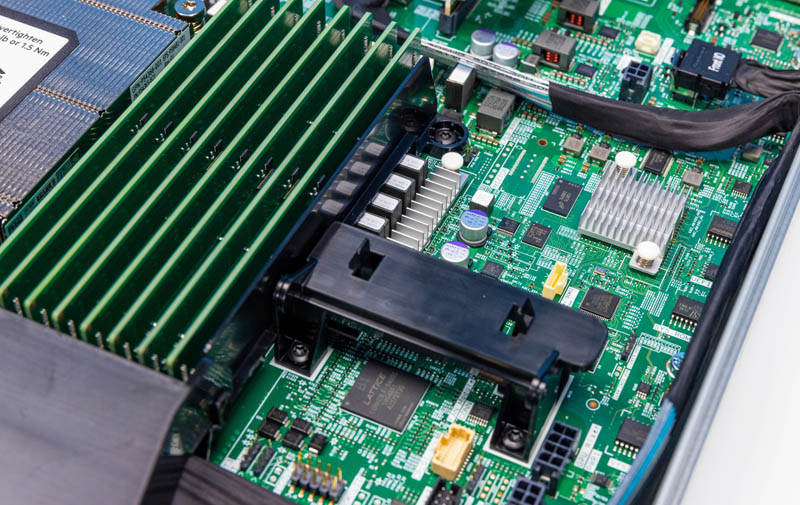

Inside the system, we see a single-socket platform that is very ProLiant. This overview of the internals should help orient readers as we go through the system from left to right.

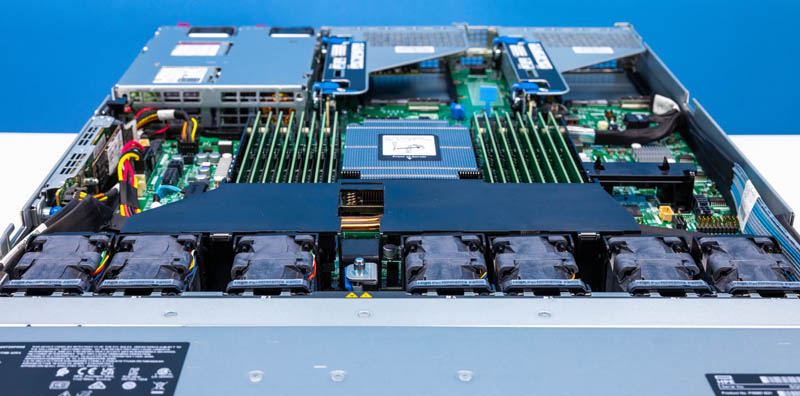

The first big feature is the fan partition. HPE uses seven dual-fan modules. Unlike many other vendors, HPE's airflow guides do not extend past the CPU.

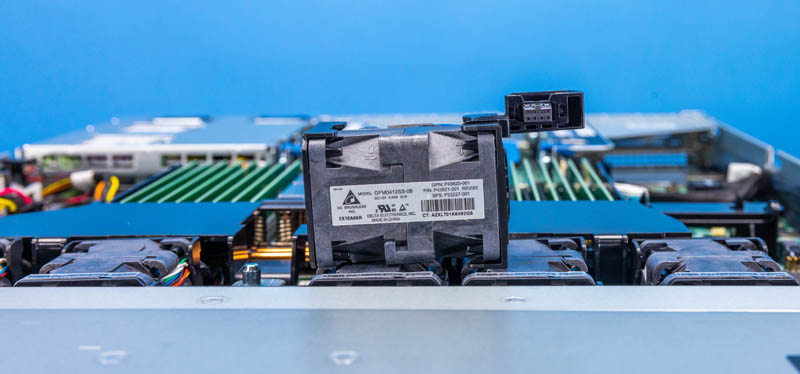

Here is one of the fan modules. Something nice that HPE’s design team specified is the company’s hot-swap fan modules. Many 1U servers come with wired fan modules instead. Hot-swap fans are a more challenging design in 1U, which is why we do not see them as often.

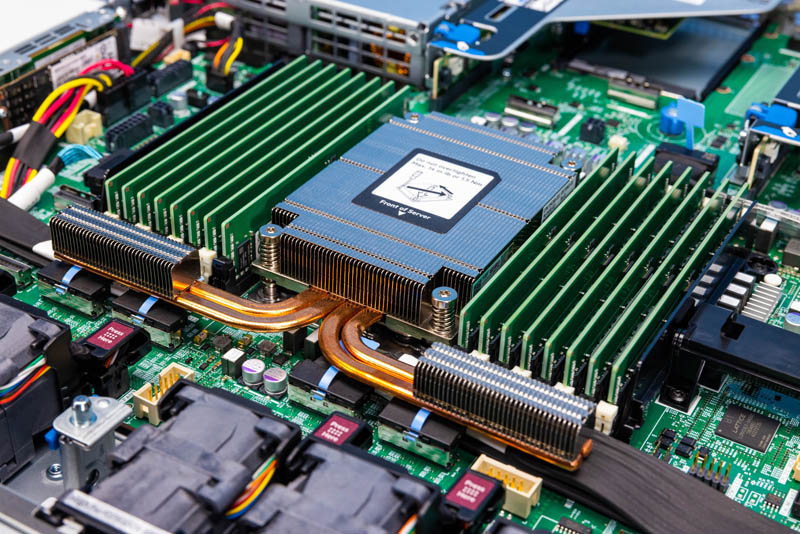

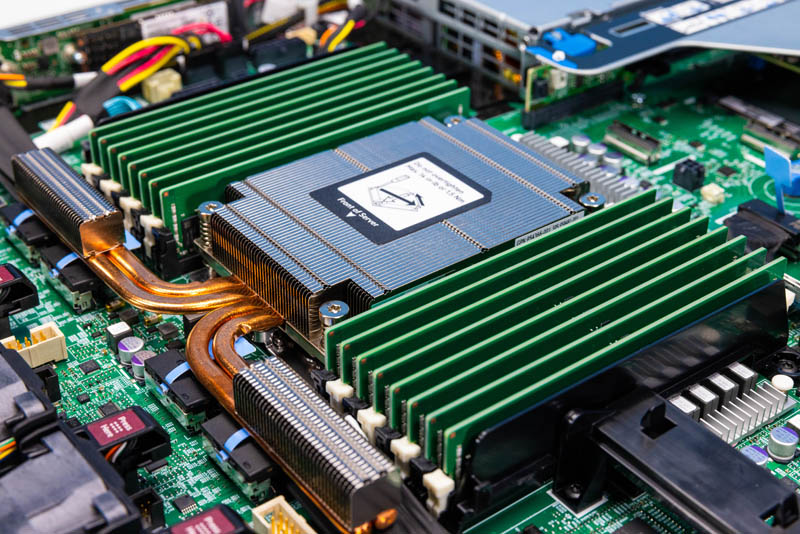

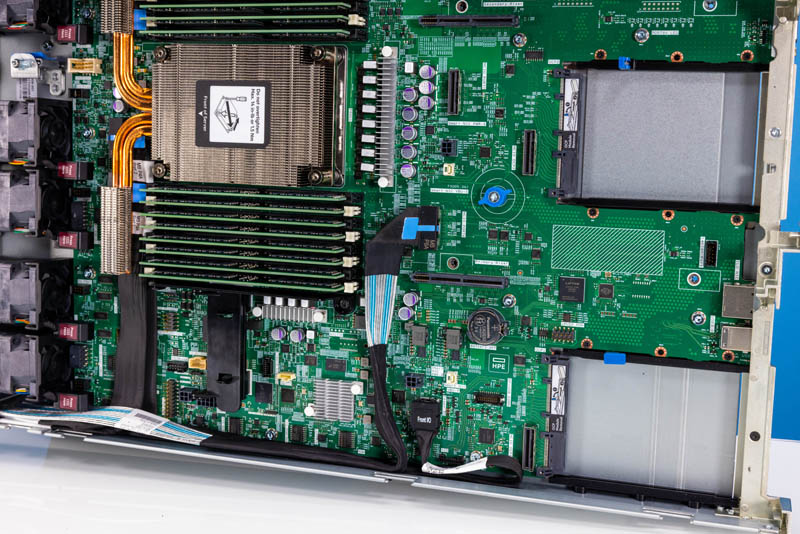

On the topic of cooling, HPE has copper heat pipes that lead to additional “dog ear” heatsinks placed in front of the memory. Most Ampere Altra systems we have reviewed use simpler coolers.

Supported CPUs for this platform include the Ampere Altra, which is also found in clouds from Oracle, Microsoft, and Google, as well as the higher core count Ampere Altra Max with up to 128 cores. Our system has a 128-core Altra Max, which has twice as many cores as the 64-core Graviton2 and Graviton3 Arm processors that AWS developed in-house.

Beyond the cores, the Ampere platform supports 8-channel DDR4-3200 memory with ECC RDIMMs, including 2 DIMMs Per Channel (2DPC) configurations. This is very similar to the Intel Xeon “Ice Lake” and AMD EPYC “Milan” generations.
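
To put those memory figures in context, here is a short back-of-envelope sketch: 8 channels at 2DPC gives 16 DIMM slots, and 8 channels of DDR4-3200 work out to a theoretical peak of roughly 204.8 GB/s. It assumes a 64-bit (8-byte) data bus per channel with ECC bits excluded, and that every channel runs at the full 3200 MT/s. Some platforms lower memory speed in 2DPC configurations, so treat the result as an upper bound, not a measured figure. The constant and function names are purely illustrative.

```python
# Back-of-envelope memory math for an 8-channel DDR4-3200 platform.
# Assumptions (illustrative, not vendor figures): 64-bit (8-byte) data bus
# per channel, ECC bits excluded, every channel at the full 3200 MT/s.
# 2DPC may run slower on some platforms, so this is a theoretical peak.

CHANNELS = 8
DIMMS_PER_CHANNEL = 2
TRANSFER_RATE_MT_S = 3200  # DDR4-3200: mega-transfers per second
BUS_WIDTH_BYTES = 8        # 64 data bits per channel


def dimm_slots(channels: int, dpc: int) -> int:
    """Total DIMM slots for a given channel count and DIMMs per channel."""
    return channels * dpc


def peak_bandwidth_gb_s(channels: int, mt_s: int, bus_bytes: int) -> float:
    """Theoretical peak memory bandwidth in decimal GB/s."""
    bytes_per_second = channels * mt_s * 1_000_000 * bus_bytes
    return bytes_per_second / 1e9


if __name__ == "__main__":
    print("DIMM slots:", dimm_slots(CHANNELS, DIMMS_PER_CHANNEL))
    print("Per-channel peak GB/s:",
          peak_bandwidth_gb_s(1, TRANSFER_RATE_MT_S, BUS_WIDTH_BYTES))
    print("Socket peak GB/s:",
          peak_bandwidth_gb_s(CHANNELS, TRANSFER_RATE_MT_S, BUS_WIDTH_BYTES))
```

The slot count lines up with the 16 DIMM slots on this motherboard. Real-world bandwidth will be lower than the computed peak.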

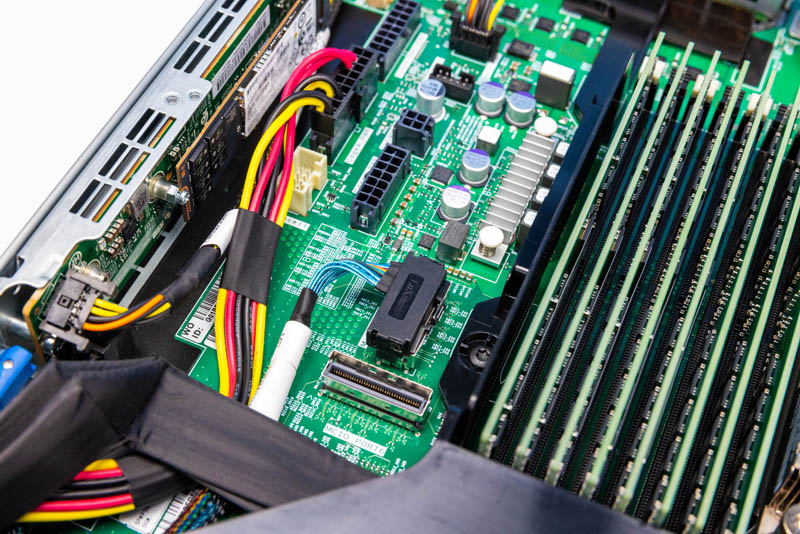

Since this is a single socket 128-core solution, there is ample room on either side of the CPU and memory for connectivity. Here we have features like MCIO connectors and power connectors.

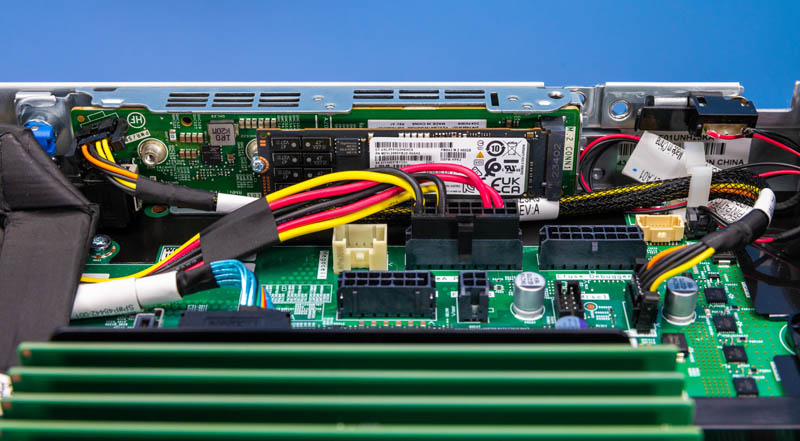

HPE also has an internal boot M.2 solution.

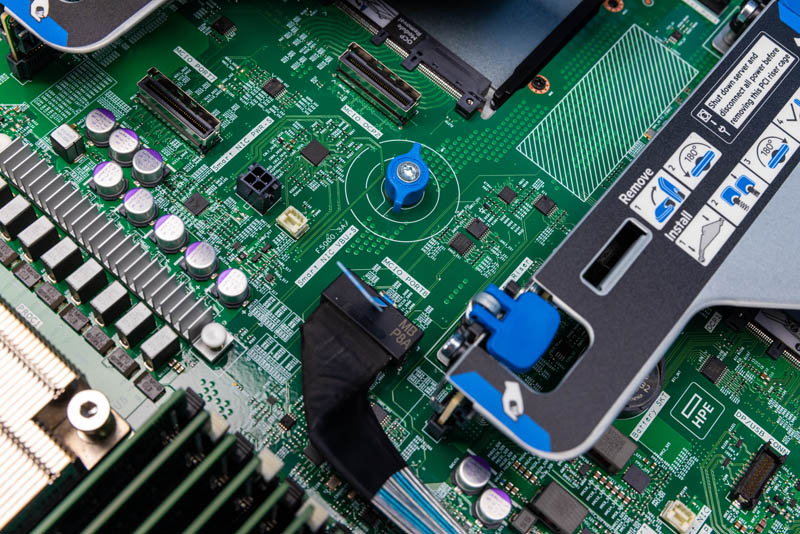

On the right side, we have what appears to be the iLO BMC.

In the area behind the CPU, there are additional MCIO connectors for PCIe connectivity. There are also many of the toolless risers and motherboard locks that feel very ProLiant.

At the rear of the motherboard, below the PCIe risers, we have two OCP NIC 3.0 slots. If you have seen our OCP NIC 3.0 Form Factors: The Quick Guide, you will recognize them as the type with internal latches. Hyperscalers that focus on ease of service typically use externally serviceable locking mechanisms. Companies like HPE and Dell that have large service revenue streams tend to use the internal lock.

It is very apparent that this is not the densest design. As a single-socket server with 16 DIMM slots, there is a lot of room left in the chassis and on the motherboard.

In our hardware overview, we have shown why the ProLiant RL300 Gen11 is a real ProLiant from a hardware perspective, despite using Arm. Next, let us get to iLO management.
