Gigabyte H261-Z60 Power Consumption to Baseline

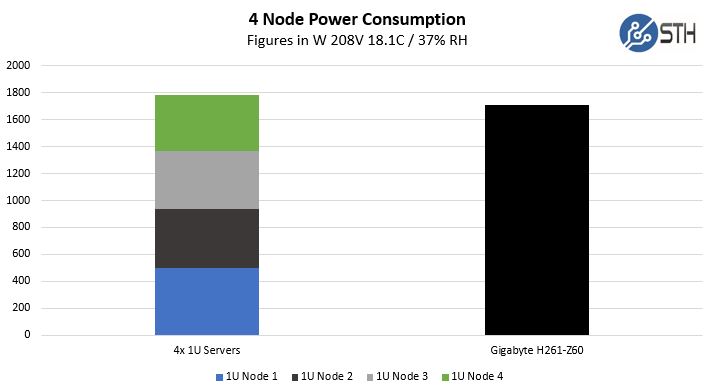

One of the sometimes overlooked benefits of the 2U4N form factor is power consumption savings. We ran our standard STH 80% CPU utilization workload, which is a common figure for a well-utilized virtualization server, and ran it in the sandwich to compare the 1U servers against the Gigabyte H261-Z60.

We ended up with about 4% lower power consumption with the Gigabyte H261-Z60 than with the set of 1U servers. That is partly due to the power supplies running in their more efficient ranges. It is also due to having fewer, larger fans and fewer power supplies in the mix. This is an excellent result and one that potential buyers should weigh heavily.

Putting this in context, the Gigabyte H261-Z60 is delivering virtually identical performance to four 1U servers while reducing rack space by 50%, power consumption by 4%, the number of power supplies by 75%, and the number of base power/1GbE/management cables by 56% (7 vs. 16). That is exactly why 2U4N systems are so popular.
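The percentages above are simple ratios against the four-server baseline. As a rough sketch of that arithmetic: the cable counts (16 vs. 7) come from the text, while the rack-unit and power-supply counts (4U vs. 2U, 8 vs. 2 PSUs) are assumptions chosen to match the 50% and 75% figures quoted. The helper name `percent_reduction` is ours, purely for illustration.

```python
def percent_reduction(baseline: float, new: float) -> float:
    """Return how much lower `new` is than `baseline`, as a percentage."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - new) / baseline * 100.0


# (baseline for four 1U servers, value for one 2U4N chassis)
comparisons = {
    "rack_units": (4, 2),       # assumption: 4x 1U vs. one 2U chassis
    "power_supplies": (8, 2),   # assumption: 2 PSUs per 1U server vs. 2 shared
    "cables": (16, 7),          # from the article: 7 vs. 16
}

reductions = {
    name: percent_reduction(baseline, new)
    for name, (baseline, new) in comparisons.items()
}

for name, pct in reductions.items():
    print(f"{name}: {pct:.2f}% reduction")
```

The cable figure works out to 56.25%, which the article rounds to 56%.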

STH Server Spider: Gigabyte H261-Z60

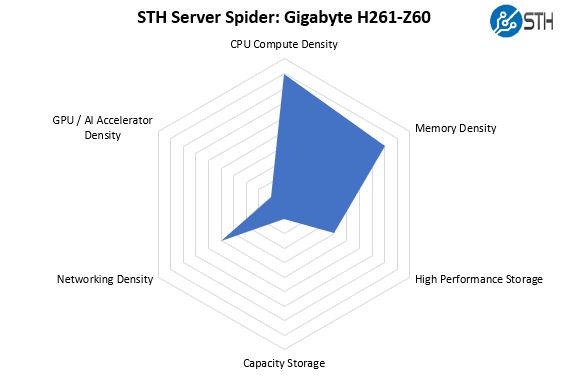

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

This is not the densest accelerator or storage configuration possible. At the same time, it is one of the densest CPU compute and memory solutions on the market. We recommend the Gigabyte H261-Z60 for applications where one must pack compute and memory into a small form factor.

Final Words

When it comes down to it, this is the dense platform you want to use. Using today’s AMD EPYC “Naples” gen1 parts, one can handle 256 cores, 512 threads, 8TB of RAM, 24x SATA SSDs, 8x M.2 NVMe SSDs, OCP networking, and still have room for 16x PCIe x16 half-length low profile cards. Intel platforms simply cannot match this. Indeed, we know the next-generation AMD EPYC 2 “Rome” will double this maximum core count, so we will be looking at 512 cores and 1024 threads in a single 2U box. The current and next generation of comparable Intel Xeon Scalable servers will be limited to 224 cores/448 threads and cost a lot more to get to that number. If you are looking for cost-effective compute density, the Gigabyte H261-Z60 is available today and has a bright roadmap.
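For readers who want to see where those chassis totals come from, here is a minimal sketch of the multiplication. The totals (256/512, 512/1024, 224/448) are from the text above. The per-socket core counts (32, 64, and 28), the four-node dual-socket layout for the Intel comparison, and two threads per core are assumptions picked to reproduce those totals. `DenseChassis` is a hypothetical name used only for this illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DenseChassis:
    """Core and thread totals for a multi-node chassis (illustrative only)."""

    name: str
    nodes: int
    sockets_per_node: int
    cores_per_socket: int
    threads_per_core: int = 2  # assumption: SMT / Hyper-Threading enabled

    @property
    def cores(self) -> int:
        return self.nodes * self.sockets_per_node * self.cores_per_socket

    @property
    def threads(self) -> int:
        return self.cores * self.threads_per_core


configs = [
    DenseChassis("EPYC Naples 2U4N", nodes=4, sockets_per_node=2, cores_per_socket=32),
    DenseChassis("EPYC Rome 2U4N", nodes=4, sockets_per_node=2, cores_per_socket=64),
    DenseChassis("Xeon Scalable 2U4N", nodes=4, sockets_per_node=2, cores_per_socket=28),
]

totals = {c.name: (c.cores, c.threads) for c in configs}

for name, (cores, threads) in totals.items():
    print(f"{name}: {cores} cores / {threads} threads")
```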

Our biggest point of improvement would be for the Central Management Controller to continue gaining features. That would be an enormous benefit to the unit, as it would be nice to log in via a single management interface per chassis. It is still a great feature simply for reducing cabling. The other point of improvement is that we want to see more NVMe. Gigabyte launched the H261-Z61 variant of this server, which has that functionality built in. The action is simply to pick that SKU if you want more NVMe.

Overall, these high-density plays are awesome for virtualization clusters. There is a bigger implication though. Since all but one M.2 slot is accessible via CPU0, one can retain virtually all of the functionality using a single AMD EPYC processor per node. That means a 4x CPU, 128-core/256-thread 2U system is extremely affordable and offers a surprising amount of expandability in such a compact form factor.

That’s a killer test methodology. Another great STH review.

Great STH review!

One thing though – how about linking the graphics to full-size graphics files? It’s really hard to read the text inside these images…

Monster system. I can’t wait to hear more about the epyc 7371’s

I can’t wait to see these with Rome. I wish there was more NVMe though

My old Dell C6105 burned in fire last May and I hadn’t fired it up for a year or more before that, but I recall using a single patch cable to access the BMC functionality on all 4 nodes. There may be critical differences, but that ancient 2U4N box certainly provided single-cable access to all 4 nodes.

Other than the benefits of html5 and remote media, what’s the standout benefit of the new CMC?