The 2U 4-node or “2U4N” form factor is very popular. It offers up to eight CPUs in 2U along with easy serviceability. One of the big challenges the form factor is hitting these days is the density of putting over 2kW of CPUs (8x 280W TDP), 64 DIMMs, two power supplies, high-speed networking, and an array of SSDs into a single 2U chassis. Now, Gigabyte and CoolIT are partnering to cool even the high-end AMD EPYC CPUs in these dense 2U4N form factors.

Gigabyte and CoolIT Partner to Cool 280W AMD EPYC 7003 2U4N Servers

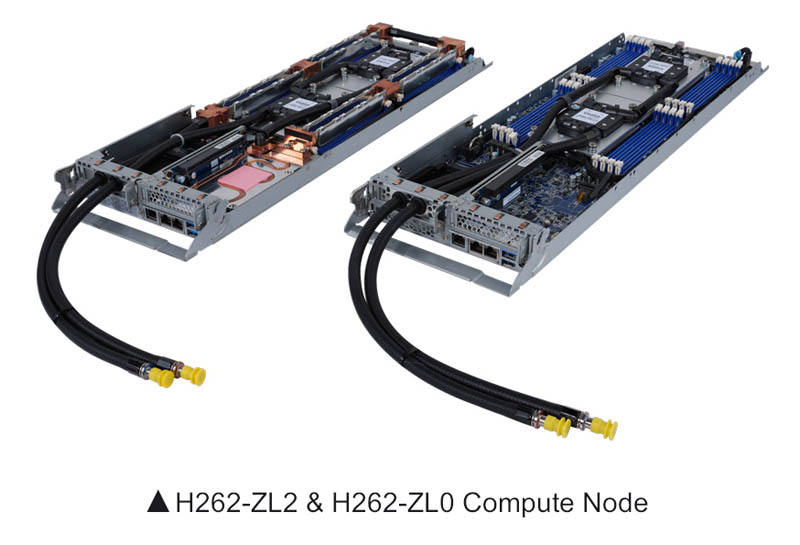

At STH, we have reviewed a number of the Gigabyte AMD EPYC 2U 4-node servers. These servers use nodes that place the AMD EPYC processors in parallel on a sled that has the CPUs, memory, and networking. CoolIT is partnering with Gigabyte to offer two different styles of liquid cooling for these nodes.

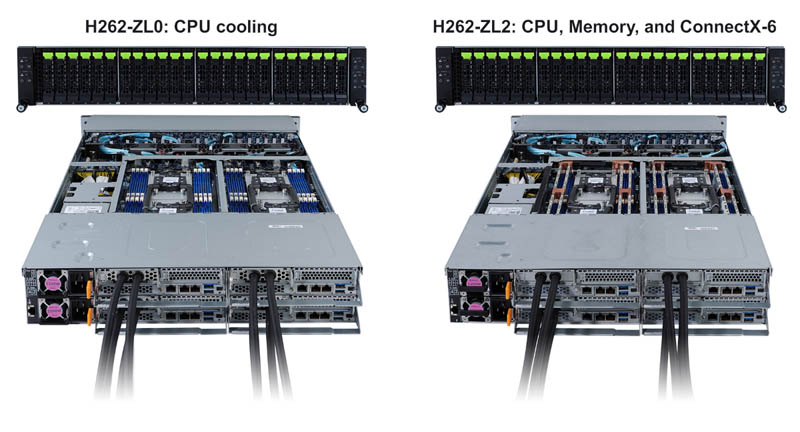

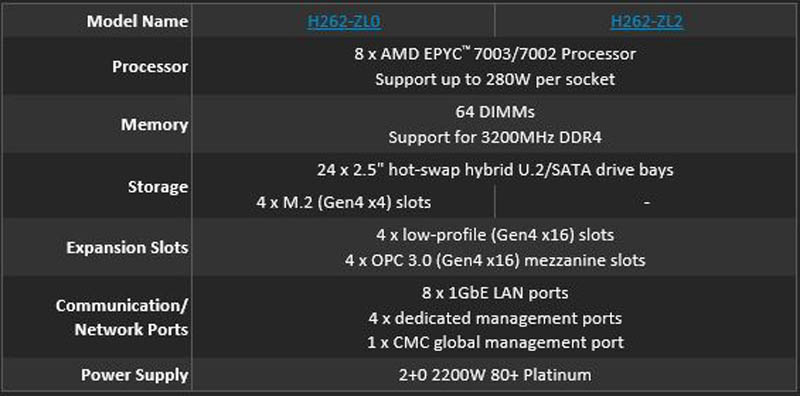

The first is the Gigabyte H262-ZL0, which puts cold plates on the two CPUs but relies upon air cooling for the rest of the system. The second, the Gigabyte H262-CL2, adds cold plates to its loops for cooling the DDR4 modules as well as a Mellanox/NVIDIA ConnectX-6 fabric adapter.

Here are the specs for the systems. One item we can note is that the system that only cools the CPUs can support an extra M.2 slot per node, or four more in a chassis. The advantage of cooling not just the CPUs but also the memory and NIC is that much less airflow is needed in the system, lowering the overall power consumption while retaining performance.

For the table, Gigabyte is summing the aggregate capacities of the four nodes. Just for some context, 2U4N designs typically struggle to cope with 205-240W TDP CPUs, requiring lower data center ambient temperatures for air cooling to be effective. Liquid cooling helps maintain density while also lowering cooling costs.
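To put those TDP figures in chassis-level terms, here is a minimal sketch of the arithmetic, assuming the node and socket counts described in this article (four nodes, two CPUs per node). The function and constant names are ours, purely for illustration. TDP is a thermal design figure rather than measured power draw, and the totals leave out DIMMs, NICs, SSDs, and fans.

```python
# Illustrative arithmetic only. Counts come from the article:
# a 2U4N chassis holds 4 nodes, and each node carries 2 CPUs.
NODES_PER_CHASSIS = 4
CPUS_PER_NODE = 2


def chassis_cpu_tdp_watts(tdp_per_cpu_watts: int) -> int:
    """Return the summed CPU TDP for one 2U4N chassis, in watts."""
    return NODES_PER_CHASSIS * CPUS_PER_NODE * tdp_per_cpu_watts


if __name__ == "__main__":
    # 205 W and 240 W bracket the range where air-cooled 2U4N designs
    # start to struggle; 280 W is the top-end part targeted here.
    for tdp in (205, 240, 280):
        total = chassis_cpu_tdp_watts(tdp)
        print(f"{tdp} W per CPU -> {total} W of CPU TDP per chassis")
```

Going from 240W to 280W parts adds 320W of CPU heat to the same 2U of space, which is the gap the cold plates are meant to cover.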

Final Words

Overall, higher-density liquid cooling solutions are coming. We have already shown three options on STH, and have a number of other options we will be showing. Our new lab facilities will be designed specifically to handle liquid-cooled servers as we are already seeing the demands of next-gen servers and the cooling they will require.

This is a cool system by Gigabyte and CoolIT. Expect to see more on liquid-cooled servers on STH over the coming quarters.

I’m curious… Since the PSU configuration in this is 2+0, in the event of a mains or PSU failure, does the whole box fail, is performance throttled, or does a single node fail?

I have had many 2U/4-node server models over the years… It seems that as the processors got more power hungry, if one PSU failed, the chassis would throttle the power to the individual servers (versus the one surviving PSU keeping all 4 servers running full blast).

Our cluster monitoring would notice the lower throughput.

@Carl Schumacher: I’ve only seen this on the Dell PowerEdge 13th gen platform (e.g. C6320). There are IPMI settings in order to bypass this default behavior and make the PSUs truly fault tolerant (no throttling, full blast on one PSU).