I have been running VMs on an older overclocked 4790K with a single SSD for quite a while and decided it was time to step up my game. I’ve been adding VMs regularly and had pushed the server to its limits, so a higher-capacity system was in order. A single system would have been simpler to maintain, but Microsoft Windows Server 2016 had come out with Storage Spaces Direct, so I decided I would be an early adopter and see how much pain I could endure setting up a two-node lab. I am an MSP, and this Windows Server 2016 Storage Spaces Direct hyper-converged cluster is going to house a dozen or two VMs: some for fun (Plex), some for my business (an Altaro offsite backup server). It will host a virtual firewall or two as well as all of the tools I use to manage my clients. Here is the original post of this build log on the STH Forums.

Introducing the 2-Node Windows Server 2016 Storage Spaces Direct Cluster

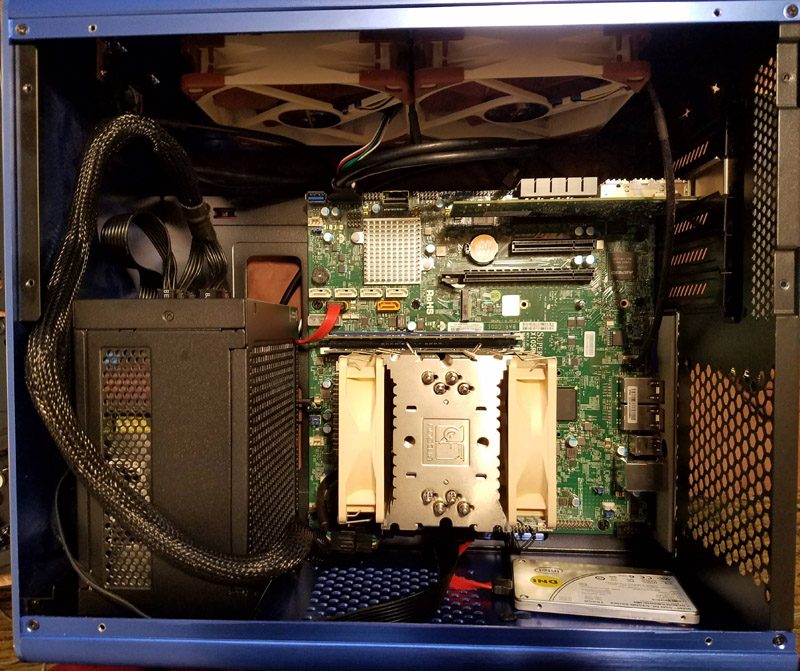

I’ve been in the technology game since I was about 14, when I made friends with the owner of a local computer shop. I ended up managing the shop and eventually went to school and became a full-time sysadmin. Since I’ve been rolling my own hardware for so long, that is generally my preferred way to go when it comes to personal projects. I am also space limited, so I had to figure out a way to do this without a rack. I decided to build the two-node cluster using off-the-shelf equipment so that it would look, perform, and sound the way I wanted. Did I mention I hate fan noise? If it’s not silent it doesn’t boot in my house. A rack will definitely be in my future, and the use of standardized server equipment means I can migrate the two servers to rack-mountable chassis with minimum effort.

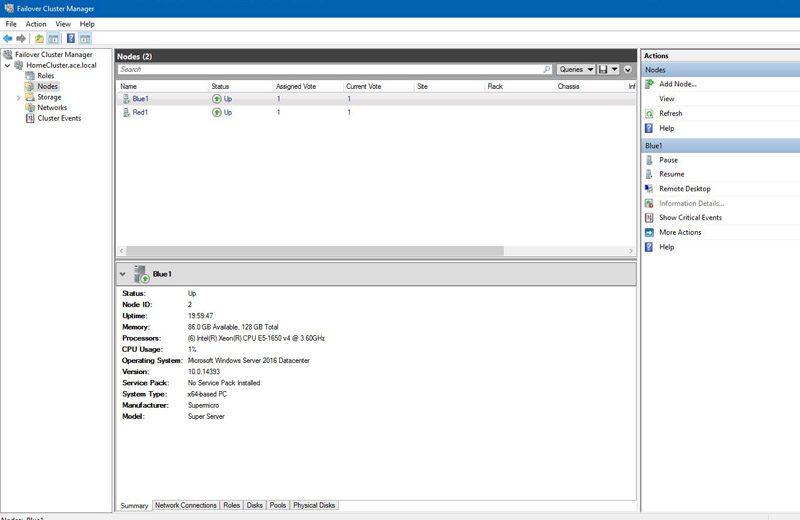

The end result is a two-node hyper-converged Windows Storage Spaces Direct cluster using Supermicro motherboards, Xeon processors, and Noctua fans. Silence is something you can’t buy from HP or Dell, unfortunately. The cluster can only withstand a single drive or server failure, but that won’t be a problem since it is “on premise” and using SSDs.

The two nodes are connected on the back end at 40Gbps using HP-branded ConnectX-3 cards and a DAC, which handle the S2D (Storage Spaces Direct), heartbeat, and live migration traffic. Connecting the nodes directly means I did not need a 40GbE switch, which saves an enormous amount of power, and I still get 40GbE between the nodes. On the front end, they are each connected via fiber at 10Gbps to a Ubiquiti US-16-XG switch (a close relative of the Ubiquiti ES-16-XG reviewed on STH) and at 1Gbps to an unmanaged switch connected to a Comcast modem. This allows me to use a virtual firewall and migrate it between the nodes.

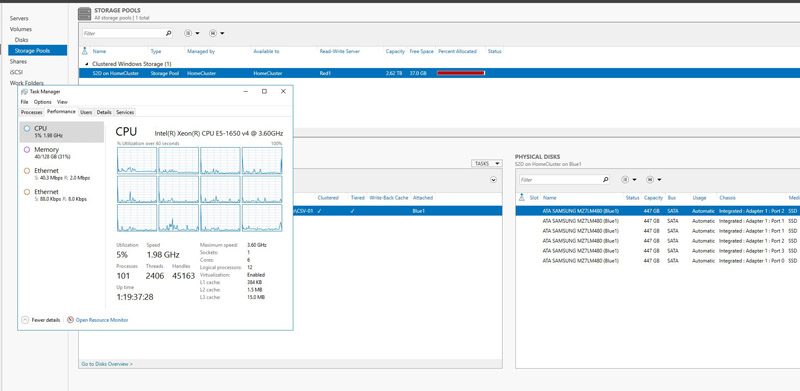

Each node is running a Supermicro microATX board and an Intel Xeon E5 v4 (Broadwell-based) CPU. I found a great deal on a 14-core (2.5GHz) E5 v4 CPU on eBay for one of the nodes. I purchased a 6-core (3.6GHz) Xeon for the other node. The logic behind the two different CPUs is to have one node with higher clocks but a lower core count and the other with a high core count but lower clocks. This does impose a limit of 12 virtual cores per VM (the number of threads on the 6-core CPU) for any VM that has to be able to run on both nodes. Any more cores and the VM could only run on the 14-core node.
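The per-VM core limit described above can be sketched in a few lines. This is only an illustration of the arithmetic, not anything Hyper-V itself runs: the node names come from the parts list, the two-threads-per-core figure assumes Hyper-Threading is enabled on both CPUs, and the function names are hypothetical.

```python
# Physical core counts per node, taken from the build description.
NODE_CORES = {"Blue1": 6, "Red1": 14}

# Assumption: Hyper-Threading is enabled, so each core exposes 2 threads.
THREADS_PER_CORE = 2


def logical_processors(cores: int) -> int:
    """Number of hardware threads a node exposes to the hypervisor."""
    return cores * THREADS_PER_CORE


def eligible_nodes(vm_vcpus: int) -> list[str]:
    """Nodes with enough hardware threads to host a VM of this size."""
    return [
        name
        for name, cores in NODE_CORES.items()
        if vm_vcpus <= logical_processors(cores)
    ]


def max_migratable_vcpus() -> int:
    """Largest VM that can still run on (and move between) every node."""
    return min(logical_processors(cores) for cores in NODE_CORES.values())


if __name__ == "__main__":
    print("Largest VM that fits on both nodes:", max_migratable_vcpus(), "vCPUs")
    for size in (8, 12, 16):
        print(f"{size} vCPUs can run on:", eligible_nodes(size))
```

A 12-vCPU VM fits on either node, while a 16-vCPU VM is pinned to the 14-core node and loses the ability to fail over or migrate.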

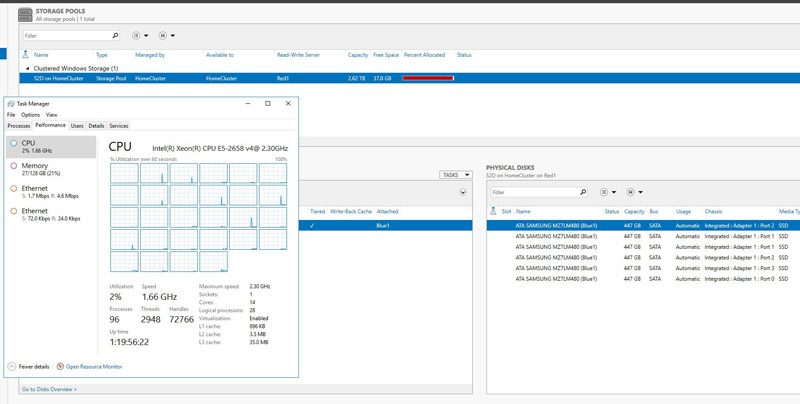

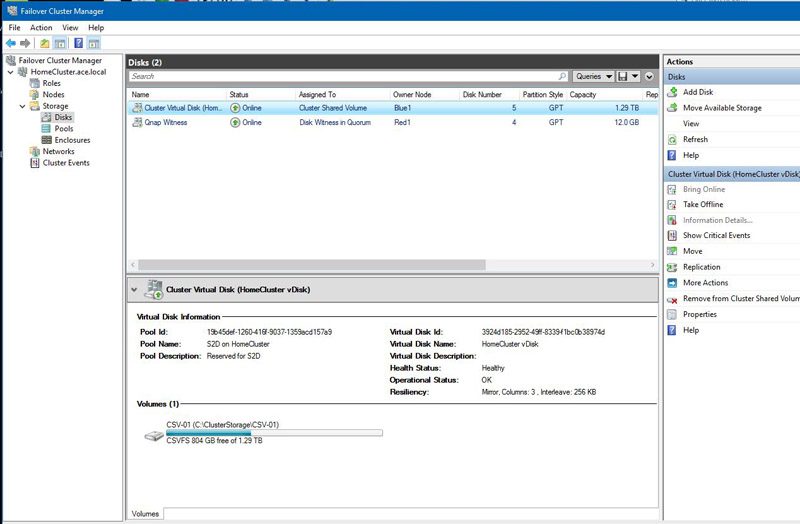

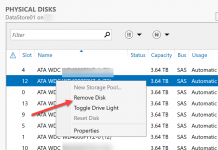

In order to use S2D on a 2-node cluster with all-flash storage you need a minimum of 4 SSDs, two per server. I am using a total of 6 Samsung PM863 drives, 3 per server. Since a 2-node cluster can only use mirroring, I am able to use half of the total storage, about 1.3TB. Since this configuration can only handle a single drive failure, and even that puts the cluster at significant risk, I will be adding an additional drive to each server in the future. Having an additional drive’s worth of “unclaimed” space allows S2D to immediately repair itself onto the unused capacity if there is a failure, similar to a hot spare in a RAID array. Performance is snappy but not terribly fast on paper: 60K read IOPS, 10K write IOPS.
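The capacity figure above is easy to reproduce. The sketch below is a back-of-the-envelope estimate only: it assumes a plain two-way mirror (two copies of everything), ignores pool metadata and formatting overhead, and treats the “about 1.3TB” as the 1440GB decimal figure expressed in binary units. The function names are made up for illustration.

```python
DRIVE_GB = 480          # Samsung PM863 capacity, decimal gigabytes
NODES = 2               # two-node cluster
MIRROR_COPIES = 2       # a two-way mirror keeps two copies of all data


def usable_gb(drives_per_node: int, reserve_drives_per_node: int = 0) -> float:
    """Rough usable capacity after mirroring.

    reserve_drives_per_node is capacity deliberately left unclaimed on each
    node so the pool has room to repair into after a drive failure.
    """
    claimed_drives = (drives_per_node - reserve_drives_per_node) * NODES
    return claimed_drives * DRIVE_GB / MIRROR_COPIES


def gb_to_tib(gb: float) -> float:
    """Convert decimal gigabytes to binary tebibytes (what Windows reports)."""
    return gb * 1000**3 / 1024**4


if __name__ == "__main__":
    current = usable_gb(drives_per_node=3)
    planned = usable_gb(drives_per_node=4, reserve_drives_per_node=1)
    print(f"Current build: {current:.0f} GB usable (~{gb_to_tib(current):.2f} TiB)")
    print(f"With a 4th drive per node held in reserve: {planned:.0f} GB usable")
```

Under these assumptions the current build works out to 1440GB (about 1.31 TiB), and adding a fourth drive per node while leaving one drive’s worth unclaimed keeps usable capacity the same while giving the pool room to rebuild.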

I also decided to play with virtualized routers and am currently running pfSense and Sophos XG in VMs. By creating a network dedicated to my Comcast connection I am able to migrate the VMs between the nodes with no downtime other than a single lost packet if the move is being lazy. I will be trying out firewalls from Untangle and a few others to see which works best.

The hardware build process went very smoothly thanks to the helpful people on the STH forum and the deals section. I saved a lot of money by buying used when I could. I had that nifty Supermicro fluctuating fan problem, which I managed to fix thanks to more help, and both nodes are essentially silent: the Noctua fans run around 300rpm on average and haven’t gone above 900rpm under Prime95.

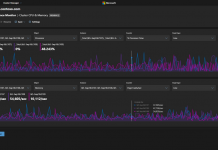

Power consumption is right in line with what I was hoping for.

- Node 1 idle: 50W

- Node 2 idle: 45W

- Node 2 Prime95: 188W
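For a sense of what those numbers mean over a year of always-on operation, here is a small sketch. It uses only the wattages listed above and assumes the nodes run 24/7; real consumption will sit somewhere between the idle and load figures, and the switches and modem are not included.

```python
# Measured wall power from the list above, in watts.
IDLE_WATTS = {"Node 1": 50, "Node 2": 45}
NODE2_PRIME95_WATTS = 188

HOURS_PER_YEAR = 24 * 365  # assumes the cluster never powers down


def annual_kwh(watts: float) -> float:
    """Energy used in a year at a constant power draw."""
    return watts * HOURS_PER_YEAR / 1000


if __name__ == "__main__":
    cluster_idle = sum(IDLE_WATTS.values())
    print(f"Cluster idle draw: {cluster_idle} W")
    print(f"Cluster idle energy: {annual_kwh(cluster_idle):.1f} kWh/year")
    print(f"Node 2 at full load: {annual_kwh(NODE2_PRIME95_WATTS):.1f} kWh/year")
```

At idle the pair draws 95W combined, or roughly 832 kWh per year.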

Neither node puts out enough heat to mean anything, and even under full load they are both dead silent. Due to the oddities of the Styx case, airflow is actually back to front and top to bottom. This works great with the fanless power supplies as they have constant airflow.

The software configuration was a good learning experience. There are about a million steps that need to be done and while I can do most of them in my sleep S2D was a new experience. Since S2D automates your drive system I ran into a problem I wasn’t expecting. The major gotcha I found involved S2D grabbing an iSCSI share the moment I added it to the machines. It tried to join it to the pool and ended up breaking the whole cluster… twice. Admittedly I knew what would happen the second time but I’m a glutton for punishment apparently.

Other than that everything has worked flawlessly. Rebooting a node causes a 10-minute window in which the storage system is in a degraded state while the rebooted node has its drives regenerated. I can move all VMs from one node to the other in just a few seconds (over a dozen VMs at the same time which only uses about 12Gbps of bandwidth) or patch/reboot a node without shutting anything else down.

Overall I think MS hit a home run with Windows Server 2016 and Storage Spaces Direct. The drive configuration is one of the most flexible of all of the hyper-converged solutions out there, and their implementation has been rock solid no matter what I’ve thrown at it. The biggest drawback of a 2-node setup is that it can only handle a single point of failure, but I will be mitigating most of that in the near future.

Part List for the 2-Node Windows Server 2016 Storage Spaces Direct Cluster

If you want to replicate something similar here are the parts I used. Since much of this gear was second hand, the build price was less than a quarter of what it would have cost from Dell or HP new.

Node1 (Blue1):

- Operating System/Storage Platform: Server 2016 Datacenter

- CPU: Xeon E5-1650V4. Listing: Intel Xeon E5-1650 v4 3.6 GHz Six-Core LGA 2011 BX80660E51650V4

- Motherboard: Supermicro X10SRM-F. Listing: SuperMicro X10SRM-F Micro ATX Motherboard (new, full MFR warranty)

- Chassis: Raijintek Styx, blue. Listing: RAIJINTEK STYX BLUE, Alu Micro-ATX Case, Compatible With Regular ATX Power Supply, Max. 280mm VGA Card, 180mm CPU Cooler, Max. 240mm Radiator Cooling On Top With A Drive Bay For Slim DVD On Side – Newegg.com

- Boot Drive: Intel S3500 boot drive. Listing: Intel DC S3500 160GB Internal DataCenter 2.5″ SSD (SSDSC2BB160G401) 735858258388 | eBay

- Data SSDs: 3x Samsung PM863 480GB data drives. Listing: SAMSUNG SSD MZ-7LM4800 PM863 2.5″ 480GB SATA 6.0Gbps PN: MZ7LM480HCHP-000G3 | eBay

- RAM: 128GB DDR4 ECC 2400MHz. Listing: 4X70M09263 32GB DDR4 2400MHz ECC RDIMM Memory Lenovo ThinkStation P510 P-series | eBay

- Add-in Card: HP-branded ConnectX-3. Listing: 649281-B21 656089-001 661685-001 HP IB FDR/EN 10/40GB 2P 544QSFP ADAPTER | eBay

- Power Supply: Seasonic 400W platinum fanless. Listing: SeaSonic X series SS-400FL Active PFC F3 400W ATX12V Fanless 80 PLUS GOLD Certified Modular Active PFC Power Supply – Newegg.com

- Other Bits: Noctua heatsink. Listing: Noctua NH-U9DXi4 90mm SSO2 CPU Cooler – Newegg.com

- Other Bits: 3x Noctua fans. Listing: Noctua Anti-Stall Knobs Design, SSO2 Bearing 120mm, PWM Case Cooling Fan NF-S12A – Newegg.com

Node2 (Red1):

- Operating System/Storage Platform: Server 2016 Datacenter

- CPU: Xeon E5-2658V4 QS. Listing: Intel Xeon E5-2658 v4 QS 2.3GHz LGA2011-3 14C Compatible with X99 i7-6850K 6900K | eBay

- Motherboard: Supermicro X10SRM-F. Listing: SuperMicro X10SRM-F Micro ATX Motherboard (new, full MFR warranty)

- Chassis: Raijintek Styx, red. Listing: RAIJINTEK STYX Classic, an Alu Micro-ATX case, Compatible with regular ATX Power Supply, Max. 280mm VGA Card, 180mm CPU Cooler, 240mm Radiator Cooling On Top, a Drive Bay For Slim DVD On Side, Red – Newegg.com

- Boot Drive: Intel S3500 boot drive. Listing: Intel DC S3500 160GB Internal DataCenter 2.5″ SSD (SSDSC2BB160G401) 735858258388 | eBay

- Data SSDs: 3x Samsung PM863 480GB data drives. Listing: SAMSUNG SSD MZ-7LM4800 PM863 2.5″ 480GB SATA 6.0Gbps PN: MZ7LM480HCHP-000G3 | eBay

- RAM: 128GB DDR4 ECC 2400MHz. Listing: 4X70M09263 32GB DDR4 2400MHz ECC RDIMM Memory Lenovo ThinkStation P510 P-series | eBay

- Add-in Card: HP-branded ConnectX-3. Listing: 649281-B21 656089-001 661685-001 HP IB FDR/EN 10/40GB 2P 544QSFP ADAPTER | eBay

- Power Supply: Seasonic 400W platinum fanless. Listing: SeaSonic X series SS-400FL Active PFC F3 400W ATX12V Fanless 80 PLUS GOLD Certified Modular Active PFC Power Supply – Newegg.com

- Other Bits: Noctua heatsink. Listing: Noctua NH-U9DXi4 90mm SSO2 CPU Cooler – Newegg.com

- Other Bits: 3x Noctua fans. Listing: Noctua Anti-Stall Knobs Design, SSO2 Bearing 120mm, PWM Case Cooling Fan NF-S12A – Newegg.com

Other hardware used in build:

- DAC for back end 40Gbps network. Listing: Dell DAC-QSFP-40G-5M Copper Direct Attach Cable DP/N J90VN | eBay

- QSFP+ to SFP+ adapters. Listing: HP / MELLANOX QSFP+ to SFP+ Adapter MAM1Q00A-QSA 655874-B21 | eBay

- 10Gbps SFP+ transceivers that work GREAT with the Ubiquiti US-16-XG switch. Listing: Lot of 5 F5 SFP 10G SR 10GB SR 10GBase-SR GBIC Transceiver Module OPT-0016-00 | eBay

- Random fibers. Listing: 15M LC-LC OM3 Multimode Duplex 3.0MM OFNR Fiber Optic Patch Cable | eBay

- Ubiquiti US-16-XG switch. Listing: UniFi Switch 16 XG – UniFi – Ubiquiti Networks Store

Additional Resources

This project came about mostly because of STH, so thank you Patrick for the great site! I also want to thank everyone that helped by answering all of my questions, especially those about the Mellanox ConnectX-3 cards; this thing would have died young without the help. I have some commands and a mostly finished outline available if anyone wants to build something similar. Here are some links I found useful while doing this project:

- Storage Spaces Direct in Windows Server 2016

- Fault tolerance and storage efficiency in Storage Spaces Direct

- Don’t do it: consumer-grade solid-state drives (SSD) in Storage Spaces Direct

- Windows Server 2016: Introducing Storage Spaces Direct

- Resize Storage Spaces Direct Volume

Final Thoughts

I wouldn’t put a 2-node cluster into production for any of my clients. The minimum recommended is a 4-node cluster, but I think 3 nodes would work well, if a little inefficiently in terms of storage capacity. This is purely a low-power, screaming-fast, and inexpensive test cluster.

I’ve built a 2-node cluster using 1Gbps links. Don’t bother: it’s functional but not much more, and the recommended 10Gbps minimum is pretty accurate. 40GbE is only marginally more expensive but much faster with SSDs.

There are enough resources available to find an answer to almost anything but it does require a bit of searching. I had issues multiple times because the pre-release versions of Server 2016 used slightly different syntax than the release version, thanks Microsoft. S2D has a mind of its own, don’t screw with it, just let it do its job. If you fight it you will lose, ask me how I know…

Stop by the STH forums if you are looking to build something similar.

S2D is definitely the killer app of Windows Server 2016. Unfortunately, requiring the Datacenter edition of Windows Server 2016 just killed it. I think Microsoft should allow 2-node S2D in Essentials edition and 4-node S2D in Standard edition (networked using dual-port 40GbE NICs in a ring topology, so no need for a pair of high-end switches), leaving 8-16 node S2D for Datacenter edition because more than 4 nodes would require $20K switches.

Great write-up Jeff! I love the X-wing and Tie fighter co-existing in a cluster working together. You pointed out a few pains you had, I would love to chat and learn more on how we can improve S2D. Shoot me an email.

Elden – I just removed your in-message e-mail address but will ensure Jeff has it.

Is it possible to contact the author of this article to ask a few questions about this build?

Hi Bob, there is an entire thread on the forums with a discussion on this build. It is linked in the first paragraph but here it is again: https://forums.servethehome.com/index.php?threads/hyper-converged-2-node-build-is-done.12980/

Hi,

How do I deploy Storage Spaces Direct on two nodes? Can you please send a step-by-step procedure?