Growing Lambda’s 1-Click Clusters

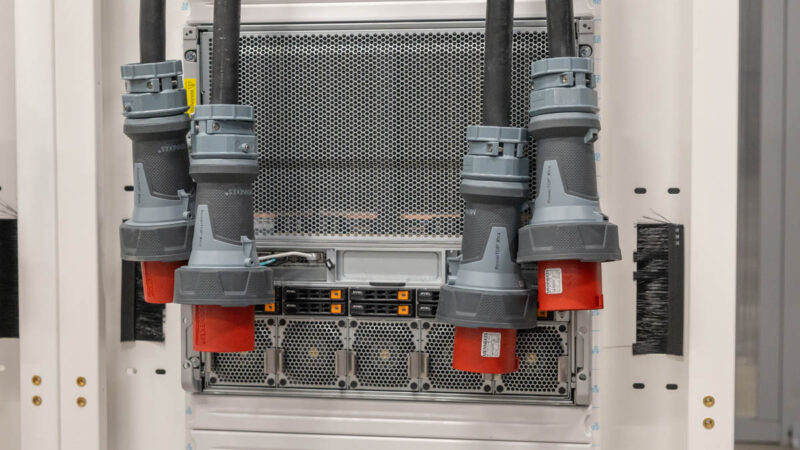

You may have noticed this in the video, but we toured this cluster when it already had thousands of GPUs and Lambda was actively growing it. Our previous section on the forgotten parts of AI clusters was not just meant to show pieces like the traditional CPU compute servers supporting the AI clusters. It was also meant to shine a spotlight on the rapid pace of the AI build-out, and on everything that needs to go right so that GPUs spend as little time as possible looking like the machine below. This Supermicro GPU system is racked but not powered on. That means it is not part of a training or inference cluster and is not earning revenue.

When I tour in-progress AI clusters, I often come across sights like the server above and think of aircraft parked at an airport rather than in the sky earning revenue.

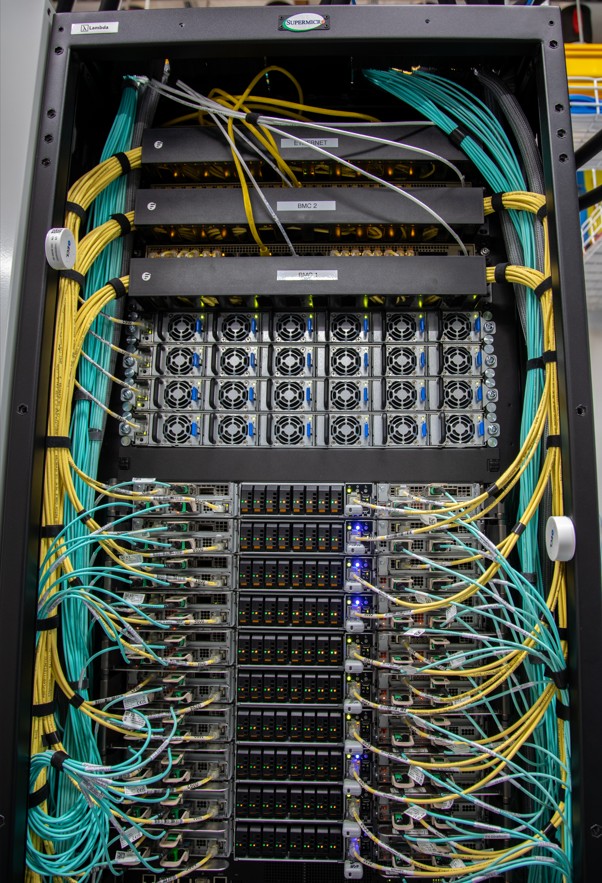

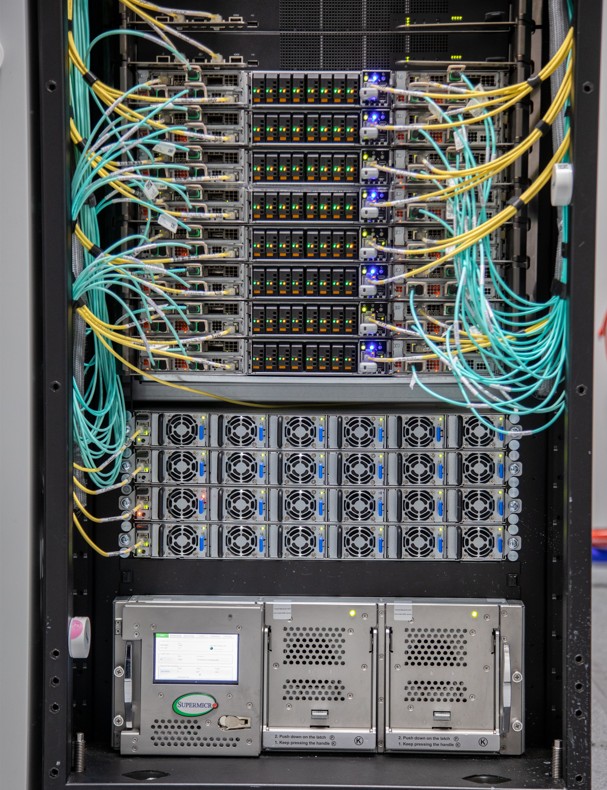

Earlier in this piece, I mentioned that for the video, we had a second team capture another location. Lambda is not only building around the more traditional NVIDIA HGX B200 8-GPU platforms, but also around the newer liquid-cooled NVIDIA GB200 NVL72 racks.

The NVL72 racks present a much larger scale-up domain, with 72 GPUs linked by NVIDIA NVLink. We have been reviewing traditional 8-GPU servers on ServeTheHome for over a decade at this point, but those systems keep their NVLink domain within a single node and rely on scale-out networking to build larger clusters. In contrast, the NVL72 racks are designed for density, packing accelerators close together on a high-speed interconnect.

For a neocloud provider like Lambda, the ability to offer both types of compute and provision them frictionlessly for clients is one of the key differentiators versus traditional hyperscale clouds built for legacy compute applications.

Final Words

We covered a ton of ground in this article. We went from showing a 10U Supermicro NVIDIA HGX B200 platform in Cologix’s lobby to a running AI cluster with thousands of GPUs and all of the supporting pieces required to go from cold boxes to token-generating infrastructure.

I want to quickly thank the teams at Supermicro, Lambda, Cologix, and NVIDIA for making this happen and for putting me to work servicing these systems, even if that was just as a human light stand.

It was also great to show off an air-cooled facility. Realistically, even AI clusters at this scale probably have 12 to 18 months at most before almost all of them are deployed with liquid cooling. I do not want folks to think that, just because we showed an air-cooled cluster, Supermicro, Lambda, or Cologix are against liquid-cooled servers. This is simply the NVIDIA B200 cluster we had access to.

While I have toured larger clusters before, and have been able to show a few of them on STH, it was great to show off what makes this more than just a bunch of GPU AI servers. Those GPU servers are there for multiple customers to run workloads on, so there needs to be supporting infrastructure around them.

As cool as the GPU compute side is, part of me is drawn to the networking side of these large AI clusters. We had servers with eight GPUs and two CPUs, yet over a dozen network connections per node.

One thing is for sure: next to today’s AI cluster build-outs, even one of this scale, you start to feel very small.

Hopefully, you enjoyed this look at a Lambda 1-Click Cluster at Cologix in Ohio, built using NVIDIA GPUs and Supermicro servers. If you did, I would urge you to watch the video as well, since it has more views than we can show in the web format. Also, feel free to share this with colleagues and friends, because the AI build-out is incredibly exciting and happening at a fantastic pace.

Manmade horrors beyond comprehension!

Article good. Video better. I’m not sure I’ve seen a DC video as fast-paced as your xAI video since that one. It was like I was watching some gripping Mission: Impossible action movie, not some boring DC video. I don’t know how you did that, but keep doing more of it.

Eagerly awaiting the day when the AI bubble bursts after investors figure out that AI isn’t a magic black box that replaces human employees. Then some of this hardware can hit the secondary market for prices that hobbyists are willing to pay for hobbyist use of AI.

AI isn’t some investor-fueled bubble. Companies are actively spending massive amounts of money on it. If the companies are collectively spending hundreds of billions of dollars per year on AI just to satisfy perceived investor interest then there is a much bigger problem in the marketplace than an AI bubble. If it is a bubble it is in the hopes and expectations of technology companies, not in the speculation of investors.

@Matt it’s become so bad that the likes of Microsoft have started ‘trimming the fat’ despite record revenues, and are desperately tacking AI (‘Copilot’) onto any popular product despite customer pushback that it doesn’t really add any value, let alone justify a price increase.

Looking at Cologix’s locations it seems that the most northerly location is Montreal Canada.

Checking the Open Canada website for “Permafrost, Atlas of Canada, 5th Edition”, we see that there are many better locations available for cooling a server farm (at the lowest possible price). Just as you probably don’t want to set up in southern California (because of the temperature and electricity costs), you wouldn’t set up in southern Canada when you can move to northern Canada, near a dam.

Not my hundreds of millions ….

Within a year or two, we’ll be looking back and wondering how absolutely *everyone* seemed to think this was a great idea.

I’d love to see this equipment put to work doing scientific research but I fear that it’s already too tightly optimized for AI work.

Regardless, fascinating view into how these clusters come together. Thanks, Patrick!