Networking a NVIDIA B200 Cluster

While the GPU servers may get all of the attention, networking is a big deal. Inside each Supermicro 8-GPU system, we have NVLink. To scale out, we need an East-West network so GPUs across servers can talk to each other. We also need a North-South network to connect the systems to the rest of the infrastructure and things like clustered storage. There are management networks for the servers, DPUs, power distribution units in each rack, the network switches, security cameras and devices, environmental monitors, and more. A lot goes into networking.

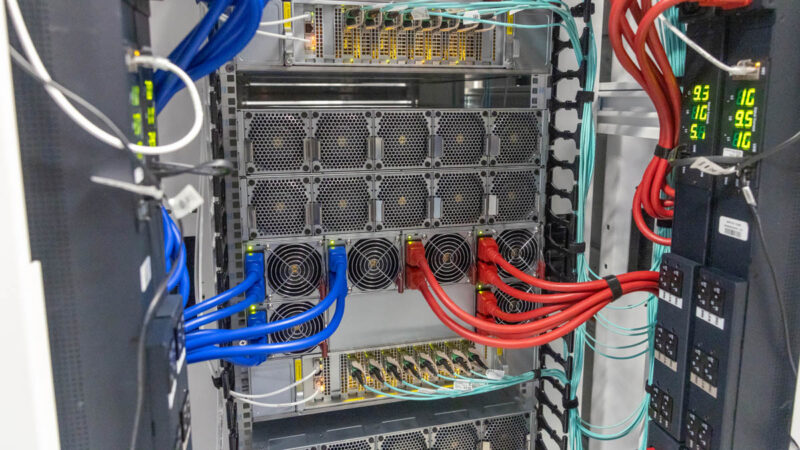

At the heart of the East-West network is the NVIDIA Quantum-2 networking. Previously, we would have said something like NDR InfiniBand for this 400Gbps generation interconnect. NVIDIA has other neat technology, like SHARP to do in-network computing for clusters. Still, on the fan and power side, these 1U switches look mundane.

On the optical cage side, they are quite exciting. Something you might not have seen before is that the OSFP optical modules each have two MPO connections.

In our photos, you may also notice that there are many different types of connections being made for different networks, from 400Gbps networking like this NVIDIA ConnectX-7 and Quantum-2 East-West network all the way to the management network.

Part of the reason for this is that each GPU gets its own ConnectX-7 NIC.

There are eight 400Gbps NICs for GPUs, or 3.2Tbps worth. There is then a 2x 200Gbps DPU, a 10GbE OS and application management port, and two management ports (one DPU one server) on each server. Lambda has over 3.6Tbps of networking capacity on these machines and something like 13 network connections per GPU server.

The network is one of the major reasons we see these rows of 256 GPUs each. Something to keep in mind is that Lambda is building this to be multi-tenant. As a result, there are layers of networking beyond just the direct switch-to-device networks so that thousands of GPUs can communicate.

In contrast to the rear GPU side, the front DPU side has relatively mundane networking requirements.

Here are some of the Arista Networks 7060DX5-64S switches. These are the 64-port 400GbE switches that importantly use the QSFP-DD cages, making the Ethernet side much easier. Going NVIDIA Quantum-2 finned OSFP to NVIDIA ConnectX-7 flat top OSFP is easy but crossing over to QSFP-DD is quite painful. We are learning this the hard way with a 1.6-4Tbps network traffic generation tool we are bringing up for our future networking reviews.

When we visited the cluster, there were still rows being built out. You may see unpopulated cages in these switches, but that is a good thing, as the cluster was not complete. The Ethernet side can also be used for many purposes, so extra capacity here is good.

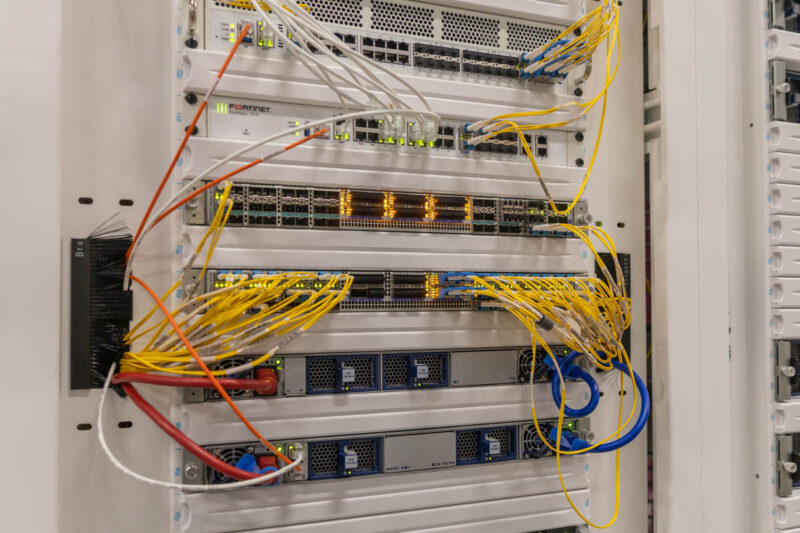

We often overlook this, but just because we have 200Gbps/400Gbps networks does not mean those are the only networks. Each server also has a 10GbE OS and application management interface, and then two 1GbE management interfaces linked as well.

Lambda also needs to provide things like VPN services to specific clients, or even for its own purposes. It needs firewalls for the installation as well, so we see some Fortinet gear racked. One important fact is that AI clusters of this scale are not running 1Gbps or 10Gbps fiber links to bandwidth providers, traditional hyper-scale clouds, and so forth. Instead, the uplinks here usually start at 100Gbps, and there is more than one. Customers need to move huge amounts of data that they may have stored with a cloud provider, so the network transit outside of the cluster is important, and that is another reason Lambda is using this Cologix data center.

It is actually quite neat to see just how many different types of networks go into this type of cluster, even though we often only discuss the GPU East-West network.

Here is a great shot at two of the non-GPU networking racks for the cluster.

Above the racks, you might have noticed more elevated raceways than Los Angeles freeways. These hold the fiber and networking cables as they cross the cluster.

We often show the compute racks, but these are how we go from a single GPU server or a rack or two of GPU servers, and we scale to thousands of GPUs (or more.)

Next, let us talk about the storage.

Manmade horrors beyond comprehension!

Article good. Video better. I’m not sure I’ve seen a dc video as fast paced as your xAI video since that one. It was like I was watching some gripping mission impossible action movie not some boring dc video. I don’t know how you did that, but keep doing more of it

Eagerly awaiting the day when the AI bubble bursts after investors figure out that AI isn’t a magic black box that replaces human employees. Then some of this hardware can hit the secondary market for prices that hobbyists are willing to pay for hobbyist use of AI.

AI isn’t some investor-fueled bubble. Companies are actively spending massive amounts of money on it. If the companies are collectively spending hundreds of billions of dollars per year on AI just to satisfy perceived investor interest then there is a much bigger problem in the marketplace than an AI bubble. If it is a bubble it is in the hopes and expectations of technology companies, not in the speculation of investors.

@Matt it’s become so bad that the likes of Microsoft have started ‘trimming the fat’ despite record revenues, and are desperately tacking AI (‘copilot’) onto any popular product despite customer pushback that it doesn’t really add any value let alone justify a price increase.

Looking at Cologix’s locations it seems that the most northerly location is Montreal Canada.

Checking the Open Canada website for “Permafrost, Atlas of Canada, 5th Edition” we see that there’s so many better locations available for cooling a server farm (at the lowest possible price). Just as you probably don’t want to setup in southern California (because of the temperature and electricity costs) you wouldn’t setup in southern Canada (when you can move to northern Canada, near a dam).

Not my hundreds of millions ….

Within a year or two, we’ll be looking back and wondering how absolutely *everyone* seemed to think this was a great idea.

I’d love to see this equipment put to work doing scientific research but I fear that it’s already too tightly optimized for AI work.

Regardless, fascinating view into how these clusters come together. Thanks, Patrick!