Several weeks ago, we had the opportunity to tour one of the first air-cooled NVIDIA HGX B200 clusters in Ohio. The cluster was made of Supermicro servers for Lambda’s 1-click AI clusters and housed at Cologix. This cluster represents hundreds of millions of dollars in investment, and it was using new GPUs, so it was cool to see. In this article, we are going to get into a level of detail that folks who have never toured a cluster like this will not have seen before. We also have a video for this one, which is worth a watch even if you are a reader, as there are more views on video and there is even a teaser of our next tour video near the end:

As always, we suggest watching this in its own browser, tab, or app for the best viewing experience. Also, we need to say thank you to Supermicro, Lambda, Cologix, and NVIDIA for getting us to and into this cluster with their sponsorship and support. With that, let us get to it!

Exploring the NVIDIA HGX B200 Lambda AI Cluster at Cologix with Supermicro

We cannot say the exact number of NVIDIA B200 GPUs installed, but what we can say is that it is in the thousands. It is crazy to think that only a few years ago, a system of this scale would have been a top supercomputer. It is now a cool AI cluster to look at.

The cluster itself is an air-cooled affair. That allows for faster cluster deployment. For companies like Lambda, getting GPUs online fast means that they can turn those GPUs into online clusters ready to rent to customers.

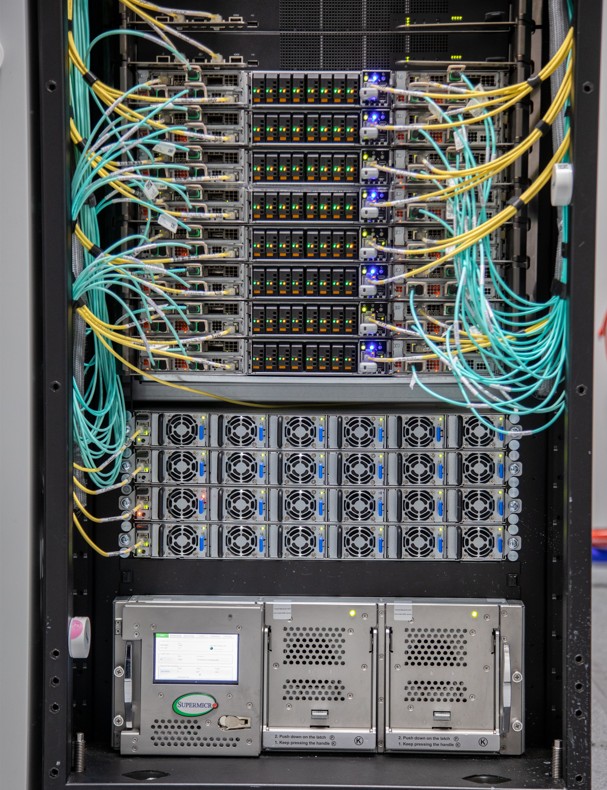

Racks of 32 GPUs each, eight in each Supermicro NVIDIA HGX B200 platform, are aligned in rows with cold aisles and withering hot aisles. The heat from the GPUs is contained and circulated outside of the building.

You will notice that the GPUs are aligned with rows of eight GPU server racks, each with four servers. Each of those servers has eight GPUs. That means that rows like the one below have 256 GPUs total.
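As a quick sanity check on that arithmetic, here is a minimal Python sketch. The counts come straight from the description above; the constant and function names are our own and purely illustrative.

```python
# Layout figures as described in the article text.
RACKS_PER_ROW = 8      # GPU server racks in one row
SERVERS_PER_RACK = 4   # Supermicro NVIDIA HGX B200 servers per rack
GPUS_PER_SERVER = 8    # GPUs in each HGX B200 server


def gpus_per_rack(servers: int = SERVERS_PER_RACK, gpus: int = GPUS_PER_SERVER) -> int:
    """Return the number of GPUs in a single rack."""
    return servers * gpus


def gpus_per_row(racks: int = RACKS_PER_ROW) -> int:
    """Return the number of GPUs in a full row of racks."""
    return racks * gpus_per_rack()


if __name__ == "__main__":
    print(f"GPUs per rack: {gpus_per_rack()}")
    print(f"GPUs per row:  {gpus_per_row()}")
```

Changing any one of the three constants shows how quickly the per-row GPU count scales with rack density.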

In the subsequent parts of this article, we are going to get into detail about these racks, covering everything from the Supermicro servers to the networking, storage, and even some of the often overlooked bits.

We are also going to get into the data center level cooling that keeps this cluster cool.

This cluster is not the only one in this 36MW Cologix facility, so we are also going to show you outside in the power yard. I may look small in this photo, but the power yard is even bigger than this shot makes it look.

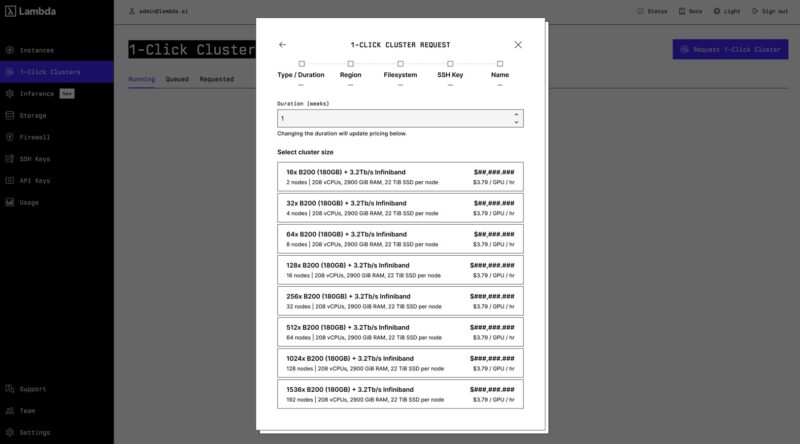

The entire reason for this is really to support Lambda’s 1-click clusters. With many cloud providers, if you need a GPU cluster, it requires a call to sales and then a provisioning process that can take time and patience. Lambda’s 1-click clusters are the alternative. You can provision them with a credit card, and without going through a manual sales process. That is the Lambda neo-cloud experience.

While we are showing the air-cooled NVIDIA HGX B200, it is probably worth noting that we sent a team to check out one of Lambda’s liquid-cooled Supermicro NVIDIA GB200 NVL72 racks at a different facility. You can see that in the accompanying video.

I think it is important to note that the companies involved in the main cluster we are looking at, Supermicro, Lambda, Cologix, and NVIDIA, are not just building out this one cluster. Instead, this is more of an exercise in finding available power and data center space and then building clusters to fit inside those available locations.

With that, let us get into the components of a cluster like this, including the GPU servers, networking, storage, CPU compute, and the facility’s power and cooling.

Manmade horrors beyond comprehension!

Article good. Video better. I’m not sure I’ve seen a dc video as fast paced as your xAI video since that one. It was like I was watching some gripping mission impossible action movie not some boring dc video. I don’t know how you did that, but keep doing more of it

Eagerly awaiting the day when the AI bubble bursts after investors figure out that AI isn’t a magic black box that replaces human employees. Then some of this hardware can hit the secondary market for prices that hobbyists are willing to pay for hobbyist use of AI.

AI isn’t some investor-fueled bubble. Companies are actively spending massive amounts of money on it. If the companies are collectively spending hundreds of billions of dollars per year on AI just to satisfy perceived investor interest then there is a much bigger problem in the marketplace than an AI bubble. If it is a bubble it is in the hopes and expectations of technology companies, not in the speculation of investors.

@Matt it’s become so bad that the likes of Microsoft have started ‘trimming the fat’ despite record revenues, and are desperately tacking AI (‘copilot’) onto any popular product despite customer pushback that it doesn’t really add any value let alone justify a price increase.

Looking at Cologix’s locations it seems that the most northerly location is Montreal Canada.

Checking the Open Canada website for “Permafrost, Atlas of Canada, 5th Edition” we see that there’s so many better locations available for cooling a server farm (at the lowest possible price). Just as you probably don’t want to setup in southern California (because of the temperature and electricity costs) you wouldn’t setup in southern Canada (when you can move to northern Canada, near a dam).

Not my hundreds of millions ….

Within a year or two, we’ll be looking back and wondering how absolutely *everyone* seemed to think this was a great idea.

I’d love to see this equipment put to work doing scientific research but I fear that it’s already too tightly optimized for AI work.

Regardless, fascinating view into how these clusters come together. Thanks, Patrick!