The 360TB+ Studio NAS Build

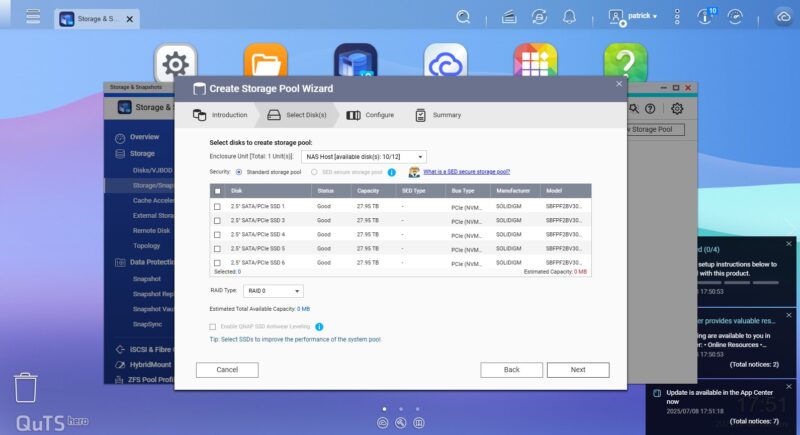

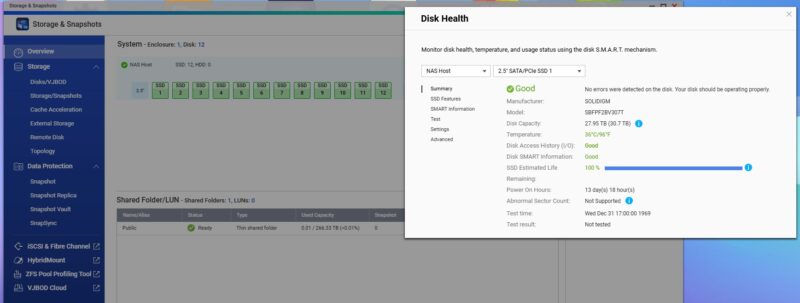

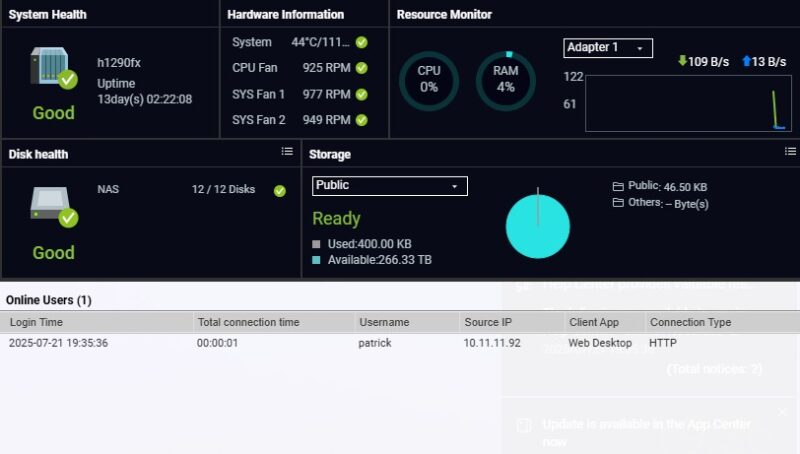

First, with the QNAP TS-h1290FX, we did the straightforward build and simply added the SSDs.

With that, we had a NAS with just over 360TB of raw capacity, which was great. The SSDs also ran at very reasonable temperatures.

Still, it felt like we could get more from the machine. The 8-core AMD EPYC 7232P was fine for a NAS, but this was an SP3 socket, and since SP3 supports 64-core CPUs, there was plenty of room to improve.

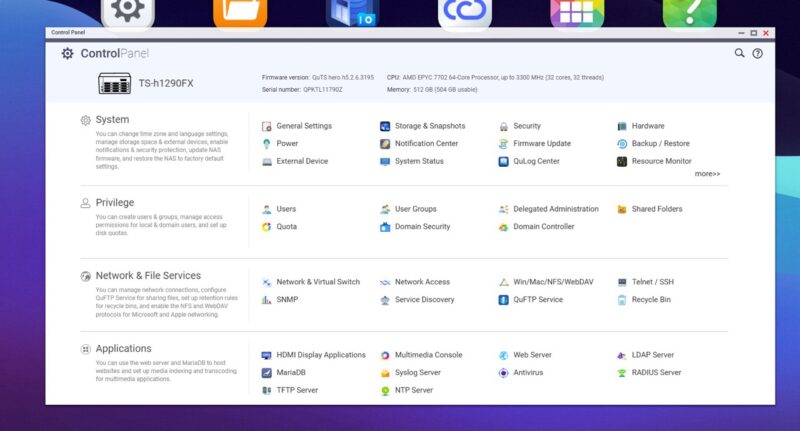

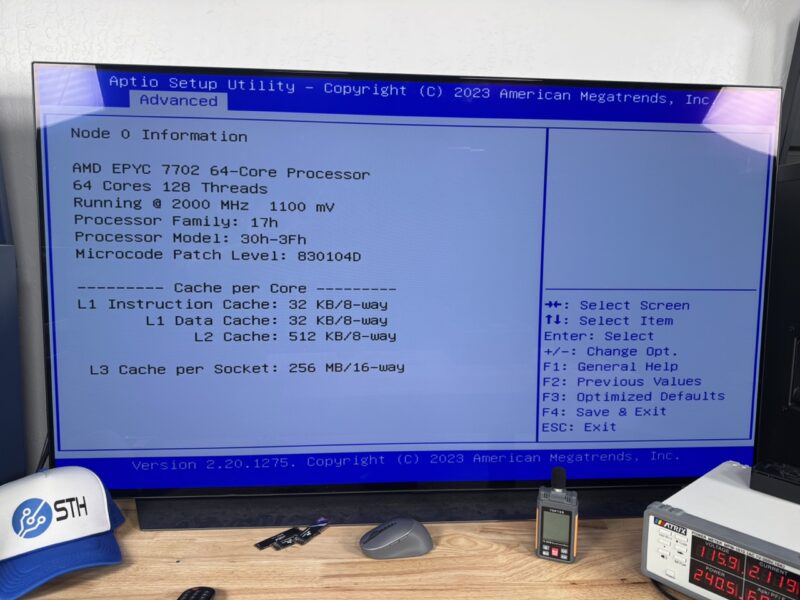

Back in 2019-2020, AMD sent us a 64-core AMD EPYC 7702. That felt like a huge upgrade, so we installed it along with 512GB of memory.

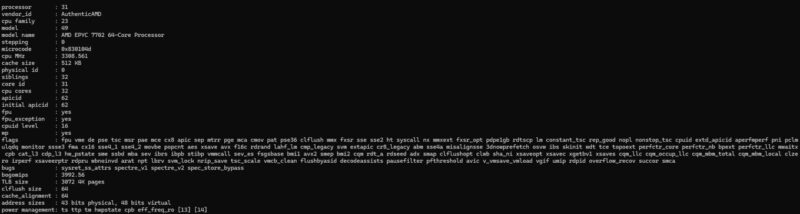

A 64-core/128-thread NAS with 512GB of memory seemed like a great idea. It booted without issue, but then we noticed that the CPU was being reported as only 32 cores/32 threads. We fired up SSH and saw that it was only 32 threads there as well.

At this point, we thought it was a BIOS setting, so we installed a GPU and went into the BIOS. There, the CPU showed up as 64 cores/128 threads, but we did not have an option to enable more cores.

It was very odd indeed. When we tried other higher core count SKUs, we were stuck at the same 32-thread maximum. Eventually, we installed an AMD EPYC 7302P, a 16-core/32-thread CPU, which worked great. That might be because it is one of the CPU options QNAP offers for this NAS.
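A quick way to confirm what the operating system actually sees, as opposed to what the BIOS reports, is to read `/proc/cpuinfo` over SSH. The sketch below assumes a Linux-based OS that exposes `/proc/cpuinfo` with the usual `processor`, `physical id`, and `core id` fields; `count_cpus` is a hypothetical helper for illustration, not a QNAP tool. It counts logical threads and distinct physical cores, so a capped 64-core/128-thread part would report 32 threads rather than 128.

```python
import os


def count_cpus(cpuinfo_text: str) -> tuple[int, int]:
    """Return (logical_threads, physical_cores) parsed from /proc/cpuinfo text."""
    logical = 0
    cores = set()
    physical_id = core_id = None
    # Append an empty line so the final processor stanza is flushed too.
    for line in cpuinfo_text.splitlines() + [""]:
        if not line.strip():
            # A blank line ends one processor stanza; record its (socket, core) pair.
            # SMT siblings share the same pair, so they count as one physical core.
            if physical_id is not None and core_id is not None:
                cores.add((physical_id, core_id))
            physical_id = core_id = None
            continue
        key, _, value = line.partition(":")
        key, value = key.strip(), value.strip()
        if key == "processor":
            logical += 1  # every stanza is one logical CPU (thread)
        elif key == "physical id":
            physical_id = value
        elif key == "core id":
            core_id = value
    return logical, len(cores)


if __name__ == "__main__":
    if os.path.exists("/proc/cpuinfo"):
        with open("/proc/cpuinfo") as f:
            threads, cores = count_cpus(f.read())
        print(f"{threads} threads across {cores} physical cores")
    # os.cpu_count() reports the number of logical CPUs as a cross-check.
    print("os.cpu_count():", os.cpu_count())
```

With SMT enabled, a healthy EPYC 7302P should show twice as many threads as physical cores (32 and 16).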

With that, we had a NAS that was easy to edit on, we could have two clients doing 20-25Gbps of transfers to and from it, and it felt like we had an awesome configuration. With more memory and compute, the next question was what else we could do with it.

Going Beyond Just a Studio NAS for Video and AI

Next, we thought about some of our other goals:

- 100Gbps(+) of networking connectivity for AI nodes accessing models stored on the NAS

- A GPU for local transcoding but also any video analytics that we needed

- Set up some basic VMs to consolidate workloads running on smaller machines in the studio

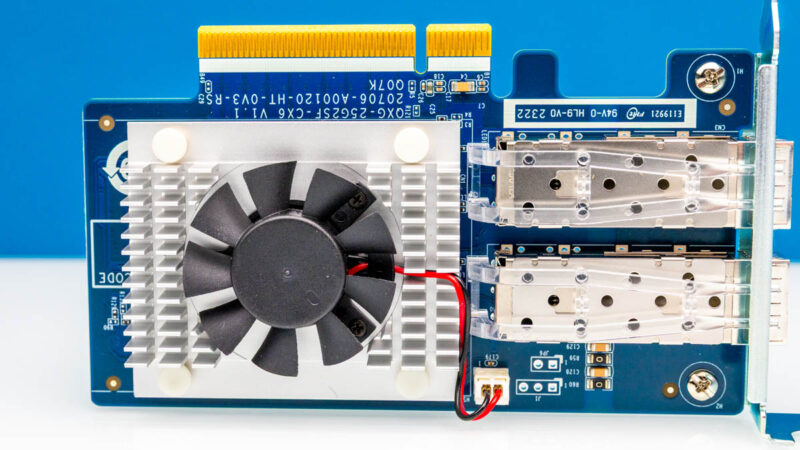

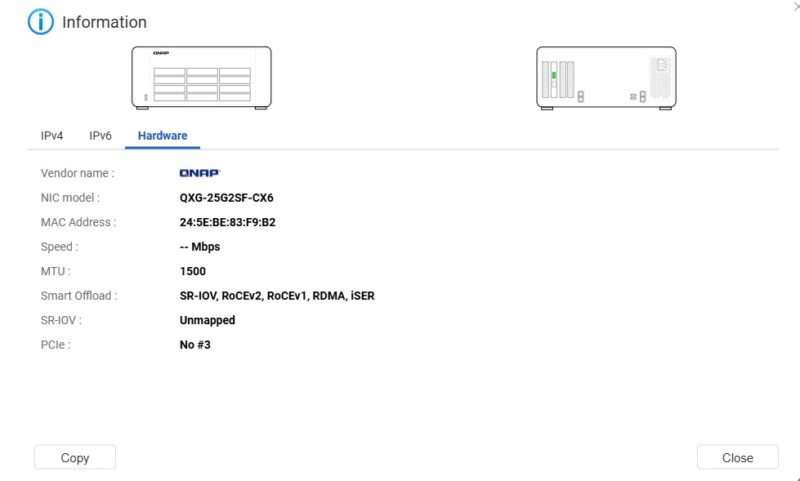

Starting with the network side, we had 2x 25GbE and 2x 2.5GbE onboard NICs. We tried 100GbE NICs, but the optics got fairly hot, while DACs tended to work well, especially with ConnectX-6 NICs. Still, we ended up trying, and liking, the QNAP 25GbE solution since it has a fan.

One other benefit is that the QNAP NIC shows up and reports properly in the UI.

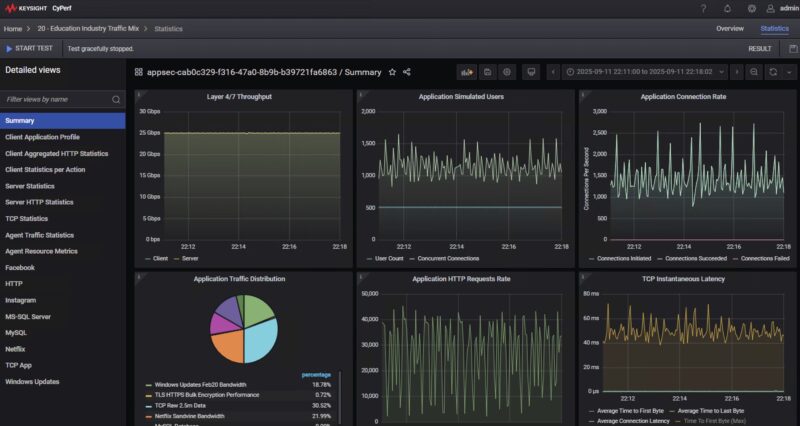

We tested this NIC in our QNAP QXG-25G2SF-CX6 25GbE NVIDIA ConnectX-6 Lx NIC Mini-Review using our new Keysight CyPerf testing tool, and we saw great bandwidth running a router VM on the system and pushing data through the NIC.

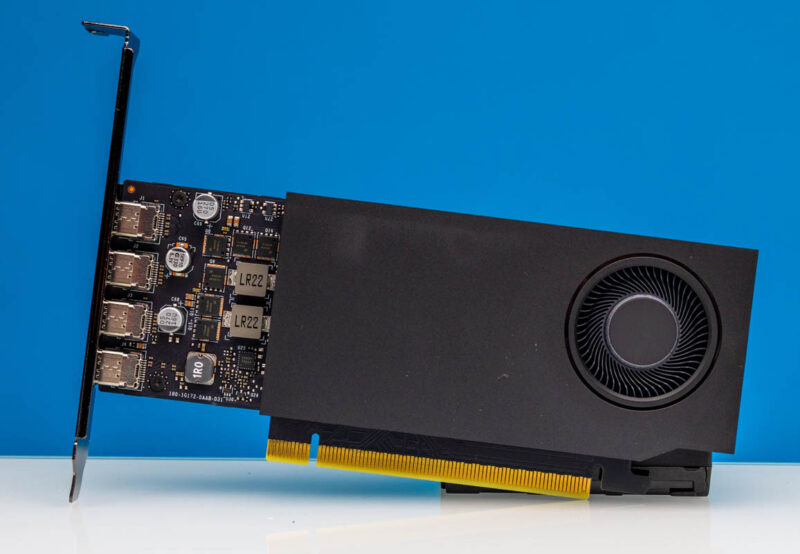

Once we added networking, the next step was the GPU. We ended up trying a few different GPUs but settled on the lower-performance, lower-power NVIDIA RTX A1000.

The NAS did not come with a GPU or even onboard graphics. Adding the NVIDIA RTX A1000 enables NVR video analytics, local transcoding of data on the SSD storage, display output for VMs and the system, and even lightweight LLMs running directly on the NAS.

A key trend we expect in the market is adding GPUs to network storage arrays so they can generate insights from the data they store. For example, one can take photos and use a vector database to index their key attributes. From there, instead of having to search for all photos that have cars in them, one can search for photos with blue cars and have the storage array return just the nearest matching images.
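To make the blue-car example concrete, here is a minimal sketch of nearest-neighbor search by cosine similarity. The three-dimensional embeddings and file names are hypothetical stand-ins; a real pipeline would generate much higher-dimensional vectors with an image embedding model running on the GPU, and a vector database would typically use an approximate index rather than the brute-force scan shown here.

```python
import math


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nearest(query: list[float], index: dict[str, list[float]], k: int = 2) -> list[str]:
    """Return the k photo names whose embeddings are most similar to the query."""
    scored = sorted(index.items(), key=lambda item: cosine(query, item[1]), reverse=True)
    return [name for name, _ in scored[:k]]


# Hypothetical toy embeddings; the dimensions loosely mean (car, blue, outdoor).
photo_index = {
    "IMG_0001.jpg": [0.9, 0.8, 0.7],  # blue car parked outside
    "IMG_0002.jpg": [0.9, 0.1, 0.6],  # red car
    "IMG_0003.jpg": [0.1, 0.9, 0.9],  # blue sky, no car
    "IMG_0004.jpg": [0.8, 0.9, 0.2],  # blue car in a garage
}
query_blue_car = [1.0, 1.0, 0.0]

print(nearest(query_blue_car, photo_index, k=2))  # → ['IMG_0004.jpg', 'IMG_0001.jpg']
```

The red car and the blue sky each match only one attribute, so they rank below the two blue-car photos even though every image scores above zero.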

We are also using this to host AI models for many of the systems we review in the studio.

Final Words

We will have more on the QNAP TS-h1290FX in a future review. Still, the impact was clear. When we had thought about doing this project in 2023 with 7.68TB drives, the math was rough: with only around 90TB of capacity in a NAS unit, it was hard to justify undertaking a project like this. Fast forward two years, and with the Solidigm D5-P5336 30.72TB drives that were loaned to us, we had a denser NAS than if we had built around 3.5″ hard drives, with a completely different level of performance.

That meant that while we set out to store just our video footage on the NAS, it has ended up being a store for AI models that we serve to different systems in the studio. Not only did we have a relatively easy NAS for our video storage in the studio, but we also had a system that could take on the load of some of our infrastructure virtual machines and containers. Add to that the GPU for local transcoding and new AI models, and this is a far better project than we would have embarked upon a few years ago, before large-capacity SSDs became mainstream.

As drive capacities continue to increase, this little machine can scale to over 1.4PB of storage using today’s drives. We tested both the 61.44TB and 122.88TB versions of the D5-P5336 in here as well, although we only had single drives. The idea of almost 1.5PB of storage in a quiet desktop chassis is really cool. Our hope is that over the next few years, if new drive technologies develop that lower the cost of larger drives, we can upgrade this with even more storage capacity than we have today.
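The capacity figures above follow from simple multiplication across the chassis’s twelve drive bays. This sketch assumes all twelve bays hold identical drives and reports raw decimal terabytes, before any RAID parity or filesystem overhead.

```python
BAYS = 12  # TS-h1290FX drive bays, assumed fully populated with identical drives


def raw_capacity_tb(drive_tb: float, bays: int = BAYS) -> float:
    """Raw capacity in decimal TB, before RAID parity or filesystem overhead."""
    return drive_tb * bays


for drive_tb in (7.68, 30.72, 61.44, 122.88):
    total = raw_capacity_tb(drive_tb)
    print(f"{drive_tb:6.2f}TB x {BAYS} bays = {total:8.2f}TB ({total / 1000:.2f}PB)")
```

This yields 92.16TB for 7.68TB drives, 368.64TB for 30.72TB drives, 737.28TB for 61.44TB drives, and 1,474.56TB (about 1.47PB) for 122.88TB drives, which lines up with the “around 90TB”, “just over 360TB”, and “over 1.4PB” figures.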

We will be featuring this platform in the future since it has become a cornerstone of studio operations here at STH.

These are always my favorite articles on STH. What you don’t mention, and what I’m sure you’re going to get some blowback on here, is that QNAP is popular in TikTok, Instagram, and YouTube video production because it’s so easy to use. We’re tech savvy on STH, but if you’re a gorgeous beauty channel that needs storage but doesn’t care about installing your own NAS OS, then it’s much easier to use a pre-built like this QNAP, and the QNAP is priced better than a Dell NAS.

Looks like a standard ATX format PSU, so you could probably find a dual PSU in that form factor to add a little more redundancy. From a quick Google search, something like the FSP Twin Pro 500W (I don’t know that this is even a good PSU model, just that there are dual units that fit in the ATX size). The EPYC 7002 is a little long in the tooth now, but it has solid performance and lower power consumption than the latest. You only care about CPU efficiency if the CPU runs out of breath before the network bandwidth does; at 100Gbps it might, but not badly. Nice little server; it’s more than a NAS really with that CPU in there.

@Patrick Did you contact QNAP and ask why they have a 32-thread limit?

I suppose if you’ve got $36,000 in NVMe SSDs just sitting around, this might make sense, but I doubt any homelabbers will try this setup. For that price, they could likely build a 1PB cluster, and any company that can afford to drop $36k on a storage server will likely go for something more professional grade.

Tim, I think you’ve missed the video production angle. There are so many of those shops. Camera stuff is $100 for a $2 piece of metal. Even used, an SSD Dell NAS/SAN would be four times the cost of this and would be harder to set up.

Interesting project, but it seems impractical for all but people in similar shoes: wanting fast access to large amounts of video (and making money on it, and getting freebies/discounts from vendors). I don’t have a use case for this in my homelab, but it’s interesting nonetheless.

Tim, what do you consider professional grade? This NAS has server hardware running it. At my work, we use a QNAP NAS for backups in production, and it works great. We have a ZFS filesystem on it with NVMe write caching on a 25Gb network.

Am I correct when I say:

– Qnap ZFS is a fork of an older OpenZFS

– Qnap pools are not compatible/moveable from/to OpenZFS

– Newest OpenZFS features like RAID-Z vdev expansion, dRAID, fast dedup, vdev rewrite, or special vdev as SLOG are not available in Qnap ZFS and most probably never will be, due to separate ZFS development

Hey Patrick, here’s my 2¢.

ZFS, a video accelerator less powerful than the AMD Alveo U30 Data Center Accelerator Card, and SCM (since you are going Solidigm) like the D7-P5810.

I would like the article to lay out the actual price of all the hardware, assuming it was purchased and not donated/sponsored.

Give the readers a feel for what the actual outlay is for something equivalent, rather than vaguely indicating it can be pricey.

360TB raw, but how did you actually set it up? RAID 5, 6, or 10?

I’d really like to see 8-16TB SSDs become price competitive with HDDs. I’d be very happy with SAS 12/24Gb speeds if it meant they were cheaper. I’ll be limited by 10GbE networking anyway.

I’d also really like to see some low-cost PCIe switches/fanout cards, ideally with the ability to do PCIe generation conversion. Plug the card into a 5.0 x16 slot and get 32 4.0 lanes, or 64 3.0 lanes, or a mix of the two. Either that or more PCIe lanes on desktop platforms.
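The lane math in that comment holds because each PCIe generation from 3.0 to 5.0 doubles the per-lane transfer rate (8, 16, and 32 GT/s) while keeping 128b/130b encoding. This sketch computes approximate one-direction link bandwidth, counting only encoding overhead and ignoring packet and protocol overhead, so the numbers are upper bounds rather than measured throughput.

```python
# Per-lane raw transfer rate in GT/s for PCIe generations that use 128b/130b encoding.
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}


def link_gbytes_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s (encoding overhead only)."""
    return GT_PER_S[gen] * (128 / 130) / 8 * lanes


for gen, lanes in ((5, 16), (4, 32), (3, 64)):
    print(f"PCIe {gen}.0 x{lanes}: {link_gbytes_per_s(gen, lanes):.1f} GB/s")
```

All three configurations come out to about 63.0 GB/s per direction, which is why a switch that converts one 5.0 x16 uplink into 32 4.0 lanes or 64 3.0 lanes would not, on paper, lose aggregate bandwidth.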

I have this system with the 32-core CPU / 256GB RAM, 32TB Solidigms, a couple M.2 SSDs on a PCIe card internally for OS mirror, and installed TrueNAS Scale CE instead of QNAP’s OS. I have a production company where we mostly shoot ARRIRAW from Alexa LF, working in Resolve/Nuke/etc., and I needed something that a small workgroup could use for this but also that I could fly with as I am on-location a lot. This has worked like a charm (2 years in). I put it in a hard case and fly all around with it, have an extra 4-port 25Gb NIC and I can just plug 6 computers into it directly and all can work RAW native without any issues. I can have a few editors, a DIT, and an LTO backup all running at the same time.

I wish there were more compact server-class builds like this. I was going to engineer my own and have a custom Protocase built, but then this came out and it was close enough to my needs that I just went with it to make it easy.

Great as always.

Super cool project, but at this point calling it “Serve the Home” has really jumped the shark.

Super feedback Tyler! The direct connection is a great idea as well.

@Scott – not sure what I can tell you. Do you often ask why the Wall Street Journal covers topics beyond a single road in NYC?

@Rob We did RAIDZ2 – Using the calculator, RAIDZ was probably OK given the better reliability and faster rebuilds with the SSDs, but it seemed wise to get the much higher reliability.
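For a rough sense of what the RAIDZ2 choice costs in capacity, the sketch below assumes a single 12-wide vdev of 30.72TB drives (the vdev layout is not stated) and counts only data drives, ignoring ZFS metadata, padding, and reserved space, so real usable space will be somewhat lower. `raidz_usable_tb` is a hypothetical helper for illustration.

```python
def raidz_usable_tb(drives: int, drive_tb: float, parity: int) -> float:
    """Rough usable capacity: data drives x drive size, ignoring ZFS overhead."""
    if not 1 <= parity <= 3:
        raise ValueError("RAIDZ parity must be 1, 2, or 3")
    if drives <= parity:
        raise ValueError("need more drives than parity devices")
    return (drives - parity) * drive_tb


for parity in (1, 2):
    usable = raidz_usable_tb(12, 30.72, parity)
    print(f"RAIDZ{parity}: ~{usable:.2f}TB usable, tolerates {parity} drive failure(s)")
```

Under these assumptions, RAIDZ1 leaves about 337.92TB and RAIDZ2 about 307.20TB, so the second parity drive gives up roughly one drive’s worth of space in exchange for surviving a second failure during a rebuild.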

The Wall Street Journal reference is kind of apples and oranges. Not that I don’t appreciate these articles, but I remember when the site had a lot of homelab content. There is still some of that, but most of it seems to be centered around uber-expensive, enterprise-level datacenter equipment that no one in their right mind would have in a home lab. I would just enjoy seeing more home lab content again is all, and from the looks of other commenters, I’m not the only one. Regardless, your content is great, and appreciated.

That’s a lovely NAS, good spot. But how many STH customers can really drop £50k on a desktop NAS?

@Tyler Out of curiosity, after installing TrueNAS Scale, were you able to use all 64 CPU threads?