Today, AWS made the much-anticipated announcement of Graviton4, which should be available in 2024. This is AWS’s latest Graviton processor and the fourth generation launched in the last five years. The company also announced its second-generation Trainium2 processor for AI workloads.

AWS Graviton4 is an Even Bigger Arm Server Processor

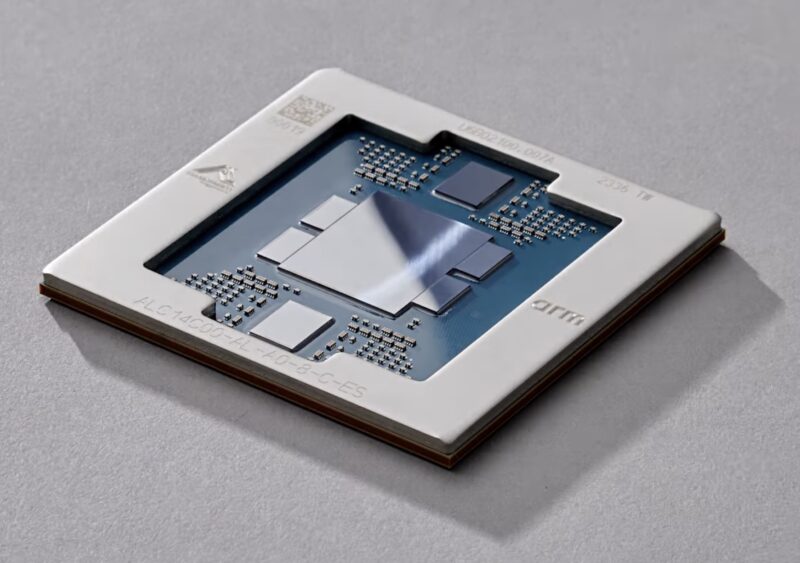

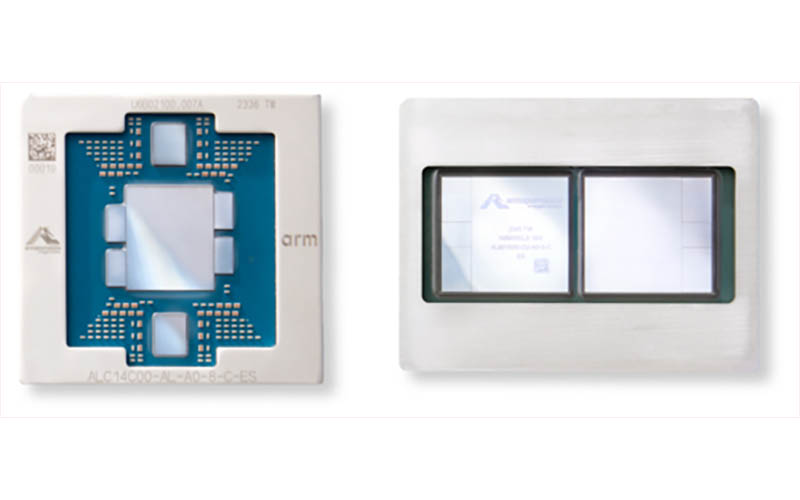

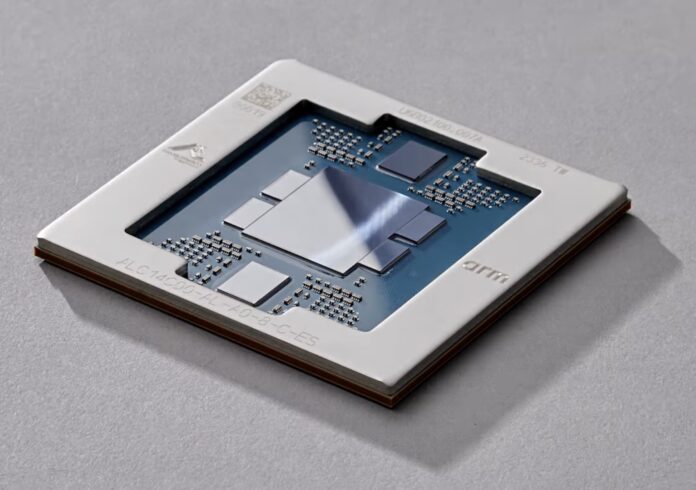

AWS continues down the path it started with its Annapurna Labs acquisition years ago and is now on its fourth generation of Graviton Arm CPUs. Compared with Graviton3, AWS says Graviton4 has 50% more cores (now 96) and 75% more memory bandwidth (faster DDR5 across 12 channels), and delivers up to 30% better overall compute performance.
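As a back-of-envelope check on those ratios, here is a minimal sketch of peak theoretical DRAM bandwidth (channels × transfer rate × 8 bytes per 64-bit channel). The memory configurations are assumptions, not figures from this article: Graviton3 at 64 cores with 8 channels of DDR5-4800, and Graviton4 with 12 channels of DDR5-5600. Treat it as illustrative arithmetic, not a measured result.

```python
# Back-of-envelope check of the Graviton4 core and memory-bandwidth claims.
# ASSUMPTIONS (not from the article): Graviton3 = 64 cores, 8 x DDR5-4800;
# Graviton4 = 96 cores, 12 x DDR5-5600; each channel moves 8 bytes per transfer.

def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int,
                       bytes_per_transfer: int = 8) -> float:
    """Theoretical peak DRAM bandwidth in decimal GB/s."""
    return channels * mega_transfers_per_s * bytes_per_transfer / 1000

graviton3_bw = peak_bandwidth_gbs(channels=8, mega_transfers_per_s=4800)
graviton4_bw = peak_bandwidth_gbs(channels=12, mega_transfers_per_s=5600)

core_uplift = 96 / 64 - 1                 # relative increase in core count
bw_uplift = graviton4_bw / graviton3_bw - 1  # relative increase in bandwidth

# Bandwidth per core shows whether memory scaled faster than compute.
g3_per_core = graviton3_bw / 64
g4_per_core = graviton4_bw / 96

print(f"Graviton3: {graviton3_bw:.1f} GB/s ({g3_per_core:.1f} GB/s per core)")
print(f"Graviton4: {graviton4_bw:.1f} GB/s ({g4_per_core:.1f} GB/s per core)")
print(f"Core uplift: {core_uplift:.0%}, bandwidth uplift: {bw_uplift:.0%}")
```

Under these assumptions, the extra channels plus the faster DIMMs land exactly on the 75% figure, and bandwidth per core rises slightly even with 50% more cores.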

AWS is doing something interesting here, closer to what Ampere is doing with AmpereOne: a design built around a sea of cores with I/O surrounding the chip. Intel will start to go that route next year with Granite Rapids and Sierra Forest, but not to the same extent. What is really interesting is that when we talk to startups and companies building chiplets, they tell us that cloud customers are asking for I/O hub designs more like AMD's EPYC. As we continue into the chiplet era, chip package designs are going to get a lot more exciting.

As part of today’s announcement, AWS stated that it has now made over 2 million Graviton Arm CPUs.

Trainium2 for AI

AWS Trainium2 is the company's new AI accelerator, aimed at pushing back against NVIDIA's stranglehold on the market. AWS says the new parts offer up to 4x the performance and up to 2x the energy efficiency of the first-generation Trainium parts it announced three years ago. AWS is also transitioning its accelerator-heavy portfolio to liquid cooling. We expect all of AWS's high-end accelerators to be liquid-cooled by 2024. Feel free to take a look at some of the liquid cooling options from traditional server vendors that we have covered on STH as well.
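The two Trainium2 ratios together say something about power draw. A minimal sketch, assuming "energy efficiency" means performance per watt and that both ratios are measured against first-generation Trainium on the same workload (AWS does not spell this out here):

```python
# What "up to 4x performance at up to 2x energy efficiency" implies for power.
# ASSUMPTION: energy efficiency = performance per watt, both ratios vs. Trainium gen 1.

def implied_power_ratio(perf_ratio: float, efficiency_ratio: float) -> float:
    """Return new-chip power relative to the baseline chip.

    Since perf_per_watt = perf / power, it follows that power = perf / perf_per_watt.
    """
    return perf_ratio / efficiency_ratio

trainium2_power_vs_gen1 = implied_power_ratio(perf_ratio=4.0, efficiency_ratio=2.0)
print(f"Implied per-chip power vs. Trainium (gen 1): {trainium2_power_vs_gen1:.1f}x")
```

If both headline figures apply at once, each Trainium2 would draw roughly twice the power of its predecessor, which would be consistent with the move toward liquid cooling.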

Trainium2 is designed to be deployed in instances of 16 chips. What is perhaps more exciting is that AWS says it can scale clusters to up to 100,000 chips. That is more accelerators than AWS's newly announced NVIDIA DGX Cloud offering. Of course, that assumes one can actually get access to Trainium2, as AI accelerators in the cloud are hard to come by.
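To put the cluster sizes in perspective, here is a small sketch of the arithmetic. It assumes a maximal cluster of exactly 100,000 chips built from whole 16-chip instances, and it uses the roughly 16,000 GH200 figure the article cites for AWS's NVIDIA cluster; the constant names are illustrative only.

```python
# Rough sizing of a maximal Trainium2 cluster.
# ASSUMPTIONS: exactly 100,000 chips, 16 chips per instance, ~16,000 GH200 for comparison.

CHIPS_PER_INSTANCE = 16
MAX_CLUSTER_CHIPS = 100_000
GH200_CLUSTER_CHIPS = 16_000

instances = MAX_CLUSTER_CHIPS // CHIPS_PER_INSTANCE   # whole 16-chip instances
leftover = MAX_CLUSTER_CHIPS % CHIPS_PER_INSTANCE     # chips that would not fill an instance
scale_vs_gh200 = MAX_CLUSTER_CHIPS / GH200_CLUSTER_CHIPS

print(f"{instances} instances ({leftover} chips left over)")
print(f"{scale_vs_gh200:.2f}x the accelerator count of the GH200 cluster")
```

Note that this compares chip counts only. A Trainium2 and a GH200 are very different parts, so a larger count does not by itself mean more aggregate compute.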

Final Words

While AWS and NVIDIA announced that AWS would host a 16,000-GH200 cluster, which alone will draw over 16 megawatts just for the GH200 Superchips, it is pretty easy to see that AWS' strategy is to offer whatever is popular in the market, plus its own hardware. Although large cloud providers often pay a quarter, a fifth, or less of list price for mainstream CPUs from the big chipmakers, that is not the case for AI accelerators. There is a good chance AWS is looking at a 10-figure-plus (billion-dollar-plus) spend with NVIDIA, looking at NVIDIA's margins, and thinking about how its own silicon can do in the AI training market what Graviton has done for CPUs.
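The 16 megawatt figure follows from simple multiplication. A minimal sketch, assuming each GH200 Superchip runs at roughly its 1,000 W upper configurable module power (an assumption, not a number from this article):

```python
# Power estimate for the GH200 modules alone.
# ASSUMPTION: ~1,000 W per GH200 Superchip (upper end of its configurable power range).

GH200_COUNT = 16_000
WATTS_PER_GH200 = 1_000

total_mw = GH200_COUNT * WATTS_PER_GH200 / 1_000_000  # watts -> megawatts
print(f"{total_mw:.1f} MW for the GH200 modules alone")
```

This covers only the superchips themselves; networking, storage, and cooling overhead would add to the facility total.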

Trainium2 is spelled incorrectly several times in the article.

Tranium is Amazon’s Rainbow AI processor?

Johannes – trying to get both spellings since AWS used both today.

“Silicon underpins every customer workload, making it a critical area of innovation for AWS,” said David Brown, vice president of Compute and Networking at AWS. “By focusing our chip designs on real workloads that matter to customers, we’re able to deliver the most advanced cloud infrastructure to them. Graviton4 marks the fourth generation we’ve delivered in just five years, and is the most powerful and energy efficient chip we have ever built for a broad range of workloads. And with the surge of interest in generative AI, Tranium2 will help customers train their ML models faster, at a lower cost, and with better energy efficiency.” Source: AWS Press Release