In Part 1 of our launch day coverage, we discussed the basic architecture of the AMD EPYC 7000 series CPUs. AMD, perhaps because of its GPU business, embraces the idea that the devices plugged into a server may be more valuable than the CPUs themselves. Intel, by contrast, still starts conversations with the CPU at the center of the platform. In GPU compute, virtualization, and storage servers, components such as GPUs, RAM, and SSDs/HDDs often make up a larger share of the system cost than the CPUs. Embracing that reality, AMD has designed a unique set of platform-level features that will interest those looking beyond CPU-bound compute applications.

AMD EPYC 7000 Series Platform-Level Features

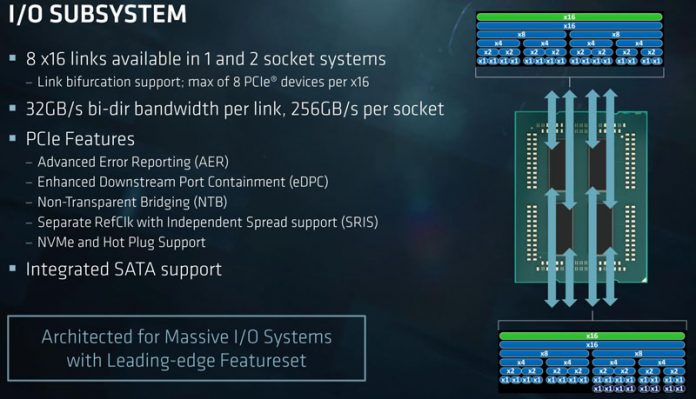

Understanding the AMD EPYC 7000 series platform-level features is key to understanding AMD’s design philosophy. AMD has two x16 links per physical die. In dual socket configurations, one of these x16 links on each die is used for socket-to-socket Infinity Fabric instead of PCIe. With four dies per package, that means AMD EPYC can have up to 128 PCIe lanes in both single and dual socket configurations: 4 dies × 2 links × 16 lanes with one socket, or 2 sockets × 4 dies × 1 link × 16 lanes with two.
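The lane arithmetic above can be sketched in a few lines of Python. This is a minimal model, assuming four dies per EPYC 7000 package (as covered in Part 1) and exactly one x16 link per die given over to socket-to-socket Infinity Fabric in 2P; `pcie_lanes` and the constants are illustrative names, not an AMD API.

```python
# Assumptions: four dies per EPYC 7000 package, two x16 links per die.
DIES_PER_SOCKET = 4
LINKS_PER_DIE = 2
LANES_PER_LINK = 16


def pcie_lanes(sockets: int) -> int:
    """PCIe lanes left for devices in a 1P or 2P EPYC 7000 system."""
    if sockets not in (1, 2):
        raise ValueError("EPYC 7000 supports one or two sockets")
    # In 2P, one x16 link per die carries socket-to-socket Infinity Fabric.
    pcie_links_per_die = LINKS_PER_DIE if sockets == 1 else LINKS_PER_DIE - 1
    return sockets * DIES_PER_SOCKET * pcie_links_per_die * LANES_PER_LINK


print(pcie_lanes(1), pcie_lanes(2))  # 128 128
```

The 2P system doubles the dies but halves the PCIe links per die, which is why the total stays at 128.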

PCIe bifurcation is a big deal in storage applications. Here AMD can support up to eight PCIe devices per x16 link. For storage system builders, AMD now has a very interesting platform not just for NVMe storage arrays of x4 devices, but also for dual-port NVMe arrays where each drive gets an x2 link.
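As a rough sketch of what bifurcation means for drive counts, the Python below assumes every x16 link is split evenly into devices of one width and ignores lanes needed for NICs, boot devices, or the BMC, so the totals are theoretical ceilings rather than figures for any shipping chassis. The helper names are hypothetical.

```python
LANES_PER_LINK = 16               # each bifurcatable link is x16
SUPPORTED_WIDTHS = (2, 4, 8, 16)  # assumption: splits down to x2, per the article


def devices_per_link(width: int) -> int:
    """Number of equal-width devices one x16 link can be split into."""
    if width not in SUPPORTED_WIDTHS:
        raise ValueError(f"unsupported link width: x{width}")
    return LANES_PER_LINK // width


def max_drives(total_lanes: int, width: int) -> int:
    """Theoretical drive ceiling if every x16 link is bifurcated for storage."""
    links = total_lanes // LANES_PER_LINK
    return links * devices_per_link(width)


print(devices_per_link(2))  # 8 devices per x16 link at x2
print(max_drives(128, 4))   # 32 x4 NVMe drives
print(max_drives(128, 2))   # 64 x2 drives
```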

Up to eight of these PCIe lanes can be used as lower-speed SATA instead. We have seen Intel use similar technology in products such as its Atom C3000 series, codenamed “Denverton,” and we expect this to become a more common way of implementing SATA in the future. AMD has a forward-looking platform architecture here.

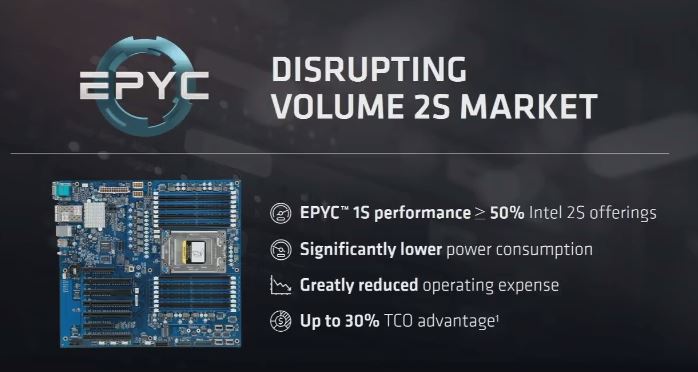

In single socket configurations, AMD can use the lanes otherwise reserved for the socket-to-socket Infinity Fabric interconnect as PCIe instead, which gives AMD a unique single socket proposition. One CPU can handle 128 PCIe lanes (more likely 96 usable for expansion once other platform features are implemented), SATA devices, and up to 16 DIMMs (2TB of memory). With only one CPU, AMD offers more PCIe lanes than, and similar memory capacity and core counts to, a huge swath of the dual-socket Intel Xeon E5-2600 V1-V4 market.
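The 2TB figure follows from EPYC 7000’s eight DDR4 channels per socket with two DIMMs per channel, which implies 128GB DIMMs. A small sketch of that arithmetic follows, with illustrative function names; it also reproduces the 32-slot, 4TB figure for the dual socket Supermicro server discussed below.

```python
CHANNELS_PER_SOCKET = 8  # DDR4 memory channels per EPYC 7000 socket
DIMMS_PER_CHANNEL = 2    # two DIMMs per channel


def dimm_slots(sockets: int) -> int:
    """Total DIMM slots in a 1P or 2P system."""
    return sockets * CHANNELS_PER_SOCKET * DIMMS_PER_CHANNEL


def max_memory_gb(sockets: int, dimm_gb: int) -> int:
    """Capacity when every slot holds a DIMM of size dimm_gb."""
    return dimm_slots(sockets) * dimm_gb


print(dimm_slots(1), max_memory_gb(1, 128))  # 16 2048 (2TB)
print(dimm_slots(2), max_memory_gb(2, 128))  # 32 4096 (4TB)
```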

We mentioned 96 lanes because in AMD’s architecture “everything is a PCIe lane.” We still expect to see some of those I/O lanes dedicated to SATA for boot devices. Likewise, BMCs such as the ASPEED AST2500 series are still present on these systems and take up some of that I/O:

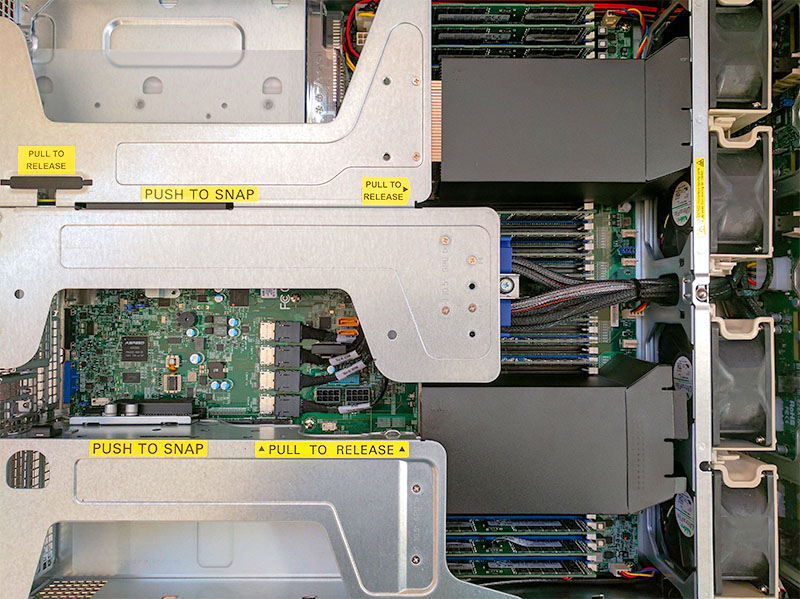

Still, the number of PCIe lanes is amazing. The Supermicro 2U “Ultra” server above has four x4 NVMe links, four PCIe x8 slots in the bottom (pictured) riser, two GPU-capable PCIe x16 slots, and additional PCIe slots for HBAs and NICs. Simply put, this one 2U server uses more PCIe lanes than Intel’s current generation platform offers. It also has 32 DIMM slots and can take up to 4TB of DDR4-2666 memory.
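A quick tally shows why. The sketch below counts only the links the article lists with explicit widths and leaves out the HBA and NIC slots, whose widths are not given. It assumes “current generation” means the Xeon E5-2600 V4 (Broadwell-EP) platform with 40 PCIe lanes per socket; the variable names are illustrative.

```python
# (count, lane width) for the links described on the Supermicro 2U "Ultra".
known_links = {
    "NVMe x4 links": (4, 4),
    "riser PCIe x8 slots": (4, 8),
    "GPU PCIe x16 slots": (2, 16),
}

# Assumption: Xeon E5-2600 V4 exposes 40 PCIe lanes per socket.
XEON_E5_V4_LANES_PER_SOCKET = 40

known_total = sum(count * width for count, width in known_links.values())
intel_2p_total = 2 * XEON_E5_V4_LANES_PER_SOCKET

print(known_total, intel_2p_total)  # 80 80
```

The listed links alone already match a dual-socket Xeon E5 V4 system’s 80 lanes, and the HBA and NIC slots come on top of that.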

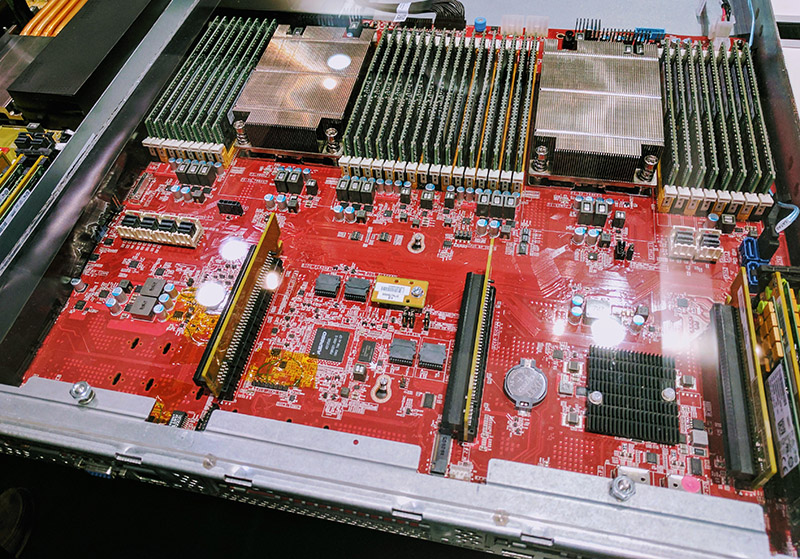

Here is another Microsoft OCP design using EPYC:

You can easily see the large number of PCIe lanes for accelerators and NVMe devices.

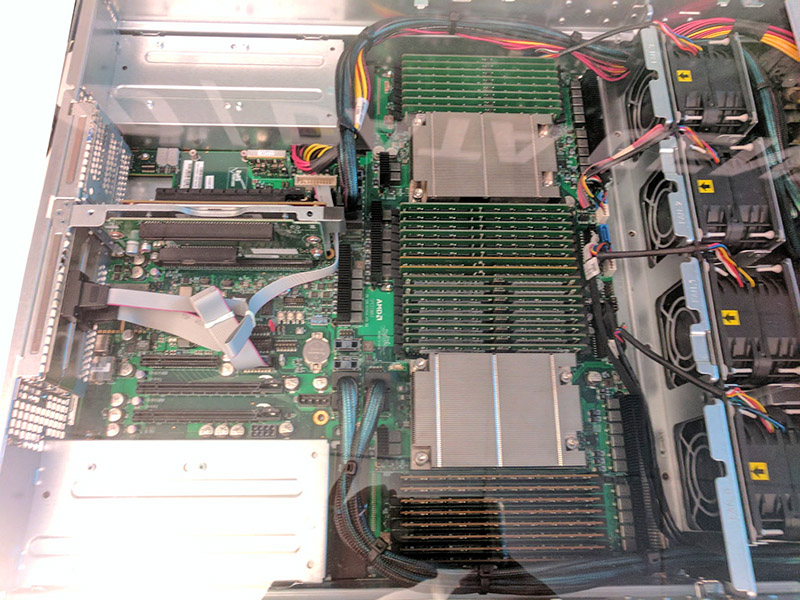

Here is a reference AMD “Speedway” platform that shows a similar story:

All three designs use ASPEED BMCs for standard management functions.

Final Words

We will have more on these platforms soon, but AMD clearly has a massive dual-socket platform. The single socket story is even more disruptive, as it keeps the same level of PCIe I/O while halving the number of NUMA nodes in the system. AMD is pushing this story, going as far as offering three SKUs targeted at single socket servers, but it will be up to vendors to prepare their sales teams to show customers why a new AMD platform can do more with one socket than their existing installed base of dual socket servers.
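To put numbers on the NUMA point, here is a sketch that assumes one NUMA node per die (four per EPYC 7000 package) and the 128 PCIe lanes available in both 1P and 2P configurations; the function names are hypothetical.

```python
DIES_PER_SOCKET = 4     # assumption: each die is its own NUMA node
TOTAL_PCIE_LANES = 128  # same in 1P and 2P, per the article


def numa_nodes(sockets: int) -> int:
    """NUMA nodes in the system under the one-node-per-die assumption."""
    return sockets * DIES_PER_SOCKET


def lanes_per_node(sockets: int) -> int:
    """PCIe lanes attached to each NUMA node on average."""
    return TOTAL_PCIE_LANES // numa_nodes(sockets)


for sockets in (1, 2):
    print(sockets, numa_nodes(sockets), lanes_per_node(sockets))
# 1 4 32
# 2 8 16
```

The single socket system keeps all 128 lanes while spreading them over half as many NUMA nodes.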

128 lanes sounds like a lot, but it’s not that impressive for something that’s essentially an 8-CPU system.

I’d like to see four HighPoint SSD7101A cards installed and running in one of these massive server motherboards. That AIC has good fundamentals, but it’s still a diamond in the rough.

Patrick, we never did hear whether HighPoint found an OEM partner for its RocketRAID 3840A. I’ve been looking, but no reviews have turned up anywhere on the Internet.

@Nils: There’s nothing stopping Intel from architecting 128 PCIe lanes into its monolithic dies (that is completely orthogonal to the number of dies), nor AMD for that matter. For Intel, doing what AMD has done would cannibalize part of its 2P system sales, so there’s clearly no incentive for marketing to push for it. Now, they might have to.

Also, it’s 128 lanes in 1P too, so it’s essentially a 4-CPU system.

@dfx2

I think Intel already got cold feet about offering too much I/O on a single die, given how many customers switched to the cheaper Xeon-D, which offers a lot of I/O and decent performance, instead of the Xeon E3, which is crippled with regard to I/O, or the more expensive Xeon E5 in a single socket configuration. I believe clients would prefer a single die in a compact server to a large NUMA setup.

If AMD or someone else were to deliver 8 cores and 64 lanes on a single die, I think it would fly off the shelves, as would an SoC with integrated 40GbE and 32 lanes.

It doesn’t surprise me in the least that there is no known successor to Broadwell-DE.

@Nils: I’d expect AMD’s on-package NUMA to have lower latencies than off-socket NUMA on Skylake (we’ll soon find out, but the dies are millimeters apart instead of tens of centimeters). So if decent performance is all you want, you needn’t bother optimizing for NUMA. Also, I think AMD has an 8-core EPYC variant with the full set of PCIe lanes for $400(?).

dfx2:

Well, the difference is that with Intel you can get a lot of cores per CPU without bothering with NUMA at all, and two nodes are a lot better than eight.