Today is a big day. Amazon AWS launched new deep learning / AI focused instances featuring NVIDIA Tesla V100 GPUs. The Tesla V100 brings NVIDIA's latest technology, including the new Tensor Core. We covered the Tensor Core's benefits and the Tesla V100 architecture in our NVIDIA Tesla V100 Volta Update at Hot Chips 2017. Suffice it to say, these are monster chips, and the deep learning / AI crowd has been eagerly anticipating them. At the same time, the V100 is very costly. If you train models only infrequently, buying an 8x NVIDIA Tesla V100 server at its rumored going price of ~$150K may be less cost-effective than running the workload in the cloud.

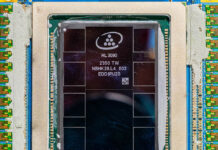

Each NVIDIA Tesla V100 Volta-generation GPU has 5,120 CUDA Cores and 640 Tensor Cores. Together, they provide 125 TFLOPS of mixed-precision (Tensor Core) performance, 15.7 TFLOPS of single-precision (FP32) performance, and 7.8 TFLOPS of double-precision (FP64) performance.
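To put the per-GPU figures in instance terms, here is a minimal sketch that multiplies the quoted V100 peaks by the GPU count of each P3 size (1, 4, and 8, from the pricing table in the next section). The dictionary names and the `aggregate_peak` helper are hypothetical, and the result assumes perfect linear scaling, which real multi-GPU training never achieves, so treat the numbers as a theoretical ceiling only.

```python
# Per-GPU theoretical peaks for the Tesla V100, as quoted in the article (TFLOPS).
V100_PEAK_TFLOPS = {
    "mixed_precision_tensor": 125.0,
    "fp32": 15.7,
    "fp64": 7.8,
}

# GPU counts per P3 instance size (from the article's instance table).
P3_GPU_COUNT = {
    "p3.2xlarge": 1,
    "p3.8xlarge": 4,
    "p3.16xlarge": 8,
}


def aggregate_peak(instance: str) -> dict:
    """Hypothetical helper: per-GPU peak times GPU count.

    Assumes perfect scaling across GPUs, so this is an upper bound,
    not an expected training throughput.
    """
    gpus = P3_GPU_COUNT[instance]
    return {kind: tflops * gpus for kind, tflops in V100_PEAK_TFLOPS.items()}


if __name__ == "__main__":
    for name in P3_GPU_COUNT:
        peaks = aggregate_peak(name)
        summary = ", ".join(f"{k}={v:.1f}" for k, v in peaks.items())
        print(f"{name}: {summary} TFLOPS")
```

For a p3.16xlarge this works out to a nominal 1,000 TFLOPS of mixed-precision Tensor Core throughput on paper.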

The multi-GPU Amazon AWS EC2 P3 instances (p3.8xlarge and p3.16xlarge) also include NVLink for ultra-fast GPU-to-GPU communication. At STH, we already have figures for 8-way NVLink-enabled Tesla P100 and V100 systems, which we will be publishing soon.

Amazon AWS EC2 P3 Instances Specs and Pricing

Here is a table of the three instance types with hourly on-demand pricing based on the N. Virginia list prices.

| Instance Size | Tesla V100 GPUs | GPU Peer-to-Peer | GPU Memory (GB) | vCPUs | Memory (GB) | Network Bandwidth | EBS Bandwidth | $/hour |
|---|---|---|---|---|---|---|---|---|
| p3.2xlarge | 1 | N/A | 16 | 8 | 61 | Up to 10Gbps | 1.5Gbps | $3.06 |
| p3.8xlarge | 4 | NVLink | 64 | 32 | 244 | 10Gbps | 7Gbps | $12.24 |
| p3.16xlarge | 8 | NVLink | 128 | 64 | 488 | 25Gbps | 14Gbps | $24.48 |

At the time of publication, P3 instances are not available in every Availability Zone (AZ).
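One thing the table makes easy to check is that pricing scales linearly with GPU count. The sketch below derives the per-GPU hourly price and a rough monthly bill from the on-demand list prices above. The 730-hour month is an assumption (8,760 hours divided by 12, a common billing convention), and the helper names are hypothetical; the sketch ignores storage, data transfer, and tax.

```python
# On-demand N. Virginia list prices from the table: (GPU count, $/hour).
P3_ON_DEMAND = {
    "p3.2xlarge": (1, 3.06),
    "p3.8xlarge": (4, 12.24),
    "p3.16xlarge": (8, 24.48),
}

# Assumption: a 730-hour month (8,760 hours / 12).
HOURS_PER_MONTH = 730


def per_gpu_hour(instance: str) -> float:
    """Hourly list price divided by the number of V100 GPUs."""
    gpus, hourly = P3_ON_DEMAND[instance]
    return hourly / gpus


def monthly_cost(instance: str, utilization: float = 1.0) -> float:
    """Approximate monthly compute cost at a utilization between 0 and 1."""
    _, hourly = P3_ON_DEMAND[instance]
    return hourly * HOURS_PER_MONTH * utilization


if __name__ == "__main__":
    for name in P3_ON_DEMAND:
        print(
            f"{name}: ${per_gpu_hour(name):.2f}/GPU-hour, "
            f"~${monthly_cost(name):,.0f}/month at 100% utilization"
        )
```

Every size comes out to $3.06 per GPU-hour, so the larger instances buy NVLink and more host resources rather than a per-GPU discount.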

If you need AWS EC2 P3 instances on a regular basis, a 12-month, all-upfront reserved term for a p3.16xlarge is only $136,601. That is an absolute bargain compared to our estimate of just under $160,000 for an 8x Tesla V100 server plus power, cooling, and networking.
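The "regular basis" threshold can be made concrete with a simple break-even calculation: divide a fixed up-front cost by the on-demand hourly rate to get the number of hours at which the up-front option starts to win. This minimal sketch assumes the $136,601 reserved quote applies to a p3.16xlarge (as the comparison with an 8x server implies) and reuses the ~$160,000 server estimate. It deliberately ignores that an owned server keeps working past 12 months, resale value, and staffing costs, so it is a rough guide only.

```python
ON_DEMAND_HOURLY = 24.48         # p3.16xlarge on-demand, N. Virginia list price
RESERVED_1YR_UPFRONT = 136_601   # 12-month all-upfront reserved term (assumed p3.16xlarge)
SERVER_ESTIMATE = 160_000        # STH estimate: 8x V100 server + power, cooling, networking
HOURS_PER_YEAR = 24 * 365        # 8,760 hours


def break_even_hours(fixed_cost: float, hourly_rate: float) -> float:
    """Hours of on-demand use at which paying the fixed cost up front breaks even."""
    return fixed_cost / hourly_rate


if __name__ == "__main__":
    for label, cost in [("1-year reserved", RESERVED_1YR_UPFRONT),
                        ("8x V100 server", SERVER_ESTIMATE)]:
        hours = break_even_hours(cost, ON_DEMAND_HOURLY)
        print(f"{label}: break-even after {hours:,.0f} on-demand hours "
              f"({hours / HOURS_PER_YEAR:.1%} of a year)")
```

Under these assumptions, the reserved term pays off once a p3.16xlarge would otherwise run on demand for roughly 64% of the year, which is why infrequent training favors on-demand instances.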

Final Words

Make no mistake, NVIDIA is shipping the Tesla V100. With AWS launching the p3.2xlarge, p3.8xlarge, and p3.16xlarge instances with 1, 4, and 8 GPUs respectively, we expect Google and others to follow suit shortly.

The bigger implication is that NVIDIA has been shipping Volta for some time now, and we are well past the point when consumer products received a new architecture first. We also wonder when NVIDIA's data center and consumer architectures will diverge. From what we are seeing, the Tensor Core is a big deal for deep learning, but it is less useful for gaming.

You can read more about the AWS announcement here.