Kicking off our AMD Instinct MI325X review series, we have the Gigabyte G893-ZX1-AAX2. This server combines eight AMD Instinct MI325X GPUs with two AMD EPYC CPUs, plus all of the power, networking, and local storage one might need. It is also an air-cooled GPU server, which makes it easier to deploy in data centers that lack liquid cooling infrastructure.

For this one, we have a short overview:

We saw this system in Taipei in January, and afterwards had the chance to test it remotely. Since we were at Gigabyte to take the photos, we were able to grab some unique views of this system.

Gigabyte G893-ZX1-AAX2 External Hardware Overview

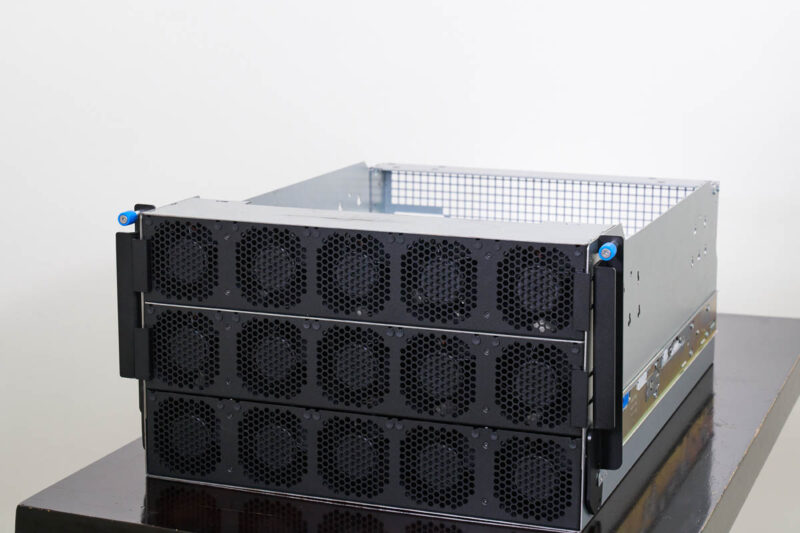

Starting off, this is an 8U system with 6U dedicated to the accelerators and power, and another 2U for the CPU tray.

Depth-wise, it is 923mm, or just over 36.3in, which is common for AI servers. The removable handles were very handy when moving this system onto the table.

The top 6U of the front is a fan wall mostly dedicated to cooling the accelerators.

The bottom 2U is the CPU tray, where we get four U.2 NVMe bays on the left.

In the bottom center, we get our USB ports, a VGA port, and an out-of-band management port. There is also an Intel X710 dual-port 10GBASE-T NIC for lower-speed networking.

Here is a quick view of that front panel board, which we have seen a few times from Gigabyte, shown here without the fans installed.

On the right side we get four more U.2 NVMe bays for storage.

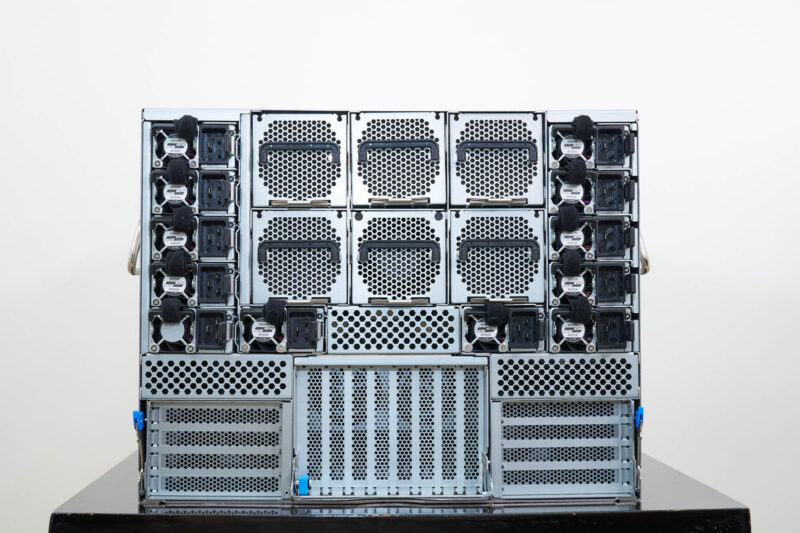

Moving to the rear, we get fans, power supplies, and PCIe expansion card slots.

The center wall is made up entirely of hot-swap fan modules.

Here is a quick look at one of those.

There are a total of twelve 3kW power supplies to provide redundant power for the system.
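As a quick sanity check on the power budget, here is a minimal Python sketch of the arithmetic. The review gives only the count (twelve) and the rating (3kW) of the supplies, not the redundancy scheme, so the N+M splits below are hypothetical examples rather than Gigabyte's configuration. Actual output per supply may also depend on input voltage.

```python
# Hypothetical sketch: the count (12) and rating (3 kW) come from the review;
# the redundancy schemes below are illustrative assumptions only.
PSU_COUNT = 12
PSU_WATTS = 3_000


def usable_watts(psu_count: int, psu_watts: int, redundant: int) -> int:
    """Capacity left when `redundant` supplies are held in reserve (N+M)."""
    if not 0 <= redundant < psu_count:
        raise ValueError("redundant must be between 0 and psu_count - 1")
    return (psu_count - redundant) * psu_watts


# Total nameplate capacity with every supply contributing.
nameplate = PSU_COUNT * PSU_WATTS

for label, spare in [("no redundancy", 0), ("assumed 8+4", 4), ("assumed 6+6", 6)]:
    kw = usable_watts(PSU_COUNT, PSU_WATTS, spare) / 1000
    print(f"{label}: {kw:.0f} kW")
```

With all twelve supplies counted, the nameplate total is 36kW; every supply held in reserve removes 3kW from what the system can rely on.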

On the bottom left and right, we get four-slot PCIe card modules. These are mostly for North-South networking, meaning NICs that connect to the CPUs rather than being paired with GPUs.

That module is removable and has its own cooling fan. There are two of these trays, one on either side of the rear.

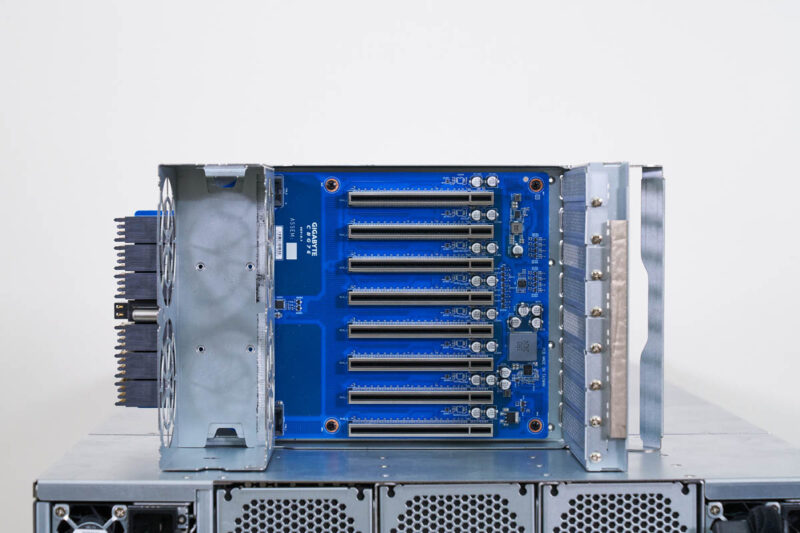

In the center, we get eight PCIe Gen5 x16 slots for GPU networking. Adding the four slots in each of the two side trays, that makes a total of sixteen PCIe Gen5 x16 slots in this server.

Here is a quick look at the tray. These slots are designed to be filled with the East-West NICs that carry GPU traffic.
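To make the rear slot layout concrete, here is a small Python sketch that models it as plain data and confirms the count of sixteen. The `Slot` type and the "north-south"/"east-west" role labels are hypothetical names for illustration. The slot counts and positions come from the description above.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Slot:
    location: str  # where the slot sits at the rear of the chassis
    role: str      # hypothetical label: "north-south" (CPU) or "east-west" (GPU)
    gen: int = 5   # PCIe generation
    lanes: int = 16


def rear_slots() -> list[Slot]:
    slots: list[Slot] = []
    # Two removable four-slot trays, one on each side of the rear.
    for side in ("left", "right"):
        slots += [Slot(f"{side} tray", "north-south") for _ in range(4)]
    # Eight center slots intended for East-West GPU networking.
    slots += [Slot("center", "east-west") for _ in range(8)]
    return slots


slots = rear_slots()
print(len(slots))  # 16
print(Counter(s.role for s in slots))
```

The split works out to eight CPU-attached slots and eight slots for GPU traffic, which lines up with one East-West NIC per accelerator in an eight-GPU system.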

Next, let us check out the motherboard tray.
