10GbE in 2026: The Challenges

Perhaps one of the biggest challenges facing 10GbE in 2026 is the efficient use of PCIe lanes. A single PCIe Gen4 lane can handle a 10GbE interface, so lane bandwidth and network bandwidth are well matched. With PCIe Gen5, each lane can handle a 25GbE link. That may sound trivial at first, but take a step back.
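The lane math is easy to check. PCIe Gen3, Gen4, and Gen5 run at 8, 16, and 32 GT/s per lane with 128b/130b encoding. The rough Python sketch below computes per-lane bandwidth and the narrowest standard link width that covers one Ethernet port in one direction. It is a simplification: it ignores packet-level PCIe protocol overhead, so real-world throughput is somewhat lower.

```python
# Transfer rate per lane, in GT/s, for the PCIe generations discussed here.
PCIE_GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}
# Gen3 through Gen5 all use 128b/130b line encoding.
ENCODING = 128 / 130


def lane_gbps(gen: int) -> float:
    """Per-direction Gbps of one lane after line encoding only.

    TLP/DLLP protocol overhead is ignored, so this is an upper bound.
    """
    return PCIE_GT_PER_S[gen] * ENCODING


def lanes_needed(gen: int, ethernet_gbps: float) -> int:
    """Narrowest x1/x2/x4/x8/x16 link that covers one port's line rate."""
    for width in (1, 2, 4, 8, 16):
        if lane_gbps(gen) * width >= ethernet_gbps:
            return width
    raise ValueError("port needs more than a x16 link")


if __name__ == "__main__":
    for gen in (3, 4, 5):
        print(f"Gen{gen} x1: {lane_gbps(gen):.2f} Gbps; "
              f"10GbE needs x{lanes_needed(gen, 10)}, "
              f"25GbE needs x{lanes_needed(gen, 25)}")
```

Under these assumptions, a Gen3 lane (about 7.88Gbps) is too narrow for 10GbE on its own. A Gen4 lane (about 15.75Gbps) covers 10GbE, and a Gen5 lane (about 31.5Gbps) covers 25GbE.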

25GbE exists as SFP28, but not much else. It still generally uses NRZ signaling, which does not mix easily with the faster PAM4 signaling that higher-end networking uses. It comes in a pluggable form factor that takes up more PCB space on motherboards. Perhaps the most impactful challenge is that its cabling options generally do not include the twisted-pair cabling found in the walls of many homes and businesses. 25GbE is better for PCIe lane usage, but it does not address the biggest driver for 10GBASE-T: existing wiring.

In data centers, the picture is different. 10GBASE-T is typically used as a management interface, often via onboard ports, while higher-end NICs handle data transfer.

For switches, consider what switch silicon vendors are up against. If you have leading-edge wafer supply and spend it on sub-$300 network switches, you will likely be out of work soon, since those process nodes are focused on higher-end data center switching or on other tiles in AI systems.

That is perhaps the biggest challenge of 2026. Over the last 2-3 years, silicon providers have had to review their R&D budgets and decide whether to invest in silicon for AI clusters, with their enormous TAM, or to focus on driving down the cost of 10GbE devices. It is hard to justify focusing on capturing a smaller market.

Testing 10GbE Devices in 2026: Keysight IxNetwork

You may have seen this make its way into reviews, but for years, the STH community has told us they want better network testing. At some point, we should probably do an article about how our setups evolved: multi-node iperf3 clusters, a big Dell workstation with Mellanox NICs and Cisco TRex, TRex on Napatech 100G FPGAs, and so forth. While in Austin, we went as far as buying a used Spirent C100 8x 10G Test Center, which is now sitting on the shelf with its nostalgic Intel Xeon E5-2687W CPUs (STH was the first site to look at a system with those, in January 2013!). Over the years, we have poured money into developing something better than iperf3, yet we have largely failed.

In 2025, we showed our Keysight CyPerf setup, built on a highly customized (down to custom-made cables) Supermicro Hyper 2U server with a pair of 128-core Intel Xeon 6980P CPUs. That lets us generate realistic traffic patterns, but for truly low-level testing, we needed to go one step further.

In 2026, we have added IxNetwork and hardware-based solutions, including two XGS2 chassis and three line cards. If you have read comments like “you should use Ixia” or “we use Ixia at work,” this is often the type of gear folks are referring to.

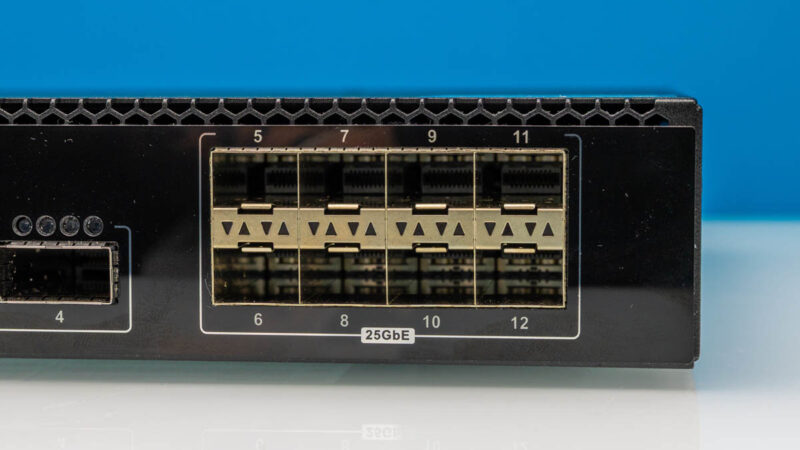

We can generate over 1.7Tbps of traffic and should be able to drive up to 80 ports of 10GbE, 64 ports of 25GbE, or 16 ports of 100GbE.

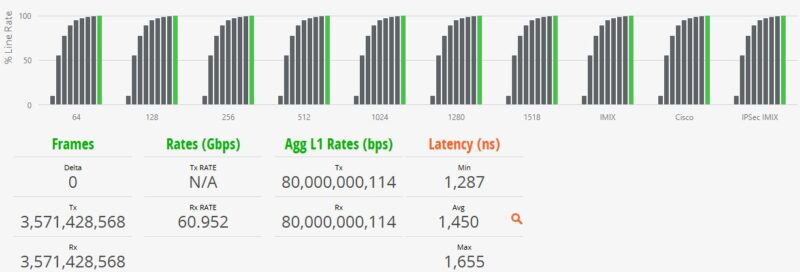

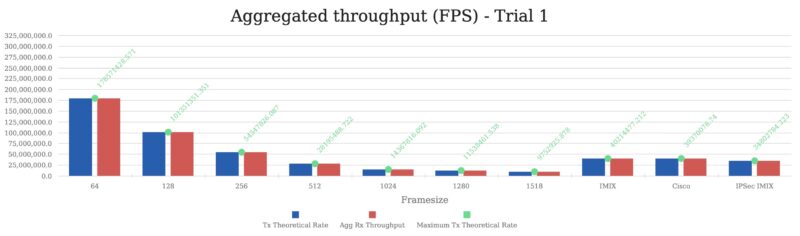

The advantage is that we can now do more accurate, lower-level testing. For example, earlier this week, we showed the TRENDnet TL2-F7080, an inexpensive 8x 10G SFP+ switch, handling 80Gbps of L1 traffic, or almost 61Gbps of 64B frames, without loss. You sometimes see a random comment claiming that a Realtek RTL9303-based switch drops packets at 64B and cannot hit full performance. Now we can test that and show otherwise.
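The gap between 80Gbps of L1 traffic and almost 61Gbps of 64B frames comes from fixed per-frame overhead on the wire. Here is a minimal Python sketch. It assumes standard Ethernet framing (7B preamble, 1B SFD, 12B minimum inter-frame gap) and treats the 64B frame size as including the FCS.

```python
# Bytes on the wire per frame that count as L1 traffic but not L2:
# 7B preamble + 1B start-of-frame delimiter + 12B minimum inter-frame gap.
WIRE_OVERHEAD_BYTES = 20


def l2_gbps(l1_gbps: float, frame_bytes: int) -> float:
    """L2 (frame) throughput carried by a given L1 line rate."""
    return l1_gbps * frame_bytes / (frame_bytes + WIRE_OVERHEAD_BYTES)


def frames_per_second(l1_gbps: float, frame_bytes: int) -> float:
    """Maximum frames per second at a given L1 line rate."""
    return l1_gbps * 1e9 / ((frame_bytes + WIRE_OVERHEAD_BYTES) * 8)


if __name__ == "__main__":
    ports, port_gbps = 8, 10.0  # an 8x 10G SFP+ switch at full load
    l1 = ports * port_gbps
    print(f"L1: {l1:.0f} Gbps -> L2 at 64B: {l2_gbps(l1, 64):.2f} Gbps")
    print(f"64B frames/s, all ports: {frames_per_second(l1, 64) / 1e6:.2f} M")
```

With 64B frames, only 64 of every 84 bytes on the wire are frame data. That gives about 60.95Gbps of L2 throughput from 80Gbps of L1 traffic, or roughly 14.88 million frames per second per 10G port.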

This setup lets us explore whether and when switches drop frames. We can also compare the theoretical maximum (given protocol overheads) with what we actually measure on devices.
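One common way to find out if and when a switch drops frames is an RFC 2544-style throughput search. You offer traffic at a fraction of line rate, count lost frames, and binary-search for the highest zero-loss rate. The sketch below only illustrates the idea and is not how IxNetwork implements it. `run_trial` and `toy_dut` are hypothetical stand-ins for a real traffic generator and device under test.

```python
from typing import Callable


def zero_loss_throughput(
    run_trial: Callable[[float], int],
    max_rate_pct: float = 100.0,
    resolution_pct: float = 0.1,
) -> float:
    """Binary-search the highest offered load (% of line rate) with zero loss.

    run_trial(rate_pct) is assumed to send a fixed-size burst at rate_pct
    and return the number of frames lost.
    """
    if run_trial(max_rate_pct) == 0:
        return max_rate_pct  # full line rate is already loss-free
    low, high = 0.0, max_rate_pct  # invariant: low passes, high fails
    while high - low > resolution_pct:
        mid = (low + high) / 2
        if run_trial(mid) == 0:
            low = mid
        else:
            high = mid
    return low


def toy_dut(capacity_pct: float) -> Callable[[float], int]:
    """Hypothetical device that only loses frames above capacity_pct."""
    frames_per_trial = 1_000_000

    def trial(rate_pct: float) -> int:
        if rate_pct <= capacity_pct:
            return 0
        offered = frames_per_trial * rate_pct / 100
        forwarded = frames_per_trial * capacity_pct / 100
        return max(1, int(offered - forwarded))  # any overload loses frames

    return trial
```

For example, `zero_loss_throughput(toy_dut(87.5))` returns a value within 0.1 percentage points of 87.5. A fuller RFC 2544-style run would repeat the search for each standard frame size, from 64B up to 1518B.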

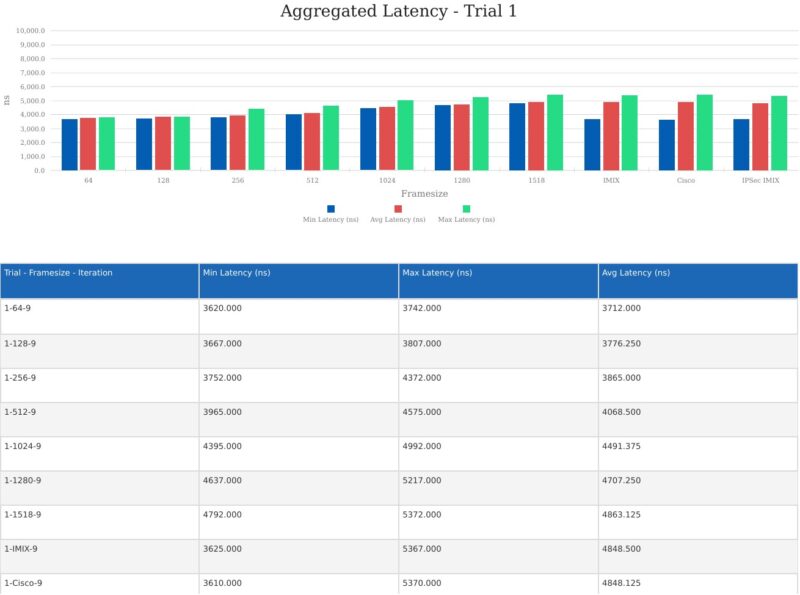

Since we have a high-end, hardware-based solution, we can also get more precise latency figures.

At 1GbE, and realistically even with a few ports of 10GbE, this kind of testing is not too difficult these days. Once we push 400Gbps, 800Gbps, or more of traffic, these higher-end solutions really help.
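To see why scale matters, consider the worst case of minimum-size frames. This rough Python sketch assumes 64B frames plus the standard 20B of per-frame wire overhead (preamble, SFD, and inter-frame gap). It computes the maximum frame rate and the time budget per frame at several line rates.

```python
# A 64B frame occupies 84 bytes on the wire once preamble, SFD,
# and the minimum inter-frame gap are included.
BYTES_ON_WIRE_64B = 64 + 20


def max_pps(line_rate_gbps: float) -> float:
    """Maximum 64B frames per second at a given line rate."""
    return line_rate_gbps * 1e9 / (BYTES_ON_WIRE_64B * 8)


def ns_per_frame(line_rate_gbps: float) -> float:
    """Time budget per 64B frame, in nanoseconds."""
    return 1e9 / max_pps(line_rate_gbps)


if __name__ == "__main__":
    # 40 Gbps stands in for "a few ports of 10GbE".
    for rate in (1, 10, 40, 400, 800):
        print(f"{rate:>4} Gbps: {max_pps(rate) / 1e6:8.2f} Mpps, "
              f"{ns_per_frame(rate):6.2f} ns per frame")
```

At 10GbE, each 64B frame gets 67.2ns. At 800Gbps, that drops to 0.84ns, or about 1.19 billion frames per second. That is a far tighter budget for a software generator running on general-purpose CPU cores.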

Folks in the test and measurement industry are probably banging on their keyboards right now, since CyPerf and IxNetwork can do so much more than we are currently using them for. We are spending a lot of time profiling our testing across different devices, and that simply takes time. As a result, you will probably see our testing and methodologies evolve in 2026, on the 10GbE side and beyond. Just know that we have an absolutely wild setup as part of a project we started in May 2025.

Let us know if you would find a deeper dive into the testing equipment and setups interesting. We might do a deep dive on this later in 2026 if there is enough interest.

Final Words

In February 2026, we will run a series on 5GbE networking, since we have already tested a pretty good set of products, or at least enough to reach the critical mass we had when we started covering 10GbE and even 2.5GbE. At the same time, it feels like 10GbE might be the answer. After so many years, the ecosystem is catching up to the promise of 10GbE.

While we are primarily building our networking test lab to handle 400GbE to 1.6Tbps generation devices, we also made sure we could test down to 10GbE devices to catch this wave of new products hitting the market.

Longtime readers may notice that we have some new switches, devices, and brands in here that we have not yet reviewed on the STH main site. Our goal is to cover as many products as possible with the best testing we can.

Stay tuned to STH.
