Using DPUs Hands-on Lab with the NVIDIA BlueField-2 DPU and VMware vSphere Demo

Many VMware administrators are familiar with one of the most basic networking challenges in a virtualized environment. Traditionally, one can use the vmxnet3 driver for networking and get all of the benefits of having the hypervisor manage the network stack. That provides the ability to do things like live migrations, but at the cost of performance. For performance-oriented networking, many use pass-through NICs, which provide performance but present challenges to migration. With UPT (Uniform Passthrough), we keep the ability to migrate VMs while getting performance close to pass-through. The challenge with UPT is that both the hardware and the software stack need to support it, but in this BlueField-2 DPU environment, we finally have that.

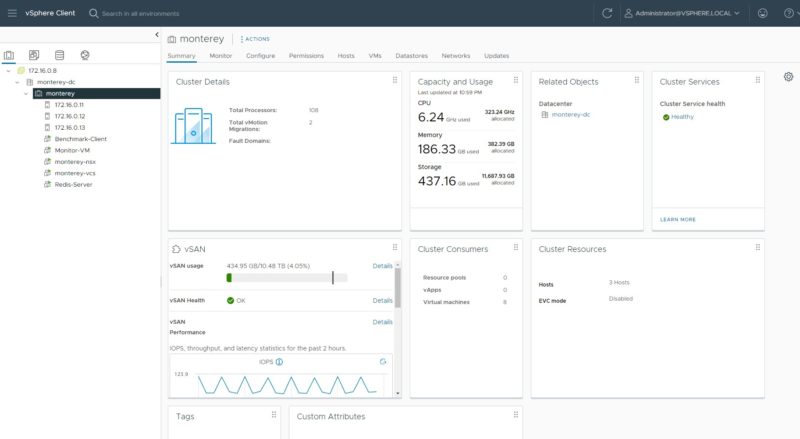

The demo environment we are using today is part of NVIDIA LaunchPad. If you are looking at deploying a DPU-based vSphere solution, you can request access to the environment and go through this demo. Spaces are limited because of how much hardware is tied up in each environment. Still, our hope is that by walking through it on STH, folks can see what the solution looks like.

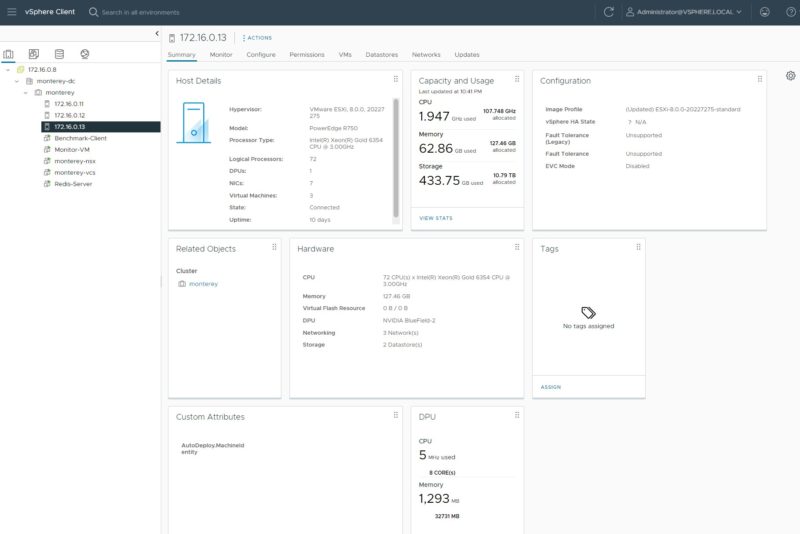

As you can see, the nodes provided are Dell PowerEdge R750s with Intel Xeon Gold 6354 CPUs, and most importantly, under the DPU category of hardware, we have the NVIDIA BlueField-2. As a quick note, the PowerEdge R760 review on STH will be live fairly soon.

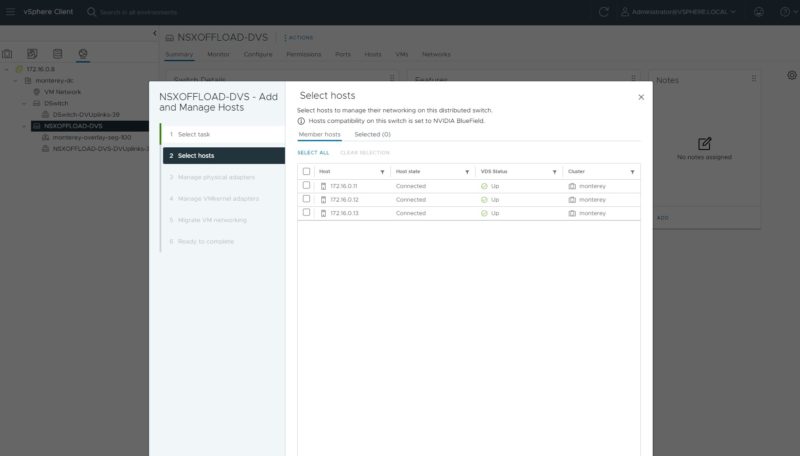

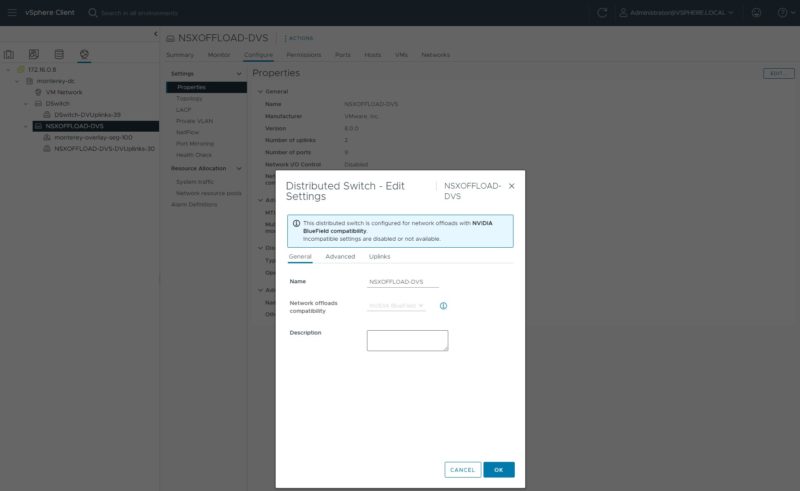

One of the first things we need to do is to create a Distributed Switch. Ours is a bit different since we are configuring the switch with the BlueField DPUs. There are fairly standard steps like adding our three hosts.

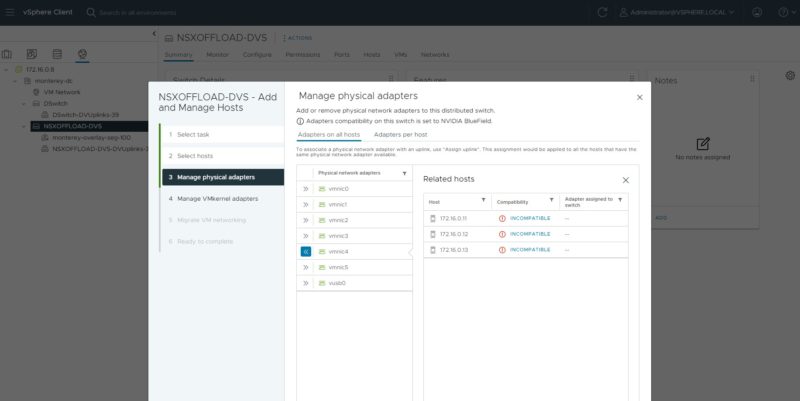

We then need to select the adapters. The Dell PowerEdge R750s have other NICs. Since we are making a DPU-enabled virtual switch, the other NICs are listed as incompatible.

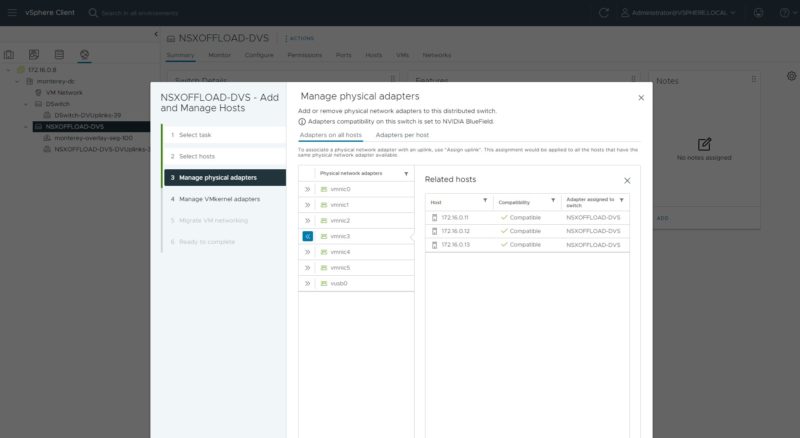

The BlueField-2 DPUs are listed as compatible.

As we can see, the switch now has BlueField capabilities.

Something that NVIDIA does not have in its demo, but is a big differentiation point, is that VMware is building a BlueField-specific integration into its environment to make this all work. While VMware initially announced Intel DPU support, and there are many other DPU vendors out there, VMware only has integrations for NVIDIA and AMD DPUs at this point.

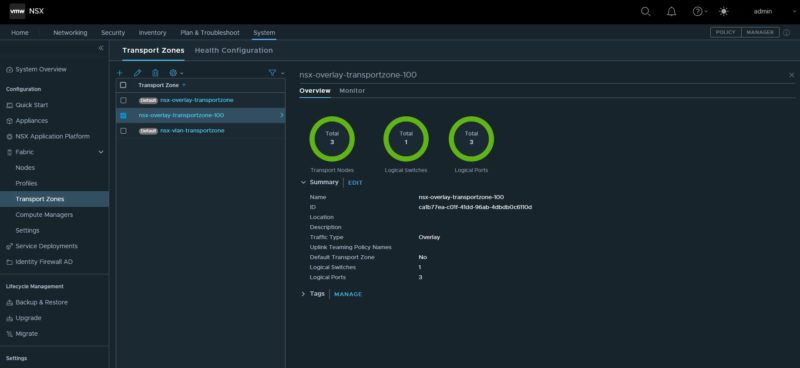

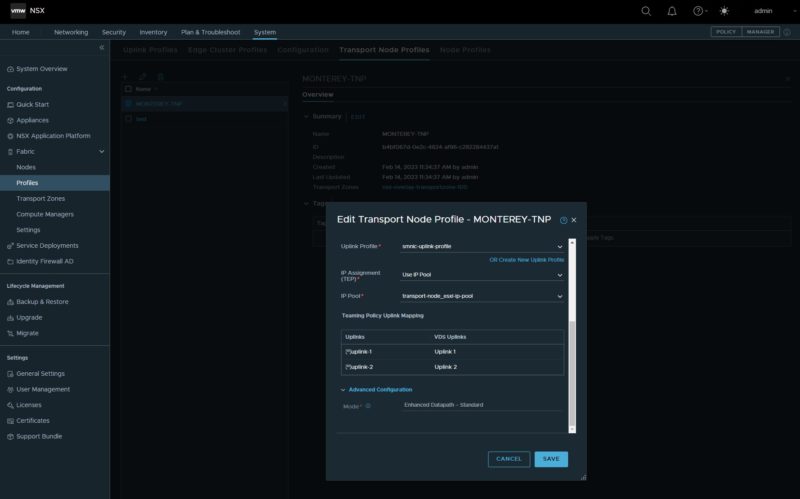

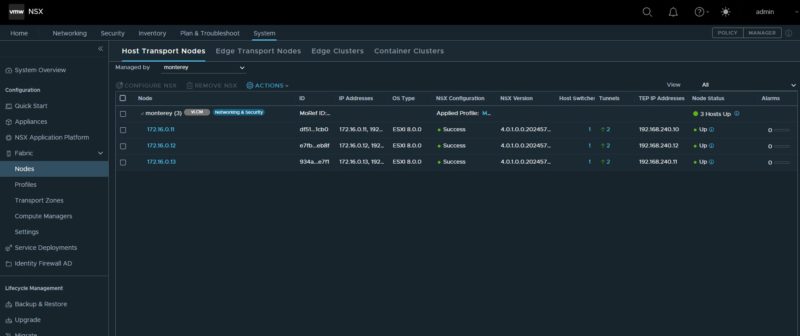

The next step is going to VMware NSX. DPUs in many ways make the NSX vision of managing networking off of the network switch more of a reality.

Here we can see that we have an NSX overlay that is being powered by the BlueField-2 DPUs.

One important aspect is that we need each node to have a BlueField-2 DPU for this to work. If a node does not have one, then it does not have the hardware capabilities to make the overlay work with the underlying hardware. This is an important point. If you are planning to deploy DPUs later in 2023 or 2024, but you want the nodes you are deploying in early 2023 to participate, then those nodes need to have BlueField-2 cards today, even if they are just being used as ConnectX-6 networking until the new capabilities are turned on.
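The "every node needs a DPU" requirement is easy to check ahead of time if you have an inventory of adapters per host. The following is only a minimal sketch: the host names, the adapter strings, and the idea of matching on the substring "BlueField-2" are all assumptions made for illustration, and it does not call any VMware or NVIDIA API.

```python
# Hypothetical sketch: flag hosts that could not join a DPU-backed overlay.
# The inventory below is made-up example data, not output from vSphere.

DPU_MODEL_KEYWORD = "BlueField-2"  # assumed substring identifying the DPU


def hosts_missing_dpu(inventory):
    """Return a sorted list of hosts with no adapter matching the DPU keyword.

    `inventory` maps a host name to a list of adapter model strings.
    """
    return sorted(
        host
        for host, adapters in inventory.items()
        if not any(DPU_MODEL_KEYWORD in adapter for adapter in adapters)
    )


# Example data (hypothetical host names and adapter descriptions).
EXAMPLE_INVENTORY = {
    "esxi-01": ["Onboard 1GbE NIC", "NVIDIA BlueField-2 DPU"],
    "esxi-02": ["Onboard 1GbE NIC", "NVIDIA BlueField-2 DPU"],
    "esxi-03": ["Onboard 1GbE NIC", "ConnectX-6 NIC"],
}

if __name__ == "__main__":
    missing = hosts_missing_dpu(EXAMPLE_INVENTORY)
    if missing:
        print("Hosts without a DPU:", ", ".join(missing))
    else:
        print("All hosts have a DPU.")
```

In this made-up inventory, the third host only has a standard NIC, so it would be the one flagged before building the overlay.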

With the NSX overlay complete, we can go back to our vSphere environment.

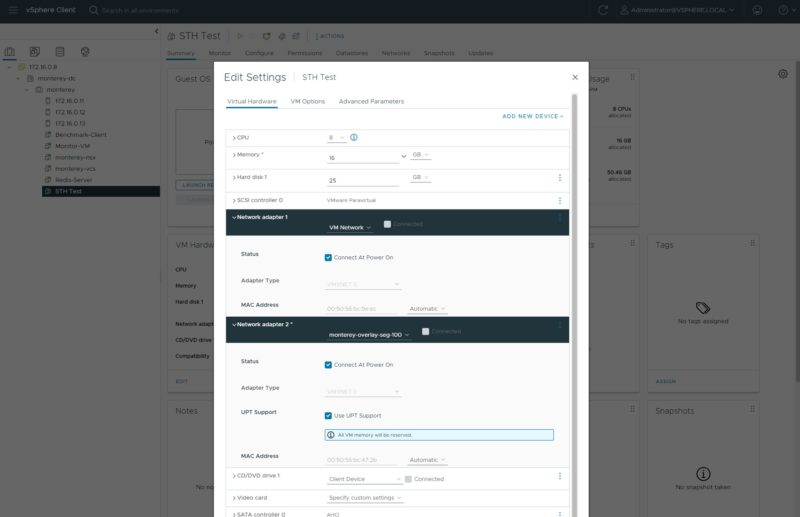

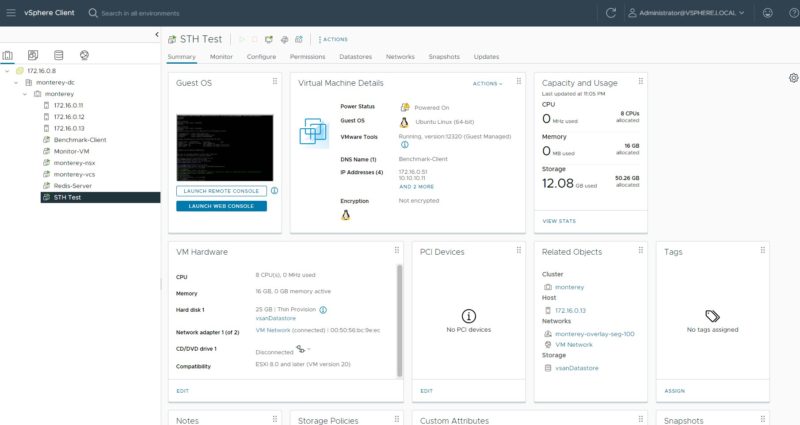

Now we can create a VM that we are going to call “STH Test”. Here we can see the first network adapter does not have the option, but Network adapter 2 has the option to Use UPT Support. We also will have this adapter connected to the NSX overlay.

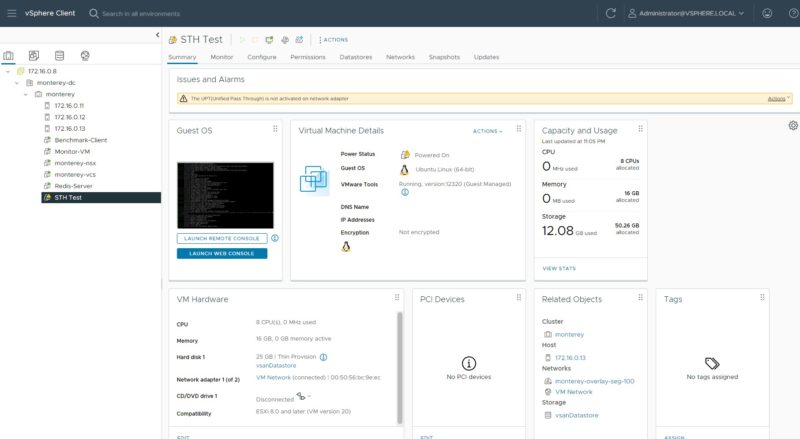

Looking at the VM we just created, we quickly notice that UPT is not activated.

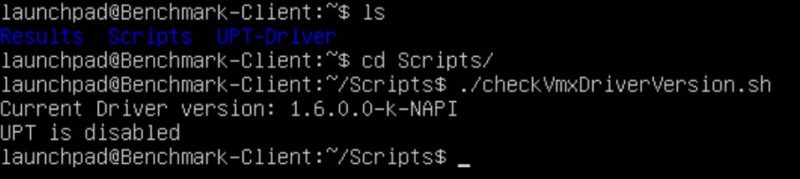

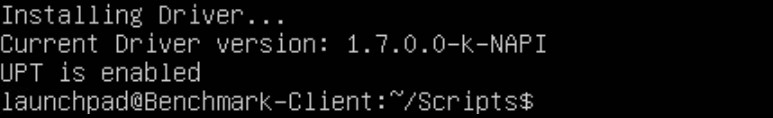

Going into the Ubuntu instance, we have an older driver 1.6.0.0-k-NAPI. That version does not support UPT for the Ubuntu VM, so we need a newer version.

Upgrading to the 1.7.0.0-k-NAPI version gives us UPT capabilities.

Looking at the VM again, our UPT warning is now gone because the VM’s driver now supports UPT.
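The driver check above can be scripted inside the guest. The sketch below assumes the version string is read from `ethtool -i <interface>` output (which prints `driver:` and `version:` lines) and that 1.7.0.0 is the minimum, based only on the two versions seen in this demo. The function names and the sample output are illustrative, and some newer kernels report a kernel version in that field instead, so treat this as a starting point rather than a definitive test.

```python
import re

# Assumed threshold, taken from the demo: 1.6.0.0-k-NAPI lacked UPT,
# 1.7.0.0-k-NAPI had it.
MIN_UPT_VERSION = (1, 7, 0, 0)


def parse_driver_version(version):
    """Turn a string such as '1.7.0.0-k-NAPI' into a tuple like (1, 7, 0, 0)."""
    match = re.match(r"(\d+(?:\.\d+)*)", version.strip())
    if match is None:
        raise ValueError(f"no numeric version found in {version!r}")
    parts = [int(p) for p in match.group(1).split(".")]
    # Pad with zeros so '1.7' compares equal to '1.7.0.0'.
    while len(parts) < len(MIN_UPT_VERSION):
        parts.append(0)
    return tuple(parts)


def supports_upt(version):
    """Return True if the driver version meets the assumed UPT minimum."""
    return parse_driver_version(version) >= MIN_UPT_VERSION


def version_from_ethtool(output):
    """Pull the 'version:' field out of `ethtool -i` style text."""
    for line in output.splitlines():
        key, _, value = line.partition(":")
        if key.strip() == "version":
            return value.strip()
    raise ValueError("no 'version:' line found")


# Illustrative sample of `ethtool -i` output (not captured from the demo).
SAMPLE_ETHTOOL_OUTPUT = """driver: vmxnet3
version: 1.6.0.0-k-NAPI
firmware-version:
"""

if __name__ == "__main__":
    found = version_from_ethtool(SAMPLE_ETHTOOL_OUTPUT)
    print(found, "supports UPT:", supports_upt(found))
```

Comparing parsed tuples rather than raw strings avoids mistakes such as treating "1.10" as older than "1.7".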

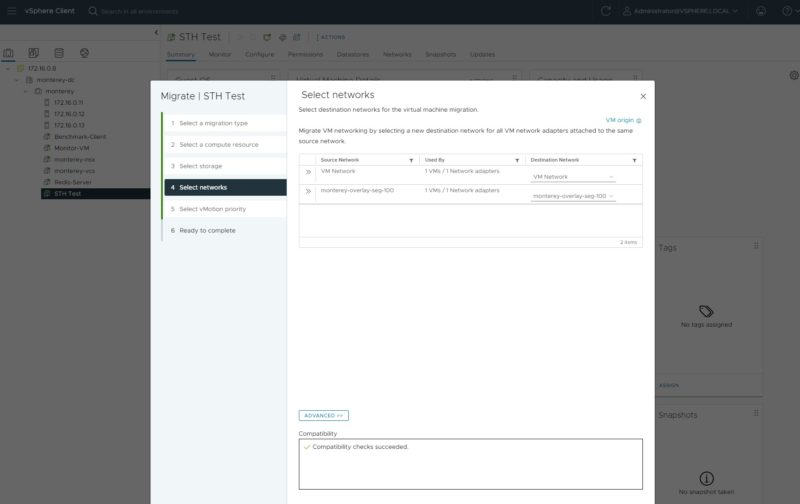

With UPT enabled, we can still migrate using the networks we set up earlier.

The setup is not automatic, and it takes some time to work through, but it is fairly straightforward. If you do this LaunchPad demo, NVIDIA has documentation for you.

Next, let us take a look at the performance and impacts of this.

Yeah, I’ll just say it: I don’t understand all the fuss. Does it improve performance in area XYZ? Well yes, of course. It’s a computer. You have put a smaller computer in charge of a specialized group of functions so that your CPU does not have to do those functions. It would be like going nuts over a GPU. Yes, of course the GPU is going to GPU so the CPU does not have to. And no, ZFS was not run on “not a server”, it was run on the computer that is the DPU. Some of them throw down 200W+ (not including PCIe passthrough). Well, I think that is a correct high number, NVIDIA actually publishes all of the specs EXCEPT the power usage. You have to go sign into the customer portal to get those. I kid you not, look: https://docs.nvidia.com/networking/display/BlueField2DPUENUG/Specifications

I mean, I know there has never been a computer of 200W that has ever been able to run ZFS with ECC memory……….atom. And as your article does show, as usual, it is not that you can put another computer somewhere, it’s putting it there and getting it to do what you want and talk with the computer playing host to it. So great. It’s a comp-ccelerator. It can offload my OVS, cool. Also there is a certain always-around-the-corner feel to so much these days. The future, great (maybe?), but if something is always around the corner, it’s not being used for anything they told us Sapphire Rapids was good for. Bazinga! Intel joke. AMD handed Zen 1 to China for a quick buck. AMD joke. All is balanced.

The article states all VMs were provisioned the same, but then doesn’t go on to provide details, or at least details I could find. Was the test sensitive to how many virtual CPUs and how much RAM were assigned to each VM? What are the details of the VMs?

Hi Eric – We had screenshots of the VMs on Page 2 so my thought was folks could see the 8 vCPU/ 16GB configuration there.

Thanks for the quick reply. I was reading the article on my mobile phone. The screen images are blurry unless one clicks on an image and then pinches to zoom. I see the configuration now.

Thanks for the detailed analysis.

I wonder how close you could get to these figures using DPDK in Proxmox instead of using a DPU. The DPU performance difference while nice is not as big as I would have expected. Afaik AWS mentioned latency decreases and bandwidth uplift of 50% when initially switching to their Nitro architecture.

What was the power consumption of the BF2?

WB

AMD committed something akin to treason, and someone lied to you about something something Intel. Run Along – you are not the target audience for this now deprecated DPU nor for anything in the server realm.

David

This is the previous gen card – the BlueField-3 (400Gb/s) offers better performance.

No SPR servers to review (I have 13 dual SPR 1U servers) – no modern DPU to review, no DGX A100 or DGX H100 to review – yet all of those are in my datacenter…

If Patrick had actual journalistic skills and a site that attracts more than a couple dozen people I would let him review the equipment I have.

Ha! This DA troll. You’re realizing at some point they’ve got 130K subs on YT and they’re getting tens of thousands of views per video, not posting anywhere near as much on here. Based on their old Alexa rank they’re 3-5M pv/ month so that’s like 100k pv/ article.

13 SPR servers is small. We’re at thousands deployed in just the last 4 weeks and we’re looking at Pensando or BlueField for our VMware clusters going forward.

Go troll somewhere else.

DA I strongly suspect that better hardware performance is not the missing key but that the virtualization implementation is less than optimal. We already have numbers of DPU to DPU traffic running directly on the cards and those are much better than the VM level differences.

Right now, only a select number of cards are supported by VMware. These are the fastest BlueField-2 DPUs supported. BlueField-3’s will be, but not yet.

To be fair to DA – we do only get several dozen people per minute on the main site.