Cavium ThunderX2 Power Consumption

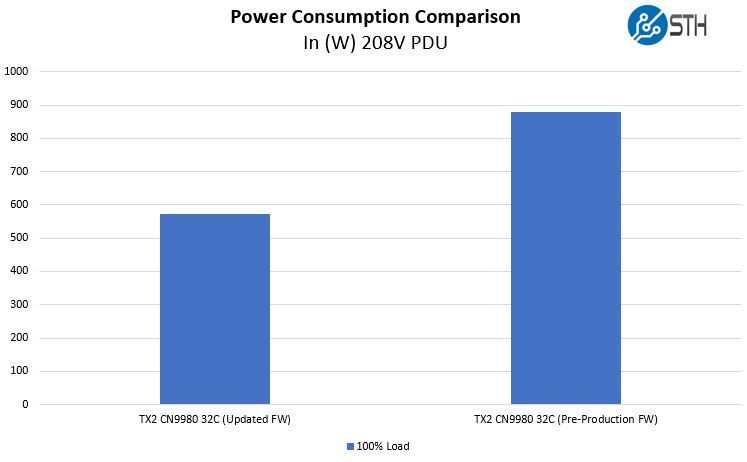

As a reminder from the original piece, the chips we are using are not the final binned parts. Cavium says that the shipping parts will be binned for power and should run more than 10W lower per chip (edit: Cavium told us 15-20W). With that said, we wanted to show the dramatic improvement the new firmware had on power consumption:

Note that these results were taken using a 208V Schneider Electric / APC PDU at 71% RH, with an ambient temperature of 17.8C for the original results and 17.6C for the updated results. Our testing window had a variance of +/- 0.3C and +/- 2% RH, which is reasonable and in line with our standard environments.

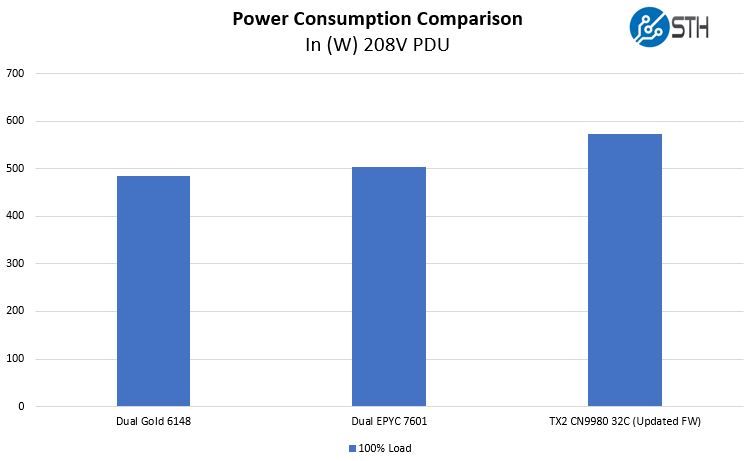

The bigger question we wanted to explore today is whether the Cavium ThunderX2 is competitive with its x86 competition in terms of power consumption. We used the Gigabyte R281-G30 chassis as our Intel Xeon Skylake-SP platform and swapped power supply assemblies to ensure we were using the same PSUs in both platforms. On the AMD EPYC side, we are using a 2U Supermicro AS-2023US-TR4 “Ultra” platform as it was the closest dual socket AMD platform we had in the lab. Here is what the power consumption looks like:

As you can see, the significantly improved power consumption puts the ThunderX2 in line with Intel Xeon Skylake-SP and AMD EPYC systems. We also want to note that the AMD EPYC and Intel Xeon Scalable figures are a bit higher than those in our reviews. We fully configured the systems, which meant matching add-in Mellanox 100GbE NICs, Broadcom 9305-24i SAS HBAs, 25GbE NICs, Intel Optane 900p 280GB AIC drives, and SATA SSDs. We also used 256GB of RAM on all three platforms. Since Intel Xeon Scalable (Skylake-SP) CPUs only have six memory channels, we used 8 DIMMs per CPU, which means two channels per CPU were in 2 DPC mode. We manually set DDR4 frequencies on the Intel system to DDR4-2666 to get as close to apples-to-apples as possible. It is fair to say that the systems are not identical; that is a tough task given these utilize three different architectures. At the same time, this is perhaps about as close as one can configure the three platforms, and it took a tremendous amount of lab labor and hardware on hand to get this close.
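To make the memory layout concrete: spreading DIMMs as evenly as possible over a socket’s channels shows how many channels end up at 2 DPC. The sketch below assumes an even fill with the extra DIMMs landing on the first channels; the function names are hypothetical, and real population order follows each motherboard vendor’s guide.

```python
def dimms_per_channel(dimms: int, channels: int) -> list[int]:
    """Spread DIMMs as evenly as possible across memory channels (assumed fill order)."""
    base, extra = divmod(dimms, channels)
    # The first `extra` channels each get one additional DIMM.
    return [base + 1 if ch < extra else base for ch in range(channels)]

def channels_at_2dpc(layout: list[int]) -> int:
    """Count channels carrying two or more DIMMs."""
    return sum(1 for n in layout if n >= 2)

skylake = dimms_per_channel(8, 6)   # six-channel Skylake-SP socket
print(skylake)                      # [2, 2, 1, 1, 1, 1]
print(channels_at_2dpc(skylake))    # 2

eight_ch = dimms_per_channel(8, 8)  # eight-channel socket (EPYC, ThunderX2)
print(channels_at_2dpc(eight_ch))   # 0
```

With eight channels, the same 8 DIMMs per CPU stay at 1 DPC, which is why the DIMM count could be matched across platforms while the Skylake-SP channel loading could not.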

Validating the Firmware Update

One concern we were aware of is that chip companies can update firmware to drastically decrease power consumption at the expense of performance.

We re-ran the benchmarks from our original article overnight. As we went through the data, figures were within +/- 0.8% of our original tests, and not always lower, so after 12 hours of workloads we concluded that the firmware update did not have an appreciable impact on performance.
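The re-run check amounts to comparing each benchmark’s new result with its original and confirming that every delta sits inside the observed band, with deltas of both signs rather than a uniform drop. A minimal sketch, assuming results arrive as (original, re-run) pairs of higher-is-better scores; the helper names and sample pairs are hypothetical, not our actual tooling or data.

```python
def pct_delta(original: float, rerun: float) -> float:
    """Percent change of a re-run relative to the original result."""
    return (rerun - original) / original * 100.0

def within_band(pairs, tol_pct: float = 0.8) -> bool:
    """True if every (original, rerun) pair differs by at most tol_pct percent."""
    return all(abs(pct_delta(o, r)) <= tol_pct for o, r in pairs)

def uniformly_lower(pairs) -> bool:
    """True if every re-run scored below its original, which could point to throttling."""
    return all(r < o for o, r in pairs)

# Hypothetical (original, re-run) benchmark scores, higher is better.
pairs = [(100.0, 100.5), (200.0, 199.0), (50.0, 50.2)]
print(within_band(pairs), uniformly_lower(pairs))  # True False
```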

Final Words

Assuming the production chips are binned for power and Cavium sees 30-40W lower power consumption in a dual socket server from that exercise, we arrive at a fairly simple conclusion: Cavium ThunderX2 is competitive with Intel and AMD in terms of performance and power. If you were assuming “Cavium is Arm, thus it must have significantly lower power consumption,” that would be incorrect. Cavium ThunderX2 is designed to match Intel and AMD offerings in a broad range of applications and therefore uses a similar amount of power. At the same time, the ThunderX2 is making waves because it bests AMD and Intel by pairing a single die with 8-channel DDR4 memory, a claim neither AMD nor Intel can match.
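The 30-40W figure is Cavium’s quoted 15-20W per-chip reduction doubled for two sockets. A minimal sketch of that arithmetic; it counts CPU power only and deliberately leaves out any cooling savings, which would be an additional, unmeasured assumption.

```python
# Per-chip savings Cavium quoted for binned parts, in watts.
PER_CHIP_SAVINGS_W = (15, 20)

def server_savings_w(per_chip_w: float, sockets: int = 2) -> float:
    """CPU-only savings for a server with `sockets` binned chips (no cooling effects)."""
    return per_chip_w * sockets

low, high = (server_savings_w(w) for w in PER_CHIP_SAVINGS_W)
print(f"Dual-socket savings: {low:.0f}-{high:.0f} W")  # Dual-socket savings: 30-40 W
```

Using the more conservative “more than 10W per chip” floor instead gives about 20W for a dual socket server.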

We are going to update our original article with a link to this piece to preserve continuity.

Not bad at all! BTW, you slightly overestimate with “Assuming the production chips are binned for power and Cavium sees 30-40W lower power consumption in a dual socket server from that exercise, we arrive at a fairly simple conclusion.” This is especially true since you mention at the beginning of the article that Cavium itself expects just a modest decrease of over 10W per chip. Honestly, if it were 30-40W, they would say over 30W directly, don’t you think?

But anyway, 550W is still better than nearly 900W.

Very nice to see that they have fixed the power draw by that much without any performance difference.

Seems like it can be a really good deal given the price.

AMD seems the most likely to suffer from it, as people inclined to jump from Intel to AMD are more likely to jump to Cavium entirely. Let’s see how AMD adjusts its prices.

@karelG Greater than 10W per CPU (I have been told that it may be on the order of 15W each). Then add in additional savings from cooling, and that is how I got to the 30-50W range. It may well be closer to 30W, and we hope to get some production silicon to do more analysis with.

When you say that the systems are running at “100% load,” what workload or combination of workloads do you run to get them to the “100%” mark?

@Ziple: with a jump to Cavium you need to be sure your stack is ARMv8/64-ready, while with a jump to AMD you just need to adjust a bit, for example for AVX2 if you are using it. Nothing more; it’s the same arch.

So IMHO Cavium is in a riskier position than AMD.

@Patrick: well, let’s see when production silicon is available. IMHO, Intel and the general market badly need a competing architecture again after all these years. POWER9 is nice, but it’s too little…

This kind of means that China doesn’t need Intel (USA) anymore to enhance its supercomputer power.

Just buy an ARM license and some machinery from ASML and you’re done; they have the money and the engineering power.

What you present here is not a useful story. Administrators need to know the productivity/watt, e.g. jobs/sec/watt, or jobs-completed/watt-sec. Energy is the story. How much energy is consumed for the system to complete a given workload?

Reporting power at “100% load” is useless. Rather, report power and report the benchmark results, e.g. X ops/sec at 100W becomes X/100 ops/watt-sec (ops per joule), or, since 1 kWh is 3.6 million watt-seconds, 36,000 × X ops/kilowatt-hour.

Now you have energy.

Please, do your job here!
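The conversion in this comment can be written out directly: throughput divided by watts gives operations per joule (one watt-second), and multiplying by 3.6 million joules per kWh gives operations per kilowatt-hour. A minimal sketch with hypothetical function names; the energy for a fixed job is its runtime (total operations divided by throughput) times power.

```python
JOULES_PER_KWH = 3.6e6  # 1 kWh = 1,000 W * 3,600 s

def ops_per_joule(ops_per_sec: float, watts: float) -> float:
    """(ops/s) / (J/s) = ops/J, i.e. ops per watt-second."""
    return ops_per_sec / watts

def ops_per_kwh(ops_per_sec: float, watts: float) -> float:
    return ops_per_joule(ops_per_sec, watts) * JOULES_PER_KWH

def job_energy_kwh(total_ops: float, ops_per_sec: float, watts: float) -> float:
    """Energy consumed to finish a fixed workload of total_ops operations."""
    seconds = total_ops / ops_per_sec
    return watts * seconds / JOULES_PER_KWH

# X = 1,000 ops/s at 100 W
print(ops_per_joule(1000, 100))  # 10.0
print(ops_per_kwh(1000, 100))    # 36000000.0
```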

And to clarify, there is no reason to “equalize the configurations”. What you strive to do is obtain a configuration yielding best performance and lowest power. Clearly there is room for trial and error. e.g. adding more DIMMs might push the power up more than the benchmark results, so take them out again.

->DON’T equalize the configuration.

->DO obtain the best performance and measure and report benchmark and power for that run.

Now anyone can decide if they are getting the most jobs completed per kilowatt-hour of energy use.

I feel compelled to further add that *no particular benchmark performance level is required*. To obtain the most efficient jobs/kilowatt-hour, the actual performance can be anything. For example, if you yank the CPU out of your electric toothbrush, it may consume micro-watts and take a week to run the benchmark, but calculate the jobs/kilowatt-hour, and hey, it is the highest!!

There is no a-priori requirement to have a good benchmark time, *rather* a given usage model decides if the delivered performance is within an acceptable range or not.

If the application is being sent to Mars, then taking a week to run the benchmark job may be just fine! And it has the most optimal jobs/kilowatt-hour – the winner!

The tuning of the configuration is clearly a search through a large dimensional space. Vary every parameter, measure the benchmark, measure the power, and keep seeking that best ratio!

The minimal config may win. The maximal config may win. Any config change that reduces power is worth a try! Any config change that boosts the benchmark speed is worth a try. And you may still not find the best one.
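The search described above can be sketched as picking, among measured runs, the one with the most jobs per kWh that still clears whatever performance floor the usage model sets. The `Run` record, the config labels, and all numbers below are hypothetical placeholders, not measurements; with no floor, the slow, ultra-low-power “toothbrush” entry wins, exactly as the comment argues.

```python
from dataclasses import dataclass
from typing import Iterable, Optional

JOULES_PER_KWH = 3.6e6

@dataclass(frozen=True)
class Run:
    config: str          # hypothetical configuration label
    jobs_per_sec: float  # measured benchmark throughput
    watts: float         # measured wall power during that run

    @property
    def jobs_per_kwh(self) -> float:
        # (jobs/s) / W = jobs per joule; scale to jobs per kWh.
        return self.jobs_per_sec / self.watts * JOULES_PER_KWH

def best_run(runs: Iterable[Run], min_jobs_per_sec: float = 0.0) -> Optional[Run]:
    """Return the most energy-efficient run that meets the performance floor, or None."""
    eligible = [r for r in runs if r.jobs_per_sec >= min_jobs_per_sec]
    return max(eligible, key=lambda r: r.jobs_per_kwh, default=None)

# Hypothetical runs, not measured data.
runs = [
    Run("minimal config", 2.0, 200.0),
    Run("maximal config", 5.0, 400.0),
    Run("toothbrush CPU", 0.001, 0.05),
]

print(best_run(runs).config)                        # toothbrush CPU
print(best_run(runs, min_jobs_per_sec=1.0).config)  # maximal config
```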

I don’t normally comment on articles, other than to say we run well over 10,000 nodes in our facilities. We have HPC, virtualization, analytics, VDI, you name it. Bullshit on “all that matters is watts/work,” Rich. If we don’t have enough RAM in nodes, then we’re doing expensive calls to slower memory, which means we can’t use them with less RAM. It isn’t every workload, but if you’re not starting from the same base configuration, then it’s not a useful comparison. I don’t mean to sound crass here, but the reality of modern data centers is that machines are not running one application and one workload. They’re running many applications, and it changes all the time. Hell, you’re talking about 4 DIMMs in a system? Everything else, like the network and storage, needs to be the same since they’re external devices. It isn’t hard to reduce the numbers by 20 watts or whatever for all four DIMMs if you want to arrive at a conclusion.

Oh, and let’s not forget the obvious here: everyone who does data center planning needs to know how much power nodes nominally use. You have rack, aisle, room, and facility-level power and cooling budgets, so knowing how much a node uses is really useful.
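For the planning use case, a node’s nominal power translates directly into how many nodes fit under a power budget. A minimal sketch, assuming a hypothetical 10 kW rack budget and an optional headroom fraction; the 550W and 900W per-node figures echo numbers mentioned earlier in the comments and are used only for illustration.

```python
import math

def nodes_per_budget(budget_w: float, node_nominal_w: float, headroom: float = 0.0) -> int:
    """Whole nodes that fit under a power budget after reserving a headroom fraction."""
    usable_w = budget_w * (1.0 - headroom)
    return math.floor(usable_w / node_nominal_w)

RACK_BUDGET_W = 10_000  # hypothetical rack budget in watts
for node_w in (550, 900):
    print(node_w, nodes_per_budget(RACK_BUDGET_W, node_w, headroom=0.2))
```

Rounding down is the conservative choice here: a partial node cannot be deployed, and exceeding the budget is worse than leaving a little power unused.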

Great job, STH team, on these articles. We are looking at getting a TX2 quad-node system for evaluation based on your reviews, and you’ve provided a lot of great info.

I agree with John W. You did a good job of equalizing the platforms, but there are differences. For example, the Intel PCH is not the same as what you’ll find on AMD or Cavium. That PCH is a fact of the system, but it’s using power. I can see both arguments on RAM quantity for Skylake, but I’d do what you did and equalize the RAM. Otherwise, you’re showing the RAM power differences.

Thumbs up to John W!

Another interesting fact: both AMD EPYC and Marvell ThunderX2 are still on GF 14nm. This makes them one node behind Intel. I wasn’t convinced about the Qualcomm server solution, but ThunderX2 surely looks like a win.

Can’t wait to see more tests once we have production firmware and final binned CPUs, along with a better compiler.