At OCP Summit 2018, Facebook and Intel showed off Twin Lakes, the cartridge based on the Intel Xeon D-2100 series that Facebook uses for its web front end. Facebook has been using Xeon D in this capacity since the previous generation, which you can read a bit about in our piece Facebook at Open Compute Summit 2017 – 7.5 Quadrillion Web Server Instructions Per Second. At this year’s OCP Summit, we saw a few designs based on Twin Lakes that were innovative in their approach. We wanted to share more about this platform, as we even saw designs incorporating water cooling.

OCP Twin Lakes and the Intel Xeon D-2191 for Facebook

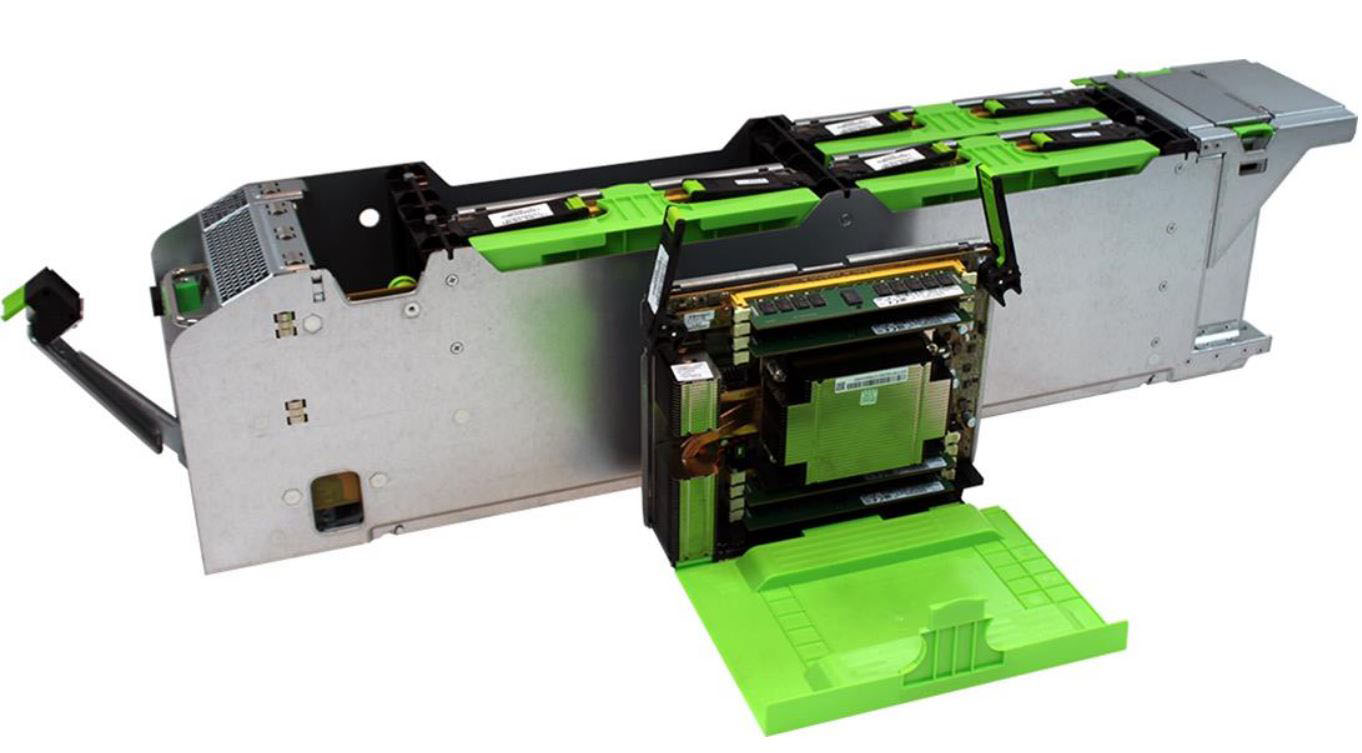

When the Intel Xeon D-2100 series was launched, Intel touted the Xeon D-2191 as the 18-core top-bin SKU. What we learned around the time of launch is that this 18-core SKU was only for select customers. When we delivered our Intel Xeon D-2100 Series Initial SKU List and Value Analysis piece, we noticed that the D-2191 lacked features like QAT and was the only SKU with 10GbE listed as disabled. Putting this together, the SKU makes a lot of sense since it was intended for the OCP Twin Lakes platform and Yosemite V2 chassis. Here is a shot of a cartridge next to a Yosemite V2 sled.

Each Yosemite V2 sled can house up to four Twin Lakes cartridges, all sharing a single network uplink. Here is a shot of the multi-host adapters used by Facebook in Yosemite V2 to aggregate bandwidth from multiple Twin Lakes Xeon D cartridges to its QSFP28 network. This allows Facebook to aggregate multiple nodes to a single 40/50/100GbE top-of-rack switch.
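To put the shared uplink in perspective, here is a rough back-of-the-envelope sketch of how much bandwidth each cartridge would get if every node in a sled pushed traffic at the same time. The even split is our simplifying assumption, not a documented property of the multi-host adapter, and the function and constant names are hypothetical.

```python
# Rough sketch: even split of one shared uplink across the cartridges in a sled.
# Assumption (not from the OCP spec): all nodes contend equally for the uplink.

UPLINK_SPEEDS_GBPS = (40, 50, 100)   # uplink speeds mentioned in the article
MAX_CARTRIDGES_PER_SLED = 4          # a Yosemite V2 sled holds up to four cartridges


def per_node_share_gbps(uplink_gbps: float, nodes: int) -> float:
    """Return the worst-case per-node share of the uplink under an even split."""
    if not 1 <= nodes <= MAX_CARTRIDGES_PER_SLED:
        raise ValueError(f"nodes must be between 1 and {MAX_CARTRIDGES_PER_SLED}")
    return uplink_gbps / nodes


def share_table() -> dict:
    """Map (uplink speed, node count) to the per-node share in Gbps."""
    return {
        (uplink, nodes): per_node_share_gbps(uplink, nodes)
        for uplink in UPLINK_SPEEDS_GBPS
        for nodes in range(1, MAX_CARTRIDGES_PER_SLED + 1)
    }


if __name__ == "__main__":
    for (uplink, nodes), share in sorted(share_table().items()):
        print(f"{uplink:>3} GbE uplink, {nodes} node(s): {share:.1f} Gbps each")
```

In practice a single node can likely burst above its even share when its neighbors are idle, so treat these figures as a floor under full contention rather than a cap.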

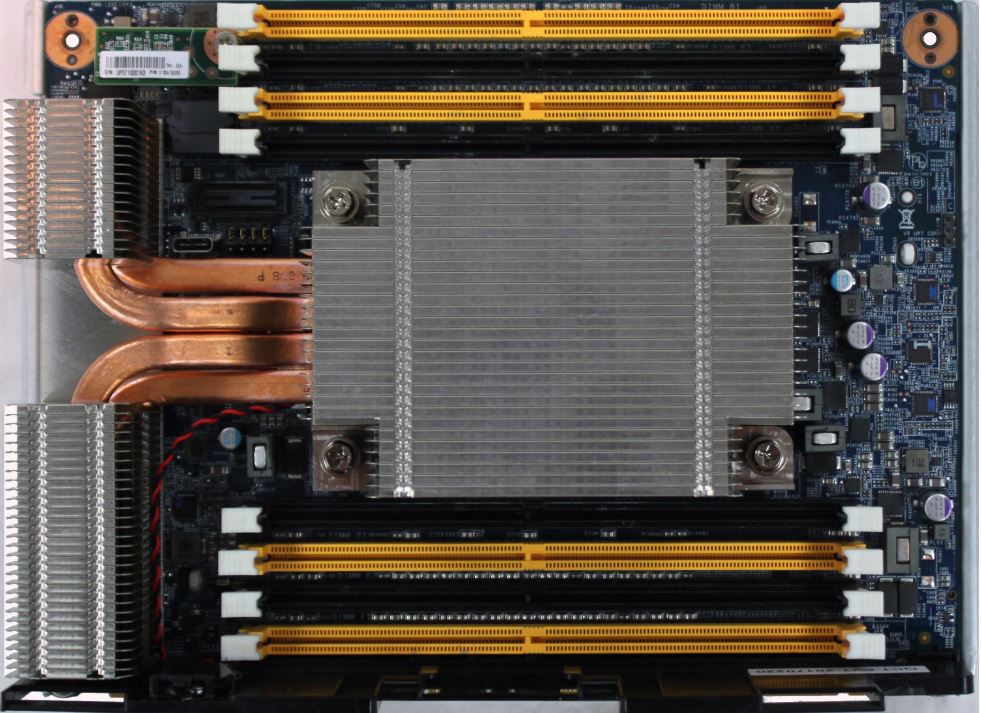

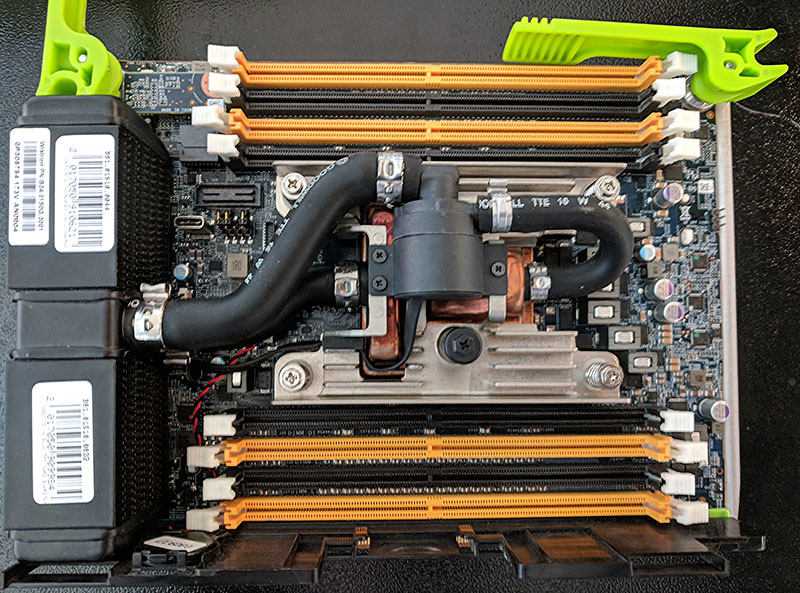

Here is what the top of an open Twin Lakes cartridge looks like (you can find the full OCP assembly here):

You can see the 8 DIMM slots supporting four memory channels. You can also clearly see the TPM and the BIOS in the upper left of that picture.

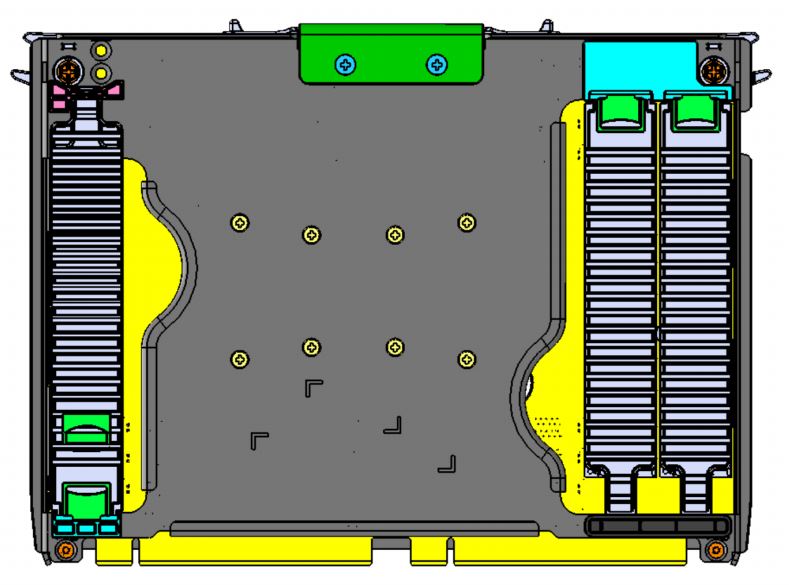

For storage, Twin Lakes has two main models. First, one can attach up to three M.2 SSDs directly to the cartridge itself. Here is what the diagram looks like:

Alternatively, one can use a Glacier Point flash card and attach up to 6x M.2 NVMe drives (2280 or 22110) to a Twin Lakes cartridge.

This gives Facebook the flexibility to run 2, 3, or 4 nodes in a common sled and attach storage as needed.

Twin Lakes is designed to be a single NUMA node web front end to deliver web services. Without having to deal with multiple NUMA node performance impacts, developers can have a consistent base to work with. Facebook, in turn, gets the ability to scale out this successful infrastructure to a large number of individual nodes.

With all of that out of the way, we saw one unique part at the OCP Summit 2018, a water-cooled version. We even grabbed some video for STH readers which will be on our YouTube channel soon.

Intel Xeon D-2191 Water-cooled Twin Lakes

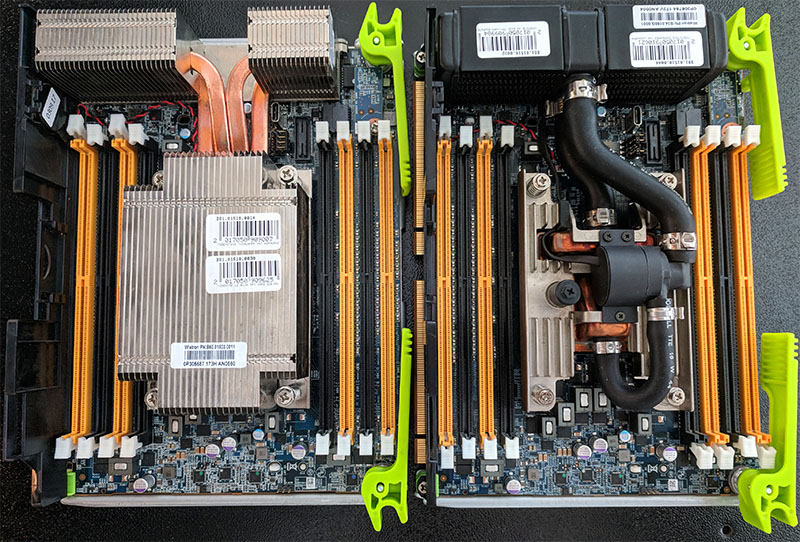

We saw two versions of Twin Lakes at the show. The more intriguing version was a water-cooled Wistron part designed to keep the 86W TDP, 18-core Intel Xeon D-2191 cool:

As you can see, there is a closed-loop system with a small radiator to move heat off of the CPU. Rumor has it that some of the large hyper-scale data centers do not like having water in their data centers, so they prefer the copper/aluminum version. Still, seeing the in-cartridge heat exchanger was a surprise. Most HPC vendors looking at liquid cooling are doing the heat exchange outside of the chassis, but the Twin Lakes design shown off has a closed-loop system so it can be serviced like a standard passively cooled version.

The sheer size of the heatsink, as well as the board’s layout, shows just how different the new chips are. The eight memory slots and large heatsink essentially fill the entire sled.

Here is a quick video zooming over the nodes:

Additional Technical Bits on Twin Lakes

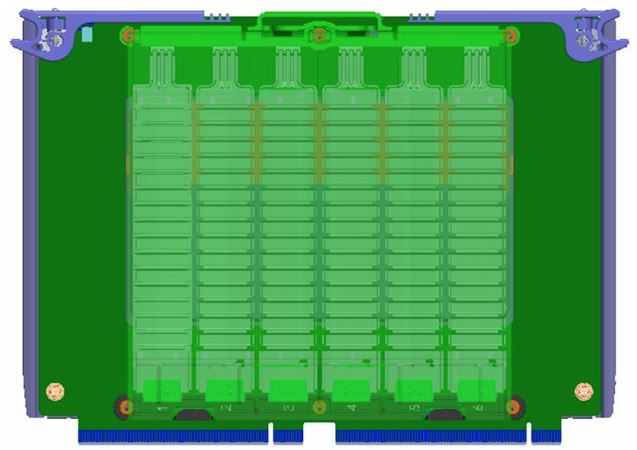

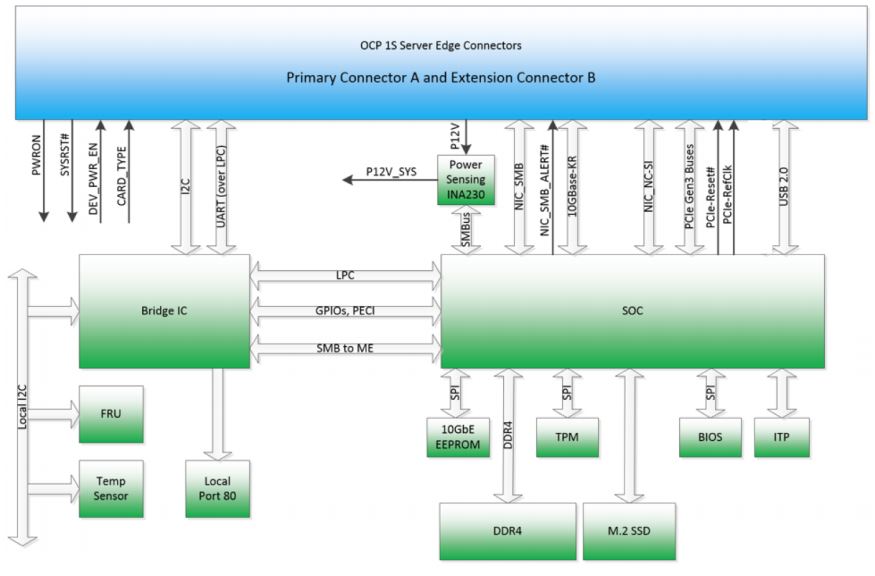

We wanted to show a few technical details we learned about the platform. For reference, here is the block diagram for Twin Lakes.

Even though the cartridge is compact, it has a surprising amount of I/O, more than the previous generation Intel Xeon D-1500 series. Twin Lakes uses all 32x PCIe 3.0 lanes from the SoC. It is also using 9 of the 20 HSIO lanes from what is essentially the integrated Lewisburg PCH for PCIe 3.0 as well. That is a significant jump from the previous generation that had 24x PCIe 3.0 lanes plus 8x PCIe 2.0 lanes.
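The lane counts above can be tallied quickly. This is just a sketch of the arithmetic using the figures quoted in this article; the constant and function names are ours, and it makes no claim about how the remaining HSIO lanes are used.

```python
# Tally of the PCIe lane figures quoted in the article (names are illustrative).

SOC_PCIE3_LANES = 32        # PCIe 3.0 lanes from the Xeon D-2100 SoC, all used
HSIO_LANES_TOTAL = 20       # HSIO lanes on the integrated PCH
HSIO_LANES_AS_PCIE3 = 9     # HSIO lanes Twin Lakes uses for PCIe 3.0

PREV_GEN_PCIE3_LANES = 24   # previous generation: PCIe 3.0 lanes
PREV_GEN_PCIE2_LANES = 8    # previous generation: PCIe 2.0 lanes


def twin_lakes_pcie3_lanes() -> int:
    """PCIe 3.0 lanes used by Twin Lakes: SoC lanes plus HSIO lanes run as PCIe."""
    return SOC_PCIE3_LANES + HSIO_LANES_AS_PCIE3


def hsio_lanes_not_used_for_pcie3() -> int:
    """HSIO lanes not allocated to PCIe 3.0 in this design."""
    return HSIO_LANES_TOTAL - HSIO_LANES_AS_PCIE3


def prev_gen_total_lanes() -> int:
    """Previous generation total, mixing PCIe 3.0 and slower PCIe 2.0 lanes."""
    return PREV_GEN_PCIE3_LANES + PREV_GEN_PCIE2_LANES


if __name__ == "__main__":
    print("Twin Lakes PCIe 3.0 lanes:", twin_lakes_pcie3_lanes())
    print("HSIO lanes not used for PCIe 3.0:", hsio_lanes_not_used_for_pcie3())
    print("Previous generation lanes (3.0 + 2.0):", prev_gen_total_lanes())
    print("Extra PCIe 3.0 lanes vs. previous generation:",
          twin_lakes_pcie3_lanes() - PREV_GEN_PCIE3_LANES)
```

Counting only PCIe 3.0, the cartridge goes from 24 lanes to 41, and none of the new lanes are limited to PCIe 2.0 speeds.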

Final Words

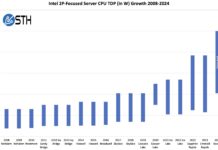

If you were looking at a potential area that ARM players such as Qualcomm and Ampere (eMAG) could disrupt, this is the form factor. The majority of servers run in dual-socket configurations, but the single-socket, single NUMA node web front end is ripe for ARM competition. The web stack is based largely on open source software that has already been ported and optimized for ARM. At the same time, if you want to see what the major players are doing today, Facebook has a sophisticated hardware and ops team that is using Intel Xeon D for a reason. One of the great things about Facebook’s server teams is that their work ends up in OCP documentation and open source projects so those outside of the company can benefit from their progress. Twin Lakes and Yosemite V2 are great examples of how Facebook innovation in OCP leads to designs others can leverage.

There’s a lot of talk about servers using ARM.

There’s a lot of silicon and hardware deployed in the real world for servers using x86.

Water-cooling-cool. I wonder what the management of these is like. If you wanted to buy a rack and use them think it would suck?

>The eight memory channels and large heatsink essentially fill the entire sled.

I believe there’s actually only 4 memory channels with 2 DIMM slots per channel.