At FMS 2025, we saw the Supermicro ARS-121L-NE316R. This is an NVMe storage server based on the NVIDIA Grace Superchip. While an NVMe storage server in 2025 may not sound exciting, consider this: the NVIDIA Grace Superchip was not designed to be used in storage servers.

Supermicro ARS-121L-NE316R at FMS 2025

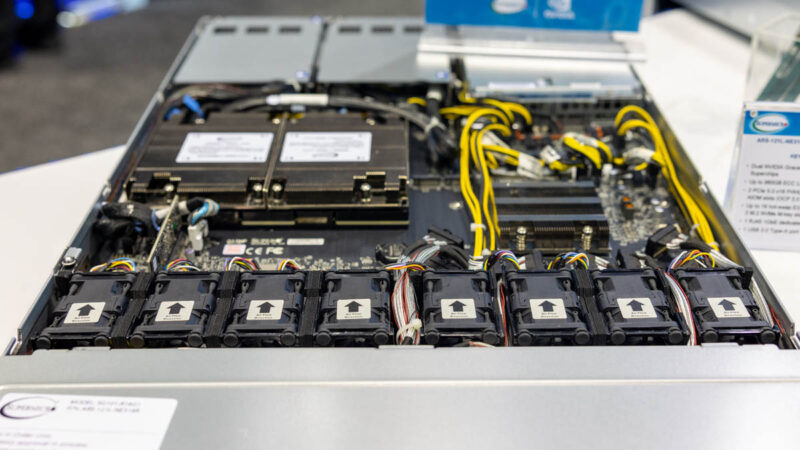

Taking a look at the front of this system, we can see it has sixteen drive bays, as well as a lot of room for airflow in the 1U faceplate.

Each of the drive bays is E3.S 1T, part of the EDSFF revolution that will effectively be complete in 2026 with the introduction of PCIe Gen6, since U.2 connectors are effectively not suitable for PCIe Gen6 speeds.

We highlighted this EDSFF transition back in 2021, but the industry now needs to start moving quickly to EDSFF given 2026’s PCIe Gen6 servers. Five years on an enterprise/data center timeline is a quick transition.

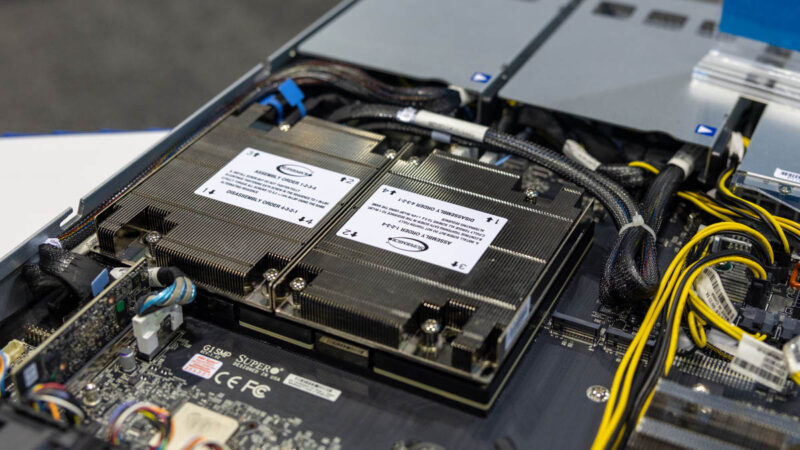

Supermicro and other server vendors all have EDSFF systems at this point, but what makes this FMS 2025 system notable is that it is powered by an NVIDIA Arm processor. That processor is not a BlueField-1 or BlueField-3 DPU. Instead, it is an NVIDIA Grace Superchip.

The NVIDIA Grace Superchip offers a solid amount of performance with up to 144 Arm cores. It also has up to 960GB of LPDDR5X memory in its lower memory bandwidth but higher memory capacity configuration.

The notable part here, however, is that the NVIDIA Grace CPUs are not designed exactly as replacements for traditional x86 server processors. We went into this in more detail in December 2024 in our Substack, but a great example is that the NVIDIA Grace CPUs do not bifurcate PCIe root complexes down to x4 for storage. With Grace, NVIDIA was only focused on providing connectivity for higher-performance devices like GPUs and NICs.

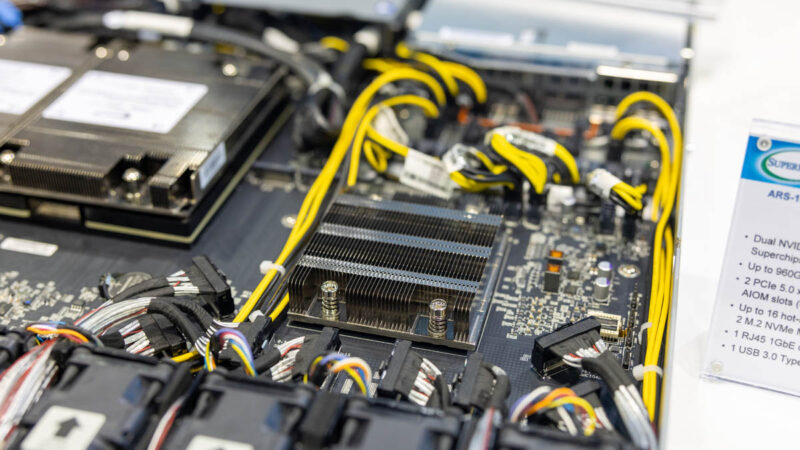

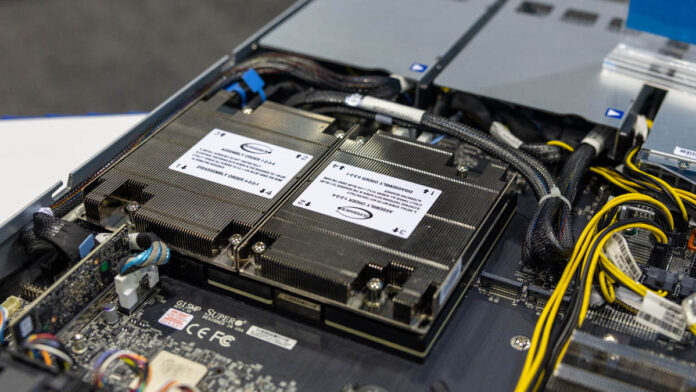

To get around this, Supermicro added a PCIe switch next to the NVIDIA Grace Superchip CPU. You can see the MCIO connections being made in a dense connector array near this switch chip, and also two PCIe Gen5 x4 M.2 slots between the NVIDIA module and the PCIe switch. The PCIe switch gives Supermicro the PCIe x4 bifurcation it needs for SSDs, and PCIe switches can also be used to replicate other common storage features found on x86 CPUs.
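To put rough numbers on why a switch is needed, here is a minimal sketch of the lane budget for the sixteen x4 drive bays. The drive count and link width come from the system described above. The upstream link width between Grace and the switch is not something we have from the show floor, so `upstream_lanes=32` is purely a hypothetical placeholder, and the bandwidth figures are theoretical PCIe Gen5 line rates rather than measured throughput.

```python
# Rough lane and bandwidth budget for drive bays sitting behind a PCIe switch.
GEN5_GT_PER_S = 32.0   # PCIe Gen5 raw signaling rate per lane, in GT/s
ENCODING = 128 / 130   # 128b/130b line encoding used by PCIe Gen3 through Gen5


def lane_gb_per_s(gt_per_s=GEN5_GT_PER_S, encoding=ENCODING):
    """Approximate one-direction bandwidth of a single lane in GB/s."""
    return gt_per_s * encoding / 8


def switch_budget(drives, lanes_per_drive, upstream_lanes):
    """Summarize downstream lane demand against a given upstream link width."""
    downstream_lanes = drives * lanes_per_drive
    per_lane = lane_gb_per_s()
    return {
        "downstream_lanes": downstream_lanes,
        "downstream_gb_per_s": downstream_lanes * per_lane,
        "upstream_gb_per_s": upstream_lanes * per_lane,
        "oversubscription": downstream_lanes / upstream_lanes,
    }


# 16 bays at x4 each are from the article; upstream_lanes=32 is an assumption.
budget = switch_budget(drives=16, lanes_per_drive=4, upstream_lanes=32)
for name, value in budget.items():
    print(f"{name}: {value:.2f}")
```

The sixteen bays alone need 64 downstream lanes presented as sixteen separate x4 links, which is exactly the kind of fan-out a CPU that does not bifurcate down to x4 cannot provide on its own.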

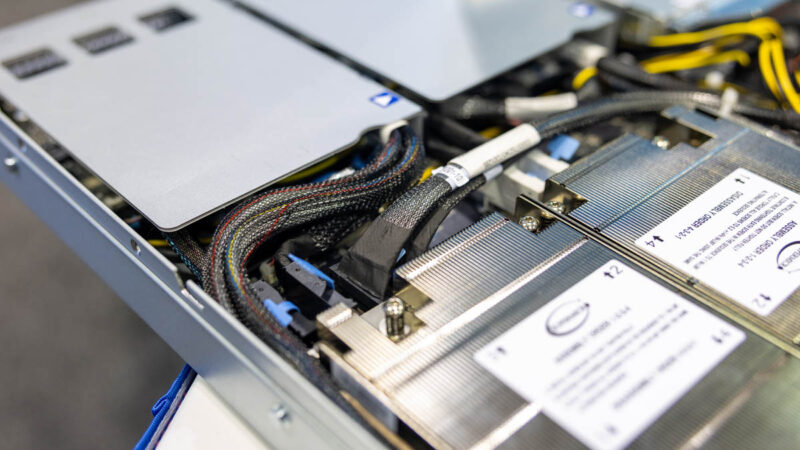

Behind the NVIDIA Grace Superchip, there are more MCIO cables.

The default configuration has connections for two full-height PCIe add-in cards for expansion, but there is an option for dual AIOM (Supermicro’s flavor of the OCP NIC 3.0 form factor). These do not need to go through the PCIe switch since NVIDIA Grace can handle PCIe Gen5 x16 links.

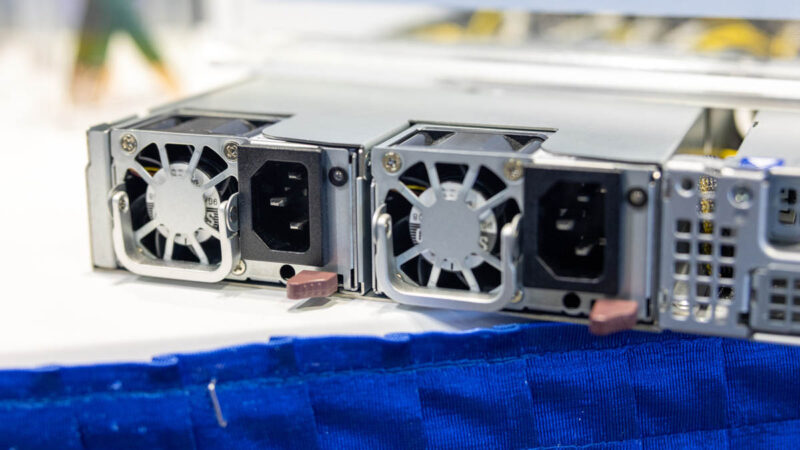

As we would also expect, there are redundant power supplies. These are 1.6kW 80Plus Titanium power supplies.

We did not get a great photo of it at the show, but there is a single USB Type-A port, an out-of-band management port, and a miniDP display connection in the rear corner opposite the power supplies.

Final Words

Overall, this is a neat system since it is so different. Realistically, a 500W dual 72-core (144 cores total) processor module for 16 NVMe SSDs is a lot. If you wanted a higher-performance Arm CPU with plenty of memory bandwidth and also a lot of storage, then this is a neat platform. It also provides a compute platform with more locally attached storage than most other NVIDIA Grace offerings. This is unlikely to be a best-seller for Supermicro, but it is a really interesting design.
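As a quick back-of-the-envelope check on "a lot," the figures above can be divided across the drive bays. This is only arithmetic on the numbers quoted in this article (500W module, 144 cores, 16 SSDs). The helper name `per_drive` is made up for illustration, and the result says nothing about actual power draw under a storage workload.

```python
def per_drive(total, drives):
    """Split a module-level total evenly across the drive bays."""
    return total / drives


DRIVES = 16          # E3.S bays in the front of the chassis
MODULE_WATTS = 500   # quoted Grace Superchip module power
MODULE_CORES = 144   # two 72-core Grace CPUs

cores_per_drive = per_drive(MODULE_CORES, DRIVES)
watts_per_drive = per_drive(MODULE_WATTS, DRIVES)

print(f"cores per SSD: {cores_per_drive}")
print(f"module watts per SSD: {watts_per_drive}")
```

That works out to nine Arm cores and a little over 31W of CPU module budget for every SSD in the chassis.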

Is it possible that future CPU designs by NVIDIA will soon be in production and result in an abundance of old-stock Grace Superchips?

Maybe there will also be a bunch of Grace superchips in the recycled market when the AI clouds update.

My impression is Supermicro has a talented engineering team with an eye for building long-lived systems. From this point of view, the synergy between the Grace Superchip, the onboard memory, and its use in a storage server is likely to result in an efficient use of resources.

It seems to be better used as a database server (in a 960GB RAM configuration) if the price is reasonable (which is far from certain).

Dumb question: Aren’t there any trunk style MCIO connections/connectors?

I.e., a small number of much larger connectors connecting to a backplane to drive the SSDs?

It seems like most of the MCIO stuff I’ve seen is 1:1 connector to endpoint (drive, NIC, etc.) connectivity.

@James A couple of minutes of searching finds well-known companies, so there must be thousands of lesser-known ones too. When buying one of the endpoints (motherboard or SSD), ask where they get their cables; it’s only when the cable is all you want that you have to find a distributor. They’ll be commonplace next year.

Search: “SFF-1016 to SFF-1002” turned up (1:2 and 1:4, your terminology):

https://www.highpoint-tech.com/nvme-accessories

https://www.amphenol-cs.com/product-series/edsff-e1-e3-high-speed.html