Today, TACC announced its new supercomputer dubbed, “Stampede3”. This is another supercomputer using the new Intel Xeon Max HBM2e-equipped CPUs. The new system will also utilize a new generation of Omni-Path, at 400Gbps speeds, much faster than OPA100. What is more, it is a new win for the Dell PowerEdge XE9640’s with the Data Center Max series of GPUs instead of NVIDIA GPUs.

TACC Stampede3 4PF Using Intel Xeon Max and 400GBps OPA

Here are the key specs from Intel on the new NSF system:

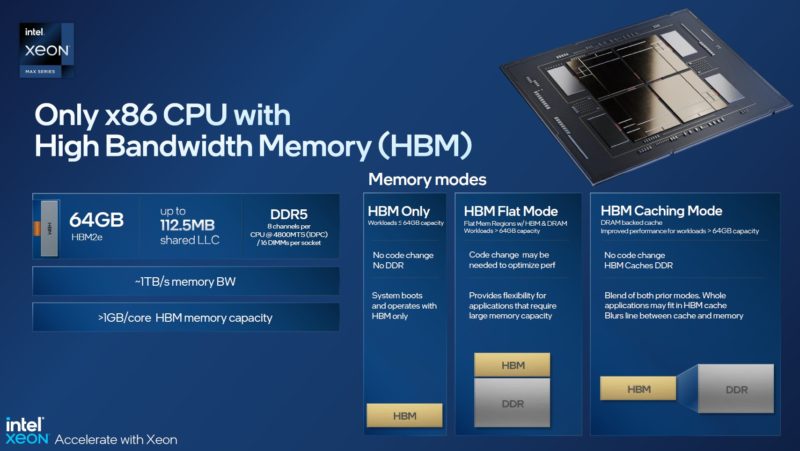

- A new 4 petaflop capability for high-end simulation: 560 new Intel Xeon CPU Max Series processors with high bandwidth memory-enabled nodes, adding nearly 63,000 cores for the largest, most performance-intensive compute jobs.

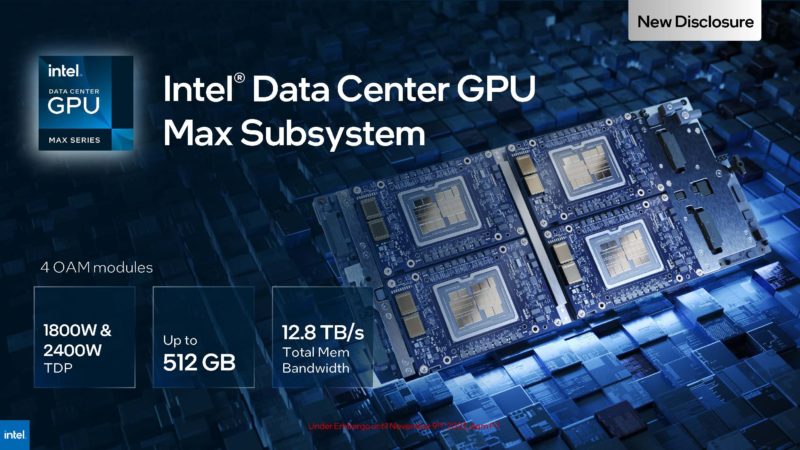

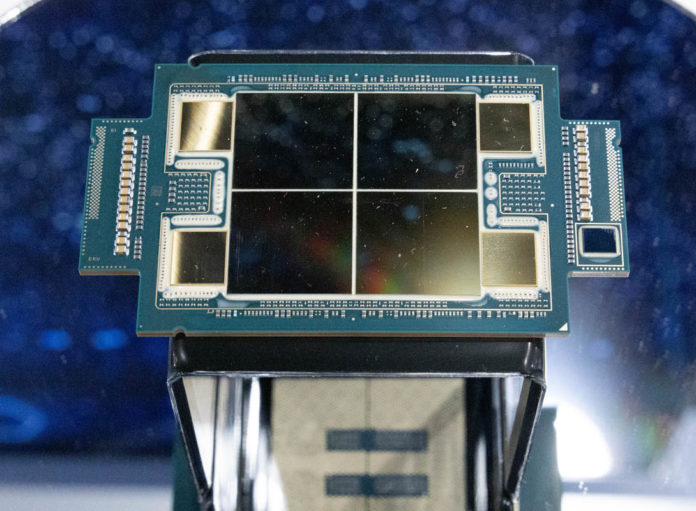

- A new graphics processing unit/AI subsystem including 10 Dell PowerEdge XE9640 servers adding 40 new Intel Data Center GPU Max Series processors for AI/ML and other GPU-friendly applications.

- Reintegration of 224 3rd Gen Intel Xeon Scalable processor nodes for higher memory

applications (added to Stampede2 in 2021). - Legacy hardware to support throughput computing — more than 1,000 existing Stampede2 2nd Gen Intel Xeon Scalable processor nodes will be incorporated into the new system to support high-throughput computing, interactive workloads, and other smaller workloads.

- The new Omni-Path Fabric 400 Gb/s technology offering highly scalable performance through a network interconnect with 24 TB/s backplane bandwidth to enable low latency, excellent scalability for applications, and high connectivity to the I/O subsystem.

- 1,858 compute nodes with more than 140,000 cores, more than 330 terabytes of RAM, 13 petabytes of new storage, and almost 10 petaflops of peak capability. (Source: Intel)

Beyond the new interconnect, and new Dell-Intel GPU compute nodes, there is a bit more context. The Dell XE9640 is the update to the Dell EMC PowerEdge XE8545 we reviewed. This will be an all-Intel flavor. As a quick note, given that Dell started from a UT Austin dorm room and Michael Dell is a huge donor, TACC’s supercomputers tend to be Dell-Intel so we would not take this as a competitive win for either.

First, TACC is adding 3rd Gen Intel Xeon Scalable nodes from 2021 into a Q4 2023/ 2024 supercomputer. Second, there are even 2nd Gen Intel Xeon Scalable Cascade Lake systems in the mix. That is quite amazing to span 4+ years of CPU history in a single machine. Texas power is relatively inexpensive, so perhaps that is the reason.

Final Words

Overall, this is not a huge number of CPUs or the largest supercomputer in the world. It is also a decent-sized system with a mix of new and old systems. New technologies like a 400Gbps Omni-Path are exciting as well.

Stay tuned to STH as we will have our Xeon Max testing piece out in early August. We just re-shot a portion of the video and are editing that into the article/ video based on questions we have received post-Genoa-X launch.

We also had a few words from our Editor-in-Chief on this one to add.

The original Stampede is actually a machine that helped launch STH. It was the first time I took time off of my consulting work to take a trip down to Austin when I was still doing Xeon reviews for Tom’s Hardware. Stampede with the original Intel Xeon Xeon Phi was the first supercomputer I ever visited. Seeing that was one of the experiences that led me to put more effort into STH and servers. Who would have guessed I would end up moving to Austin (albeit I have not gotten an invite to go back to TACC since.) – Patrick

I thought Omnipath was dead or sold off?

sold to Cornelis Networks

HBM Flat Mode: “Deja vu all over again”…It reminds me of a project from 30 years ago, writing in X86 asm & C in a “MUD” (Multi-User MS-DOS (don’t ask)) environment…I rewrote the main DB internal sort routine in C from Asm (and it ran faster)…Why? Because I used 8K of that sacred lower 640K for a buffer doing a write via the OS to (cached) disk. I was admonished by management for using a bit of said sacred RAM.

So same(ish) scenario 3 decades later, who get’s to use that first 64-GB of HBM when in flat mode? Hmmm.

Not sure why tiny SC get coverage but here is another new one:

https://centers.hpc.mil/news/index.html#blueback