NVIDIA has been moving the “Titan” line up-market for generations. The price of entry is now $2500 with the NVIDIA Titan RTX. For that $2500 you get the biggest RTX GPU in terms of both compute and memory. For the AI and deep learning crowd, these are not just GPUs to drop into a desktop one at a time. We expect people to buy them in pairs for their workstations, which will quickly deplete the supply. At the same time, the Titan RTX is a slightly different take on the Titan platform than the previous Titan V.

NVIDIA Titan RTX

The NVIDIA Titan RTX uses the same type of design we saw with the NVIDIA RTX 2080 Ti Founders Edition. That means two large fans and a huge vapor chamber heatsink, but no exhaust out of the rear of the chassis. That is a big deal because traditional workstation airflow is not designed for this pattern. You also get a gold-finished card to show off the fact that you have the $2500 Titan RTX, not the RTX 2080 Ti.

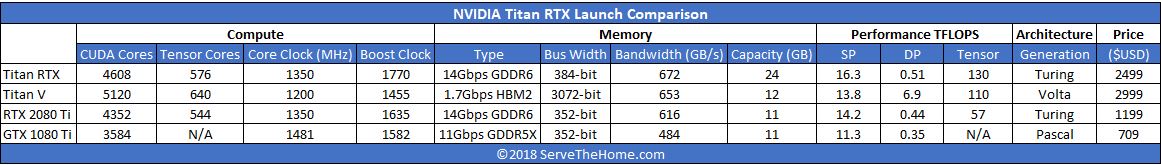

Specs are what matter. Here the Titan RTX has more CUDA and Tensor cores than the RTX 2080 Ti. Its Tensor core count is still not at Titan V levels, but it is close. Those Tensor cores also run at full rate for mixed-precision work (FP16 with FP32 accumulate), roughly twice the per-Tensor-core performance of the GeForce RTX parts. You also get 24GB of GDDR6 memory, which provides both high bandwidth and a large memory footprint for training larger models.

The main area where the Titan RTX falls short of its predecessor is double precision. Like the RTX 2080 Ti and the GTX 1080 Ti before it, the Titan RTX has cut-down double precision performance. The Titan V had significant double precision performance and will remain the go-to card for double precision desktop compute.

Perhaps the most intriguing part of the NVIDIA Titan RTX is NVLink support. Two cards can be linked together to form a large pool of compute resources, with 48GB of memory accessible across the high-speed link.

Final Words

If history is any indication, this card will be hard to get: the Titan V sold out and was supply constrained. We expect the NVIDIA Titan RTX to do the same as AI researchers flock to the 24GB of memory and full Tensor core accumulate performance. Given the NVLink feature and the 24GB per card memory size, we think workstation AI researchers are going to buy these in pairs. NVIDIA has been emphasizing NVLink over PCIe 3.0 as its preferred scale-up solution.

At $2500 per card, this is not intended to be a gaming device. The NVIDIA Titan RTX has the feature set to get deep learning and AI researchers to upgrade. That is what NVIDIA is counting on with the Titan RTX: the ability to keep selling $2500 cards, or realistically $5000 pairs, to each user on an annual or semi-annual basis. For highly paid researchers, $5000 is about the lowest-cost 48GB NVLink solution on the market that also supports Tensor cores, so we expect this stock to be consumed quickly as well. If you are in this market, you are probably refreshing the ordering page and have signed up for notifications. NVIDIA is enjoying being the leader in the desktop training market and continues to put out cards like this to maintain that lead.