Proxmox VE 5.3 is now out. There are a number of new features in the release, including one that we have wanted the Proxmox team to cover for a long time: a storage GUI. For those who are unfamiliar with Proxmox VE, it is an open source virtualization (KVM) and container (LXC) platform that handles clustering and HA duties. Proxmox VE is also well known for supporting a number of different open source storage solutions, such as ZFS for reliable scale-up storage and both Ceph and Gluster for scale-out storage. Best of all, it is free to use, with a reasonable support contract option.

Proxmox VE 5.3 New CephFS and Storage GUI

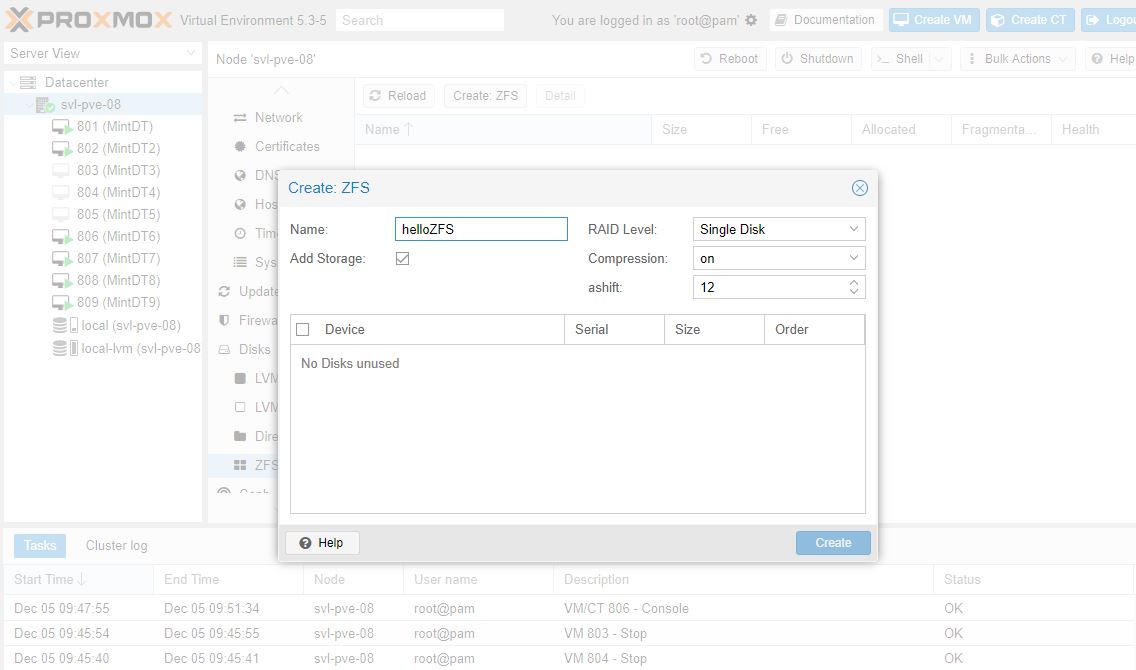

While Proxmox VE 5.2 added cloud-init and LE certificates among other features to make virtualization better, the new Proxmox VE 5.3 builds on storage features. Proxmox VE 5.3 has added a new storage GUI that our Editor-in-Chief outed back in September. This is a huge deal as it means that users no longer need to go into the command line to create ZFS pools and then add the ZFS storage to the virtualization node or cluster.

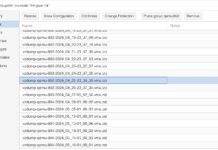

The storage GUI is still basic for creating volumes. It is not sufficient, at this time, to operate as a full storage management replacement for something like FreeNAS. Still, if you just want to make a Ceph cluster or ZFS RAID-Z2 array for VM storage, Proxmox VE can now set everything up without a trip to the CLI. Proxmox VE already has health monitoring functions and alerting for disks. If a drive fails, you are notified by e-mail by default.
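If you are sizing a RAID-Z2 array for VM storage before clicking through the GUI, a rough back-of-the-envelope estimate can help. The sketch below is our own illustration, not something from the Proxmox tooling: the function name `raidz_usable_bytes` is hypothetical, and it assumes equal-sized disks while ignoring ZFS metadata, padding, and reserved space, so real-world usable capacity will be somewhat lower.

```python
def raidz_usable_bytes(disk_count: int, disk_bytes: int, parity: int = 2) -> int:
    """Rough usable capacity of a single RAID-Z vdev.

    Assumption: all disks are the same size and ZFS overhead
    (metadata, padding, reserved space) is ignored.
    parity=2 corresponds to RAID-Z2.
    """
    if parity not in (1, 2, 3):
        raise ValueError("RAID-Z parity level must be 1, 2, or 3")
    if disk_count <= parity:
        raise ValueError("a RAID-Z vdev needs more disks than parity devices")
    # Parity consumes the equivalent of `parity` disks; the rest holds data.
    return (disk_count - parity) * disk_bytes


TB = 10**12  # decimal terabyte, as printed on drive labels

# Six 4 TB drives in RAID-Z2: two drives' worth of space goes to parity.
print(raidz_usable_bytes(6, 4 * TB) // TB, "TB usable (approximate)")
```

With six 4 TB drives, the estimate comes out to roughly 16 TB before ZFS overhead.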

CephFS integration is a big feature. Proxmox VE adopted Ceph early. Ceph is one of the leading open source scale-out storage solutions, used by many companies and private clouds. Ceph previously offered both object and block storage. One of its newest features is the Ceph File System, or CephFS, a POSIX-compliant filesystem that stores its data in a Ceph Storage Cluster.

Closing Thoughts

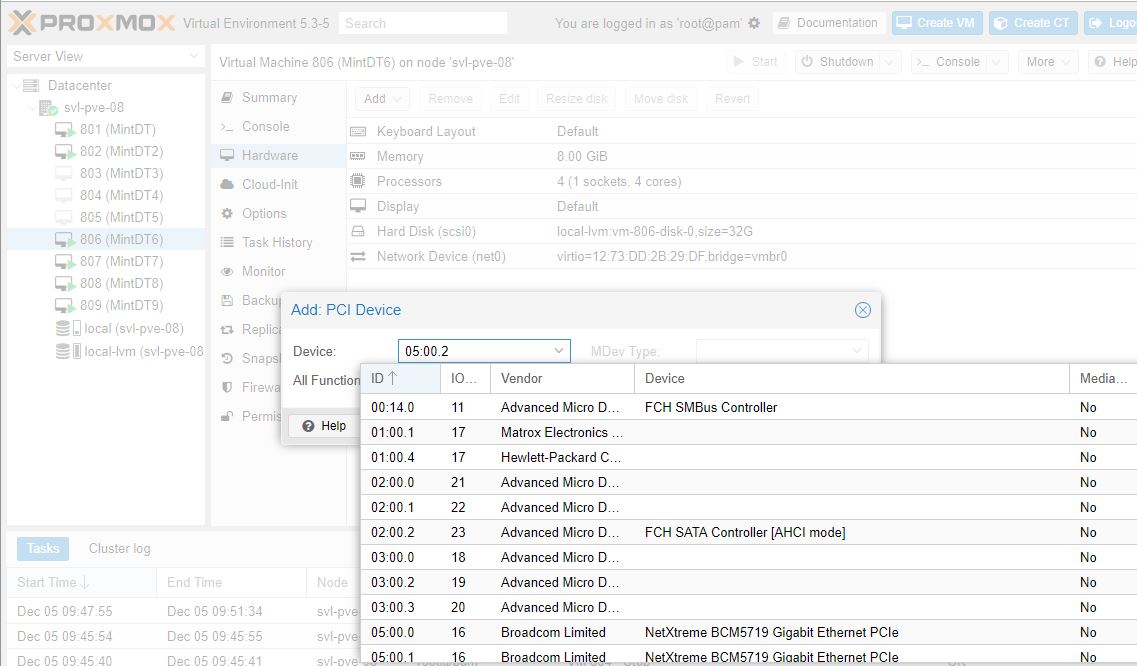

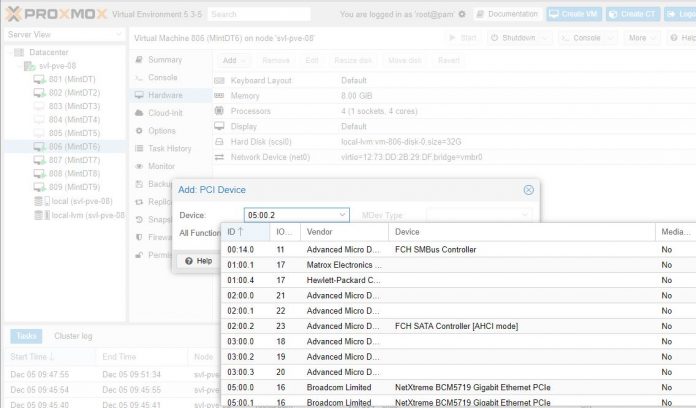

Current kernels and packages are a hallmark of Proxmox VE, which cannot be said about many other products. Also, Proxmox can now support Docker in a nested container, PCIe passthrough via the GUI, and even experimental emulation of ARM virtual machines. CephFS integration is great for those who are running Proxmox VE hyper-converged clusters. This is perhaps one of the best, if not the best, open source alternatives to VMware ESXi and vSAN.

Here is where Proxmox VE 5.3 gets really interesting. By putting effort into the storage GUI, Proxmox VE is very close to having a FreeNAS competitor. Proxmox VE uses modern Linux kernels, which means that things like hardware support are much better than on FreeBSD. Supporting not just ZFS, but also a number of scale-out storage solutions, as well as VM and container host clustering, is a huge advantage. Once you get beyond one virtualization host and want to move to a virtualization cluster, Proxmox VE has a complete solution. Proxmox VE is missing a lot of the permissions and user management features that FreeNAS has, but if their team wants to, they are one or two dot releases on their storage and user GUIs away from having a compelling alternative to FreeNAS, and on Linux.

You can see what we were doing with STH Proxmox VE even before the latest updates in Create the Ultimate Virtualization and Container Setup (KVM, LXC, Docker) with Management GUIs.

Below are the key new features of Proxmox VE 5.3.

New Proxmox VE 5.3 Features

Here is a slightly abridged list of features.

- Based on Debian Stretch 9.6

- Kernel 4.15.18

- QEMU 2.12.1

- LXC 3.0.2

- ZFS 0.7.12

- Ceph 12.2.8 (Luminous LTS, stable), packaged by Proxmox

- Installer with ZFS: no swap space is created by default. Instead, an optional limit on the used space can be defined in the advanced options, leaving unpartitioned space at the end for a swap partition.

- Disk Management on GUI (ZFS, LVM, LVMthin, xfs, ext4)

- Create CephFS via GUI (MDS)

- CephFS Storage plugin (supports backups, templates and ISO images)

- LIO support for ZFS over iSCSI storage plugin

- ifupdown2 package and partial GUI support

- Delete unused disk images on the storage view

- Enable/disable the local cursor in noVNC

- Enable/disable autoresize in noVNC

- Edit /etc/hosts via GUI, which is necessary whenever the IP or hostname is changed

- Editable VGA Memory for VM, enables higher resolutions (UHD) for e.g. SPICE

- VM Generation ID: Users can set a ‘vmgenid’ device, which enables safe snapshots, backup and clone for various software inside the VM, like Microsoft Active Directory. This vmgenid will be added to all newly created VMs automatically. Users have to manually add it to older VMs.

- qemu-server: add ability to fstrim a guest filesystem after moving a disk (during move_disk or migrate with local disks)

- Emulating ARM virtual machines (experimental, mostly useful for development purposes)

- vGPU/MDev and PCI passthrough: GUI for configuring PCI passthrough, which also enables the use of vGPUs (aka mediated devices) like Intel KVMGT (aka GVT-g) or Nvidia's vGPUs.

- pvesh rewrite to use the new CLI Formatter framework – providing a configurable output format for all API calls (formats include: json, yaml and a pleasant table output with borders). Provides unified support for json output for the PVE CLI Tools.

- Nesting for Containers (privileged & unprivileged containers): Allows running lxc, lxd or docker inside containers, also supports using AppArmor inside containers

- Mounting CIFS/NFS inside containers (privileged): Allows using samba or nfs shares directly from within containers

- Improved reference documentation
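The pvesh rewrite in the list above is handy for scripting, since JSON output is easy to consume from other tools. Here is a minimal sketch of what that might look like from Python. The helper names (`build_pvesh_command`, `run_pvesh`, `online_nodes`) and the sample data are our own hypothetical illustrations, and we assume the `/nodes` listing returns objects with `node` and `status` fields. `run_pvesh` only works on a Proxmox VE host where `pvesh` is installed.

```python
import json
import subprocess
from typing import Any, Dict, List


def build_pvesh_command(api_path: str, output_format: str = "json") -> List[str]:
    """Build the argument list for a read-only pvesh API call."""
    return ["pvesh", "get", api_path, "--output-format", output_format]


def run_pvesh(api_path: str) -> Any:
    """Run pvesh on a Proxmox VE node and decode its JSON output.

    Only meaningful on a host where the pvesh CLI is available.
    """
    result = subprocess.run(
        build_pvesh_command(api_path),
        capture_output=True,
        text=True,
        check=True,
    )
    return json.loads(result.stdout)


def online_nodes(nodes: List[Dict[str, Any]]) -> List[str]:
    """Return the sorted names of cluster nodes reported as online."""
    return sorted(n["node"] for n in nodes if n.get("status") == "online")


# Hypothetical sample data standing in for `pvesh get /nodes` output,
# so the parsing logic can be tried without a Proxmox VE host.
SAMPLE = '[{"node": "pve1", "status": "online"}, {"node": "pve2", "status": "offline"}]'

print(online_nodes(json.loads(SAMPLE)))
```

On a real node, `online_nodes(run_pvesh("/nodes"))` would replace the sample data.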

You can learn more here.

Without a trip to the GUI, eh?

Why don’t they have a dev build to test the latest stuff?

Based on Debian 10

Kernel 5.1

QEMU 4.0

LXC 3.1.0 / LXD 3.12

ZFS 0.8

Ceph 14.2.0