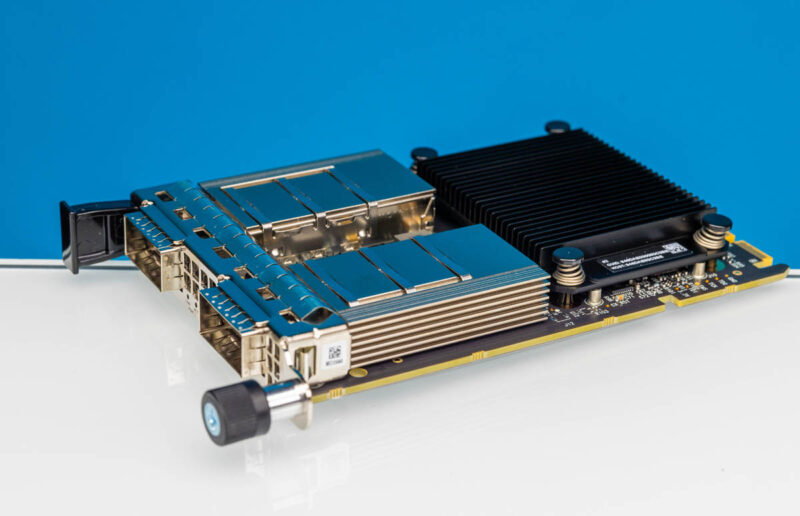

Today we have a fun one in our NIC review series. We are going to take a look at the NVIDIA ConnectX-7 OCP NIC 3.0 form factor card. This is a PCIe Gen5 x16 card, so it can sustain two ports of 200GbE or NDR200 InfiniBand. Moreover, instead of using a standard PCIe add-in card form factor, it fits into the OCP NIC 3.0 form factor that most server vendors use. Let us get to the hardware.

As a quick note, we have been asking NVIDIA to get us cards for some time, and they finally arrived so we could do this piece. Thank you to the NVIDIA folks for making this happen.

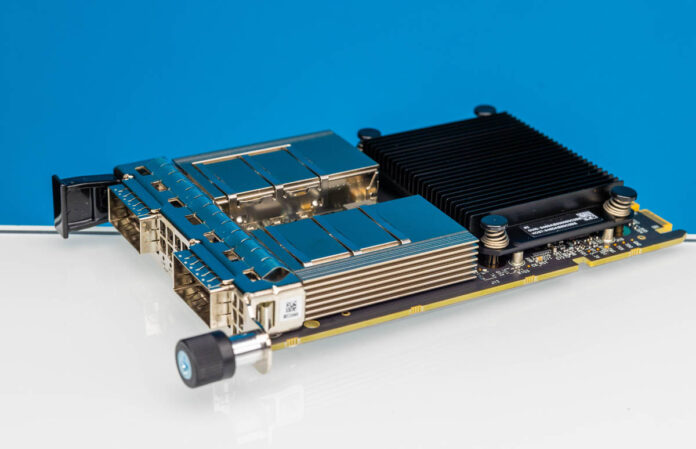

NVIDIA ConnectX-7 OCP NIC 3.0 Hardware

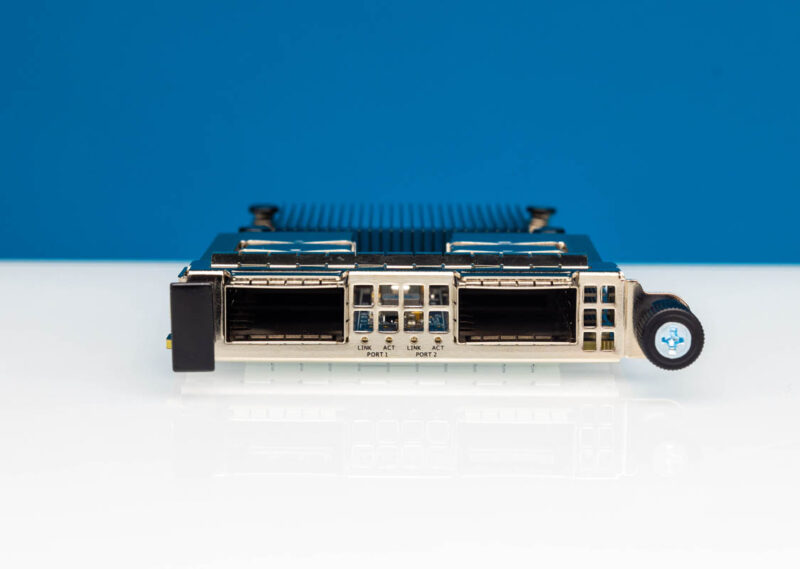

Perhaps the biggest features of the ConnectX-7 are the dual QSFP112 ports for up to 200Gbps networking. Since the CX753436M is a VPI card, these ports can carry either Ethernet or NDR200 InfiniBand traffic.
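A QSFP112 cage is nominally a 400Gbps part, so running 200Gbps per port leaves headroom in the cage itself. The sketch below shows the lane arithmetic behind that, assuming four PAM4 electrical lanes per cage and the standard 53.125Gb/s (50G-class) and 106.25Gb/s (100G-class) per-lane line rates; the `CAGES` table and `cage_capacity` helper are illustrative names, not anything from NVIDIA's tooling.

```python
BITS_PER_PAM4_SYMBOL = 2  # PAM4 encodes 2 bits per symbol
LANES_PER_CAGE = 4        # QSFP-family cages use four electrical lanes

# cage type: (nominal data rate per lane in Gb/s, line rate per lane in Gb/s incl. FEC overhead)
CAGES = {
    "QSFP56": (50, 53.125),
    "QSFP112": (100, 106.25),
}


def cage_capacity(name: str) -> dict:
    """Return the nominal port speed and per-lane signalling figures for a QSFP cage type."""
    nominal_lane, line_rate = CAGES[name]
    return {
        "nominal_port_gbps": nominal_lane * LANES_PER_CAGE,
        "lane_line_rate_gbps": line_rate,
        # PAM4 halves the symbol (baud) rate relative to the bit rate.
        "lane_symbol_rate_gbd": line_rate / BITS_PER_PAM4_SYMBOL,
    }


if __name__ == "__main__":
    for cage in CAGES:
        print(cage, cage_capacity(cage))
    # The card in this review runs each QSFP112 port at up to 200Gbps.
    port_speed = 200
    share = port_speed / cage_capacity("QSFP112")["nominal_port_gbps"]
    print(f"200Gbps uses {share:.0%} of a QSFP112 cage's nominal capacity")
```

In other words, the 200Gbps limit on this card comes from the product configuration, not from the QSFP112 cage's electrical capability.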

Our cards are the pull-tab designs, which is our favorite OCP NIC 3.0 format. Cloud providers tend to favor this format since it allows for easy replacement from the aisle without having to open the chassis. Many large legacy server vendors that charge premiums for service contracts will have different OCP NIC 3.0 locking mechanisms and faceplates for their cards.

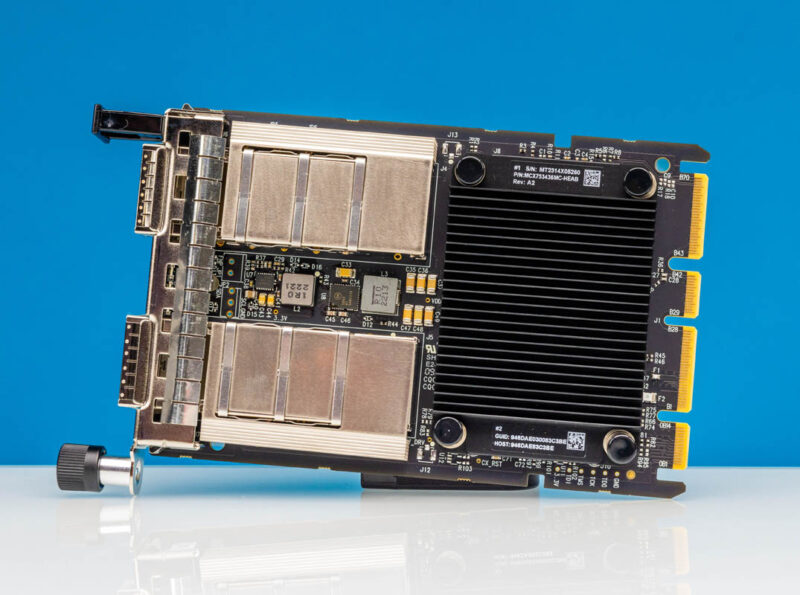

The NIC itself is essentially the NVIDIA ConnectX-7 chip underneath the black heatsink and the QSFP112 cages.

Something to look at here is that the QSFP112 cages have small heatsink wings on the sides. A major challenge with OCP NIC 3.0 designs is that as networking gets faster, the modules generate more heat that must be removed. These small heatsinks, plus generally higher-quality NVIDIA optics, tend to help the optics last longer. Readers have told us that putting lower-cost high-speed optics into a card like this is a recipe for disaster. Stick with higher-quality optics or DACs for cards like these, since the air in a server is usually pre-heated before it reaches the optics.

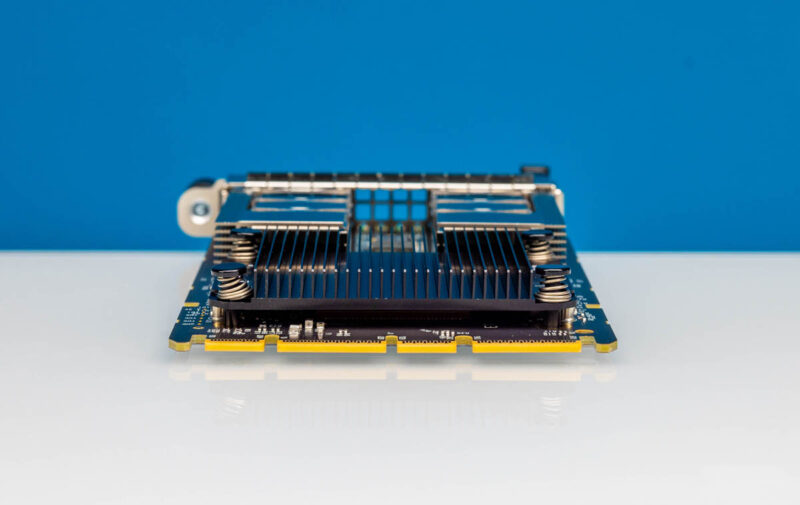

We can also see the PCIe Gen5 x16 edge connector, which allows for full bandwidth on this card. Note that with each generation, dating back to the Mellanox days, there have often been cards whose ports cannot run simultaneously at full speed. The ordering codes/part numbers matter, but so do server designs. Some servers only provision eight PCIe lanes (x8) to OCP NIC 3.0 slots, so beware of that.
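A rough way to see why an x8 OCP slot matters is to compare the slot's bandwidth with the card's aggregate port speed. This is a minimal sketch assuming PCIe Gen3 and later use 128b/130b line encoding and ignoring TLP/DLLP protocol overhead, so real throughput will be somewhat lower than these figures; `pcie_gbps` and `can_feed_ports` are hypothetical helper names.

```python
# Per-lane transfer rates in GT/s; Gen3 and later use 128b/130b line encoding.
PCIE_GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbps(gen: int, lanes: int) -> float:
    """Approximate per-direction PCIe bandwidth in Gb/s after line encoding.

    Ignores TLP/DLLP protocol overhead, so achievable throughput is lower.
    """
    return PCIE_GT_PER_S[gen] * lanes * ENCODING_EFFICIENCY


def can_feed_ports(gen: int, lanes: int, ports: int, port_gbps: float) -> bool:
    """True if the slot's encoded bandwidth covers the NIC's aggregate port speed."""
    return pcie_gbps(gen, lanes) >= ports * port_gbps


if __name__ == "__main__":
    for lanes in (16, 8):
        bw = pcie_gbps(5, lanes)
        ok = can_feed_ports(5, lanes, ports=2, port_gbps=200)
        print(f"Gen5 x{lanes}: ~{bw:.0f} Gb/s per direction, 2x200GbE at full speed: {ok}")
```

Under these assumptions, a Gen5 x16 slot (about 504Gb/s) can feed both 200Gbps ports, while a Gen5 x8 slot (about 252Gb/s) can only keep one port at full speed.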

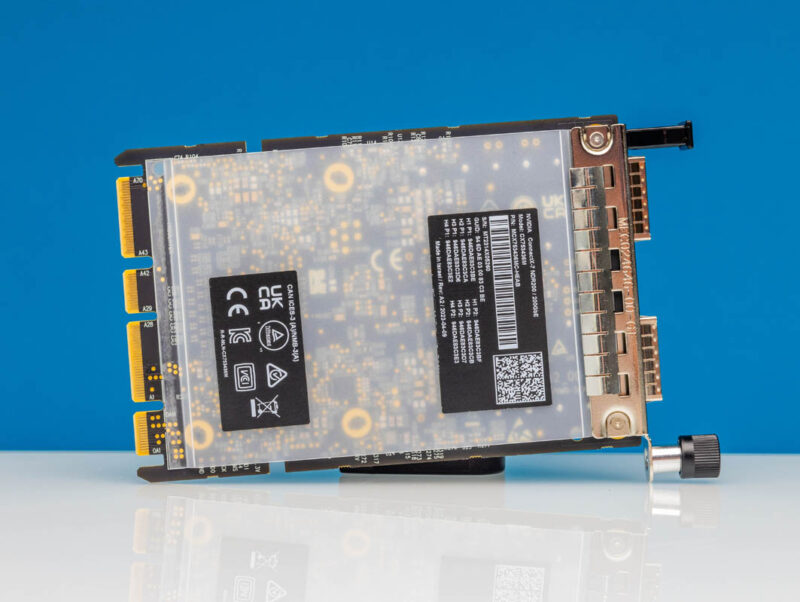

The cards are actually the CX753436M variant. As a result, we also get eight MAC addresses on the bottom label, along with a cool semi-transparent bottom cover. Kudos to NVIDIA for the small but fun upgrade from an opaque black cover so that we hardware nerds can see what is underneath.

Overall, this is an incredibly fast NIC in one of our favorite form factors.

Next, let us see how it works.

NVIDIA ConnectX-7 OCP NIC 3.0 Interfaces

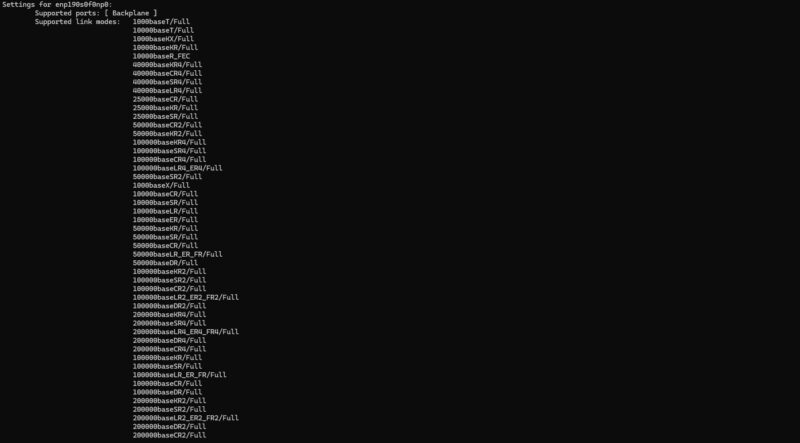

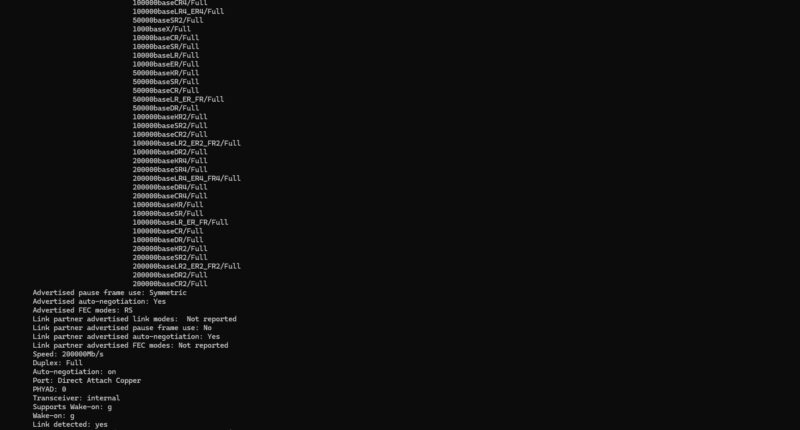

The particular model we have is a VPI model. This means that it can support either 200GbE or 200Gbps NDR200 InfiniBand in full duplex mode. What was fun to see is that this NIC supports speeds all the way back to 1GbE, leading to a long list of supported/advertised link modes.

Here is the ethtool output with the card connected via an NVIDIA 200Gbps DAC.

The last time we looked at the NVIDIA ConnectX-7, it was a 400GbE part.

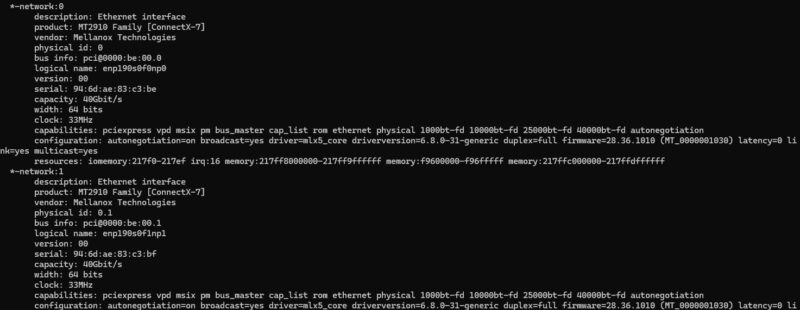
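For anyone checking many ports, the speed, duplex, and supported link modes can be pulled out of ethtool's text output. This is a minimal sketch: the `SAMPLE` text is a hypothetical, abbreviated excerpt laid out like ethtool's output (not the output captured for this review), and `parse_ethtool` is an illustrative helper that assumes continuation lines of the "Supported link modes" block contain no colon.

```python
import re

# Hypothetical, abbreviated sample in ethtool's layout; not the output from this review.
SAMPLE = """Settings for ens1f0np0:
\tSupported link modes:   1000baseKX/Full
\t                        10000baseKR/Full
\t                        200000baseCR4/Full
\tSupported pause frame use: Symmetric
\tSpeed: 200000Mb/s
\tDuplex: Full
"""


def parse_ethtool(text: str) -> dict:
    """Extract supported link modes, negotiated speed (Mb/s), and duplex from ethtool text."""
    modes = []
    speed_mbps = None
    duplex = None
    in_supported = False
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith("Supported link modes:"):
            in_supported = True
            modes.extend(stripped.split(":", 1)[1].split())
            continue
        if in_supported:
            if ":" in stripped:
                in_supported = False  # a new "Key: value" field ends the continuation block
            else:
                modes.extend(stripped.split())
                continue
        speed = re.match(r"Speed:\s*(\d+)Mb/s", stripped)
        if speed:
            speed_mbps = int(speed.group(1))
        elif stripped.startswith("Duplex:"):
            duplex = stripped.split(":", 1)[1].strip()
    return {"supported_link_modes": modes, "speed_mbps": speed_mbps, "duplex": duplex}


if __name__ == "__main__":
    info = parse_ethtool(SAMPLE)
    print(info["speed_mbps"] // 1000, "Gb/s", info["duplex"])
    print(len(info["supported_link_modes"]), "supported modes listed")
```

On the real card, the supported list is much longer since it reaches all the way back to 1GbE; the parser simply collects whatever tokens ethtool prints in that block.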

Just to round things out, here is some lshw output:

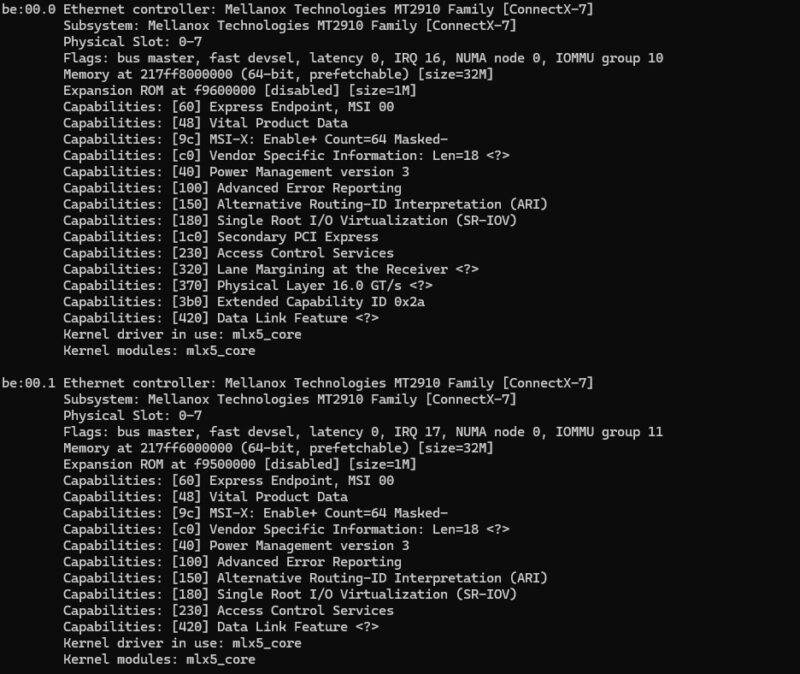

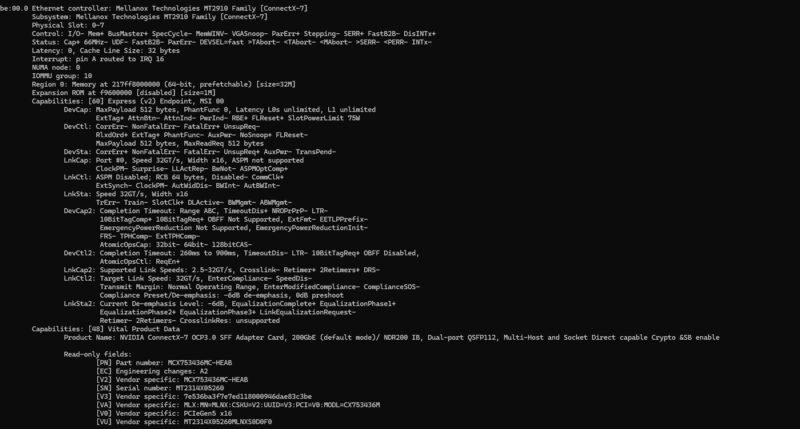

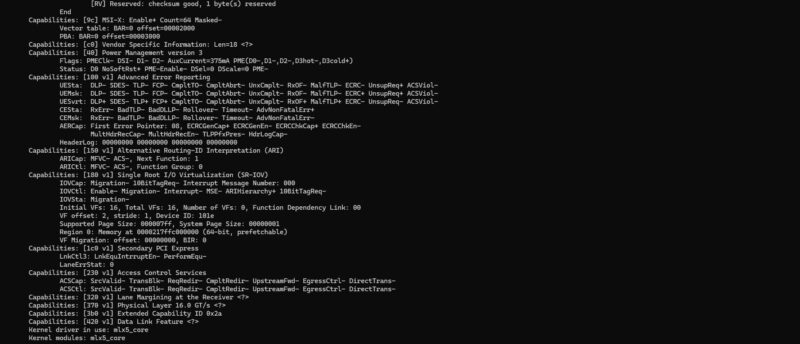

Here is the lspci -v output with the Mellanox Technologies MT2910 Family [ConnectX-7] device listed.

The lspci -vvv output was huge, but here is the first part:

And the second:

We can see that we are connected in a PCIe Gen5 x16 OCP NIC 3.0 slot here.
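The Gen5 x16 link shows up in the `LnkCap` (capability) and `LnkSta` (negotiated status) lines of `lspci -vvv`. A minimal sketch for checking that the link did not train down follows; the `SAMPLE` excerpt is hypothetical and only mimics lspci's layout, and `link_status` is an illustrative helper, not part of pciutils.

```python
import re

# Map the per-lane transfer rate reported by lspci to a PCIe generation.
GEN_BY_GT = {2.5: 1, 5.0: 2, 8.0: 3, 16.0: 4, 32.0: 5, 64.0: 6}

# Hypothetical excerpt in lspci -vvv's layout; not the output captured in this review.
SAMPLE = """\
\t\tLnkCap:\tPort #0, Speed 32GT/s, Width x16, ASPM not supported
\t\tLnkSta:\tSpeed 32GT/s (ok), Width x16 (ok)
"""

LNK_RE = re.compile(r"Speed ([\d.]+)GT/s.*?Width x(\d+)")


def link_status(text: str, field: str = "LnkSta") -> tuple[int, int]:
    """Return (PCIe generation, lane count) from the given lspci field line."""
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.startswith(field + ":"):  # "LnkSta:" does not match "LnkSta2:"
            match = LNK_RE.search(stripped)
            if match:
                return GEN_BY_GT[float(match.group(1))], int(match.group(2))
    raise ValueError(f"no {field} line with speed and width found")


if __name__ == "__main__":
    cap = link_status(SAMPLE, "LnkCap")
    sta = link_status(SAMPLE, "LnkSta")
    print(f"capable of Gen{cap[0]} x{cap[1]}, running at Gen{sta[0]} x{sta[1]}")
    if sta != cap:
        print("link trained below its capability; check the slot wiring or riser")
```

Comparing the two fields is what catches the x8-provisioned OCP slots mentioned earlier: the card would report x16 capability but an x8 negotiated width.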

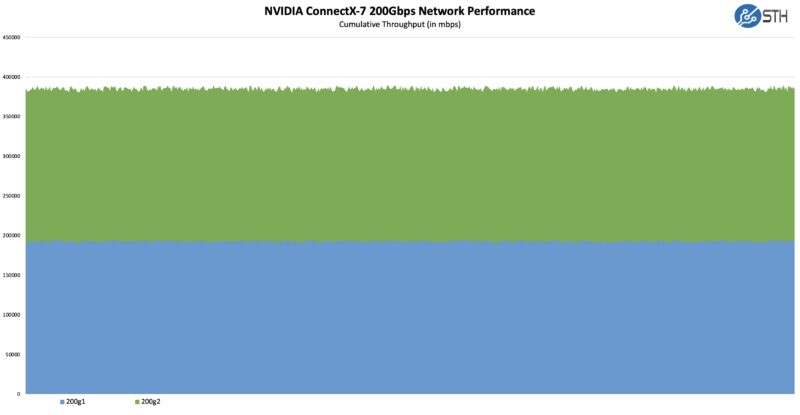

NVIDIA ConnectX-7 OCP NIC 3.0 Performance

200Gbps of traffic is non-trivial to drive even with FPGA-based traffic generation. Still, we got fairly close to the line rate on the cards, which was an accomplishment.
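To put "non-trivial to drive" in perspective, here is the standard Ethernet line-rate arithmetic, assuming 20 bytes of on-wire overhead per frame (preamble, start-of-frame delimiter, and inter-frame gap). The review does not state which frame sizes were used, so the 64-byte and 1518-byte cases below are only illustrative bounds; `line_rate_pps` is a hypothetical helper name.

```python
# Ethernet adds 20 bytes on the wire per frame: 7B preamble + 1B SFD + 12B inter-frame gap.
WIRE_OVERHEAD_BYTES = 20


def line_rate_pps(link_gbps: float, frame_bytes: int) -> float:
    """Maximum frames per second at line rate for a frame size that includes the FCS."""
    bits_per_frame = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return link_gbps * 1e9 / bits_per_frame


if __name__ == "__main__":
    for frame in (64, 1518):
        pps = line_rate_pps(200, frame)
        print(f"{frame}B frames at 200Gbps: {pps / 1e6:.2f} Mpps, {1e9 / pps:.2f} ns per frame")
```

At minimum-size frames, a single 200Gbps port needs roughly 300 million packets per second, or a new frame about every 3.4ns, which is why even dedicated traffic generators struggle to hit line rate.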

As a quick note, we did not go through NVIDIA’s full tuning guides for these, so we are leaving performance on the table. Also, it is worth thinking about a 200Gbps link as being roughly equivalent to a PCIe Gen4 x16 link (roughly being operative here), which is stunning to think about. That means you have almost as much network bandwidth on each port as four PCIe Gen4 x4 NVMe SSDs or eight PCIe Gen3 x4 NVMe SSDs. There are two ports, and they fit into a single OCP NIC 3.0 PCIe Gen5 x16 slot.
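The PCIe comparison above can be checked with a little arithmetic. This sketch assumes 128b/130b encoding for Gen3 and Gen4, ignores PCIe protocol overhead, and treats each NVMe SSD as link-limited (real drives often deliver less than their link allows); `pcie_gbps` and `COMPARISONS` are illustrative names.

```python
PCIE_GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding on Gen3 and later


def pcie_gbps(gen: int, lanes: int) -> float:
    """Approximate per-direction PCIe link bandwidth in Gb/s, ignoring protocol overhead."""
    return PCIE_GT_PER_S[gen] * lanes * ENCODING_EFFICIENCY


# Aggregate link bandwidth of each configuration mentioned in the text.
COMPARISONS = {
    "one PCIe Gen4 x16 link": pcie_gbps(4, 16),
    "four PCIe Gen4 x4 NVMe SSDs": 4 * pcie_gbps(4, 4),
    "eight PCIe Gen3 x4 NVMe SSDs": 8 * pcie_gbps(3, 4),
}

if __name__ == "__main__":
    port_gbps = 200
    for label, gbps in COMPARISONS.items():
        print(f"{label}: ~{gbps:.0f} Gb/s; one 200Gbps port is {port_gbps / gbps:.0%} of that")
```

All three configurations work out to the same roughly 252Gb/s, and a single 200Gbps port is about 80% of that, which is where the "roughly equivalent" framing comes from.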

Final Words

NVIDIA ConnectX-7 adapters are about more than just port speed. Beyond delivering either Ethernet or InfiniBand networking, they have a large number of features for RDMA (including GPUDirect RDMA), storage acceleration, overlay networking like VXLAN, GENEVE, and NVGRE, and even things like network booting that will become more important with systems like the upcoming NVIDIA Oberon platform. There are a ton of features here, so it is worth checking them out. As these devices approach PCIe speeds, offloads become more important, since they are what keep valuable CPU and GPU resources from being wasted.

For those waiting for our NVIDIA BlueField-3 review, get ready. That one has been in progress as well and will be coming soon. In the meantime, hopefully this look at a different option in the ConnectX-7 family is useful.

We’ve seen a high failure rate on FS 100G modules when they’re on the hot aisle side, but they’ve been OK on the cold aisle side. From the thousands of modules we’ve got deployed, I’d say that observation is on point.

How do they leverage the 8 MACs?

“the dual QSFP112 ports for up to 200Gbps networking”

Sorry, this is confusing me. QSFP112 is for 400Gbps networking. QSFP56 is 200Gbps. Both use 4 lanes with PAM4 signalling, but QSFP112 has a higher per-lane rate (106.25 Gbps compared to 53.125 Gbps in QSFP56). Dual QSFP112 should be 800Gbps total bandwidth. I recently purchased a system with a ConnectX-6 dual QSFP56 card which the vendor insisted was 100Gbps per port, so this is clearly a thing, but I don’t understand it, since 100Gbps is of course QSFP28.

QSFP112 allows for up to 400Gbps. These dual-port QSFP112 CX7 OCP 3.0 NICs have firmware that limits them to 200Gbps per port.

NVIDIA does make a NIC (actually a BF3 DPU) that enables 400G per port on 2 ports. These cards are still limited by the fact that they are PCIe 5.0. However, they can use the auxiliary daughter card. Future firmware may allow those cards to use 2 PCIe 5.0 slots, where they may be able to run both ports at 400G for a total of 800G.