Today at GTC 2020 (#2), NVIDIA is announcing, or in some cases re-announcing, its DPU portfolio. First, we have the NVIDIA BlueField-2 DPU, which Mellanox first announced and showed at VMworld 2019. The company also showed off BlueField-2 at VMworld 2020. Perhaps the more intriguing option is the BlueField-2X DPU. The NVIDIA BlueField-2X combines Mellanox networking, Arm cores, and an NVIDIA GPU, which is especially exciting.

What is a DPU?

If you are looking for a quick primer on “What is a DPU?” we have What is a DPU? A Data Processing Unit Quick Primer. There is also an accompanying video.

In those pieces, we cover BlueField-2 and some of the other promising DPU solutions in the market.

NVIDIA BlueField-2 and BlueField-2X DPUs

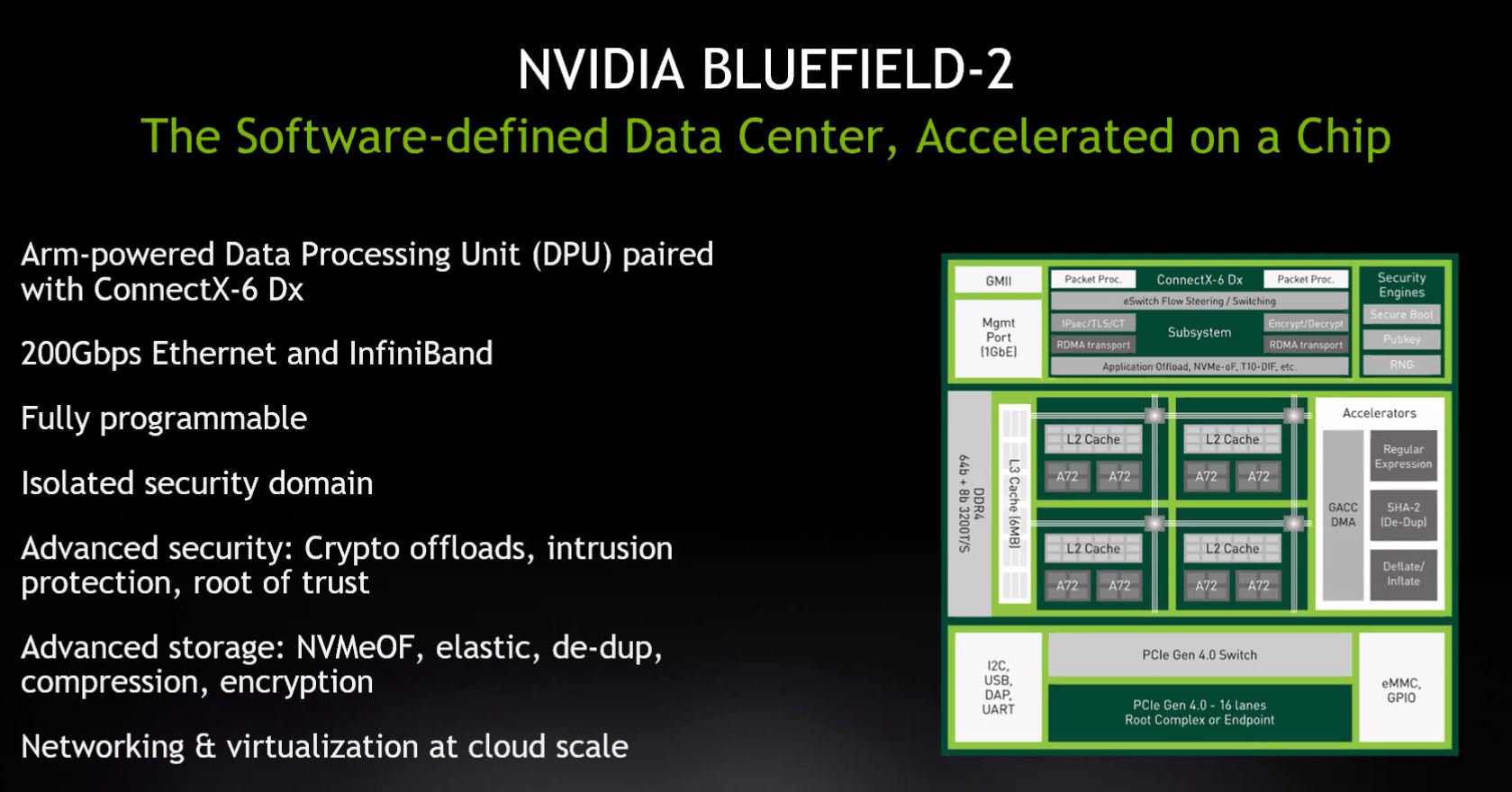

This one feels a bit like an announcement that already happened. First, we had the Mellanox BlueField-2 IPU SmartNIC launch in 2019. At the Ampere launch, we saw a ConnectX-6 NIC plus NVIDIA A100 GPU solution in the NVIDIA EGX A100. Let us go over what NVIDIA is announcing. Here is the block diagram and features from VMware Project Monterey.

This aligns closely with what Mellanox showed off as BlueField-2 in 2019.

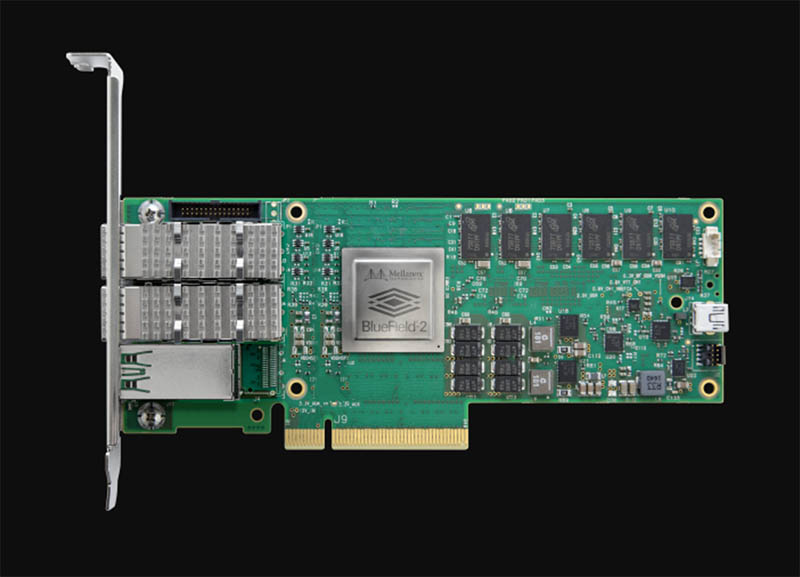

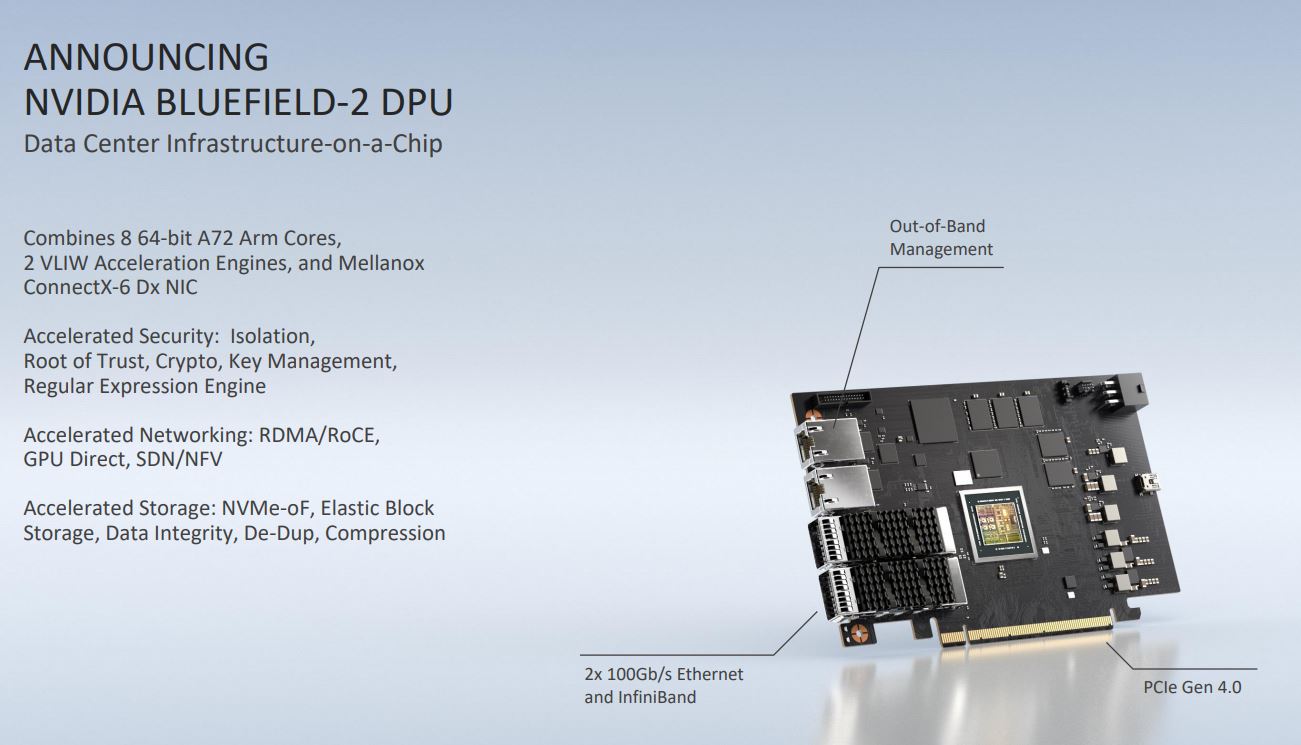

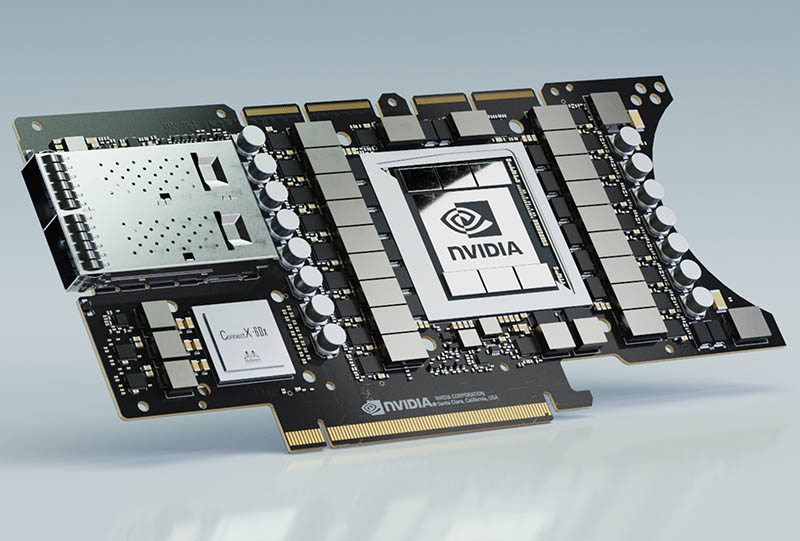

At GTC 2020, the company is giving a bit more detail on what the cards look like. We can see the new version is still powered by Arm Cortex-A72 cores and ConnectX-6 IP. The new card is full height to support two QSFP28 100Gbps connectors for InfiniBand or Ethernet.

NVIDIA says that all of this offload “replaces 125 x86 cores” in its specs.

Since we have covered the BlueField-2 a lot, we are going to stop there. Again, you can see our recent What is a DPU? A Data Processing Unit Quick Primer piece where we go into how these specs compare with other current DPUs from other companies.

NVIDIA BlueField-2X

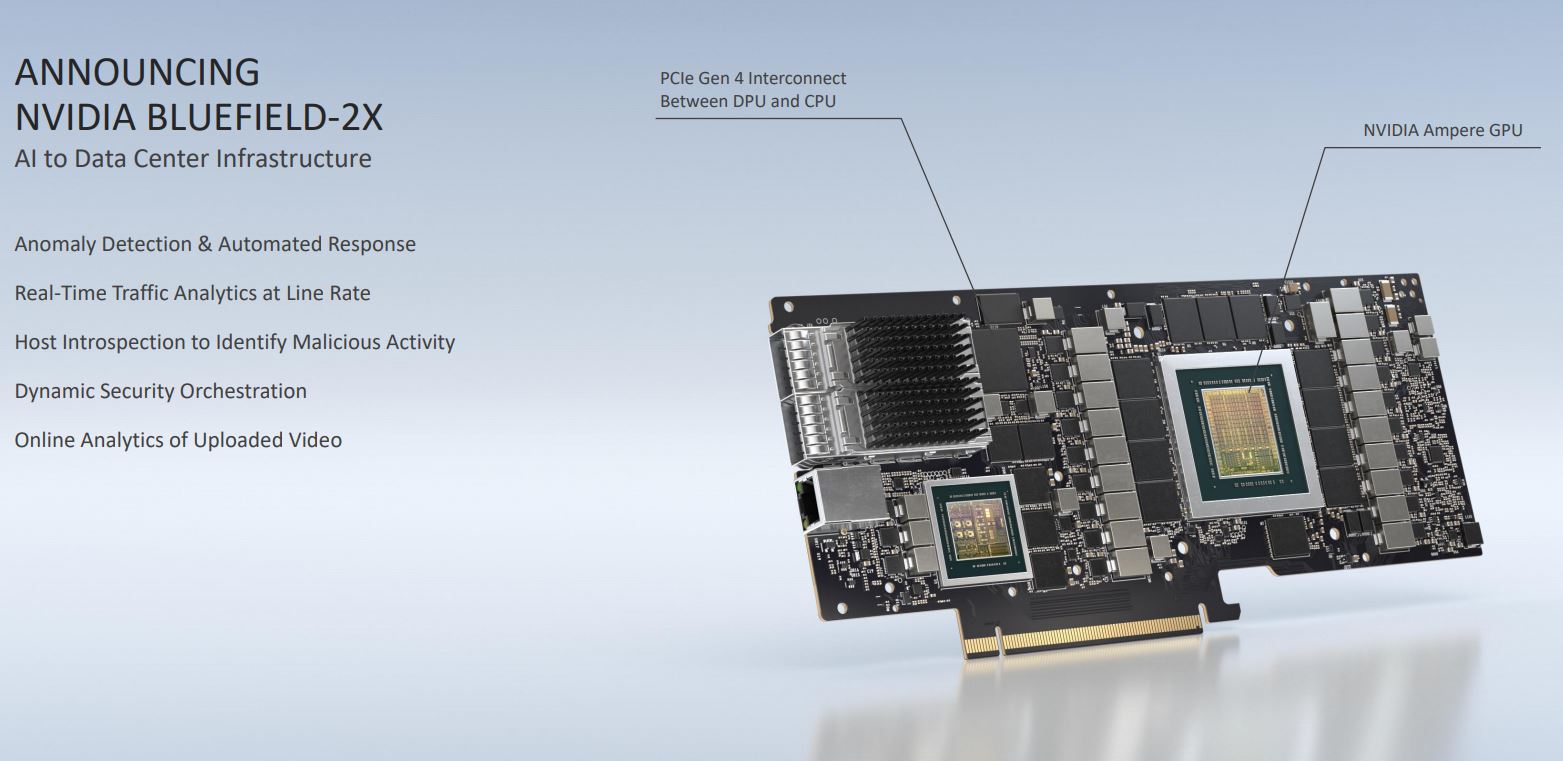

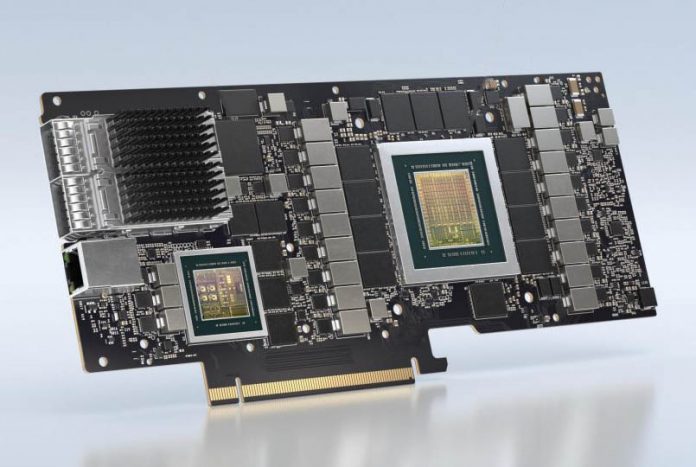

While BlueField-2 is cool, the NVIDIA BlueField-2X is a much more important product. The company is effectively taking the BlueField-2 card and adding an Ampere generation GPU.

This is very similar to what we saw NVIDIA launch at the first GTC 2020 with the NVIDIA EGX A100. Here is that card:

The big difference with the new NVIDIA BlueField-2X is that the card uses ConnectX-6 Dx IP in the context of the BlueField DPU. Effectively, NVIDIA is able to run a Linux OS, or in the near future VMware ESXi, on the BlueField-2X. The GPU is connected to the BlueField-2X’s PCIe Gen4 x16 lanes. From there, the DPU is connected to a fabric. This is the power of what NVIDIA is building. They can put an entire system on an SoC (BlueField-2) with the CPU, NICs, accelerators, and security. They can then use that to attach GPUs to the network without the x86 hosts that are required today.
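To put the PCIe Gen4 x16 link in context, here is a rough back-of-the-envelope sketch in Python comparing its raw per-direction bandwidth with the card's network ports. It assumes the BlueField-2X keeps the two 100Gbps ports described for BlueField-2 and that both run at line rate at once, neither of which NVIDIA has confirmed. The function names are made up for illustration, and the figures ignore PCIe packet overhead, so real throughput will be lower.

```python
# Rough link-budget arithmetic; function names are illustrative only.
# PCIe Gen4 signals at 16 GT/s per lane and uses 128b/130b line encoding.
PCIE_GEN4_GT_PER_S = 16.0
ENCODING_EFFICIENCY = 128 / 130


def pcie_gen4_gbps(lanes: int) -> float:
    """Raw per-direction PCIe Gen4 bandwidth in Gbps, before packet overhead."""
    return PCIE_GEN4_GT_PER_S * ENCODING_EFFICIENCY * lanes


def network_gbps(ports: int, gbps_per_port: float) -> float:
    """Aggregate network line rate in Gbps (assumes all ports are saturated)."""
    return ports * gbps_per_port


pcie = pcie_gen4_gbps(16)      # the x16 link between the DPU and the GPU
net = network_gbps(2, 100.0)   # ASSUMPTION: two QSFP28 ports at 100Gbps each
headroom = pcie - net

print(f"PCIe Gen4 x16: {pcie:.1f} Gbps per direction")
print(f"Network ports: {net:.1f} Gbps")
print(f"Headroom:      {headroom:.1f} Gbps")
```

Under these assumptions, the x16 link has some headroom over the combined network line rate, which suggests the GPU attachment is not the obvious bottleneck.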

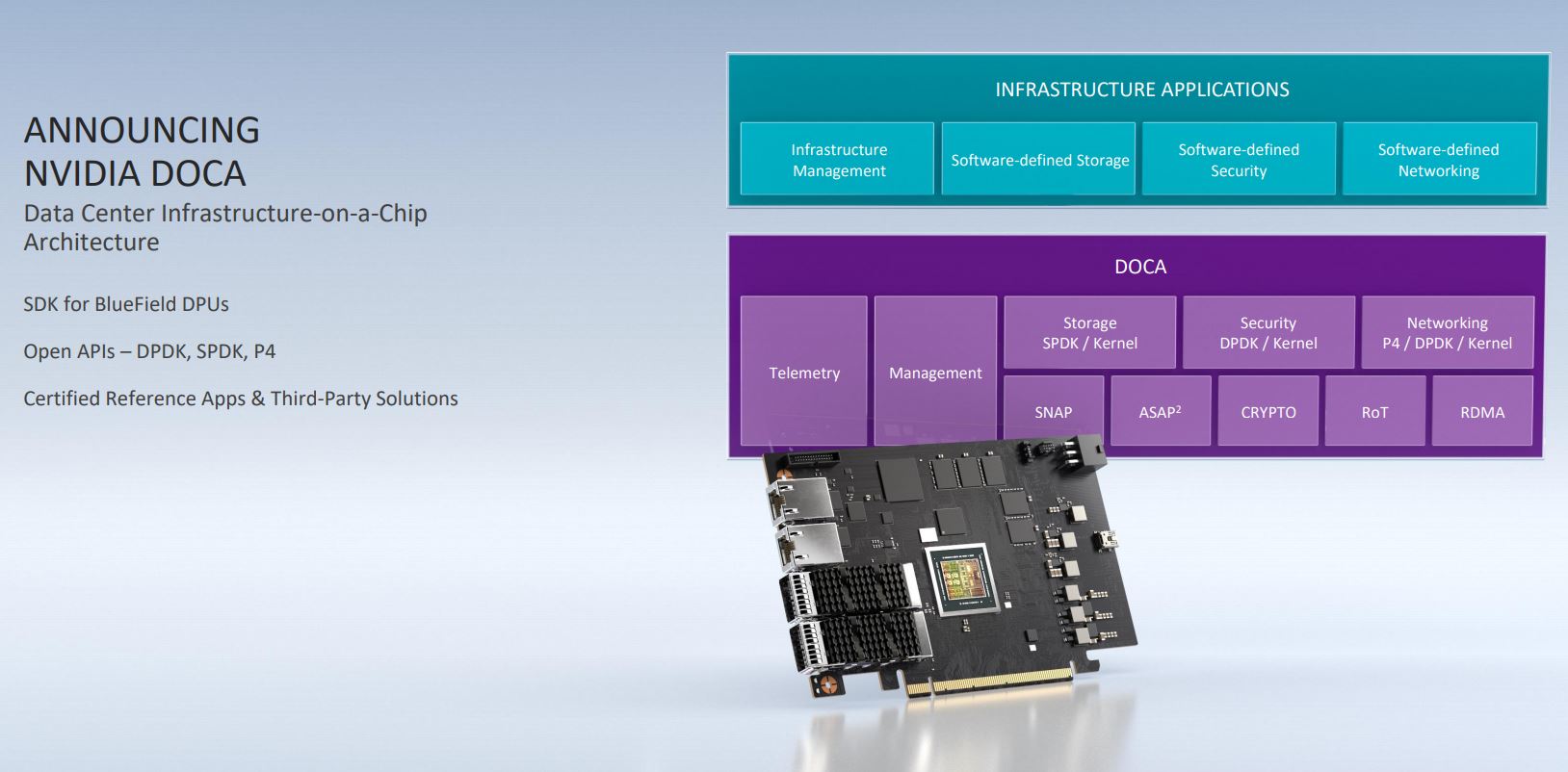

NVIDIA DOCA

To bring about this change, we have NVIDIA DOCA. NVIDIA DOCA is what the company plans to be the CUDA of the DPU era. It is a full SDK for BlueField-2 DPUs so that applications can be built atop DOCA and leverage NVIDIA hardware much in the same way that applications can leverage CUDA today.

This is still the early days of DOCA, but we hope to see more on this in the near future.

Final Words

The power of this solution goes beyond today’s announcement. As you will notice, the BlueField-2 and BlueField-2X accelerators have RDMA and RoCE acceleration. While NVIDIA did not announce it today, we know that NVIDIA is using scale-out software-defined storage solutions in its Selene Supercomputer. BlueField-2 is designed to service these types of storage applications as well.

On a broader note, this solution brings together traditional NVIDIA GPUs with the networking prowess of the recent Mellanox acquisition. Embedded here are Arm compute cores that run their own OS. Make no mistake, the NVIDIA BlueField-2X is a shot across the x86 bow. NVIDIA did not confirm the GPU details, but it is not hard to imagine that one day it will include a flagship-level GPU such as the NVIDIA A100. It is clearly something we see as the future vision of NVIDIA.

Perhaps I am missing the point. What I hear is a small fast computer that uses PCIe for external non-network comms rather than internal comms. It’s an attempt at an end run around Intel and AMD.

IDK, I think it will tend to grow to include an on card expansion bus… it’ll become, you know, a PC, or it will drop the PCIe x16 edge connector and then it will be… a PC.

“it” being the DPU concept.

Really, if nVidia just called it TX 2000, added some m.2 slots, dropped the x16 edge connector, and stuck it in a card frame that handles power and OOB mgmt, I’d look at it seriously.

Sorry spamming, only on my first cup of coffee this morning.

“DPU” = I/O Communications Processor!! Stop calling it a “DPU”, people! It should be called according to its function, hence an I/O Communications Processor! ANY old processor chip is a “data processor”! Define what “data” you are processing with it and call the thing that kind of data processor, NOT merely “data processor unit”!

The more you offload from the CPU to the NIC, the more new names you need :-)

NIC -> SmartNIC -> DPU

I guess someone will come with HCDPU soon (Hyper Converged Data Processing Unit) just because the mouthful sounds imposing.

At some point, the CPU will become an OPU (Orchestration Processing Unit) because all the other compute activities will have been offloaded to other XPUs where X is a letter of the alphabet.

The problem with all this marketing blah-blah-blah is that it does not tell engineers what the processor unit or device actually does. I understand RDMA, RoCE, offloaded crypto. I do not understand DPU beyond the point of just being a nice name.

Then the current cycle of offloading tasks from the CPU will come to an end because of technological advances and the need and possibility to again reduce latency (e.g. the North Bridge being absorbed into the CPU) and the meaning of OPU will change to Omniscient Processing Unit.

And so on.

Other issue: there are not many English words ending in “pu”. According to Bing:

coypu: aquatic South American rodent resembling a small beaver; bred for its fur.

quipu or quippu: an ancient Inca device for recording information, consisting of variously colored threads knotted in different ways.

So for the marketing people who want to avoid just inventing a new abbreviation, it seems that quipu or quippu are their best bets given the meaning.

@domih – Kioxia might like quippu!

@hoohoo

Yeah, can’t wait to hear about the Quantum Universal Instrumentalized Primary Processing Unit or the Cryptographic Optical Yobibyte Processing Unit.

@domih

Why is SO MUCH effort being put into offloading tasks and work from the CPU in the first place? Is the CPU not somehow capable of doing this stuff? I mean, we’ve got freaking 64-plus core processors now. Surely SOMEHOW at least SOME of that capacity can do that work, right? You hit the nail on the head when you point out the unclear purpose for the existence of “DPU” in the common technical vernacular other than “convenience”, though I’m going to argue that it’s being done because the ones doing this are LAZY and UNCREATIVE. Or, they are not as clever as they want us, the average laypeople, to think they are and they are completely ignorant of the existence of perfectly suitable terminology such as “ICP – Input/Output Communications Processor”. After all, that’s what that class of processor was called back in the 1960s and 1970s when computers were huge room-filling hulks of electronics and not the svelte microprocessors we have now. Just because the times have changed DOES NOT mean that the technical terms should be changed.

Also, as a side complaint, WHY, OH WHY do we need everything to be “smart” all of a sudden? I have precious little in my house that’s “smart”. Indeed, I DON’T WANT “omniscient” “smart” devices. I’m perfectly happy doing my own work without any interference from AI or whatever they who make this stuff think I need. All the stupid “new” terms are just making computer technology more inaccessible and hard to understand for everyone. The world does not need an “Omniscient Processing Unit”. As soon as we have that, civilization is done for. The AI bots will hand us our asses on silver platters and order us to surrender or die. And quite frankly, I’m not keen on living in a world where I have no purpose and the machines have rendered me obsolete! I want my job assembling electronics back, or at the very least a job fixing all the AI crap that’s replacing human jobs left, right and center! Many apologies for going tinfoil hat angry resistance freedom fighter mode. Hopefully, you understand my schtick. Thanks.

The IP Blocks and Specs slide really jumps out. If it is doing 100Gbps IPsec, 50Gbps RegEx + NVMe duties using just the “acceleration engines” and not eating into the ARM cores, that’s just impressive. With cards like this really driving VMware’s push w/ ESXi on Arm (publicly as a fling; they’ve had it internally for a while), I’ll be curious to see if other vendors shift their “appliance” VMs to ARM. Think NGFW (Palo Alto/Fortinet), backup proxy (Veeam), etc. And if that does happen, I would imagine that would be a boon for ARM-based servers (which suddenly makes nVidia’s play to buy ARM make so much more sense…)

@Stephen Beets: I was tongue in cheek about the marketing side of it :-)

@GuyisIT: exactly (as STH discussed it the last few weeks/months), and now AMD is allegedly on the verge of buying Xilinx, probably for the same reasons. For the last 25 years, software middleware progressively took center stage and relegated databases, then application servers, to the periphery and then finally morphed into fully distributed architectures. Hardware middleware does the same to servers because heavy servers without hyper-fast interconnect to share very heavy distributed work are like slow dinosaurs stuck in the mud.

To sell more CPUs, GPUs and accelerators, Intel, NVidia and AMD need big interconnect pipes with very low latency and very fast throughput.

Are there any threat models for this? Concern is that much of this stuff is only considered in a data center fiber media lens. How does someone cage it in that environment and prevent it from a blueZ mesh network made up of vulnerable edge devices with high powered radios whose range is software proportional (APIC)?