MLPerf Training v5.1 was released this week and a lot of the results are about what you would expect. NVIDIA dominated the results. The only other real accelerator in the results was AMD, and AMD certainly had a strong showing. Perhaps the most interesting part is that Cisco Silicon One G200 made an appearance in the list. We dug into the configurations and found something very notable.

MLPerf Training v5.1 NVIDIA Dominates, while AMD Has a Strong Showing and Cisco Silicon

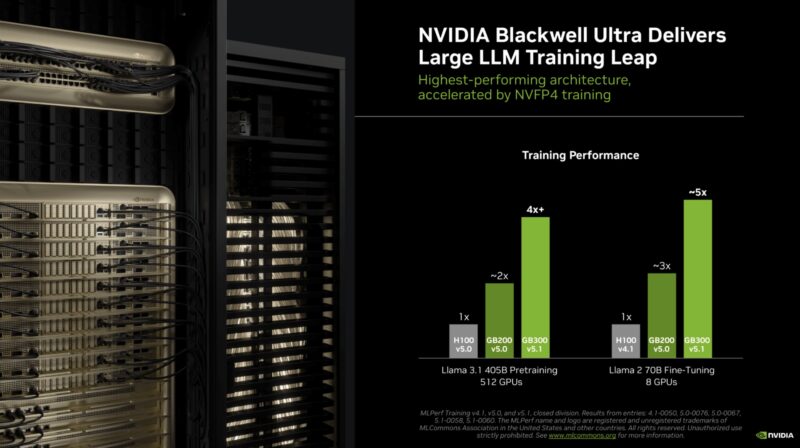

Starting off, NVIDIA showed Blackwell Ultra results, saying it is 4-5x the performance of the H100 generation. If you have not been keeping up, B200 is Blackwell and B300 is Blackwell Ultra.

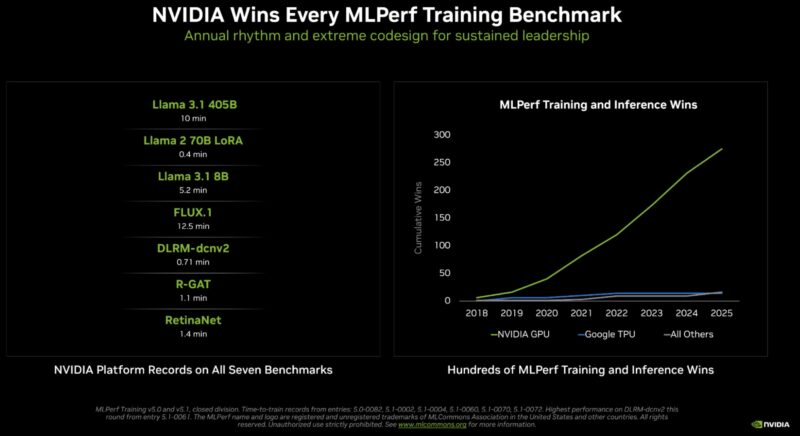

For several years, I have been calling MLPerf NVIDIA’s benchmark. NVIDIA made a chart of how often they have won compared to Google’s TPU and others that have submitted.

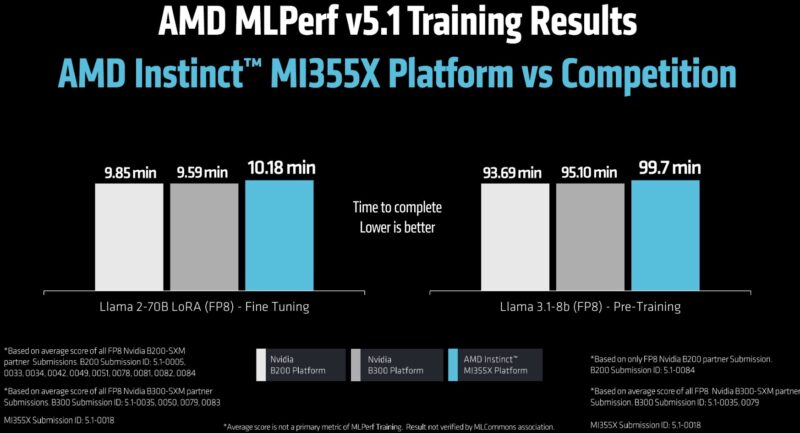

For its part, AMD did not win the benchmarks, but it showed relatively competitive numbers. We did not measure the pixels on the bar charts here, but the message was clear. AMD is saying that its current Instinct MI355X solutions are in the ballpark of Blackwell.

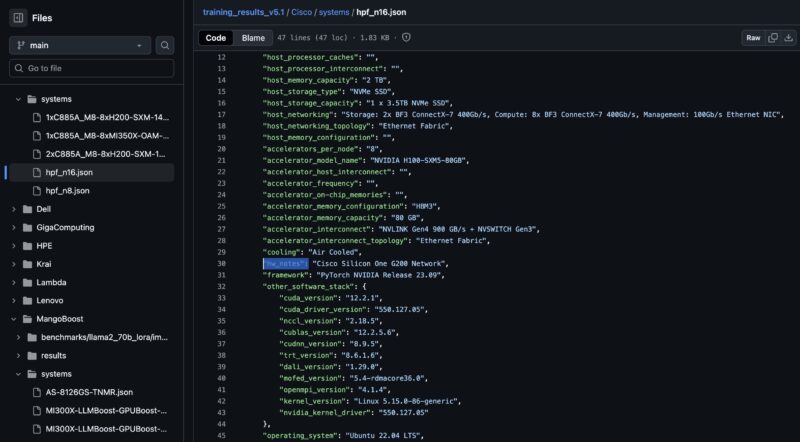

Reading through the configuration notes, we saw that Cisco submitted results using the Cisco Silicon One G200 networking. That is important since Cisco Silicon One joined NVIDIA Spectrum-X.

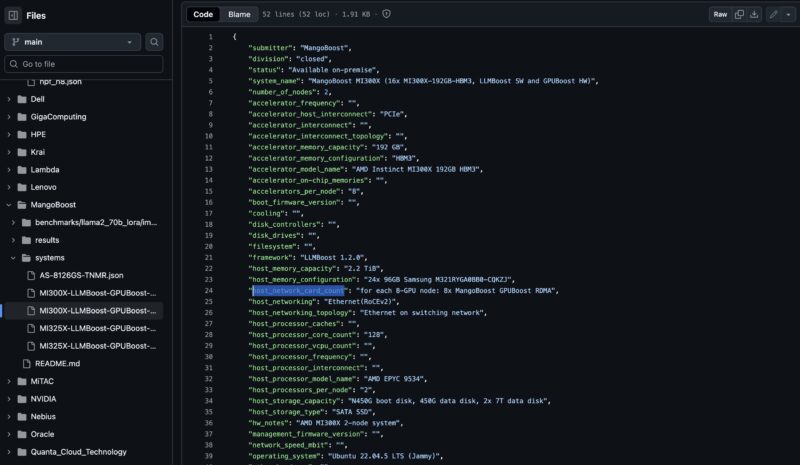

We also saw in the MangoBoost results that they had AMD Instinct GPUs and something interesting in networking. “MangoBoost GPUBoost RDMA” is listed under networking.

It turns out that MangoBoost has a DPU solution. We tried finding a block diagram since someone mentioned they have an ASIC. We could not find one. It seems like the MangoBoost folks are STH readers based on this FPGA DPU presentation we found (the previous slide’s Xilinx SolarFlare headline was ours too). Given that we are about to work on the Xsight Labs E1 DPU, maybe we will have to dig into this one as well.

Final Words

NVIDIA took top marks in the benchmarks, which is what seems to happen in just about every MLPerf training and inference round. AMD showing up is likely good, but we are not sure if this is going to sell a lot of GPUs for AMD. Perhaps the most interesting thing was the networking side, with Cisco using its switch silicon and MangoBoost using its DPUs.

In the Substack, we went into an analysis of the CPUs used for submissions, and some of the AMD EPYC versus Intel Xeon trends, along with the use of NVIDIA Grace. While doing that, we found that the configuration information, although apparently peer-reviewed, was incorrect for at least one cluster.