Microsoft is simultaneously tackling a number of engineering challenges with Microsoft Azure Project Natick. Project Natick is the company’s foray into submerged, environmentally friendly data centers that aim to lower operational costs while also providing lower latency. For those wondering, “Natick” is a codename taken from the town of Natick, Massachusetts. The company has progressed to larger and larger installations and is now showing off a third-generation submerged data center roughly twelve times larger than its previous attempts.

Microsoft Project Natick and Natick 2

The original Natick was an early concept in which the company submerged a rack of servers, housed in a steel shell, in the ocean. The idea was to use servers fairly similar to those deployed on land, modified for marine use. These can be deployed in the ocean within 200km of users, which is roughly equivalent to a 1ms one-way hop over the Internet. Since roughly half of the world’s population lives within that distance of a coast, this effectively gives undersea data centers a round-trip latency of 2ms or lower to half of the world’s population. You can learn more about the first version in Microsoft’s video here:

This first proof of concept was built and deployed off the coast of California within about a year, and it operated for 105 days.
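As a rough check on the 200km ≈ 1ms figure, here is a minimal sketch of fiber propagation delay. It assumes light travels through optical fiber with a refractive index of about 1.47 (roughly two-thirds of its vacuum speed); the constant names and the helper function are illustrative, and the calculation ignores routing, switching, and queuing delays, so real Internet latency will be higher.

```python
# Speed of light in vacuum, km/s
C_VACUUM_KM_S = 299_792.458
# Assumption: typical single-mode fiber refractive index, so light moves at ~2/3 c
FIBER_INDEX = 1.47


def propagation_delay_ms(distance_km: float, index: float = FIBER_INDEX) -> float:
    """One-way propagation delay in milliseconds over a fiber path of distance_km."""
    speed_km_s = C_VACUUM_KM_S / index
    return distance_km / speed_km_s * 1000.0


one_way = propagation_delay_ms(200)  # 200km from users, per the article
round_trip = 2 * one_way
print(f"one-way: {one_way:.2f} ms, round trip: {round_trip:.2f} ms")
```

Under these assumptions, 200km works out to just under 1ms one way and just under 2ms round trip, consistent with the figures above.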

About two years later, Microsoft Research took the learnings from the original Natick and submerged 14 racks of servers instead of just one in a single pod off the coast of Scotland. The region is significant not for its population density but because a lot of renewable energy generation, such as solar, offshore wind, and tidal/wave power, is deployed there. Here is the video for the second-generation undersea data center:

After well over a year of operating this second-generation Natick data center, the company has found a number of interesting results.

In terms of power usage effectiveness (PUE), the ratio of total facility power to the power delivered to IT equipment, Natick’s PUE is 1.07, which is very low for a production data center. A lower PUE means less energy is spent on overhead such as cooling and power conversion, which can lower operational costs and also makes a data center more environmentally friendly.
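To make the PUE figure concrete, the sketch below computes PUE from facility and IT power and the overhead implied for a given IT load. The 1MW IT load and the 1.5 comparison value are hypothetical numbers chosen for illustration, not figures from Microsoft.

```python
def pue(total_facility_kw: float, it_load_kw: float) -> float:
    """Power usage effectiveness: total facility power divided by IT power."""
    if it_load_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_load_kw


def overhead_kw(it_load_kw: float, pue_value: float) -> float:
    """Power spent on non-IT loads (cooling, power conversion, etc.)."""
    return it_load_kw * (pue_value - 1.0)


it_load = 1000.0  # hypothetical 1MW of IT load
for p in (1.07, 1.5):  # Natick's reported PUE vs. a hypothetical comparison value
    print(f"PUE {p}: {overhead_kw(it_load, p):.0f} kW of overhead")
```

At a PUE of 1.07, every 1,000kW of IT load carries about 70kW of overhead; at the hypothetical 1.5 it would be 500kW.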

Natick 2’s water usage is “zero,” compared with the 4.8L of water per kWh that Microsoft says a traditional land-based data center uses. Of course, the ocean is made of water and is being used here, just in a different way. Water usage has become a major topic in conservation discussions as data centers grow in power and therefore in water consumption. Some drought-prone regions, even near the shoreline, cannot necessarily meet the water needs of next-generation data centers, so this is a big deal.
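To put 4.8L per kWh in perspective, here is a back-of-the-envelope sketch of annual water use. It assumes a hypothetical facility drawing a constant 1MW around the clock, and that the 4.8L figure applies to every kWh consumed; the function name is illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours, ignoring leap years


def annual_water_liters(avg_load_kw: float, liters_per_kwh: float) -> float:
    """Water consumed in one year by a facility running at a constant average load."""
    energy_kwh = avg_load_kw * HOURS_PER_YEAR
    return energy_kwh * liters_per_kwh


land = annual_water_liters(1000, 4.8)    # hypothetical 1MW land-based facility
natick = annual_water_liters(1000, 0.0)  # Natick 2's reported "zero"
print(f"land-based: {land / 1e6:.1f} million L/year, Natick: {natick:.0f} L/year")
```

Under those assumptions, a single megawatt at 4.8L per kWh consumes roughly 42 million liters of water per year.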

The cylinders are sealed and filled with dry nitrogen, which means the servers and IT equipment do not have to deal with humidity and dust. Also, given how well water conducts heat and how large the oceans are, once an installation goes deep enough (say 200m), it can maintain constant, cool ambient temperatures without the fluctuations we see at land-based data centers. If you saw our Inside the Intel Data Center at its Santa Clara Headquarters piece from years ago, Intel leverages the temperate climate of the San Francisco Bay Area to get “free” cooling. However, there are still some days when it needs air conditioning to cool its servers.

Microsoft also deployed servers from the same batches in traditional land-based data centers for comparison. Over the first 16 months or so, these environmental factors led to 7x better reliability for the undersea servers. Natick 2 is designed to operate for five years without maintenance.

That is a big deal since the undersea Project Natick data centers cannot be serviced. Submerged data centers are truly lights-out facilities, so these reliability factors are extremely important.

One other interesting fact that Microsoft offers is that the Natick concept is very fast to build and deploy. Going from the go-ahead decision through assembly and deployment to going live with Azure services takes only 90 days. That contrasts with 18-24 months for traditional data centers. Microsoft did not cite this, but while Natick is currently deployed in coastal Scottish waters, it could, in theory, also be deployed in international waters, likely reducing regulatory hurdles even further.

Microsoft Project Natick Gen 3

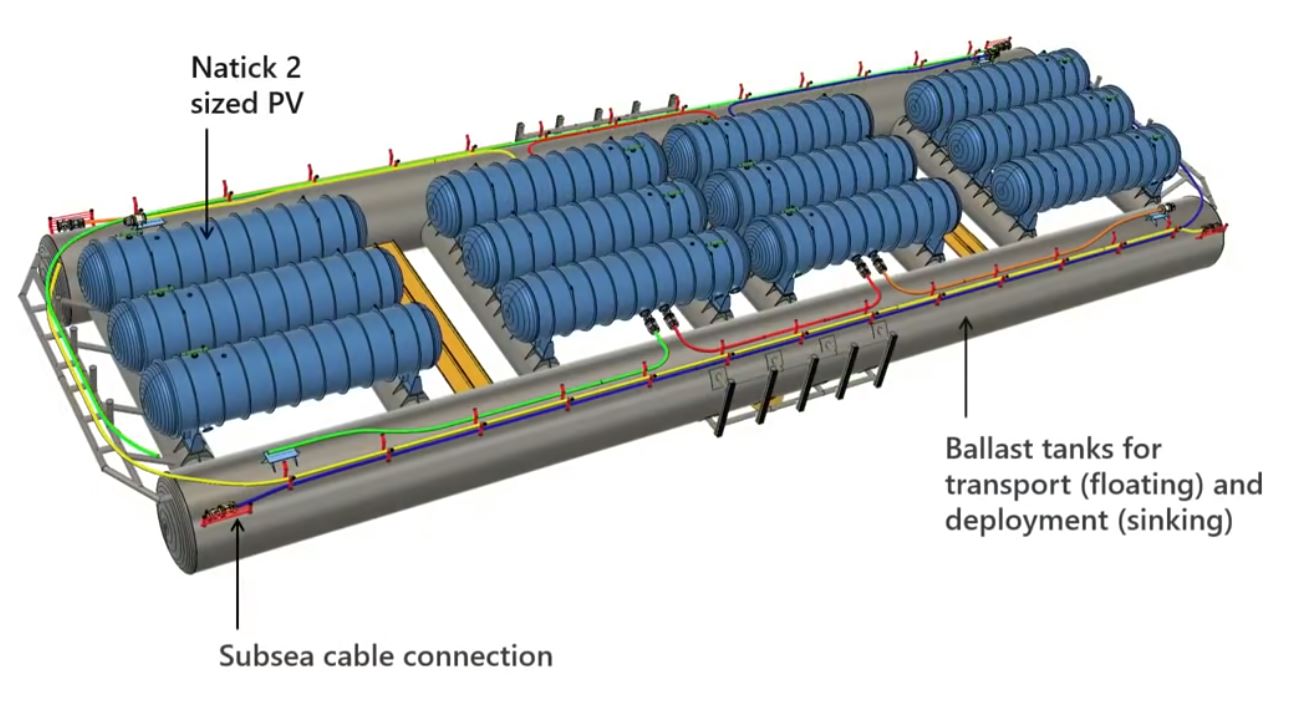

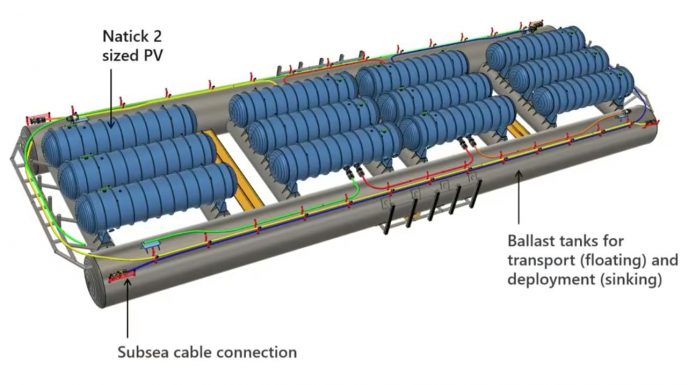

With some operating time on Natick 2, Microsoft is starting to discuss Natick Gen 3. Here, the concept is to join multiple cylinders, along with networking and power, on a large steel lattice structure. The whole structure is under 300 feet long.

Each side of the structure has ballast tanks for transporting and deploying the setup. That can make the solution easier to deploy, potentially requiring smaller support vessels.

The twelve cylinders provide around 5 megawatts of data center capacity, which Microsoft says is enough for a mini Azure region. Microsoft also says these 5MW Natick designs can be grouped together to form larger undersea availability zones.
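The capacity arithmetic is simple but useful for scale. This sketch assumes the 5MW is split evenly across the twelve cylinders (Microsoft has not said so) and uses hypothetical module counts to show how grouping structures into an availability zone scales capacity; the helper names are illustrative.

```python
MODULE_MW = 5.0            # Microsoft's stated capacity per Gen 3 structure
CYLINDERS_PER_MODULE = 12  # cylinders per structure, per the article


def per_cylinder_kw(module_mw: float = MODULE_MW,
                    cylinders: int = CYLINDERS_PER_MODULE) -> float:
    """Average capacity per cylinder, assuming an even split (an assumption)."""
    return module_mw * 1000 / cylinders


def zone_capacity_mw(modules: int, module_mw: float = MODULE_MW) -> float:
    """Total capacity when several 5MW structures are grouped into one zone."""
    return modules * module_mw


print(f"~{per_cylinder_kw():.0f} kW per cylinder")
for n in (1, 2, 4):  # hypothetical module counts
    print(f"{n} module(s): {zone_capacity_mw(n):.0f} MW")
```

That works out to roughly 417kW per cylinder on average.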

Final Words

Spurred by Microsoft’s initial findings, a number of startups are now looking to submerge data centers or to put them on barges. As an example, Nautilus Data Technologies closed a $100M credit facility earlier this year to build a 6MW barge data center in Stockton, California. They claim a 1.07 PUE (rumors are that the target is much better than that). One advantage of these undersea (with ballast tanks) and barge data centers is that they could, in theory, even be redeployed to a different location during periods of high demand.

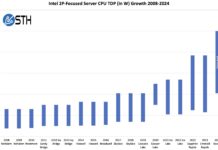

One thing is for sure: the data center industry is looking at many options to deal with the next generations of hotter, more power-hungry components that will make today’s servers look like low-power devices in comparison. As servers move to a new

Since this is a weekend article, which we usually reserve for fun, one must of course ask whether there will be 21st-century pirates plundering the data centers of the high seas. Of course, companies like Microsoft are investing in technologies such as silicon root of trust to ensure hardware supply chains are not compromised. Perhaps this is part of the overall strategy.

It would be interesting to know if the proposed deployment speed advantage holds up beyond prototype scales.

It will certainly remain true that plunking down a bunch of prefabbed modules will be faster than constructing a datacenter and then building out inside; but one could also plunk down modules on land (and people have been playing with the idea long enough that this example is still “Sun” rather than “Oracle”: https://docs.oracle.com/cd/E19115-01/mod.dc.s20/index.html).

Given that, I assume that much of the time savings is related to regulation and land acquisition. While there is a lot of seafloor, there is often still some contention for it, especially if you don’t want an anchor dragged across your cables multiple times a week. In relatively shallow and convenient areas, or areas with fisheries, you can only do so much before environmental impact assessments become a thing. And international waters both require going much further out (territorial waters extend 12 nautical miles at most, while the exclusive economic zone extends to 200 nm) and raise interesting questions about whether you’ll be able to get the coast guard to care if someone decides to do a little…’proactive salvage’, whether for a crack at the data or just because a net full of current-model datacenter silicon is probably worth more than most catches, even if everything is encrypted and secure-booted such that you just have to part it out.

I suspect that it’s still cheaper and easier than acquiring rights to build on land near the coast; but it seems like one of those things that will probably attract a lot more regulatory scrutiny if it becomes common.

Is anything labeled “environment friendly” not the new oil? Unless it touches your stomach, then it’s just crazy-talk! Pandemic? What pandemic?

Global warming, climate change and ocean acidification are not going to annihilate our descendants fast enough…let’s go ahead and help warm the oceans a little faster, yeah! Little here, little there, won’t have any effect whatsoever. You’ll see. Nothing to worry about….

Lovely sentence, so carefully phrased, “sunken data centers that looks to provide lower environmentally friendly operational costs alongside lower latency for future data centers.”

Take away “environmentally friendly” and what have we? Lower operational cost? Future data centers? Move along, nothing to see here…

So the illustration shows ballast tanks for transport and sinking… No one is worried about raising them for replacement, or for permanent removal after the tech is too outdated? I’m confident that’s just an oversight. Sigh.

“let’s go ahead and help warm the oceans a little faster, yeah! Little here, little there, won’t have any effect whatsoever. You’ll see. Nothing to worry about….”

Same line of reasoning (even 1000 of these deployed won’t raise the temperature of the ocean) – a misunderstanding of scale leads smack dab into the Flat Earth set.

I know that isn’t your line of thinking – but I am sure that some will read this and take it to be real. Unless you are a flat earther and think that a few undersea datacenters will raise ocean temps.
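The scale argument can be checked with a rough calculation. This sketch assumes 1000 sites at 5MW each running for a year, and that all of the heat mixes evenly into the entire ocean, which is an idealization; it says nothing about local warming near an individual pod. The ocean mass (about 1.4 × 10^21 kg) and seawater specific heat (about 3,990 J/(kg·K)) are approximate, commonly cited values.

```python
SECONDS_PER_YEAR = 365 * 24 * 3600
OCEAN_MASS_KG = 1.4e21           # approximate total mass of the world's oceans
SEAWATER_CP_J_PER_KG_K = 3990.0  # approximate specific heat of seawater


def global_ocean_warming_k(n_sites: int, mw_per_site: float, years: float = 1.0) -> float:
    """Temperature rise if all heat mixed evenly into the whole ocean (idealized)."""
    heat_j = n_sites * mw_per_site * 1e6 * SECONDS_PER_YEAR * years
    return heat_j / (OCEAN_MASS_KG * SEAWATER_CP_J_PER_KG_K)


dt = global_ocean_warming_k(1000, 5.0)
print(f"{dt:.1e} K per year")
```

Under these assumptions, the result is on the order of a few hundred-millionths of a kelvin per year. As another commenter notes, a MW is a MW whether the heat ends up in air or water, so the same total heat is released either way.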

Are the ballast tanks removed? I would think that displacing the seawater with compressed air would make raising them easier.

For whatever reason, you have a vendetta against this idea…

@NoWorries, the heat gets dumped somewhere, air or water irrelevant. A MW is a MW underwater or above.

So for 20 years we have been struggling to increase datacenter operational security.

(N+1 everything, VESDA fire detection, securing the power feed, securing the fiber connectivity)

Now we will just dump everything in the ocean with power and fiber connectivity that will take 72 hours to fix in case of a problem…

This kind of concept is good on paper but will lead to huge trouble in the long term.

How will they address physical security of the data lines? I am put in mind of cases of gov’ts tapping undersea cables.

That second sentence would read better if “lower” was after “environmentally friendly.”

Uh…what happens when an HDD/SSD/memory module/etc. goes bad? They have to lift the whole thing out of the ocean? This seems incredibly…stupid.

Bikini Bottom goes HIGH TECH! If Microsoft doesn’t partner with Nickelodeon on this, it’s a lost opportunity.

This is a perfect example of outside-the-box innovation!

@Evan Richardson “…what happens when a HDD/SSD/Memory module/etc goes bad”

The ability to control humidity, and temperature greatly extends the life of these modules… especially SSDs during heavy read/write load! https://harddrivegeek.com/ssd-temperature/ https://avtech.com/articles/8644/humidity-in-your-data-center/

The gut reaction of “how will they service these?” is valid for most companies’ operational methods but less so for ultra-scale operators like MS. None of the software that would run in this facility is unique to a node; rather, it will be one of hundreds or thousands of copies of the same code running all over the world. If a single node fails, it matters as much to MS as one drive failing in a 40-drive mirror matters to your mission-critical database server. If you’re familiar with the Pets vs Cattle metaphor, this is as close to cattle as it gets.

Add to that the fact that server hardware is very reliable these days, and that cloud providers like MS, Facebook and the Goog have all implemented redundancy at larger and larger scales (e.g. N+2 power supply redundancy to the rack vs N+1 redundancy to each server), and it’s easy for MS engineers to design a system that will have four or five nines of hardware availability for the expected 5-year mission of this concept. Really, even if a whole canister out of the twelve depicted became unusable, that’s still just a rounding error in MS’s hardware strategy.
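The effect of N+1 vs N+2 redundancy can be illustrated with a k-out-of-n availability calculation. This sketch assumes identical units that fail independently; the requirement of 4 working power supplies and the 99% per-unit availability are hypothetical numbers, not Microsoft figures. It computes the probability that at least N of the installed units are working.

```python
from math import comb


def k_of_n_availability(required: int, installed: int, unit_availability: float) -> float:
    """Probability that at least `required` of `installed` independent units are up."""
    a = unit_availability
    return sum(
        comb(installed, k) * a**k * (1 - a) ** (installed - k)
        for k in range(required, installed + 1)
    )


needed = 4     # hypothetical: 4 supplies are needed to carry the load
unit_a = 0.99  # hypothetical per-supply availability
for spares in (0, 1, 2):
    avail = k_of_n_availability(needed, needed + spares, unit_a)
    print(f"N+{spares}: {avail:.6f}")
```

With these made-up inputs, no spares gives about 0.9606, N+1 about 0.99902, and N+2 about 0.99998. Each extra spare adds roughly another nine, which is why pooling redundancy at rack scale pays off; correlated failures would erode these numbers.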

With a move to solid state, redundancy and planned obsolescence, reliability has to be a non-issue.

Permits and cost of ‘land’ – total win! Even if they make it harder than today.

Heat generation? Same as if you pump water out of the sea/lake, around your datacenter, and dump it back. Impact on wildlife? A submerged cylinder is probably better than sucking all that water up and jetting it back in again.

I think this is a winning idea – I’m not sure where the major disadvantage is… maybe getting power out to the pods. I wonder if it would be possible to add tidal generation at some point in the future? Till then I guess a big fat shore cable will work!

Leaks perhaps, but that’s probably on a par with earthquakes/fires/hurricanes etc.

I don’t see security being much worse than on land really. It’s a bit remote, so it’s harder to monitor than cables/equipment on land, but it can be protected and monitored.

This – or some version of this (barges?) – will very likely be in the future of the datacenter IMO. Balloons in the air and pods in the sea…. talk about cloud computing!