After our recent piece, The Everything Fanless Home Server Firewall Router and NAS Appliance, we got no fewer than a dozen comments from readers saying that the Intel X553 does not work with Linux kernel 6.x. That would include Proxmox VE 8 and Proxmox VE 8.1, as well as many other distributions. We decided to double-check, since it had worked in our testing, which has been ongoing since September.

Intel X553 Networking and Proxmox VE 8.1.3

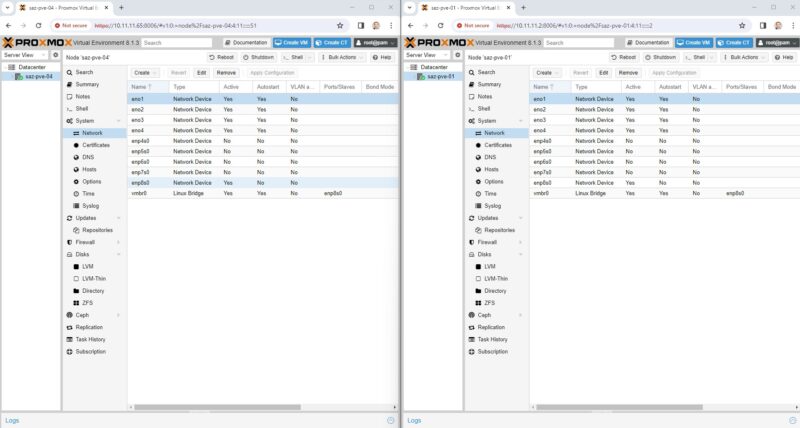

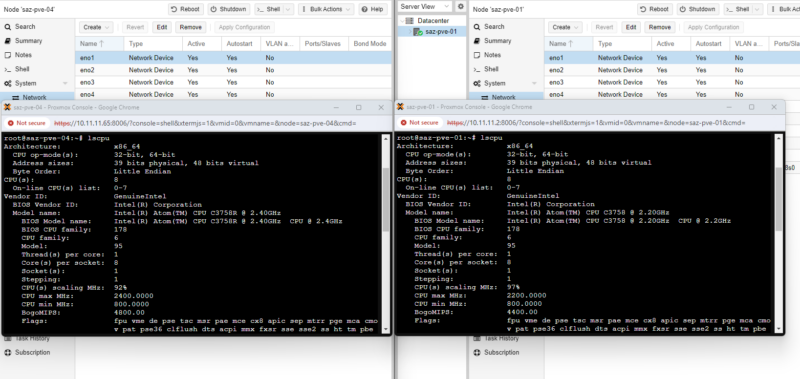

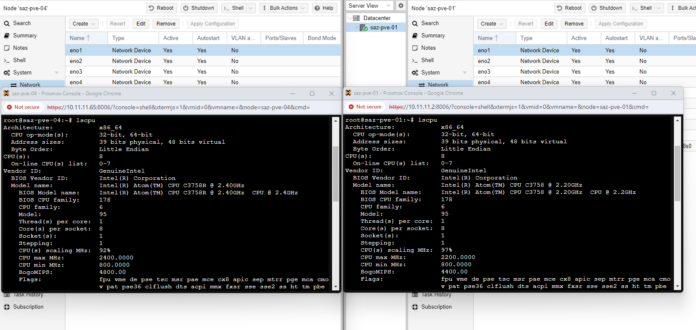

To double-check this, we set up two new Proxmox VE 8.1.3 instances, the latest version available. We did not update the C3758R instance beyond the installation packages; we only installed iperf3. The Qotom C3758 machine did install updates. These installations were done fresh today and are so fresh that they have not even been switched to the dark theme yet.

We set up the two hosts side by side. One challenge is that the SFP+ port naming is a bit hard to figure out since the ports on the systems are not labeled, so we just assigned IP addresses to all of the interfaces and tested them with different optics.
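Since the ports are unlabeled, one way to map interface names to the X553 SFP+ ports is to check which kernel driver each interface is bound to. This is a minimal sketch, assuming the SFP+ ports use the `ixgbe` driver and the standard `/sys/class/net` layout; the function name is hypothetical:

```shell
#!/usr/bin/env bash
# List the network interfaces bound to a given kernel driver (default: ixgbe).
# Useful for telling the X553 SFP+ ports apart from the other NICs when the
# ports are not labeled. The sysfs root is a parameter so the function can be
# pointed at a test tree; on a real system it is /sys/class/net.
list_ports_by_driver() {
    local driver="${1:-ixgbe}"
    local sysfs_net="${2:-/sys/class/net}"
    local dev link
    for dev in "$sysfs_net"/*; do
        link="$dev/device/driver"
        # Virtual interfaces (lo, bridges, bonds) have no device/driver link.
        [ -e "$link" ] || continue
        if [ "$(basename "$(readlink -f "$link")")" = "$driver" ]; then
            basename "$dev"
        fi
    done
}
```

Running `list_ports_by_driver ixgbe` prints the candidate names. If the driver supports it, `ethtool -p eno1 10` then blinks that port's LED for ten seconds, which helps match a name to a physical cage.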

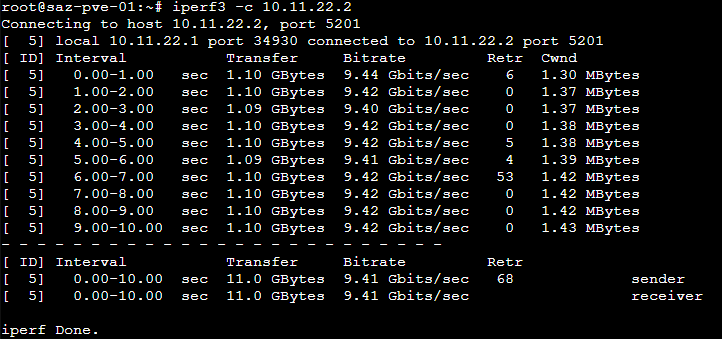

All of them performed about the same: we saw roughly 9.42Gbps just running iperf3 -s -D on one host and iperf3 -c on the other. The C3758(R) can push enough iperf3 traffic with a single instance, without having to run multiple instances or similar tuning.

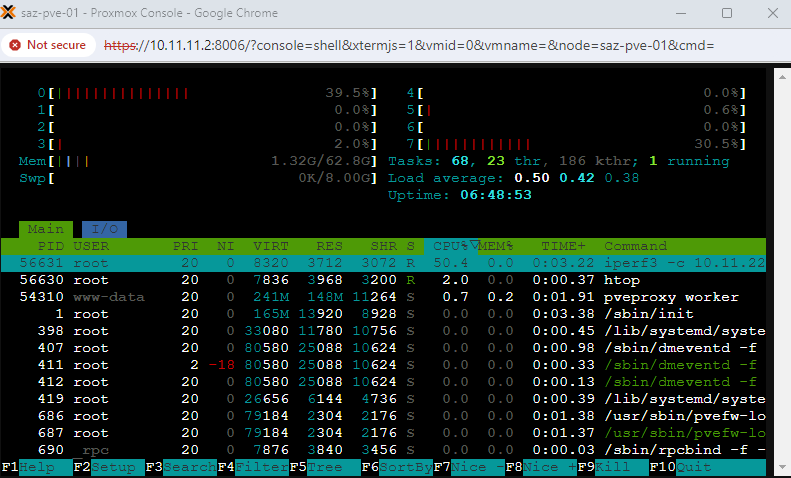

For folks wondering what CPU utilization looks like when pushing that traffic with iperf3, here is htop during the run.

Of course, iperf3 is a very simple traffic pattern, but the C3758 has plenty of performance for this, and the C3758R is about 6-8% faster.
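A plain `iperf3 -c` run only loads one direction of the link, since by default the client sends and the server receives. This is a minimal sketch that repeats the same test in the opposite direction using iperf3's `-R` (reverse) flag, assuming the server on the other host was started with `iperf3 -s -D` as above; the function name is hypothetical:

```shell
#!/usr/bin/env bash
# Run the same iperf3 client test in both directions against one host.
# Assumes "iperf3 -s -D" is already running on the remote side.
# -R (reverse mode) makes the server send and the client receive, so the
# second run loads the opposite direction of the link without swapping roles.
iperf3_both_directions() {
    local host="$1"
    local seconds="${2:-10}"
    echo "== client sends to $host =="
    iperf3 -c "$host" -t "$seconds"
    echo "== $host sends to client (-R) =="
    iperf3 -c "$host" -t "$seconds" -R
}
```

Call it with the other host's address, for example `iperf3_both_directions <server-ip> 10`. A large gap between the two numbers points at one direction of the path rather than at the link as a whole.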

Final Words

Normally we do not post updates like this, but there were so many comments saying the X553 did not work that we went back and tried it. We also tried it on an older Supermicro mITX board, and the results were similar.

While there may have been a hiccup for some folks, it appears that the current Proxmox VE 8.1.3 with the 6.5.11-4-pve and 6.5.11-7-pve kernels works out of the box, as we would expect.

If you are reading this and are unsure why this came up this week, there is a link in the introduction, and here is the video:

I hate to rain on the party, but I tried the EXACT same setup (see my other rant) and it refuses to connect with anything but itself. WHICH SFP+ modules do you use? According to the docs, it seems to hate anything but Intel. I am trying FreeBSD as a last-ditch effort to see if it’s even worth the trouble of getting 10Gbps working. It’s a nice box otherwise.

Make sure that the ixgbe driver is getting

https://forums.servethehome.com/index.php?threads/10gbit-interface-compatibility-intel-x553-mellanox-connectx-2.21477/

# echo "options ixgbe allow_unsupported_sfp=1" > /etc/modprobe.d/ixgbe.conf
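The same step as a small reusable function. This is a minimal sketch, assuming root and the standard `/etc/modprobe.d` location; the function name is hypothetical. The value is a parameter because, as we understand it, the in-kernel ixgbe takes a single 0/1 for `allow_unsupported_sfp`, while Intel's out-of-tree driver accepts one value per port (for example `1,1,1,1`); check the driver README for your version:

```shell
#!/usr/bin/env bash
# Write /etc/modprobe.d/ixgbe.conf so ixgbe accepts SFP+ modules that are not
# Intel-coded. The value is a parameter: a single 0/1 for the in-kernel driver,
# or one value per port (e.g. 1,1,1,1) for Intel's out-of-tree driver.
# The target directory can be overridden for testing.
write_ixgbe_sfp_conf() {
    local value="${1:-1}"
    local conf_dir="${2:-/etc/modprobe.d}"
    # Plain ASCII quoting only: curly quotes are not shell quotes and would be
    # written into the file, where modprobe would not recognize the line.
    printf 'options ixgbe allow_unsupported_sfp=%s\n' "$value" > "$conf_dir/ixgbe.conf"
}
```

The option only takes effect the next time the module is loaded, for example after `rmmod ixgbe; modprobe ixgbe` or a reboot.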

I don’t have that board, but Intel NICs can be picky about how transceivers are coded, even if they are not literally locked. Failing that, you can also verify with a DAC cable.

The problem doesn’t appear when you give the SFP+ NIC (eno1-4) an IP. The problem appears when you DON’T give the SFP+ NIC an IP, create a Linux bridge, and attach it to that NIC. On system boot, if you watch the link lights, all 4 are on at power-up (assuming you have them plugged into a switch), but when Proxmox loads, the link lights go off one by one about half a second apart, and attaching a VM to the bridge (e.g., vmbr0) yields no network connectivity.

Do I have a second on this?

Patrick, have you tried the config above (no IP for the NIC, attach a bridge)?

Correcting the above: giving the physical NIC (eno1) an IP does not in fact work. The IP just replies through my out-of-band connection, which is one of the 2.5G NICs on the same subnet.

Grrr. I want this to work. This is a great little server.
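One way to capture the symptom described in these comments (SFP+ links dropping once Proxmox brings up a bridge on a NIC with no IP) is to log the kernel's view of each bridge port. This is a minimal sketch, assuming the bridge is `vmbr0` and the standard sysfs layout; the function name is hypothetical, and it only reports state rather than fixing anything:

```shell
#!/usr/bin/env bash
# Print each port attached to a Linux bridge with its operstate and carrier.
# /sys/class/net/<bridge>/brif/ holds one entry per bridge port; the sysfs
# root is a parameter so the function can be run against a test tree.
bridge_port_status() {
    local bridge="${1:-vmbr0}"
    local sysfs_net="${2:-/sys/class/net}"
    local entry port state carrier
    for entry in "$sysfs_net/$bridge"/brif/*; do
        [ -e "$entry" ] || continue
        port="$(basename "$entry")"
        state="$(cat "$sysfs_net/$port/operstate" 2>/dev/null || echo unknown)"
        # Reading carrier fails while the interface is administratively down.
        carrier="$(cat "$sysfs_net/$port/carrier" 2>/dev/null || echo '?')"
        echo "$port operstate=$state carrier=$carrier"
    done
}
```

Running `bridge_port_status vmbr0` right after boot, and again once the link lights have gone out, should show whether the kernel also sees the carrier drop on eno1.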

@thomas I tried Rocky 8. Also a no-go. Chelsio 10Gb 850nm Chinesium modules.

Going to try actual Intel modules (don’t have any on hand, have to buy; will update around Jan. 18th).

@patrick Which modules DO you use in the Qotom?

My Intel modules are arriving. They’re pretty cheap on eBay, about 20 bucks. I just wish someone had posted this before I spent 12 hours trying to figure this crap out.

Solved – Build the latest ixgbe driver:

– Download the latest ixgbe source from Intel: https://www.intel.com/content/www/us/en/download/14302/intel-network-adapter-driver-for-pcie-intel-10-gigabit-ethernet-network-connections-under-linux.html

(pick the top choice – ixgbe-5.19.9.tar.gz)

– untar it to /usr/src/ixgbe-5.19.9

– follow https://www.xmodulo.com/download-install-ixgbe-driver-ubuntu-debian.html (but ignore the wget and tar -xvzf lines, you’ve already downloaded it)

– Don’t forget to add ixgbe to the end of /etc/modules

– Reboot.
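The steps above can be sketched as a script. This is a minimal sketch, assuming root, the `/usr/src/ixgbe-5.19.9` location from the list, and installed headers for the running kernel (on Proxmox VE these come from the pve-headers or proxmox-headers packages, depending on the release). The `update-initramfs` step is our addition rather than part of the original instructions, and the function names are hypothetical. Set `DRY_RUN=1` to print the commands instead of running them:

```shell
#!/usr/bin/env bash
# Sketch of the out-of-tree ixgbe build steps listed above.
# Assumptions: the tarball is unpacked in /usr/src/ixgbe-5.19.9, headers for
# the running kernel are installed, and this runs as root.
# With DRY_RUN=1 the commands are printed instead of executed.
maybe_run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

build_ixgbe() {
    local src="${1:-/usr/src/ixgbe-5.19.9}/src"
    local modules_file="${2:-/etc/modules}"
    maybe_run make -C "$src"
    # Without "make install" the new ixgbe.ko is built but never used.
    maybe_run make -C "$src" install
    # Load ixgbe at boot, without adding a duplicate line on re-runs.
    if ! grep -qx ixgbe "$modules_file" 2>/dev/null; then
        maybe_run sh -c 'echo ixgbe >> "$1"' sh "$modules_file"
    fi
    # Rebuild the initramfs in case it still carries the old in-kernel module.
    maybe_run update-initramfs -u -k "$(uname -r)"
}
```

Running `DRY_RUN=1 build_ixgbe` first shows exactly what will happen; then run `build_ixgbe` and reboot.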

Voila. It works now, even with the Chinesium 10Gb LC modules. Just gotta check they’re actually running at 10Gb…

Before this, I followed a few steps recommended in various other parts of the interwebs, like setting allow_unsupported_sfp ("options ixgbe allow_unsupported_sfp=1,1,1,1"), and still no go. I did not undo any of those steps, so a clean PVE8 install plus ixgbe compile _might_ not work. PM me if you’re stuck.

Someone needs to get the Proxmox team to include this latest driver, either in the kernel or as a module.
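To check that the modules really link at 10Gb, `ethtool <iface>` reports the negotiated speed. This is a minimal sketch of a hypothetical helper that pulls the speed, auto-negotiation, and link lines out of that output. Auto-negotiation is included because one explanation offered in this thread involves it. The helper assumes ethtool's usual `Field: value` text layout:

```shell
#!/usr/bin/env bash
# Summarize "ethtool <iface>" output: negotiated speed, auto-negotiation
# setting and link state. Reads the ethtool text on stdin, so it can be
# fed saved output as well as a live "ethtool eno1 | link_summary".
link_summary() {
    awk -F': ' '
        /^[ \t]*Speed:/            { speed = $2 }
        /^[ \t]*Auto-negotiation:/ { autoneg = $2 }
        /^[ \t]*Link detected:/    { link = $2 }
        END { printf "speed=%s autoneg=%s link=%s\n", speed, autoneg, link }
    '
}
```

A healthy SFP+ port should report `speed=10000Mb/s` and `link=yes`; comparing the autoneg value on both ends of a failing link may also be informative.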

@Patrick: The issue appears to be that a driver change to work around some buggy auto-negotiation behavior on some particular device causes issues with most other devices that don’t also have this behavior change implemented.

https://marc.info/?l=linux-netdev&m=170113799914642

When you tested between different devices with the same NIC using the same driver, it would indeed work just fine. However, if you were to test with an X553 on one end and some other network device, like a 10GbE switch, on the other, you’d probably be able to reproduce the issue people are experiencing.

Would you be able to do that?

I can verify that the new drivers install and work on Proxmox. I will be posting a nice how-to on getting Proxmox working with the Intel drivers. It’s not quite as straightforward as the directions suggest. Still waiting on my SFP modules; more to come.

Just a sad note to add: I ran it in a 95°F closet for 10 hours and it locked up. So any ‘high temp’ usage is out. It has to be in a nice cool 75ish area to work reliably.

Final Reply: I am sending it back to Amazon, as this device is simply “not fit for use in any environment that’s not heavily air-conditioned, with NON-switch non happy 10g interfaces”. Save yourself a LOT of time and just buy a TerraMaster. The review should have had better testing. I spent 20 hours trying to get this thing to work, so I am warning those of you who might make the mistake of buying this.

Oh, and it’s permanently dead after just 10 hours in a 90+ degree closet.

+1 on the “nice cool 75ish area to work reliably”, although I have not done any high-temp tests myself. I have it in a ~70°F basement, running pfSense and a few other VMs (Pi-hole, WiFi management, Home Assistant, …). With WAN-to-LAN traffic at 1Gb, the CPU sits around 75% on the host and 80+% on the pfSense VM, but the temps are not outrageous. It has been running since Jan. 14 as my “forbidden router”. The WAN connections are a 1Gb NIC to AT&T and a 1Gb NIC to a cell modem for backup; the LAN NIC is 10Gb Chinesium fiber to my backbone.

I would think that in a high-temp/low-airflow environment a fan would keep it from cooking itself, and its tendency to cook itself without one is a design flaw. So again, I agree with @thomasmunn that it needs to be in a controlled environment, despite its appearance as an “industrial” device.

Still can’t beat it for the price and the I/O it has.

@Michal Rysanek: Thank you. I built the updated drivers and it’s good to go. I’ll either have to watch for kernel updates and rebuild each time, or set up DKMS. Hopefully they fix this in the near future.
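For the DKMS route, DKMS expects the source in `/usr/src/<name>-<version>` (matching the `/usr/src/ixgbe-5.19.9` location used earlier in this thread) plus a `dkms.conf` describing how to build it. This is a minimal sketch that writes one. The variable names are standard `dkms.conf` fields, but the `make` arguments (`KSRC`, `BUILD_KERNEL`) are our assumption about Intel's driver Makefile and should be checked against the README in the tarball. The function name is hypothetical:

```shell
#!/usr/bin/env bash
# Write a minimal dkms.conf for the out-of-tree ixgbe driver so DKMS can
# rebuild it automatically for each new kernel. Assumes the source lives in
# /usr/src/ixgbe-<version> (the path DKMS expects) with its Makefile in src/.
write_ixgbe_dkms_conf() {
    local version="${1:-5.19.9}"
    local srcdir="${2:-/usr/src/ixgbe-$version}"
    # Quoted heredoc: ${kernelver} must stay literal, DKMS fills it in per kernel.
    cat > "$srcdir/dkms.conf" <<'EOF'
PACKAGE_NAME="ixgbe"
PACKAGE_VERSION="@VERSION@"
BUILT_MODULE_NAME[0]="ixgbe"
BUILT_MODULE_LOCATION[0]="src/"
DEST_MODULE_LOCATION[0]="/updates"
MAKE[0]="make -C src/ KSRC=/lib/modules/${kernelver}/build BUILD_KERNEL=${kernelver}"
CLEAN="make -C src/ clean"
AUTOINSTALL="yes"
EOF
    # The heredoc is not expanded, so substitute the version afterwards.
    sed -i "s/@VERSION@/$version/" "$srcdir/dkms.conf"
}
```

With the `dkms` package installed, the module is then registered and built with `dkms add -m ixgbe -v 5.19.9`, `dkms build -m ixgbe -v 5.19.9` and `dkms install -m ixgbe -v 5.19.9`; `dkms status` shows the result.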

Looking for help… I’m using a C3758R unit with Proxmox 8.1.3. I installed the latest Intel drivers for the X553, and both the 10G SFP modules and the DAC cables link. If I run iperf3 3.10.1 (server) on my NAS and iperf3 3.12.0 (client) on the C3758R, I get ~9.3Gbps…

If I reverse server and client, I only get ~4Gbps… I also tried running two VMs on the C3758, giving each exclusive access to a 10G port. When I run iperf3 between them, the bandwidth again seems to top out at ~4Gbps.

What gives? Is the C3758 just not able to serve iperf3 fast enough?

Compiling the newer module is one thing, but getting Proxmox to use it instead of the 2016 driver is another problem:

dmesg | grep ixgbe

[ 2.217232] ixgbe: Intel(R) 10 Gigabit PCI Express Network Driver

[ 2.217235] ixgbe: Copyright (c) 1999-2016 Intel Corporation.

Then I have to run rmmod ixgbe; modprobe ixgbe

Only then does it use the new driver; otherwise it doesn’t:

[ 1143.203267] ixgbe: module verification failed: signature and/or required key missing - tainting kernel

[ 1143.224239] Intel(R) 10GbE PCI Express Linux Network Driver - version 5.19.9

[ 1143.224245] Copyright (C) 1999 - 2023 Intel Corporation

[ 1143.228687] ixgbe 0000:0b:00.0 0000:0b:00.0 (uninitialized): allow_unsupported_sfp Enabled

And yes, ixgbe is listed in /etc/modules.
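The log above shows the in-box driver loading about two seconds into boot, with the new one only appearing after a manual reload. We have not verified the cause, but a plausible one is an older copy of the module in the initramfs, which `update-initramfs -u` regenerates; `modinfo -n ixgbe` prints which `.ko` file modprobe would pick. To check the result after a reboot, this is a minimal sketch of a hypothetical helper that reads `dmesg` output and reports which driver announced itself last, keyed on the two banner formats quoted in this comment:

```shell
#!/usr/bin/env bash
# Report which ixgbe driver the kernel log says was loaded most recently.
# In the logs above, Intel's out-of-tree driver announces itself with
# "... Linux Network Driver - version X.Y.Z", while the in-kernel driver
# prints "ixgbe: Intel(R) 10 Gigabit PCI Express Network Driver" and no version.
# Reads dmesg output on stdin; the last matching line wins.
ixgbe_loaded_driver() {
    awk '
        /ixgbe: Intel\(R\) 10 Gigabit PCI Express Network Driver/ { drv = "in-kernel" }
        /Intel\(R\) 10GbE PCI Express Linux Network Driver/ {
            n = split($0, w, " ")
            drv = "out-of-tree " w[n]
        }
        END { print (drv == "" ? "not found" : drv) }
    '
}
```

Usage: `dmesg | ixgbe_loaded_driver`. After a clean reboot, the goal is to see `out-of-tree 5.19.9` without any manual `rmmod`/`modprobe`.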

Do not forget the “make install” like I did.

Also see: https://www.servethehome.com/day-0-with-intel-atom-c3000-getting-nics-working/ for compiling the out-of-tree driver.

The current driver version is 5.19.9.

@Michael C

I’m having the same issue. In one direction I can max out the 10Gb link with iperf; in reverse I only get about 3-4Gb/s.

I’m having the same trouble. It does run hot as hell, even though sensors reports the CPU at only 47°C. I bought some USB-powered fans to sit on top, and they are a game changer: suddenly the chassis is cool to the touch. That said, the 10G SFP port situation is a mess. It does not work in kernel 6.x without the new driver, and for me I get full speed in one direction and nothing in the other. I’ve tried three types of SFP+ RJ45 adapters, all with the same result. I’m now going to try SFP+ DAC cables in case it’s a power issue, as I have heard the RJ45 ones are power-hungry. But it’s ridiculous; surely SFP ports should support whatever adapter you use.
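As this comment shows, a CPU sensor reading of 47°C says little about how hot the chassis or the SFP+ modules are. This is a minimal sketch that lists every thermal zone the kernel exposes, assuming the standard `/sys/class/thermal` layout (temperatures in millidegrees Celsius); the function name is hypothetical. For the transceivers themselves, `ethtool -m eno1` dumps the module's diagnostic data, including its temperature, if both the module and the driver support it:

```shell
#!/usr/bin/env bash
# Print every thermal zone the kernel exposes, in whole degrees Celsius.
# Sysfs reports temperatures in millidegrees, so divide by 1000.
# The base directory is a parameter so the function can use a test tree.
thermal_zones() {
    local base="${1:-/sys/class/thermal}"
    local zone type temp
    for zone in "$base"/thermal_zone*; do
        [ -r "$zone/temp" ] || continue
        type="$(cat "$zone/type" 2>/dev/null || echo unknown)"
        temp="$(cat "$zone/temp")"
        echo "$(basename "$zone") $type $((temp / 1000))C"
    done
}
```

Logging this periodically alongside the link state can help separate thermal lockups from driver problems.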

This should work out of the box with common Linux distributions. These are Intel NICs. If this doesn’t work out of the box with Debian / pfSense / OPNsense, they shouldn’t be selling it.