Intel DC P4510 Server Architecture Testing

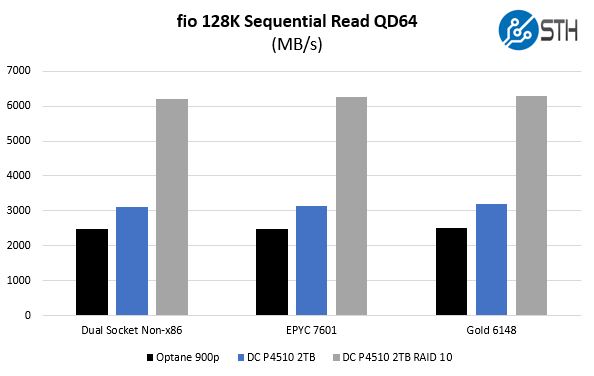

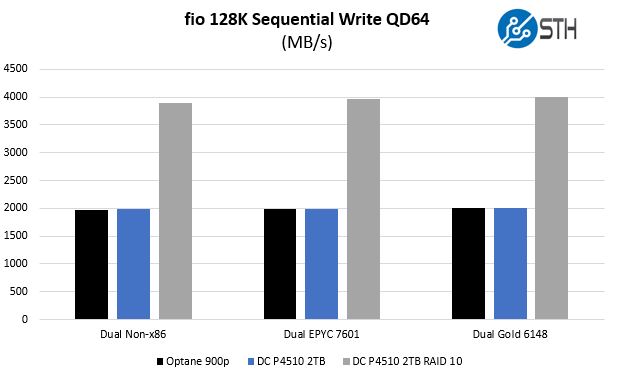

We also wanted to see whether there were Intel-specific platform optimizations, so we tested an AMD EPYC system as well as a semi-available non-x86 dual-socket platform. Just before this review, we were told that we cannot name that platform yet; we will reproduce these numbers in our review of it. We are also using md RAID for the RAID 10 results. Here is a quick look at the sequential numbers:
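The review does not list the exact md RAID commands used. As a minimal sketch, assuming four NVMe namespaces with the hypothetical names /dev/nvme0n1 through /dev/nvme3n1 and mdadm's standard `--create`, `--level` and `--raid-devices` options, a script could assemble the RAID 10 command like this. It only builds and prints the command; it does not touch any disks.

```python
def mdadm_raid10_argv(md_device, members):
    """Build (but do not run) an mdadm command line that creates an md RAID 10 array."""
    if len(members) < 2:
        raise ValueError("md RAID 10 needs at least two member devices")
    return [
        "mdadm", "--create", md_device,
        "--level=10",
        f"--raid-devices={len(members)}",
        *members,
    ]


if __name__ == "__main__":
    # Hypothetical device names; check lsblk on your own system first.
    devices = [f"/dev/nvme{i}n1" for i in range(4)]
    print(" ".join(mdadm_raid10_argv("/dev/md0", devices)))
```

Keeping the command as a list makes it safe to hand to `subprocess.run` later without shell quoting issues, but running it destroys any data on the member devices.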

Here you can see that the Intel platforms are actually the fastest with the NVMe-based solutions, but the AMD EPYC 7601 and the non-x86 architecture are close.

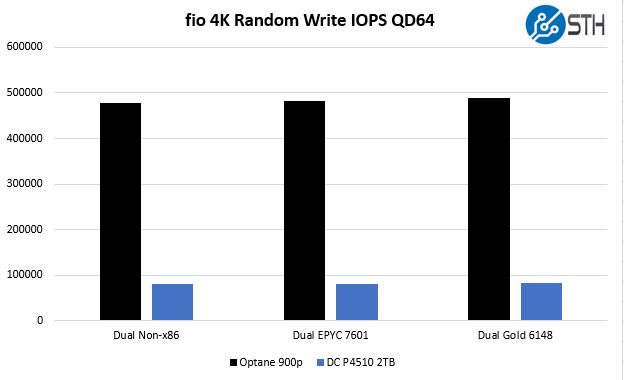

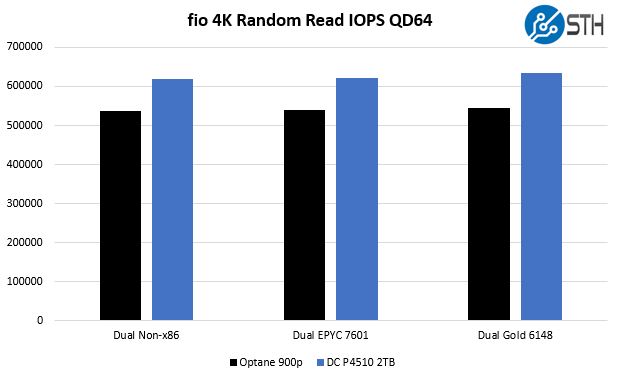

Moving over to the random 4K IOPS side, the architectures again remain close. However, the 4K random write performance of the Intel Optane drive clearly shows why we recommend it for write-intensive workloads.
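Random 4K results are quoted in IOPS rather than MB/s. Converting between the two is a multiplication by the transfer size; the sketch below assumes 4096-byte transfers and decimal megabytes, and the 100,000 IOPS figure is purely illustrative, not a number read from these charts.

```python
BLOCK_SIZE_BYTES = 4096  # one "4K" random I/O


def iops_to_mb_per_s(iops, block_size=BLOCK_SIZE_BYTES):
    """Convert an IOPS figure into throughput in decimal megabytes per second."""
    return iops * block_size / 1_000_000


def mb_per_s_to_iops(mb_per_s, block_size=BLOCK_SIZE_BYTES):
    """Inverse conversion: throughput in decimal MB/s back to IOPS."""
    return mb_per_s * 1_000_000 / block_size


if __name__ == "__main__":
    # Illustrative figure only.
    print(iops_to_mb_per_s(100_000))  # 409.6
```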

We plan to run these drives through some of our larger test harnesses. Unfortunately, we received the drives only about a week before the embargo and had short notice that we would be doing the review.

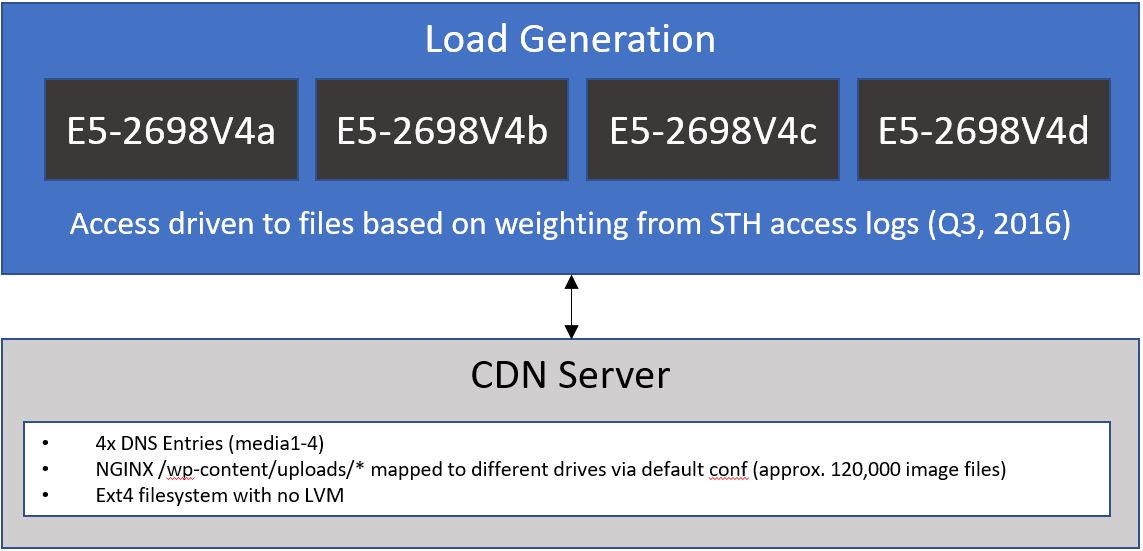

Intel DC P4510 8TB STH WordPress CDN Storage Performance

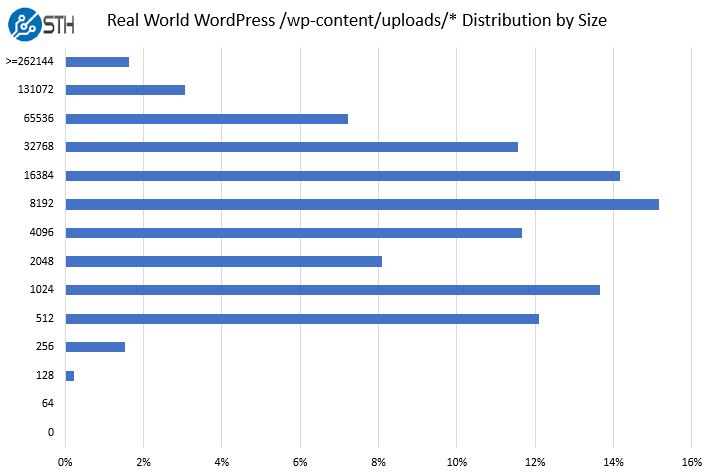

This is a test that we have been using for some time to show the performance of a CDN workload on these drives. We are using a real-world snapshot of the wp-content/uploads directory for a WordPress CDN image. We then host the files in an nginx-backed CDN and use that as the basis for our testing. Here is the distribution of file sizes in the data set.
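The post shows the file size distribution as a chart but not how it was produced. One way to build a similar histogram is sketched below, assuming a local copy of the uploads directory and power-of-two size buckets; both are our choices for illustration, not necessarily how the STH chart was generated, and the function names are hypothetical.

```python
import os
from collections import Counter


def size_bucket(num_bytes):
    """Return the power-of-two upper bound (in bytes) of the bucket a file falls into."""
    bucket = 1
    while bucket < num_bytes:
        bucket *= 2
    return bucket


def file_size_distribution(root):
    """Walk `root` and count regular files (symlinks skipped) per power-of-two size bucket."""
    counts = Counter()
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            if os.path.isfile(path) and not os.path.islink(path):
                counts[size_bucket(os.path.getsize(path))] += 1
    return dict(sorted(counts.items()))
```

For example, `file_size_distribution("wp-content/uploads")` returns a mapping such as `{4096: ..., 8192: ...}` that can be plotted directly; power-of-two buckets keep thumbnails and full-size images readable on one axis.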

For drives like the Intel DC P4510 NVMe SSDs, this is a real-world test case of how they will be used in the field. In that role, they should never be subjected to a 100% write workload or a 4K-only workload.

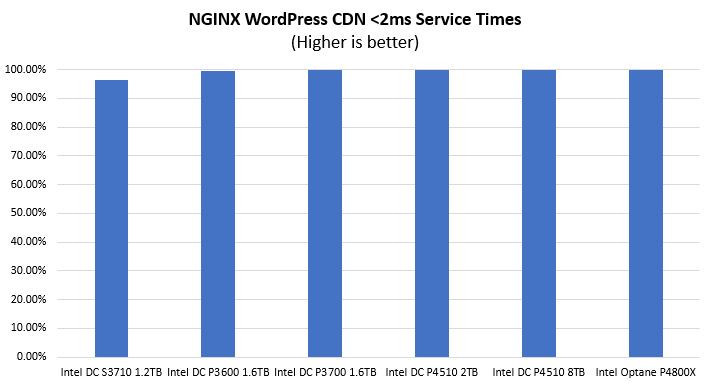

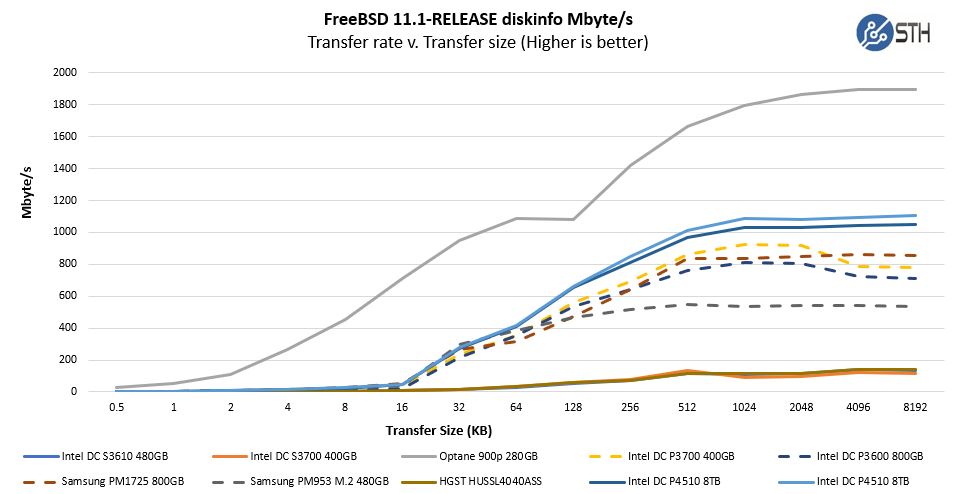

Here is what the distribution looks like across several SSD generations and types.

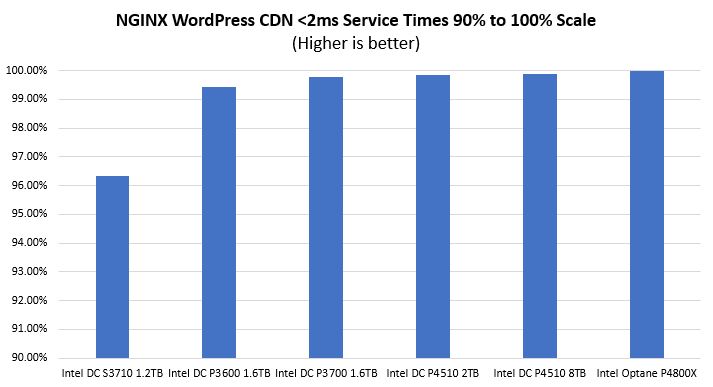

Here is a zoomed-in view of that chart using a 90% to 100% scale to better show the differences.
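Zooming in on the 90% to 100% range emphasizes tail behavior. Assuming the chart is a cumulative distribution of per-request latencies (the post does not spell this out), tail points like these can be computed with the nearest-rank percentile method; the sketch below is our own illustration, not the STH tooling.

```python
import math


def nearest_rank_percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("need at least one sample")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def tail_profile(samples, pcts=(90, 95, 99, 99.9, 100)):
    """Percentile -> latency map covering the 90-100% region of a CDF."""
    return {p: nearest_rank_percentile(samples, p) for p in pcts}
```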

The key takeaway is that if you are buying servers with NVMe or SATA/SAS drive bays, there is an enormous difference. With the newest generation of NVMe SSDs like the Intel DC P4510, it makes sense to eschew legacy storage protocols for these workloads.

Write Cache and Logging

If you need a write cache drive, Intel Optane is the way to go, period. There is nothing else in the same league. See our Exploring the Best ZFS ZIL SLOG SSD with Intel Optane and NAND piece for a real-world example of Optane as a ZIL/SLOG device. While 4K random write figures are useful, there are also heavy write patterns that flush data.
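A flush-heavy pattern, like the one a ZIL/SLOG device sees, differs from a plain 4K random write test because each write must reach stable storage before the next one is issued. A rough way to observe that cost is to time write-plus-`fsync` pairs. This is a minimal sketch, not the benchmark behind these results; the file path and block size are assumptions.

```python
import os
import time


def sync_write_latencies(path, count=100, block_size=4096):
    """Write `count` blocks, calling fsync after each one; return per-write latency in seconds."""
    payload = os.urandom(block_size)
    latencies = []
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o644)
    try:
        for _ in range(count):
            start = time.perf_counter()
            os.write(fd, payload)
            os.fsync(fd)  # do not return until data and metadata are on stable storage
            latencies.append(time.perf_counter() - start)
    finally:
        os.close(fd)
    return latencies
```

Pointing `path` at a file on the drive under test and comparing the resulting latencies across devices shows the same kind of stratification as the chart, though filesystem and drive cache settings affect the numbers.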

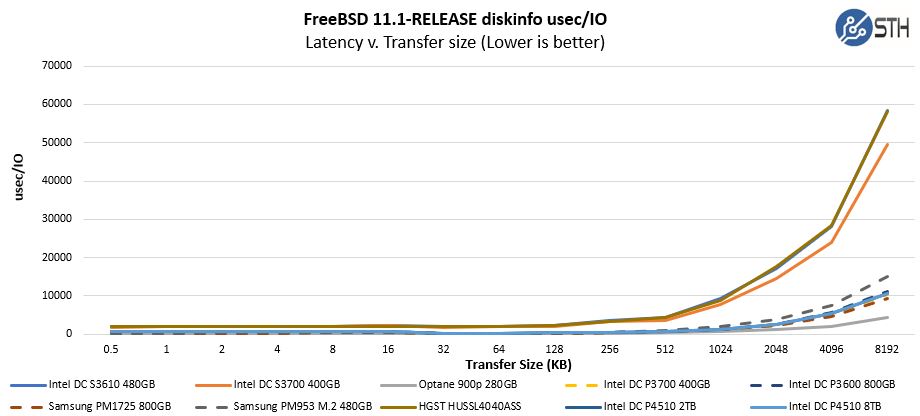

In this particular benchmark, there is an awesome stratification. SAS/SATA drives cannot keep up with the latest generation of NVMe SSDs, and Optane shows why it is at the top in these tests.

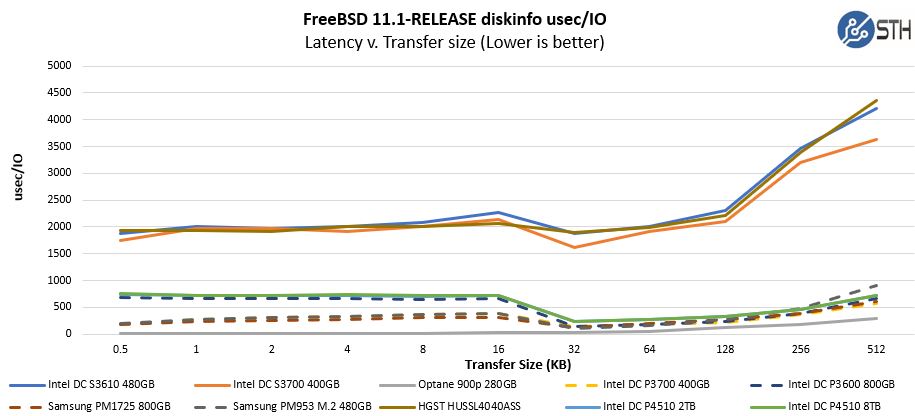

Here is a zoomed-in view of the latency:

Again, we have good results. The new controller and NAND seem to be performing well. The other benefit is that larger drives mean one can use fewer hot-swap bays for a given capacity. For storage servers and hyper-converged appliances, larger SSDs are highly desirable.
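The bay savings scale directly with drive capacity. As a simple illustration, assuming a hypothetical 96TB raw capacity target and ignoring RAID overhead:

```python
import math


def bays_needed(target_tb, drive_tb):
    """Number of drive bays needed to reach a raw capacity target (no RAID overhead)."""
    return math.ceil(target_tb / drive_tb)


if __name__ == "__main__":
    target_tb = 96  # hypothetical raw capacity target
    for drive_tb in (2, 4, 8):
        print(f"{drive_tb}TB drives: {bays_needed(target_tb, drive_tb)} bays")
```

Doubling drive capacity halves the bays, which frees slots (and PCIe lanes) for other devices.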

Final Words

With five drives installed in our Dell EMC PowerEdge R740xd, we saw a total power consumption impact of under 70W on our 208V racks. That is a great figure and significantly better than higher-power previous-generation options. With 10 drives per 1U server, we would expect power consumption 80-110W lower than with many of the other drives we have seen. In a 42U rack with 40x 1U servers, that is around 4kW of power, or about a thousand dollars a month in cost savings in our racks.
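The rack-level figure follows from multiplication: 40 servers saving 80-110W each is 3.2-4.4kW, which is where "around 4kW" comes from. The sketch below makes that explicit. The per-kW monthly rate is a hypothetical value back-calculated from the "about a thousand dollars a month" figure, not an actual STH colocation rate.

```python
def rack_savings_kw(servers, watts_saved_per_server):
    """Total power saved across a rack, in kilowatts."""
    return servers * watts_saved_per_server / 1000


def monthly_savings_usd(kw_saved, usd_per_kw_month):
    """Monthly cost savings for a colocation rate quoted per kW per month."""
    return kw_saved * usd_per_kw_month


if __name__ == "__main__":
    servers = 40  # 40x 1U servers in a 42U rack
    low = rack_savings_kw(servers, 80)    # 3.2 kW
    high = rack_savings_kw(servers, 110)  # 4.4 kW
    # Hypothetical rate: ~$1,000/month for ~4kW implies roughly $250 per kW-month.
    print(low, high, monthly_savings_usd(4.0, 250))
```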

We will be working with VROC, Intel’s NVMe RAID solution, in a future piece dedicated to that technology; the Dell EMC PowerEdge R740xd was not working well with our physical key. Still, without VROC you can use NVMe SSDs in RAID with standard software tools. We were even able to create ZFS parity RAID sets across the five drives we used, and we temporarily tried the 8TB drive in our Ceph cluster. Buyers of multiple NVMe SSDs will likely use a higher-end solution than VROC, but we have the keys and will cover that aspect soon.
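The review does not give the exact ZFS layout used across the five drives. As a hedged sketch, assuming a single raidz vdev, a hypothetical pool name `tank`, and hypothetical device paths, the standard `zpool create <pool> raidz2 <devices>` form and a rough capacity estimate look like this:

```python
RAIDZ_PARITY = {"raidz1": 1, "raidz2": 2, "raidz3": 3}


def zpool_create_argv(pool, layout, devices):
    """Build (but do not run) a `zpool create` command for a single raidz vdev."""
    if layout not in RAIDZ_PARITY:
        raise ValueError(f"unsupported layout: {layout}")
    return ["zpool", "create", pool, layout, *devices]


def approx_usable_tb(drives, drive_tb, layout):
    """Rough usable capacity: data drives times drive size.

    Ignores ZFS metadata, padding, and reserved slop space, so real pools report less.
    """
    parity = RAIDZ_PARITY[layout]
    if drives <= parity:
        raise ValueError("need more drives than parity devices")
    return (drives - parity) * drive_tb


if __name__ == "__main__":
    devices = [f"/dev/nvme{i}n1" for i in range(5)]  # hypothetical device names
    print(" ".join(zpool_create_argv("tank", "raidz2", devices)))
    print(approx_usable_tb(5, 8, "raidz2"), "TB (approx.)")
```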

Overall, these are great drives and a huge improvement over the P3xxx series. The capacity upgrades are welcome because they address the reality that systems are still limited by the number of PCIe lanes. Write performance is good, but again, if you have a write-heavy database or log device, just get Optane. If you have mixed workloads, or read-heavy workloads with smaller write bursts, then the Intel DC P4510 is a great option with more performance than previous-generation parts.
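The PCIe lane argument can be made concrete. Each U.2 NVMe drive such as the DC P4510 uses a PCIe x4 link, so without PCIe switches the lane budget caps the drive count, and per-drive capacity then sets the ceiling. The 48-lane storage budget below is a hypothetical figure for illustration.

```python
LANES_PER_DRIVE = 4  # U.2 NVMe SSDs such as the DC P4510 use a PCIe x4 link


def max_drives(lanes_for_storage, lanes_per_drive=LANES_PER_DRIVE):
    """How many directly attached NVMe drives fit in a lane budget (no PCIe switches)."""
    return lanes_for_storage // lanes_per_drive


def max_raw_capacity_tb(lanes_for_storage, drive_tb):
    """Raw capacity ceiling for a given lane budget and drive size."""
    return max_drives(lanes_for_storage) * drive_tb


if __name__ == "__main__":
    lanes = 48  # hypothetical lane budget left for storage after NICs and HBAs
    for drive_tb in (2, 4, 8):
        print(f"{drive_tb}TB drives: {max_raw_capacity_tb(lanes, drive_tb)}TB raw")
```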

Cool adding EPYC and the non-x86. Let’s see. Qualcomm is vaporware. Ampere is new and not shipping. So that’s Cavium?

I like the NGINX CDN results. Those are really good.

8TB helps us since we can get 96TB per U using those. Cliff, you could have done a better job explaining why that’s important for conserving PCIe lanes.

Thank god y’all did something other than pages of iometer and fio results. That’s boring. Can you do more Ceph?

What about the fact that retail drives like these won’t work right in HPE servers, because ProLiants throw errors when you put in drives without HPE firmware? We bought a few hundred drives only to find this out the hard way.

@Rajib A

Buy Supermicro the next time….

Supply constraints for the P4511 force me to look for alternatives for M.2 boot for Supermicro server builds. What other vendors are suitable for Enterprise?