Recently we became aware of a new version of diskinfo that uses FreeBSD libraries to simulate the ZFS ZIL / SLOG device pattern. While many tests online focus on pure writes, or 70/30 workloads, high write endurance drives are also used as log or cache devices where data is written then flushed. Just about everyone in the storage world knows about ZFS and the ability to use a fast device in front of an array to speed up performance. We wanted to take both traditional NAND SSDs as well as Intel Optane SSDs and use this new tool to see how they compare.

What is the ZFS ZIL and SLOG?

ZIL stands for ZFS Intent Log. The purpose of the ZIL in ZFS is to log synchronous operations to disk before they are written to your array. That synchronous part essentially is how you can be sure that an operation is completed and the write is safe on persistent storage instead of cached in volatile memory. The ZIL in ZFS acts as a write cache prior to the spa_sync() operation that actually writes data to an array. Since spa_sync() can take considerable time on a disk-based storage system, ZFS has the ZIL, which is designed to quickly and safely handle synchronous operations before spa_sync() writes data to disk.

What is the ZFS SLOG?

In ZFS, people commonly refer to adding a write cache SSD as adding an “SSD ZIL.” Colloquially that has become like using the phrase “laughing out loud.” Your English teacher may have corrected you to say “aloud” but nowadays, people simply accept LOL (yes we found a way to fit another acronym in the piece!) It would be more correct to call it a SLOG, or Separate intent LOG, SSD. In ZFS the SLOG will cache synchronous ZIL data before flushing to disk. When added to a ZFS array, this is essentially meant to be a high speed write cache.

If you want to read more about the ZFS ZIL / SLOG, check out our article What is the ZFS ZIL SLOG and what makes a good one.

Testing the Intel Optane with the ZFS ZIL SLOG Usage Pattern

Today we have some results for the Intel Optane product as a ZIL / SLOG device. We have numbers for several products including the Intel Optane 900p and lower end products like the Optane Memory M.2 devices. We also have Intel NVMe SSDs along with a few devices from other vendors along the NVMe, SAS and SATA ranges to compare.

The diskinfo slogbench test we are using has a usage pattern that is unlike many of the pure write tests. It performs writes followed by regular flushes. This is different from many write-specific workloads that are often tested using tools like fio and Iometer. Instead, it is intended to more closely resemble ZFS ZIL SLOG usage patterns, or use as a write caching / log device. These are write-heavy roles, and they are also where we typically see high-speed SSDs with high write endurance and reliability.
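The write-then-flush pattern can be approximated in a few lines. The following is a minimal Python sketch, not the diskinfo implementation: it writes blocks of increasing size to an ordinary file and calls `os.fsync()` after every write, then reports mean latency per I/O and the resulting transfer rate. The function name `sync_write_bench`, the block sizes, and the iteration count are illustrative assumptions. Because it goes through a filesystem rather than the raw device, its numbers are not comparable to the charts in this article.

```python
import os
import tempfile
import time


def sync_write_bench(path, sizes_kib=(4, 16, 64), iterations=20):
    """Write blocks of each size, flushing after every write.

    Returns {size_kib: (mean_latency_us, mb_per_s)}.
    Hypothetical helper for illustration only.
    """
    results = {}
    fd = os.open(path, os.O_WRONLY | os.O_CREAT | os.O_TRUNC, 0o600)
    try:
        for size_kib in sizes_kib:
            block = os.urandom(size_kib * 1024)
            start = time.perf_counter()
            for _ in range(iterations):
                os.lseek(fd, 0, os.SEEK_SET)  # overwrite the same region
                os.write(fd, block)
                os.fsync(fd)                  # flush before the next write
            elapsed = time.perf_counter() - start
            mean_latency_us = elapsed / iterations * 1e6
            mb_per_s = (len(block) * iterations) / elapsed / 1e6
            results[size_kib] = (mean_latency_us, mb_per_s)
    finally:
        os.close(fd)
    return results


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = os.path.join(tmp, "slog_sketch.bin")
        for size, (lat, rate) in sync_write_bench(target).items():
            print(f"{size:>5} KiB  {lat:10.1f} us/IO  {rate:8.1f} MB/s")
```

The key point is that the timer includes the flush, so a device that acknowledges flushes quickly scores well even if its headline sequential write figure is modest.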

Test Setup

The genesis of this project was that a user requested we set up a custom demo in our DemoEval lab to compare drives using this specific workload. The individual wanted to compare a few of their existing NAND solutions to Optane. Here is the basic setup we used for this test:

- System: Supermicro 2U Ultra

- CPUs: 2x Intel Xeon E5-2650 V4

- RAM: 256GB (16x16GB DDR4-2400)

- OS: FreeBSD 11.1-RELEASE

The SSD stable is more interesting. We picked the Intel Optane M.2 16GB and 32GB drives just for fun. We also used a 280GB U.2 Intel Optane 900p. You will notice that we do not have P4800X results. We had good results, but they were off from where we would expect. For NVMe SSDs we have Samsung U.2 and M.2 offerings as well as the Intel DC P3700 and P3600. The particular Samsung M.2 drive we are using has PLP capacitors so it is representative of a data center M.2 device rather than a consumer M.2 device. Consumer drives, without PLP, have such poor performance that we excluded those results from our sets. We also have SATA drives in the form of the Intel DC S3700 and S3610 SSDs which show SATA performance. Finally, we have a popular HGST SAS SLOG device. You are going to quickly see the stratification of these results.

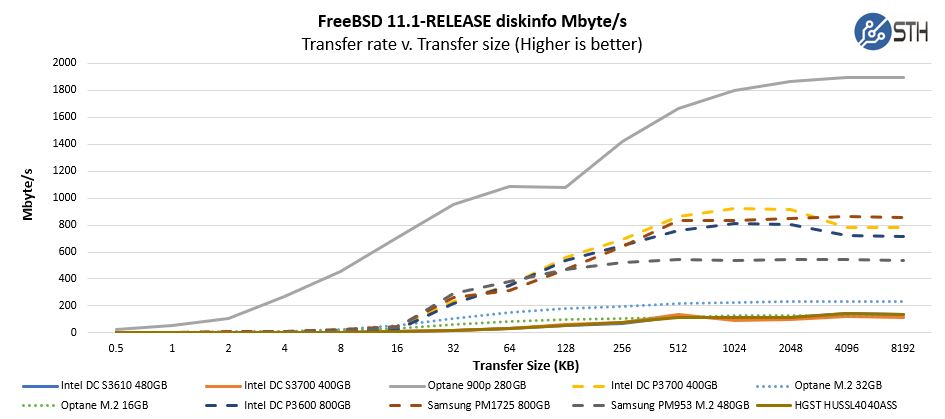

The Results: Transfer Rate

We managed to distill the output to three views which we find telling. One shows transfer rate in this usage scenario. The other two show average latency per IO. The results are generally similar to what we saw in our Intel Optane Memory v. SATA v. NVMe SSD: WordPress / vBulletin Database Import / Export Performance and Intel Optane: Hands-on Real World Benchmark and Test Results pieces.

The first view is raw MB/s. Here is the chart where you can see that the Intel Optane 900p 280GB drive is the clear leader despite some of the NAND NVMe SSDs having better throughput specs. If you are still on 10GbE, NAND NVMe SSDs can fill the pipe at larger transfer sizes. Once you go past there into 25GbE and 40GbE, you simply want Optane or something more exotic.
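To put the network comparison in the same units as the chart, the line rates can be converted to MB/s. This is a rough sketch that assumes decimal units and ignores Ethernet, TCP/IP, and storage protocol overhead, so usable throughput will be somewhat lower; the helper name `link_capacity_mb_per_s` is our own.

```python
def link_capacity_mb_per_s(gigabits_per_s):
    """Raw line rate in MB/s (decimal), ignoring protocol overhead."""
    return gigabits_per_s * 1000 / 8


for gbe in (1, 10, 25, 40):
    print(f"{gbe:>2}GbE ~ {link_capacity_mb_per_s(gbe):7.1f} MB/s")
```

A SLOG device needs to sustain sync writes at roughly these rates before the network, rather than the log device, becomes the limit.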

We wanted to highlight a few other parts of this chart. First, the NAND based NVMe SSDs occupy a distinct band to themselves with the two Intel and one Samsung 2.5″ U.2 SSDs offering somewhat similar performance. The Samsung PM953 M.2 SSD we are using has capacitors for power loss protection so it still performs well, albeit at a lower level than the performance-oriented NAND NVMe SSDs in our comparison. A Samsung 960 Pro M.2 NVMe SSD will be near the bottom of this chart, often below the SATA SSDs because of its lack of power loss protection for sync writes.

When it comes to the NAND SSDs for SATA or SAS, we see a tight grouping on this relative scale. This is a case where with latency sensitive I/O the legacy buses show their weaknesses. If you have a 1GbE ZFS NAS, this is unlikely to be an issue, but one can readily see the impacts.

Perhaps the most intriguing result is from the Optane Memory M.2 devices. Here one can see that these are the least expensive devices in the comparison group, but the 32GB version essentially obliterates SATA / SAS2 options despite its obvious handicapping from a product standpoint. The PCIe x2 interface paired with low power / package count Optane does surprisingly well. What these drives lack are capacity and endurance, but the performance is certainly there.

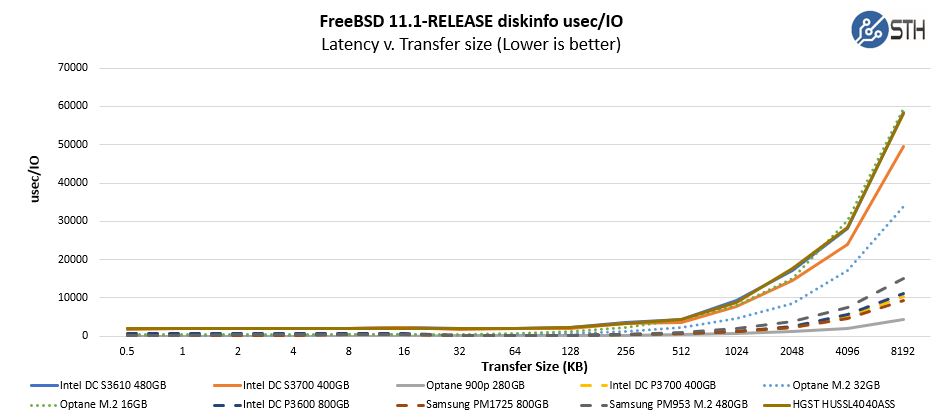

The Results: IO Latency

With a ZFS ZIL SLOG device, a key concern is I/O latency. Most storage systems do not run at 100% write utilization 24x7x365, so an important factor is how long each I/O takes. Here is what the chart looks like across the entire sample set.

One can see the general grouping here, again with the SATA and SAS offerings lagging and the NVMe NAND SSDs performing well. The Intel Optane 900p 280GB drive is again obliterating the competition.

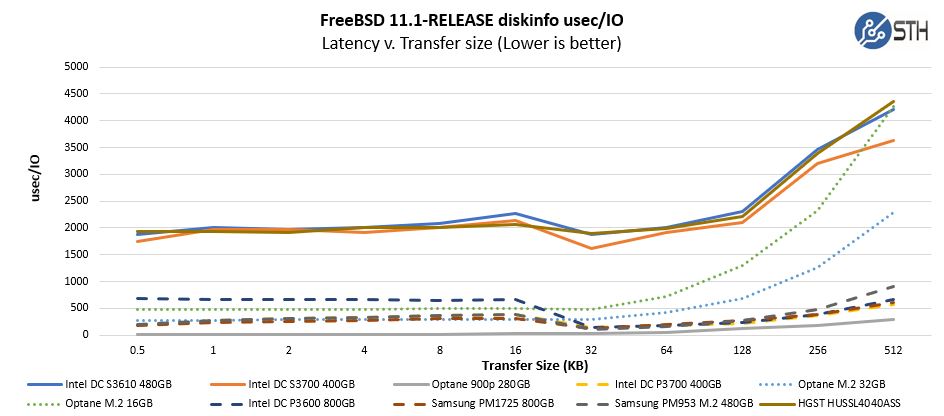

Below the 512K size, the chart is compressed to the point that it looks like all offerings are essentially the same. They are not. We took a sample of this chart stopping the results at 512K to show the difference.

Again, we can see the highest latency per I/O is the group of SATA and SAS drives we had. We actually had a few more drives but we wanted to limit our comparison group to 10 offerings. Realistically, while you can debate which one is faster, the message is clear: get on the PCIe bus.

The Intel Optane Memory M.2 devices are beyond intriguing. They are handicapped by the PCIe x2 interface and limited media, but at smaller transfer sizes (under 16K) they are competitive with higher-end NVMe drives. As transfer sizes go up, enterprise NAND NVMe SSDs can handle the throughput.

Coming back to the Intel Optane 900p, if you were not looking closely you may have missed it on the chart. Its grey bar looks like it is the X-axis for a good portion of this zoomed-in chart, with 1/10th the latency of the higher-performance enterprise NAND NVMe SSDs.
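The latency and transfer rate views are two sides of the same measurement. As a simplified sketch, assuming only one synchronous write is outstanding at a time, throughput is just block size divided by latency. The function name and the latency values below are hypothetical and chosen only to show the effect of a 10x latency difference; they are not measured results from our charts.

```python
def throughput_mb_per_s(block_kib, latency_us):
    """MB/s for back-to-back synchronous writes of one block at a time."""
    bytes_per_io = block_kib * 1024
    ios_per_s = 1_000_000 / latency_us
    return bytes_per_io * ios_per_s / 1_000_000


# Hypothetical latencies, one 10x lower than the other.
for latency_us in (200, 20):
    rate = throughput_mb_per_s(16, latency_us)
    print(f"16 KiB at {latency_us:>3} us/IO -> {rate:6.1f} MB/s")
```

At small transfer sizes the device with one tenth the latency delivers ten times the sync write throughput, regardless of its sequential bandwidth rating.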

What about the Intel DC P4800X as a ZFS ZIL SLOG?

As we mentioned earlier, we actually have data on the Intel DC P4800X. Directionally, you can look at the Intel Optane 900p 280GB drive and assume it is a bit better. We double-checked the configuration before publication and it was in a different PCIe slot so we did not feel comfortable publishing the comparison. The physical location seemed to have a slight impact on performance.

Realistically, a few factors matter more than the muted performance advantage when deciding whether to use an Intel DC P4800X: endurance and data integrity. The Intel DC P4800X 375GB SSD is rated at 4x the write endurance of the Intel Optane 900p. The Optane 900p is rated at 5PB. One could make a legitimate argument that a majority of 100-200TB ZFS appliances over five years will never push even 1PB of writes onto a SLOG device. That is fair. Once you are over 200TB, the cost of a mirrored Intel DC P4800X becomes so small on a TCO basis that we would recommend the Intel DC P4800X in a heartbeat.
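The endurance argument is easy to check with simple arithmetic. This sketch uses the figures quoted above (a 5PB rating for the 900p, 4x that for the P4800X, and 1PB of SLOG writes over five years) and assumes decimal units and a constant daily write rate; the helper names are ours.

```python
def daily_writes_tb(total_pb, years):
    """Average TB written per day to reach total_pb over the given years."""
    return total_pb * 1000 / (years * 365)


def years_to_rating(rating_pb, tb_per_day):
    """Years until the endurance rating is reached at a constant write rate."""
    return rating_pb * 1000 / (tb_per_day * 365)


rate = daily_writes_tb(1, 5)       # 1PB of SLOG writes over five years
optane_900p_pb = 5                 # rating quoted in the article
p4800x_pb = 4 * optane_900p_pb     # derived from the stated 4x ratio

print(f"Write rate: {rate:.2f} TB/day")
print(f"900p:   {years_to_rating(optane_900p_pb, rate):.0f} years to rating")
print(f"P4800X: {years_to_rating(p4800x_pb, rate):.0f} years to rating")
```

At that write rate either drive outlasts a typical deployment by a wide margin, which is why the data integrity features, rather than endurance alone, drive the recommendation for larger systems.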

The other reason to get an Intel DC P4800X over the Intel Optane 900p is data integrity. Originally the Intel Optane 900p was marked on Intel ARK as having power loss protection. That makes sense given the physical architecture. There are no RAM write cache packages on the P4800X or 900p due to how Optane works. What is more important is end-to-end data protection.

The official Intel DC P4800X v. Intel Optane 900p comment we got from Intel is:

As an enterprise part, the Intel® Optane™ SSD DC P4800X offers multiple data protection features that the Intel® Optane™ SSD 900P does not, including DIF data integrity checking, circuit checks on the power loss system and ECRC. The DC P4800X also offers a higher MTBF/AFR rating.

Given Optane performance, if you are building a large ZFS cluster or want a fast ZFS ZIL SLOG device, get a mirrored pair of Intel DC P4800X drives and rest easy that you have an awesome solution. If you are building a small proof of concept ZFS solution to get budget for a larger deployment, the Intel Optane 900p is a great choice and simply blows away the competition in its price range.

Final Words

When we used the new FreeBSD diskinfo slogbench, the Intel Optane drives stood out. In fact, they categorically obliterated SATA and SAS options. Even the previous generation category killer Intel DC P3700 is easily bested by the Intel Optane 900p (and P4800X). Although it is our conjecture, if you are building a 10TB ZFS proof of concept NAS with 1GbE networking, the Intel Optane Memory 32GB M.2 drive is an enormous upgrade over SATA and SAS devices in the sub-$100 category if you can live with the lower endurance and reliability ratings. This particular SLOG use pattern is quite common in other log device scenarios so it is instructive well beyond ZFS applications.

In the first part of this series, we investigated What is the ZFS ZIL SLOG and what makes a good one. In this article, we used a new tool to simulate the writes and flushes that a ZFS ZIL SLOG device goes through. We did not want to stop at using a synthetic test so in the next installment we have real-world data from a lab ZFS NAS. A quick spoiler there is that we have been seeing actual ZFS NAS performance that follows the stratification we see with this benchmark.

I thought it was just the thermometer but nope, this is the coolest thing I’ve seen all day

ZFS on Linux please.

Thanks for the review! This is an example of a real-world use for the 3DXpoint technology that’s very important to its real target market.

My only other request for real-world benchmarks would be in intensive database workloads if you have good benchmarks in that area.

What’s your process to test a single ZFS server to get the metrics listed above? I ask because I’d like to duplicate the process if it’s simple enough to test my server and get an apples to apples comparison when I make configuration changes.

My scenario is that I’m running a home server with 8x4TB storage drives (RAIDz2) and an Intel DC S3700 100GB SLOG. Recently switched my disks from a 3Gb SAS chassis to a 6Gb and wanted to see how big of a difference it made. Also testing with and without the SLOG to see how it impacted performance.

So, my scenario is *way* below what you do here, but it’d be interesting to see how big a gap it is.

Really loving this. raiderj it’s linked in the article but it’s FreeBSD 11.1 only. That’s a limitation I see since FreeBSD almost always lags Linux and Windows in hardware support and optimized driver support.

It’s not clear here, what was the backing pool made of for these tests? An array of SATA SSDs, SAS HDDs, something else?

You got this to work! I’d tried but it kept erroring out on me. I don’t think it works on Linux. For Christmas I want a FreeNAS to Linux port and this included. Santa?

Samuel Fredrickson you run this benchmark directly on the log devices so the pool backing doesn’t matter. It’s eliminating the pool variable while emulating the usage pattern.

Log scale for charts next time?

A log scale would be great if you can include it in this article. Great to see how ZFS works in different cases, thank you!

“The Samsung PM953 M.2 SSD we are using has capacitors for power loss protection so it still performs well, albeit at a lower level than the performance-oriented NAND NVMe SSDs in our comparison. A Samsung 960 Pro M.2 NVMe SSD will be near the bottom of this chart, often below the SATA SSDs because of its lack of power loss protection for sync writes.”

How do capacitors for power loss protection improve performance?

@Misha Engel this is explained in more depth in the previous article on what makes a good ZFS ZIL SLOG device.

Short version: if you are doing a sync write to a SSD without PLP a write needs to hit NAND before it is acknowledged. As a result, the performance is much lower.

Now I’m curious as to how these new optane drives would work within a microsoft tiered storage spaces solution. We’re pushing intel dc p3700s for database clients but I’m thinking (based on the small db clients) that these would be just as good/better in some regards and more price competitive.

We are actually going to have one of the STH hosting DB servers using (mirrored) Optane soon. We use P3700’s/ XS1715’s in other nodes for this purpose. The machine is in burn-in now but we hope to get a few benchmarks done before it goes into production.

The biggest “issue” is the small capacity. Even 480GB is too small compared to the cost of adding more PCIe slots/ U.2 bays these days.

Patrick, isn’t the ZIL mirrored in RAM and on the SLOG? The only time a SLOG is ever read is on recovery from some catastrophic crash or power loss. You will not get any performance increase by mirroring a SLOG (it’s all writes except for the once in a blue moon recovery). The only benefit you get by mirroring a SLOG is to protect against performance degradation and avoid any data loss during a device failure or a device path failure.

But what are you really protecting yourself from …

Loss of a SLOG device during normal operation is a non-event – they simply get kicked out of the pool and the system will continue, possibly running a little slower. ZIL synchronous writes will go to the main pool drives instead of the SLOG device and/or l2arc caching is no longer there, depending which device fell over.

It seems like you are guarding against a failure of a SLOG happening at the exact same time you had some sort of spontaneous reset or power failure. What are the odds ?

Jon, the easy answer is that mirroring is relatively cheap. Mirroring just to keep replacement windows in the DC to at most a semi-annual basis.

First, Linux isn’t used much in large-scale storage systems because of its licensing brokenness. Not even Google observes the GPL. Second, if you really want enterprise grade storage with more IO than you can probably ever use, get a NetApp All Flash FAS system. All the benefits of FreeBSD and a better file system than ZFS.

Are the Intel 900p Optane AIC’s supported under Illumos yet? We’re running ZFS on Omnios and I’m always searching for better ZIL options.

@Shamz

“…First, Linux isn’t used much in large-scale storage systems because of its licensing brokenness…”

No, licensing is not the reason Linux is not used. Linux is not used because of scaling problems in storage:

http://www.enterprisestorageforum.com/technology/features/article.php/3749926/Linux-File-Systems-Ready-for-the-Future.htm

“My advice is that Linux file systems are probably okay in the tens of terabytes, but don’t try to do hundreds of terabytes or more. And given the rapid growth of data everywhere, scaling issues with Linux file systems will likely move further and further down market over time.”

Regarding NetApp, it costs lot of money. ZFS is free. And if WAFL is a better filesystem, I dont know. But it is certain that ZFS is safe as lot of research shows that. See the wikipedia article on ZFS for research papers.

Do you use the whole disk size of 900p as slog?

“The other reason to get an Intel DC P4800X over the Intel Optane 900p is data integrity….What is more important is end-to-end data protection.

As an enterprise part, the Intel® Optane™ SSD DC P4800X offers multiple data protection features that the Intel® Optane™ SSD 900P does not, including DIF data integrity checking, circuit checks on the power loss system and ECRC”.

This is not true. If you look at the spec sheets of Enterprise disks with DIF to ensure end-to-end data integrity, you will see that the spec sheets have an entry something like “1 irrecoverable error in every 10^15 bits read”. Now, how can a DIF disk with data integrity have irrecoverable errors when reading lot of data? Something does not add up. Ergo, DIF disks are not safe. So this Optane is not safe. Let me explain why.

The thing is, you need to compare the data integrity checksum on the disk, through the filesystem, raid controller, …., up to RAM. You need to compare that the checksums on both ends of the chain: disk to RAM agrees. A single disk can not do that, you need software that controls the entire chain, end to end. That is exactly what ZFS does. And that is why ZFS is the safest solution out there. A single raid controller can not access and check the data integrity in RAM, nor can a single disk. You need end-to-end software, i.e. ZFS.

Even if say, a filesystem such as ext4 had data integrity checksums, those checksums are not passed over to the volume manager, and neither are they passed over to the raid controller, etc and not passed all they way up to RAM. That is why a storage solution with many layers (filesystem, volume manager, raid, etc) are susceptible to data corruption: the individual parts might have checksums, but they do not communicate to each other. That is why you need a storage solution in charge of the entire storage stack, from filesystem all the way up to RAM, so you need a monolithic filesystem – which is exactly what ZFS is.

The Linux developers never understood why ZFS is designed the way it is, and mocked the monolithic construction (which is the only reason why ZFS was designed). They called ZFS a badly designed “rampant layering violation”. Later the BTRFS filesystem was developed by Linux devs, and it is also monolithic – but they never understood why they did a monolithic design of BTRFS, they just copied ZFS even though they don’t like layering violations. And if you read the forums, a lot of people lose data with BTRFS. It is not safe.

Optane can not access the data in RAM and confirm the data is not corrupted. Hence, Optane can not guarantee data integrity. You need a software solution in charge of the whole stack.

So will a PM953 do it for a small 10G Network or is it over burdened with it?

“We did not want to stop at using a synthetic test so in the next installment we have real-world data from a lab ZFS NAS.”

It has been almost a year since the article. Is the next part coming at all?

Great work!

What about dual Intel Optane Memory M10 16 GB PCIe M.2 80mm SSDs (Model number: MEMPEK1W016GAXT)? 16GB is plenty of Zil SLOG for many systems and according to Intel Ark it has an Endurance Rating (Lifetime Writes) 182.5 TB & Mean Time Between Failures (MTBF) 1.6 Million. For ~34 each from Amazon seems pretty cost effective if viable.

OK so my engineers are reluctant to specify P900 on a Supermicro since it is not on their supported NVMe drive list. Did you experience any compatibility problems? What Supermicro server was actually used in testing?

Hi,

why use 5 years old FreeBSD 11.1 from 2017 while you have up-to-date (and with OpenZFS) FreeBSD 13.1 released in the middle of 2022?

Regards,

vermaden

Hi vermaden – we are using 2017 FreeBSD 11.1 here because this article was published in December 2017. As a result, we could not use 13.1 because it was not available for around four and a half years after this article was published.