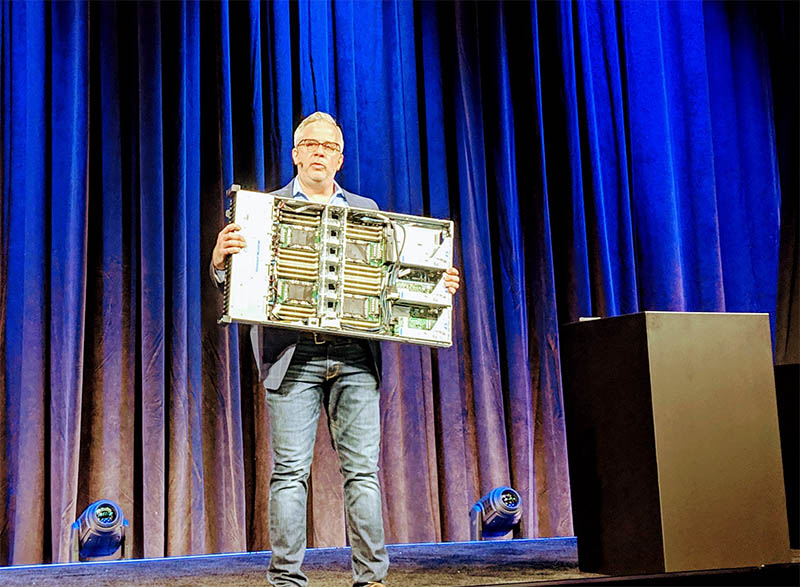

2U 4-socket OCP Design Contributed by Intel and Inspur

Intel and Inspur contributed a new quad-socket OCP design to the project. Jason Waxman of Intel first showed the server during his keynote, and it appeared again during Inspur’s keynote.

Currently, the vast majority of servers are dual-socket designs. I had the chance to ask Inspur what the key drivers are, other than density, for customers to adopt a 2U quad-socket design over a 2U dual-socket design.

Benefits customers are seeing on four-socket platforms include:

- Higher utilization with a larger resource pool

- Power supplies run at higher efficiencies due to higher loading

- Using six larger fans instead of twelve smaller fans saves around 30W

- Savings on network cards, boot devices, cables, power supplies, switch ports, and other per-server components
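To make the per-server savings concrete, here is a rough sketch of the arithmetic for a fixed number of CPU sockets. The per-node part counts and the 64-socket fleet size are purely illustrative assumptions, not Inspur figures. The sketch also assumes the roughly 30W fan saving applies once per quad-socket node, which the list above does not state explicitly.

```python
import math

# "Around 30W" comes from the list above; applying it once per
# quad-socket node is an assumption made for this sketch.
FAN_SAVINGS_W_PER_QUAD_NODE = 30


def nodes_needed(total_sockets: int, sockets_per_node: int) -> int:
    """Number of servers required to host the given number of CPU sockets."""
    return math.ceil(total_sockets / sockets_per_node)


def compare(total_sockets: int, per_node_parts: dict) -> dict:
    """Compare dual-socket and quad-socket fleets for the same socket count.

    per_node_parts maps a component name to how many of that component
    each server needs (hypothetical values supplied by the caller).
    """
    dual = nodes_needed(total_sockets, 2)
    quad = nodes_needed(total_sockets, 4)
    saved = {name: (dual - quad) * count for name, count in per_node_parts.items()}
    return {
        "dual_nodes": dual,
        "quad_nodes": quad,
        "parts_saved": saved,
        "fan_watts_saved": quad * FAN_SAVINGS_W_PER_QUAD_NODE,
    }


if __name__ == "__main__":
    # Hypothetical per-server component counts.
    parts = {"nic": 1, "boot_device": 1, "psu": 2, "switch_port": 2}
    print(compare(64, parts))
```

With these assumed inputs, halving the node count halves every per-server component, which is the effect the list describes.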

In the end, with Intel Xeon Scalable allowing Gold and Platinum CPU SKUs to operate in quad-socket mode, the incremental cost to go quad-socket has decreased. The Inspur NF8260M5 also has eight PCIe slots, allowing more GPUs and peripherals to be connected to the node.

GPU Inferencing at the Edge with the Inspur NE5250M5

This is the company’s design for the Open Telecom IT Infrastructure (OTII) standard. It is a dual-socket Intel Xeon Scalable system with space for multiple GPUs, including two NVIDIA Tesla V100 PCIe cards or up to six NVIDIA Tesla T4 cards.

One can see the foam inserts inside the chassis. These systems are meant to be deployed in relatively harsh field locations by telecoms and are built to have a large air filter in front of them. The two SFP+ network ports are driven directly by the Intel Lewisburg PCH. Using the PCH 10GbE gives the systems higher-speed networking at low cost. We asked Inspur, and it confirmed that the SFP+ networking from the PCH NIC does not require an extra PHY, which saves power and cost compared with 10GBASE-T or add-in card NICs.

The company also showed off an NE5260M5 variant with rear power supplies and eight 2.5″ hot-swap bays for more storage.

Final Words

Between OCP Summit 2019 and GTC 2019, Inspur made a fairly clear statement. It showed why it is growing fast as the largest AI systems supplier in China and why its growth rate dwarfs that of Lenovo, HPE, and Dell EMC. It is not simply making undifferentiated dual-socket CPU servers or purely following the NVIDIA HGX-2 spec. Instead, it is innovating by building larger topologies and getting them deployed at its cloud service provider clients. Inspur is clearly iterating faster on its GPU designs than players like HPE and Dell EMC, which showed relatively conservative designs at GTC by contrast. At STH, we recently reviewed the Inspur Systems NF5468M5, a 4U 8x NVIDIA Tesla V100 server.

Those are some amazing GPU servers. 32 V100 = 1TB of video RAM, plus you can stuff DIMMs into it. I want to know if it can handle Apache Pass and how much they cost.