Dell PowerEdge R6715 Power Consumption

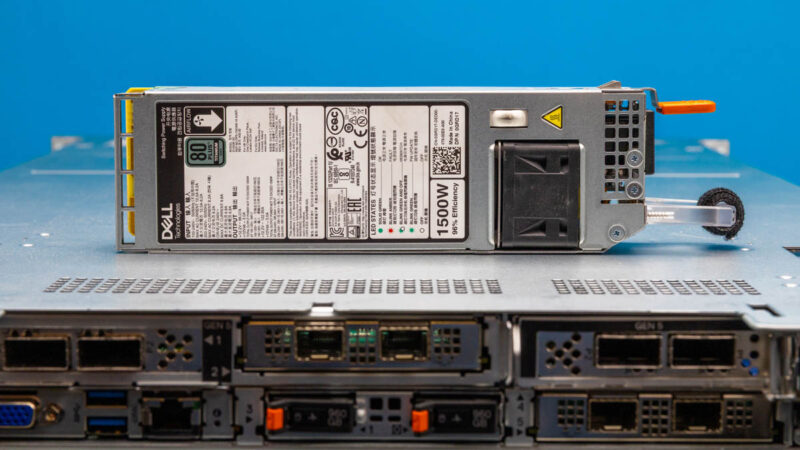

Our system had dual 1.5kW 80Plus Titanium power supplies. These are very high-end units for traditional single socket compute servers.

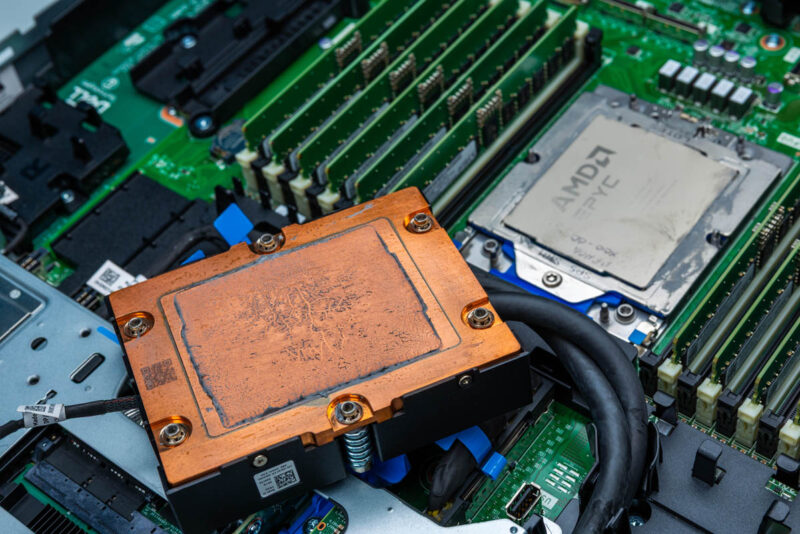

We ended up running this server at around 750-800W. As a fun aside, we took the NICs out and put them in the 2U server that we used for the reference point. Usually, 2U servers are more power efficient due to larger fans. Still, we were running about 50W higher in the 2U platform. Part of that is the power supplies, but part of that is also the liquid cooling. The liquid cooling removes a huge amount of heat from the system, so the fans run at lower speeds.
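To put the 750-800W figure in context against the dual 1.5kW supplies, here is a rough headroom sketch. It assumes the two PSUs share the load evenly and that the quoted range is the total system draw; the function name and the even-split model are illustrative assumptions, not something we measured.

```python
# Rough PSU headroom estimate from the figures quoted in this review.
PSU_RATED_W = 1500.0               # each of the two 1.5kW supplies
OBSERVED_LOAD_W = (750.0, 800.0)   # system power range quoted above


def psu_load_fraction(system_w: float, rated_w: float, active_psus: int) -> float:
    """Fraction of one PSU's rating in use, assuming an even load split."""
    if active_psus < 1:
        raise ValueError("need at least one active PSU")
    return system_w / (active_psus * rated_w)


for watts in OBSERVED_LOAD_W:
    shared = psu_load_fraction(watts, PSU_RATED_W, active_psus=2)
    failover = psu_load_fraction(watts, PSU_RATED_W, active_psus=1)
    print(f"{watts:.0f}W: {shared:.1%} per PSU shared, {failover:.1%} on a single PSU")
```

Under those assumptions, each supply sits at roughly a quarter of its rating in normal operation and a little over half if one supply fails, which leaves plenty of room for heavier configurations.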

As a quick aside here, it is a bit challenging to do a direct air to liquid comparison. We did not have the air cooled version for a side-by-side. Even on the liquid side, it also depends on how heat is evacuated, the loops used, and even the fluids used. Still, directionally it feels like we are in the ballpark of the number that we should see based on other systems that we have tested side-by-side.

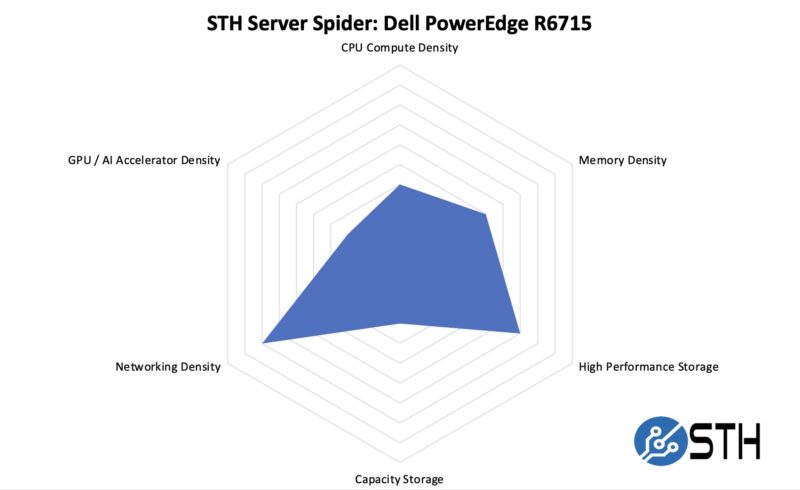

STH Server Spider: Dell PowerEdge R6715

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

Let us talk for a moment about throwing our traditional metrics for a loop. This is a single socket platform, but with 2 DIMMs per channel and a rear I/O area packed with expansion cards for networking, it is denser than one might imagine. At the same time, it does not reach the CPU density of a 2U 4-node platform. It is in some ways strange to discuss a liquid cooled platform and not be discussing maximum density systems. Still, the customization in terms of the EDSFF storage for higher density, the four rear NICs, and so forth in a 1U is truly impressive.

Final Words

In some ways, this is a really neat concept. The Dell PowerEdge R6715 is a great 1U server irrespective of the liquid cooling. Most of these servers will find their way into racks replacing legacy servers, and today that usually means LGA3647-based 1st and 2nd Gen Intel Xeon Scalable systems. Even using popular higher-end Xeons of that era, replacing three or four dual socket servers with one single socket node is an awesome capability. 160 cores in a single socket 1U server is great. The option to liquid cool that chip just adds an extra dimension to the customization one can do on this flexible server.
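As a quick sanity check on the "three or four" figure, here is a minimal sketch that compares raw core counts only. The 20 and 28 cores per socket values are illustrative assumptions for higher-end LGA3647 parts, and the comparison ignores per-core performance, memory, and I/O differences, which would generally favor the newer platform.

```python
NEW_NODE_CORES = 160  # single socket core count quoted in the review


def legacy_servers_replaced(cores_per_socket: int, sockets: int = 2) -> float:
    """Legacy servers matched by one new node on raw core count alone."""
    return NEW_NODE_CORES / (cores_per_socket * sockets)


# Assumed legacy core counts (not from the review): 20 and 28 cores per socket.
for cores in (20, 28):
    ratio = legacy_servers_replaced(cores)
    print(f"{cores} cores/socket: {ratio:.2f} dual socket servers per new node")
```

With those assumed core counts, the ratio lands between roughly three and four dual socket servers per node, which lines up with the consolidation range above.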

We review a lot of servers at STH, and the Dell PowerEdge R6715 is a stellar machine with more customization options than you can shake a stick at.

I think I saw the reference in this post, but it might have been somewhere else. I don’t understand why people still test Linux with Ubuntu or Debian – or VMs with Proxmox – and not Fedora. Fedora is the cutting edge of mainstream operating systems, using the latest kernel, adding new tech asap, retiring obsolete tech asap. Fedora server is dead easy to use. Its package system IMO is superior to everything else. I can set up one of these MinisForum mini PCs from box to customised server in less than an hour. From what I understand, getting Ubuntu or Proxmox to work with the latest kernel one needs to perform the operating system equivalent of a root canal. What’s the point of having the latest and greatest hardware if the operating system doesn’t exploit the technology? Even with Fedora, there is a few months’ wait unless one wants to play with rawhide.

One more thing… Fedora is developed by IBM. What did they used to say about using IBM?

@Mike: Because Ubuntu and Debian have well tested stable versions, and most people running servers want reliability above all else (hence the redundant power supplies, redundant Ethernet, etc.). If you want bleeding edge hardware support and you can tolerate the occasional kernel crash, that’s when you go with something that keeps packages more up to date, although Fedora is a bit of an odd choice for that as it still lags behind other distros like Arch or Gentoo that are typically only a day or so behind upstream kernel releases.

But I do question why you need the latest kernel on a server since they usually don’t get new hardware added to them very often, they typically come with slightly older well tested hardware that already has good driver support, and most server admins don’t like using their machines as guinea pigs either, especially when a kernel bug could easily take the machine out and prevent it from booting at all. It sounds more like something relevant to a workstation or gaming PC where you’re regularly upgrading parts, and you don’t need redundant PSUs etc. because uptime isn’t a primary concern.

Ubuntu is the most popular Linux distribution for servers. Usually, Red Hat and Ubuntu are the two pre-installed Linux distributions that almost every server OEM has options for. If you want to do NVIDIA AI, then you’re using Ubuntu for the best support. RHEL is maybe second.

If you’ve got to pick a distribution, you’d either have to go RHEL or Ubuntu-Debian. I don’t see others as even relevant, other than for lab environments.

If STH were a RHEL shop, I’d understand, as that’s a valid option. Ubuntu is the big distribution that’s out there, so that’s the right option. They just happened to use Ubuntu since before it was the biggest. I’d say a long time ago when they started using Ubuntu it was valid to ask Ubuntu or CentOS, but they’ve made the right choice in hindsight even though at the time I thought they were dumb for not using CentOS. CentOS is gone so that’s how that turned out.

Fantastic! AMD Ryzen is a better proposition than Intel price-wise, and it may surpass Intel’s performance in the long run.

you never mentioned how you cooled this server…. it has hoses…. to hook to what?

In the Dell R6715 technical guide, they publish memory speeds at 5200 MT/s even though the server (air cooled) uses 6400 MT/s DIMMs. Does the liquid cooled version of the R6715 also downshift the memory speed?

The memory speed is not a liquid vs. air issue. It is a challenge with the additional trace lengths that come with moving from 12 DIMM to 24 DIMM configurations on the motherboard.

Patrick, I understand that the second bank always creates a reduced memory bandwidth. What I don’t understand is why the R6715 documentation indicates 5200 MT/s when running 6400 MT/s DIMMs. The HPE DL325 has the same published numbers in its documentation. Why aren’t these servers achieving full bandwidth on 6400 MT/s DIMMs when running a single, fully populated bank?
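For a sense of what is at stake in the 5200 vs. 6400 MT/s question, here is a rough sketch of theoretical peak bandwidth. It assumes one memory channel per DIMM in the 12 DIMM layout and a 64-bit (8 byte) data path per channel, and it gives a ceiling only; measured bandwidth will be lower.

```python
BYTES_PER_TRANSFER = 8   # assumes a 64-bit data path per channel, ECC bits excluded
CHANNELS = 12            # assumes one channel per DIMM in the 12 DIMM layout


def peak_bandwidth_gbs(mt_per_s: int, channels: int = CHANNELS) -> float:
    """Theoretical peak memory bandwidth in decimal GB/s."""
    return mt_per_s * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


for speed in (5200, 6400):
    print(f"{speed} MT/s: {peak_bandwidth_gbs(speed):.1f} GB/s peak")

ratio = peak_bandwidth_gbs(5200) / peak_bandwidth_gbs(6400)
print(f"5200 MT/s delivers about {ratio:.0%} of the 6400 MT/s peak")
```

Under these assumptions, the gap between the two speeds is on the order of 115 GB/s of theoretical peak, which is why the published 5200 MT/s figure matters for bandwidth-bound workloads.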