Dell EMC PowerEdge R7415 Topology

Although the Dell EMC PowerEdge R7415 is a single socket solution, for those accustomed to Intel Xeon designs it is fundamentally different. Any thorough review of AMD EPYC 7001 series servers needs to address this difference in detail since it is perhaps the biggest difference between AMD EPYC and Intel Xeon systems today.

Each AMD EPYC 7001 series CPU uses a four NUMA node implementation. You can read more about why in our AMD EPYC 7000 Series Architecture Overview for Non-CE or EE Majors article or learn about it in this video:

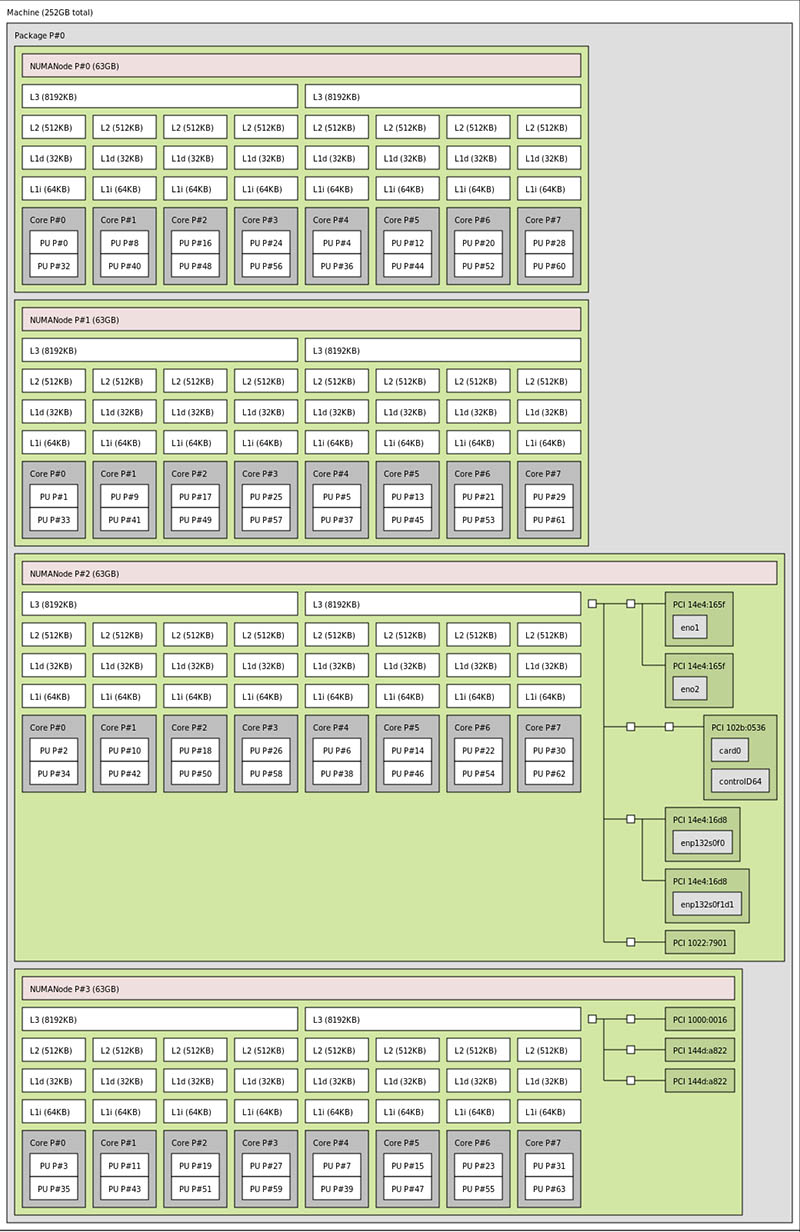

This multi-die design helps AMD deliver tremendous value since it can stitch together smaller pieces of silicon and make bigger chips than Intel can, at a lower price point. The impact of this shows itself in a key area: NUMA topology. Because this is meant to be a thorough review of an AMD EPYC 7001 series server, we felt the need to show the topology. Here is the layout of the Dell EMC PowerEdge R7415:

As you can see, each NUMA node in our test system consists of 8 cores and 16 threads. There are four DIMM slots per NUMA node. In our case, two slots per node are populated with 32GB RDIMMs, which means each NUMA node shows 64GB of RAM.
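For readers who want to sanity check the numbers, here is a minimal sketch of the arithmetic. The per-node figures come from the text above; the system totals are derived and assume all four NUMA nodes are populated identically. The function and constant names are ours, purely for illustration.

```python
# Per-node figures from the review text; totals are derived from them.
NUMA_NODES = 4                 # one EPYC 7001 socket exposes four NUMA nodes
CORES_PER_NODE = 8
THREADS_PER_CORE = 2           # 8 cores / 16 threads per node
DIMMS_POPULATED_PER_NODE = 2   # two of the four slots per node are filled
DIMM_SIZE_GB = 32


def node_memory_gb(dimms=DIMMS_POPULATED_PER_NODE, size_gb=DIMM_SIZE_GB):
    """Memory visible on one NUMA node, in GB."""
    return dimms * size_gb


def system_totals():
    """Whole-system totals, assuming every node is configured the same way."""
    cores = NUMA_NODES * CORES_PER_NODE
    return {
        "cores": cores,
        "threads": cores * THREADS_PER_CORE,
        "memory_gb": NUMA_NODES * node_memory_gb(),
    }


if __name__ == "__main__":
    print("Per node (GB):", node_memory_gb())
    print("System totals:", system_totals())
```

Under those assumptions the test system works out to 32 cores, 64 threads, and 256GB of RAM.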

The larger implication is the PCIe root complex. From this diagram, you can see the NVMe drives attached to NUMANode P#2 and NUMANode P#3. The 10GbE and 1GbE NICs plus the Dell PERC H740p are connected to NUMANode P#2.
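If you want to check device placement on your own system without a topology diagram, Linux exposes a `numa_node` file for each PCI device under sysfs. Here is a minimal sketch, assuming a Linux host; the function name `pci_numa_nodes` is ours, and the kernel reports `-1` when it does not know the node for a device.

```python
from pathlib import Path


def pci_numa_nodes(sysfs_root="/sys/bus/pci/devices"):
    """Map each PCI device entry to the NUMA node the kernel reports for it.

    Entries without a readable numa_node file are skipped. A value of -1
    means the kernel has no NUMA affinity information for that device.
    """
    root = Path(sysfs_root)
    result = {}
    if not root.is_dir():
        return result  # not a Linux host, or sysfs is not mounted
    for dev in sorted(root.iterdir()):
        try:
            result[dev.name] = int((dev / "numa_node").read_text().strip())
        except (OSError, ValueError):
            continue
    return result


if __name__ == "__main__":
    for address, node in pci_numa_nodes().items():
        print(f"{address}: NUMA node {node}")
```

On a system like the one reviewed here, we would expect the NVMe drives, NICs, and RAID controller to show up on the nodes named above, but the output depends on the slot and backplane configuration.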

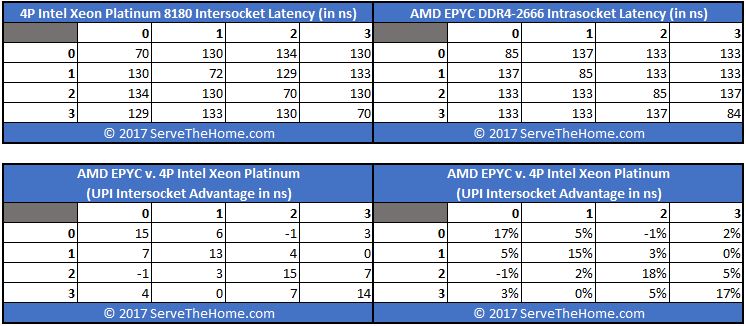

The implication of this is that when workloads or PCIe devices attached to one die need to access RAM or a NIC on one of the other three NUMA nodes, data must traverse the AMD Infinity Fabric. AMD Infinity Fabric is the magic AMD uses to make this setup work. For those coming from an Intel Xeon world, this effectively looks like how data flows around a 4-socket Xeon system. Here is an example from our piece AMD EPYC Infinity Fabric Latency DDR4 2400 v 2666: A Snapshot.

In many cases, users will notice little to no difference between Intel and AMD, but there are a few cases where this matters just like it does in Intel 4-socket systems.

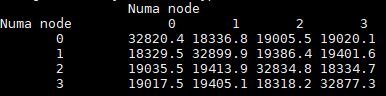

The other impact, aside from latency, is that bandwidth to RAM accessed over the Infinity Fabric is lower than local RAM bandwidth. Here is the 4×4 matrix of memory bandwidth between NUMA nodes. Local access will be around 32.8GB/s on each NUMA node. Remote access will be around 19GB/s in this architecture.
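To put those two figures in perspective, here is a small sketch that rebuilds an idealized version of the matrix and computes the remote-to-local ratio. It uses only the approximate 32.8GB/s and 19GB/s values quoted above and assumes every remote pair behaves the same, which real measurements will not do exactly.

```python
LOCAL_GBPS = 32.8    # approximate local bandwidth quoted in the text
REMOTE_GBPS = 19.0   # approximate remote bandwidth quoted in the text
NODES = 4


def bandwidth_matrix(nodes=NODES, local=LOCAL_GBPS, remote=REMOTE_GBPS):
    """Idealized matrix: local figure on the diagonal, remote figure elsewhere."""
    return [
        [local if src == dst else remote for dst in range(nodes)]
        for src in range(nodes)
    ]


def remote_to_local_ratio(local=LOCAL_GBPS, remote=REMOTE_GBPS):
    """Fraction of local bandwidth that is still available on a remote access."""
    return remote / local


if __name__ == "__main__":
    for row in bandwidth_matrix():
        print("  ".join(f"{value:5.1f}" for value in row))
    print(f"Remote is {remote_to_local_ratio():.0%} of local bandwidth")
```

With these inputs, remote access lands at roughly 58% of local bandwidth.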

This has an implication for minimum RAM population. With single socket AMD EPYC 7001 series systems, like the Dell EMC PowerEdge R7415, we suggest filling all eight memory channels with DDR4-2666 memory. Here is why:

- AMD Infinity Fabric between each die on the EPYC CPU is driven by memory speed. You want this fabric to run as fast as possible. Use DDR4-2666 with the AMD EPYC 7001 generation of CPUs.

- AMD EPYC will function with a single DIMM per socket, but performance will suffer severely because three of the four sets of cores have to access RAM over the Infinity Fabric.

- Intel Xeon functions well with a single DIMM because it is currently a single die design. With AMD EPYC, you will want at least four DIMMs per socket to ensure that each of the four dies has RAM directly attached to its memory controllers.
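The points above can be sketched as a quick population check. This is a simplified model, not a description of how any vendor actually assigns slots: it assumes four dies with two memory channels and four DIMM slots each (from the figures in this review), and that DIMMs are spread one per die, then one per channel, before any channel gets a second DIMM. The function names are hypothetical.

```python
DIES_PER_SOCKET = 4
CHANNELS_PER_DIE = 2   # eight memory channels across four dies
SLOTS_PER_DIE = 4      # four DIMM slots per NUMA node in the R7415


def dies_with_local_ram(dimm_count):
    """Dies that have at least one directly attached DIMM.

    Assumes DIMMs are spread evenly: one per die before any die gets a second.
    """
    max_dimms = DIES_PER_SOCKET * SLOTS_PER_DIE
    if not 0 <= dimm_count <= max_dimms:
        raise ValueError(f"dimm_count must be between 0 and {max_dimms}")
    return min(dimm_count, DIES_PER_SOCKET)


def population_summary(dimm_count):
    """Summarize how a given DIMM count maps onto dies and channels."""
    local = dies_with_local_ram(dimm_count)
    total_channels = DIES_PER_SOCKET * CHANNELS_PER_DIE
    return {
        "dies_local": local,
        "dies_remote_only": DIES_PER_SOCKET - local,
        "channels_filled": min(dimm_count, total_channels),
    }


if __name__ == "__main__":
    for count in (1, 4, 8):
        print(count, "DIMM(s):", population_summary(count))
```

In this model, one DIMM leaves three dies with only remote memory, four DIMMs give every die local RAM, and eight DIMMs fill every memory channel.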

For those looking to purchase a Dell EMC PowerEdge R7415, here is the short version: order 8 DIMMs in the PowerEdge R7415. If you need to order less RAM for cost reasons, get at least 4 DIMMs. Many of the AMD EPYC HPE ProLiant baseline configurations do not follow this advice and perform poorly in our lab as a result.

We are going to have a dedicated piece on why in the near future. Stay tuned to STH for that. In the meantime, the DIMM population guidance above is the key takeaway from that forthcoming analysis.

Next, we are going to take a look at the Dell EMC R7415 management. We are then going to look at the performance, power consumption, and our closing thoughts.

Patrick,

Does the unit support SAS3/NVMe in any drive slot/bay, or is the chassis preconfigured?

You can configure for all SAS/SATA, all NVMe, or a mix. Check the configurator for the up-to-date options.

@BinkyTO

It depends on how you have the chassis configured. It sounds like they have the 24 drive with max 8 NVMe disk configuration. With that the NVMe drives need to be in the last 8 slots.

How come nobody else mentioned that hot swap feature in their reviews? Great review as always STH. This will help convince others at work that we can start trying EPYC.

The system tested has a 12 + 12 backplane. 12 drives are SAS/SATA and 12 are universal supporting either direct connect NVMe or SAS/SATA. Thanks Patrick and Serve the Home for this very thorough review… Dell EMC PowerEdge product management team

These reviews are gems on the Internet. They’re so comprehensive. Better than many of the paid reports we buy.

I take it the single 8GB DIMM and epyc 7251 config that’s on sale for $2.1k right now isn’t what you’d get.

You’ve raved about the 7551p but I’d say the 7401p is the best perf/$

Great review! Any chance of a cooling analysis (with thermal imaging)?

Impressive review

Koen – we do thermal imaging on some motherboard reviews. On the systems side, we have largely stopped thermal imaging since getting inside the system while it is racked in a data center is difficult. Also, removing the top to do imaging impacts airflow through the chassis which changes cooling.

The PowerEdge team generally has a good handle on how to cool servers and we did not see anything in our testing, including 100GbE NIC cooling, to suggest thermals were anything other than acceptable.

Great review.

Thanks for the reply Patrick. Understand the practical difficulties and appreciate you sharing your thoughts on the cooling. Fill it up with hot swap nvme 2.5″ + add-in cards and you got a serious amount of heat being generated!

Great Review! Many thanks, Patrick!