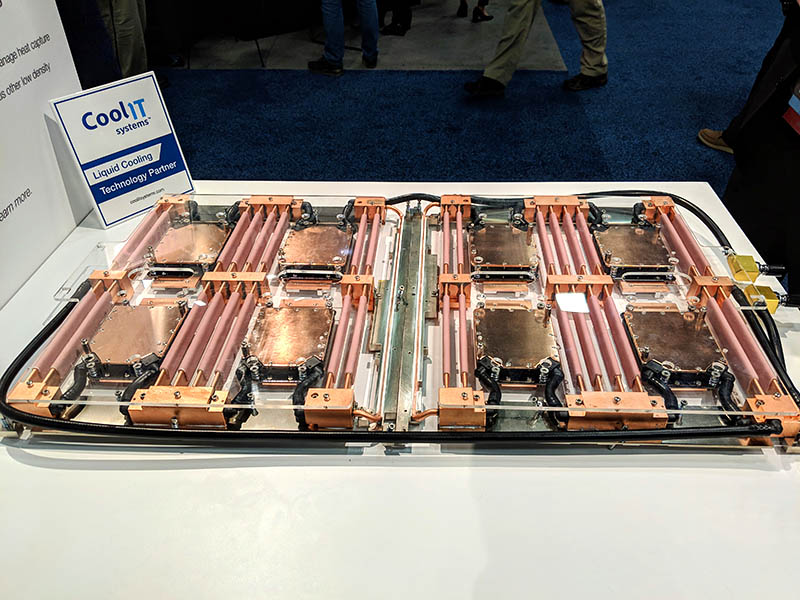

On the Supercomputing 2018 floor, we saw a hunk of metal that seemed inert at first. It looked like so many other liquid coolers with a series of tubes and copper bits. Yet this hunk of metal allows Cray to achieve something almost unimaginable by 2019. Using liquid cooling by CoolIT Systems, Cray is able to build systems with up to 1024 threads per U in 2019, with room to increase that number as processors evolve. Cray already has a Shasta design win in Perlmutter for the AMD EPYC “Milan” generation, which the company highlighted as a successor to 2019’s “Rome.” You can read more about AMD’s recent roadmap disclosure in our AMD Next Horizon Event Live Coverage.

Cray Shasta Liquid Cooling by CoolIT

With eight CPUs and cooling for up to 64 DIMMs, the liquid cooling solution allows Cray to handle twice the CPU density of typical 2U 4-node systems while reducing power consumption by 20-30% compared to air cooling. The design has another implication: we are told it can easily handle significantly higher-wattage parts.
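The density claim can be checked with simple arithmetic. The sketch below assumes a 1U blade (inferred from the "twice the density of 2U 4-node systems" comparison), 64-core EPYC "Rome" parts with two threads per core, and the eight DIMMs per CPU the platform is designed for. The `Chassis` class is purely illustrative and not part of any Cray tooling.

```python
from dataclasses import dataclass


@dataclass
class Chassis:
    """Hypothetical description of a server chassis for density math."""
    rack_units: int        # height of the chassis in U
    cpus: int              # CPU sockets in the chassis
    cores_per_cpu: int     # physical cores per CPU
    threads_per_core: int  # SMT threads per core
    dimms_per_cpu: int     # DIMM slots per CPU at 1DPC

    def threads_per_u(self) -> float:
        """Hardware threads per rack unit."""
        return self.cpus * self.cores_per_cpu * self.threads_per_core / self.rack_units

    def cpus_per_u(self) -> float:
        """CPU sockets per rack unit."""
        return self.cpus / self.rack_units

    def total_dimms(self) -> int:
        """DIMMs in the chassis when every channel has one DIMM."""
        return self.cpus * self.dimms_per_cpu


# Assumed Shasta blade: 8 CPUs in 1U, 64 cores each, SMT2, 8 DIMMs per CPU.
shasta = Chassis(rack_units=1, cpus=8, cores_per_cpu=64, threads_per_core=2, dimms_per_cpu=8)
# Assumed typical 2U 4-node system: two sockets per node, same CPUs.
two_u_four_node = Chassis(rack_units=2, cpus=8, cores_per_cpu=64, threads_per_core=2, dimms_per_cpu=8)

print(shasta.threads_per_u())                              # 1024.0
print(shasta.total_dimms())                                # 64
print(shasta.cpus_per_u() / two_u_four_node.cpus_per_u())  # 2.0
```

Doubling sockets per U at the same core count is what doubles the thread density; a higher-core-count future part would raise `cores_per_cpu` and scale the result linearly.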

You will immediately notice that the top of each cold plate is smooth. This is for good reason: we are told that the Cray Shasta cooling includes both top- and bottom-mounted components.

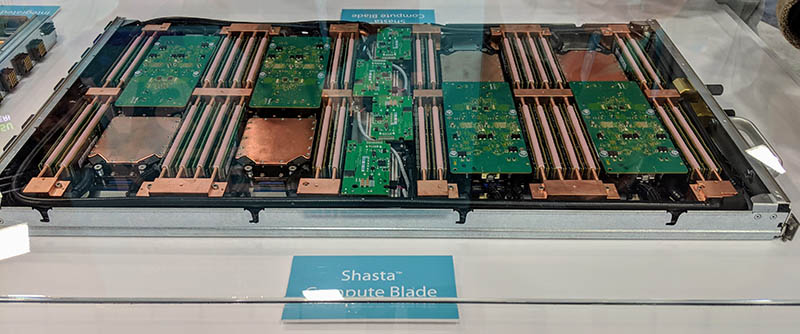

The PCBs atop the AMD EPYC sockets are the fabric uplink cards. You can see that they are cooled by the same CoolIT cold plates.

The Cray Shasta AMD EPYC platform is designed for 8 DIMMs per CPU, which means 64 DIMMs per system in 1DPC mode.

Final Words

This is where the industry is heading. With next-generation NVIDIA data center GPUs likely to exceed 350W and CPUs looking to move from the 180-205W range today to the 250-350W range in a few months, there is a looming question of how all of this will be cooled. What we do know is that we are moving to an era of more than 1000 threads per U, with on-roadmap products due in the not-too-distant future.

The consumer market is far ahead of the server market when it comes to liquid cooling.

My first liquid-cooled PC, built 20 years ago, used machined and soldered copper blocks and an aquarium pump.

I was recently digging into mineral oil systems. Fully submerged racks with greatly simplified systems looked appealing: no worries about coolant leaks, no costly high copper content ($$$), no exchangers, no concerns about ambient room temperature, humidity, etc. I can see that being a hit over this any day.

You just need to make sure the oil stays where it’s supposed to be and is kept clean, and have lots of paper towels on hand for techs doing swaps.

@Misha Engel The consumer market uses liquid cooling to reduce noise and the number of fans. The data center market uses liquid cooling to reduce costs.

If nothing else, this is where Moore’s law ends. Simply put, for more performance we need to pump in more watts; there is no longer a way around that through Moore’s law like there was in the ’90s and 2000s. According to PassMark, the old Sandy Bridge E5-2620 has about half the performance of the brand new E-2186G, yet the E5 is 32nm and the new E is 14nm+. Both have roughly the same TDP. My assumption, then, is that if we fix a TDP/power budget for the target platform, then whether on 14nm or 7nm we will stay at the same CPU speed for a very long time (minus application-specific coprocessors).
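The comparison in this comment boils down to performance per watt. A minimal sketch, taking the comment's figures at face value: the newer part scores roughly twice the older one and both have roughly the same TDP. The scores below are normalized placeholders, not actual PassMark results, and the 95W value is only an illustrative equal TDP for both parts.

```python
def perf_per_watt(score: float, tdp_watts: float) -> float:
    """Benchmark score divided by TDP (a rough efficiency proxy)."""
    return score / tdp_watts


def efficiency_gain(old_score: float, old_tdp: float,
                    new_score: float, new_tdp: float) -> float:
    """How many times more performance per watt the new part delivers."""
    return perf_per_watt(new_score, new_tdp) / perf_per_watt(old_score, old_tdp)


# Normalized placeholders: the old part has "about 1/2 perf" of the new one.
OLD_SCORE, NEW_SCORE = 1.0, 2.0
# Illustrative equal TDP for both parts ("+- the same TDP").
TDP_WATTS = 95.0

gain = efficiency_gain(OLD_SCORE, TDP_WATTS, NEW_SCORE, TDP_WATTS)
print(f"perf/W gain: {gain:.1f}x")  # perf/W gain: 2.0x
```

At a fixed power budget, delivered performance is budget times perf/W, so once perf/W gains slow down, the only remaining lever is a larger power budget, which is the cooling problem the article describes. TDP is a thermal design figure rather than measured power draw, so this is only a rough proxy.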

@Marcin Kowalski Your statement is true in my case. It took data centres a long time to figure out that they can reduce costs by going the liquid route.

@Misha Engel Cray’s first liquid-cooled computer went on sale 33 years ago, and it was sold commercially, whereas your system was a custom job.

10 years ago, liquid had a thermal limit. Now it is around 5x that old limit. The problems are cost ($$$ per gallon of the liquid) and installation, so it has to be marketed on TCO. There are also issues with submerged fiber connections.

2U air cooling can reliably handle 8x 200W+ TDP CPUs, 600W of NVMe, plus RAM and add-in cards. 4U designs can handle 6kW of cooling on air. Liquid may be more efficient, and more data centers are being built for it, but air is easy to deploy.
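Tallying the figures in this comment gives a rough sense of the power density air can handle. A sketch that counts only the components the comment quantifies; RAM and add-in cards are left out because no numbers are given, so the real 2U total would be higher.

```python
# Per-component power figures quoted in the comment (watts).
two_u_budget = {
    "cpus": 8 * 200,  # 8x 200W+ TDP CPUs (lower bound)
    "nvme": 600,      # 600W of NVMe
    # RAM and add-in cards omitted: no figures given.
}


def watts_per_u(total_watts: float, rack_units: int) -> float:
    """Average heat load each rack unit of the chassis must dissipate."""
    return total_watts / rack_units


two_u_total = sum(two_u_budget.values())
print(two_u_total)                  # 2200
print(watts_per_u(two_u_total, 2))  # 1100.0
print(watts_per_u(6000, 4))         # 1500.0
```

Normalizing to watts per U makes the 2U and 4U figures comparable: even before RAM and cards, the quoted 2U configuration is already over 1 kW per U on air.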

Those were the answers from AnandTech (top) and STH (bottom) on why submerged liquid cooling hasn’t caught on. (I wonder if the weight of the liquid is also a factor in DC design.)

Anyway, I have often wondered whether CPU/rack density cost was ever a problem. Does this type of cooling bring any reduction in power usage per rack? That is likely the main calculation in TCO.

@ed The reason for liquid cooling at scale is air conditioning. If you run computers at scale with air cooling, then the air needs to be cooled.

With liquid cooling, 140F is often a fine inlet liquid temperature. A rack, or a string of racks, would have connections to in-building water pipes and a heat exchanger. Fluid exit temperature may be 165F or higher. On the roof, radiators and fans can get rid of that heat without compressors. “Chilling” the water to 140F in a 100F ambient temperature is very cheap, and may use 1/10th of the power of chilling air.
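The 140F inlet and 165F outlet temperatures also determine how much water a rack needs. A minimal sketch of the steady-state heat balance Q = m_dot * c_p * dT, assuming plain water with c_p of about 4186 J/(kg·K) and a density of about 1 kg/L (real coolant mixes and hot water differ somewhat); the 1 kW load is a hypothetical example.

```python
WATER_CP_J_PER_KG_K = 4186.0  # specific heat of water (approximate)
WATER_DENSITY_KG_PER_L = 1.0  # simplification; hot water is slightly less dense


def f_delta_to_k(delta_f: float) -> float:
    """Convert a temperature *difference* from Fahrenheit to kelvin."""
    return delta_f * 5.0 / 9.0


def required_flow_lpm(heat_watts: float, inlet_f: float, outlet_f: float) -> float:
    """Litres per minute of water needed to carry heat_watts with the given rise."""
    delta_k = f_delta_to_k(outlet_f - inlet_f)
    mass_flow_kg_s = heat_watts / (WATER_CP_J_PER_KG_K * delta_k)
    return mass_flow_kg_s / WATER_DENSITY_KG_PER_L * 60.0


flow = required_flow_lpm(1000.0, inlet_f=140.0, outlet_f=165.0)
print(f"{flow:.2f} L/min per kW")  # 1.03 L/min per kW
```

Because the required flow scales linearly with heat load, a hypothetical 30 kW rack would need roughly 31 L/min at the same 25F temperature rise; a larger allowed rise reduces the flow proportionally.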

Three years of AC for a single ordinary 2-CPU computer may cost a few thousand dollars. With water cooling, that money is not spent and can instead be rolled into a nicer computer up front. With the lower operating costs, that nicer computer may stay in service a few years longer, further reducing the total cost of ownership.