Cisco UCS C125 M5 Node Overview

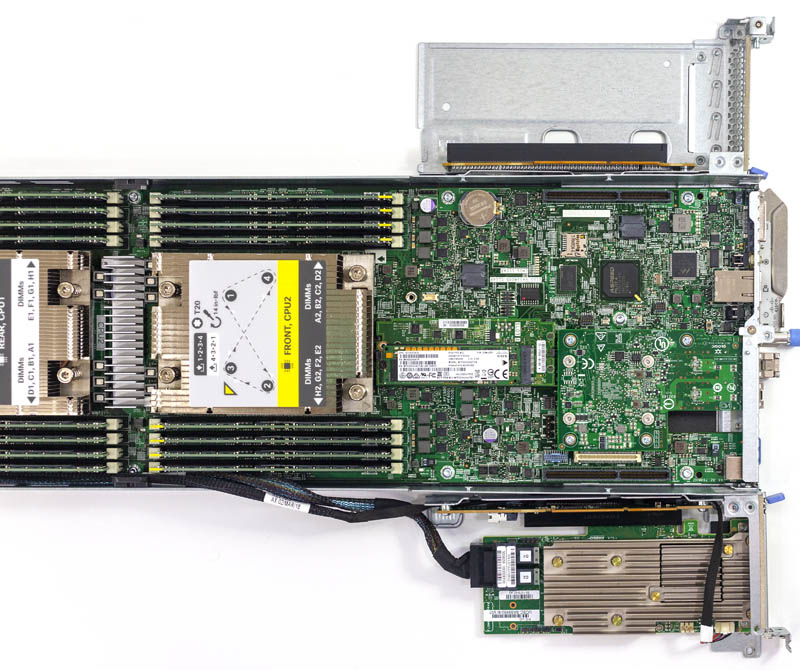

Powering the Cisco UCS C4200 are four compute nodes, known in this generation as the Cisco UCS C125 M5. Each node is designed to house two CPUs, sixteen DIMMs, three expansion cards, and a boot device. Some 2U4N designs have fans on each compute node. The Cisco UCS C4200 does not, which leads to lower power consumption.

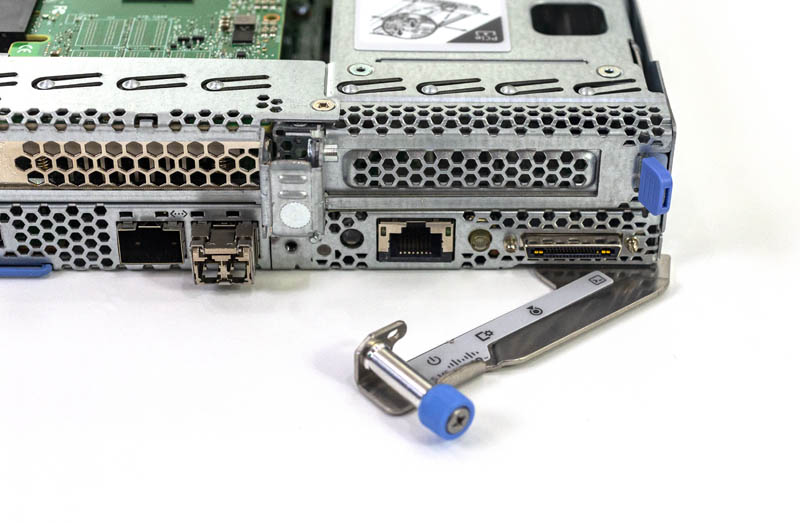

Starting at the rear of the node, the basic I/O consists of a 1GbE management LAN port as well as a USB 3.0 port. There is another high-density connector that is used for a KVM cart dongle providing I/O such as a VGA port. Cisco uses this connector to free valuable space on the small rear I/O faceplate.

The latching mechanism has a guide pin and is tool-less. If you need to service a Cisco UCS C125 M5, you can simply loosen the thumbscrew and remove the node using the mechanical lever. That allows you to keep the Cisco UCS C4200 chassis in the rack even when a node requires service.

This lever works exceptionally well. Many 2U4N designs use much less robust levers, instead relying upon the individual servicing the rack to forcibly pull the node out of the chassis via tabs. This lever provides the mechanical leverage to make removal easy and gives a clear signal when the node has been properly installed. There is even a guide pin to ensure proper alignment, and labels for the ports are integrated into the lever. These mechanical design details may seem small, but they have an enormous impact on a multi-node chassis server. They make it easier for technicians with less experience to work on the system.

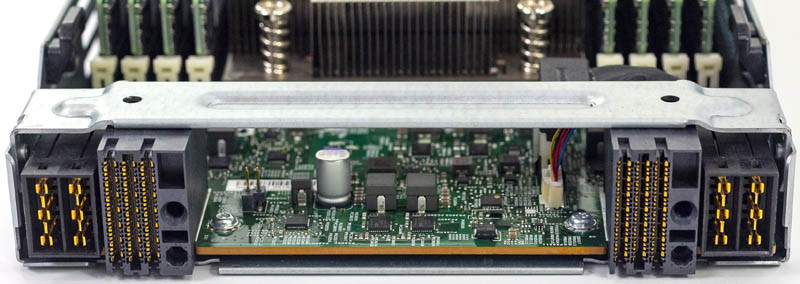

The other end of the node has the various connectors that connect to the chassis midplane. Again, these are high-density connectors because this is Cisco.

Those connectors serve another purpose. They help channel airflow directly into the two CPU heatsinks. After working on the Cisco UCS C125 M5 node alongside other 2U4N systems such as the Intel-based Supermicro BigTwin SYS-2029BZ-HNR or the AMD-based Gigabyte H261-Z60, we found that the lack of a mylar duct makes installation and removal much easier. Those ducts tend to catch if not seated exactly, and they do not like to stay seated in the right place. Cisco’s design fixes that challenge.

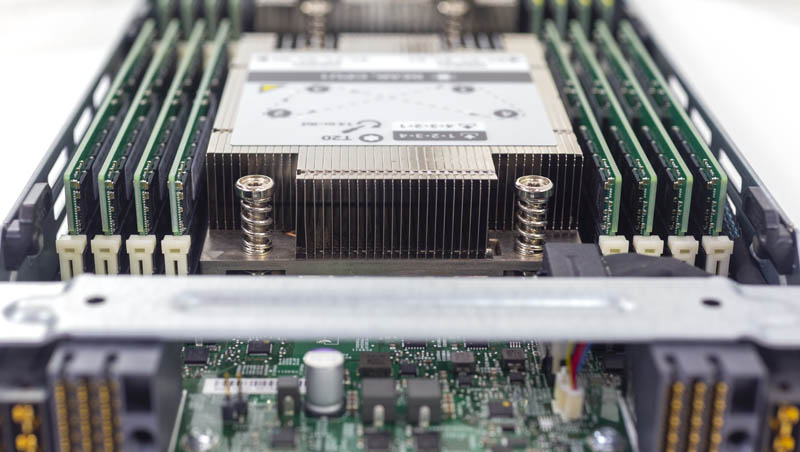

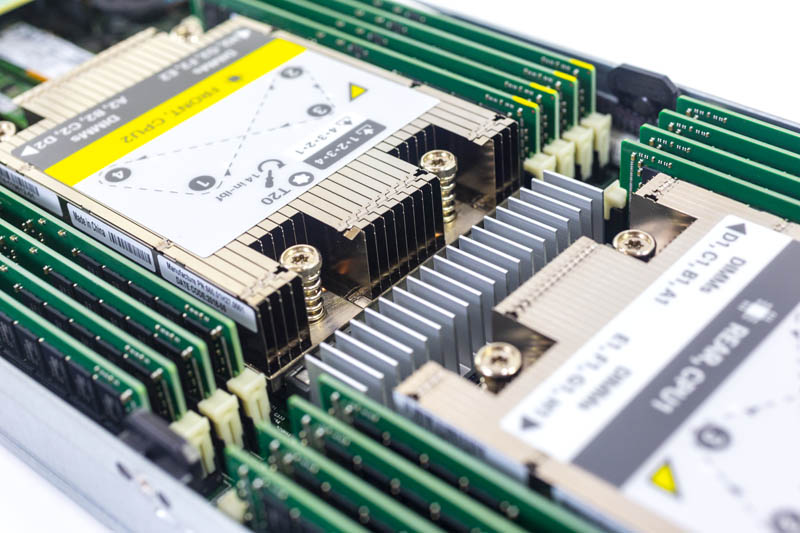

Onboard there are two AMD SP3 sockets, each of which houses an AMD EPYC 7000 series CPU. In the AMD EPYC 7001 series, that is up to 32 cores/ 64 threads per socket or 64 cores/ 128 threads per node. With the upcoming AMD EPYC 7002 series, those figures will double, leading to a maximum of 256 threads per node and 1024 threads in a 2U system.
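To make the thread math explicit, here is a minimal Python sketch of the arithmetic. The function and constant names are ours for illustration only. The 64 cores per socket figure for the EPYC 7002 series is simply the doubling described above.

```python
# Illustrative arithmetic only; names are hypothetical, not from any Cisco or AMD tool.
NODES_PER_CHASSIS = 4   # four C125 M5 nodes in a C4200
SOCKETS_PER_NODE = 2    # two SP3 sockets per node
THREADS_PER_CORE = 2    # SMT: two threads per core


def thread_counts(cores_per_socket):
    """Return thread totals per socket, per node, and per 2U chassis."""
    per_socket = cores_per_socket * THREADS_PER_CORE
    per_node = per_socket * SOCKETS_PER_NODE
    per_chassis = per_node * NODES_PER_CHASSIS
    return {"socket": per_socket, "node": per_node, "chassis": per_chassis}


epyc_7001 = thread_counts(32)  # top-end EPYC 7001: 32 cores per socket
epyc_7002 = thread_counts(64)  # assumed doubling: 64 cores per socket

print("EPYC 7001:", epyc_7001)
print("EPYC 7002:", epyc_7002)
```

With 32 cores per socket this gives 128 threads per node, and with 64 cores per socket it gives 256 threads per node and 1024 threads per chassis, matching the figures above.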

Each CPU socket is flanked by eight DDR4 memory slots, for sixteen in total. Using today’s 128GB LRDIMMs, that yields 2TB per node or 8TB per 2U chassis.
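The memory capacity figures follow the same pattern. A small sketch, again with hypothetical names of our own and assuming every slot is populated with the same DIMM size:

```python
# Illustrative capacity math; assumes all sixteen slots hold identical DIMMs.
DIMM_SLOTS_PER_SOCKET = 8
SOCKETS_PER_NODE = 2
NODES_PER_CHASSIS = 4
GB_PER_TB = 1024


def memory_capacity_tb(dimm_gb):
    """Return (TB per node, TB per 2U chassis) for a given DIMM size in GB."""
    slots_per_node = DIMM_SLOTS_PER_SOCKET * SOCKETS_PER_NODE
    node_tb = slots_per_node * dimm_gb / GB_PER_TB
    chassis_tb = node_tb * NODES_PER_CHASSIS
    return node_tb, chassis_tb


node_tb, chassis_tb = memory_capacity_tb(128)
print(f"{node_tb:g}TB per node, {chassis_tb:g}TB per chassis")
```

Swapping in 64GB DIMMs halves both numbers, which is a quick way to sanity check a planned configuration.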

The rear of the node is designed to maximize I/O. Onboard I/O is far from extensive, but there is a lot of flexibility in configuration. A handful of screws allows access to the entire area. We would like it if Cisco found a way to make this tool-less. At the same time, screws are a fact of life in modern 2U4N I/O areas, and Cisco is using fewer than most.

The card in the center houses an M.2 SSD, and a surprise as well. On the bottom edge of the card, one can install a second M.2 SSD for mirrored boot devices. Years ago, SATADOMs were prevalent in this space. Now, most vendors have dual M.2 boot solutions. Cisco needs to name this something “cool” like the Dell EMC “BOSS” M.2 card. Cool names aside, it is a functional design.

Onboard there is an OCP 2.0 NIC slot. This is Cisco with an open networking standard slot. Supermicro and others are still using proprietary mezzanine networking, so seeing an OCP NIC slot in a Cisco server was a refreshing change. In our test unit, we had a dual-port QLogic 25GbE OCP NIC.

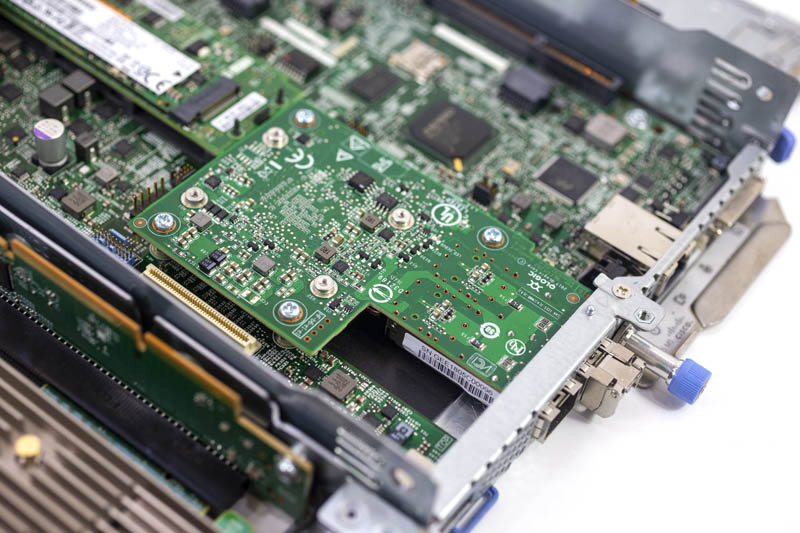

On each side of the Cisco UCS C125 M5 there is a PCIe x16 riser. The riser pictured below has a Broadcom SAS9460 SAS controller installed. Most vendors build their risers using standard PCIe x16 slots and a PCB that mounts the device parallel to the motherboard. Cisco is again pushing design standards here with its own electrical riser interface format, which is much more compact than a standard PCIe x16 connector. In the picture below, you can see the black PCIe x16 connector and the compact edge connector Cisco uses to mate to the motherboard.

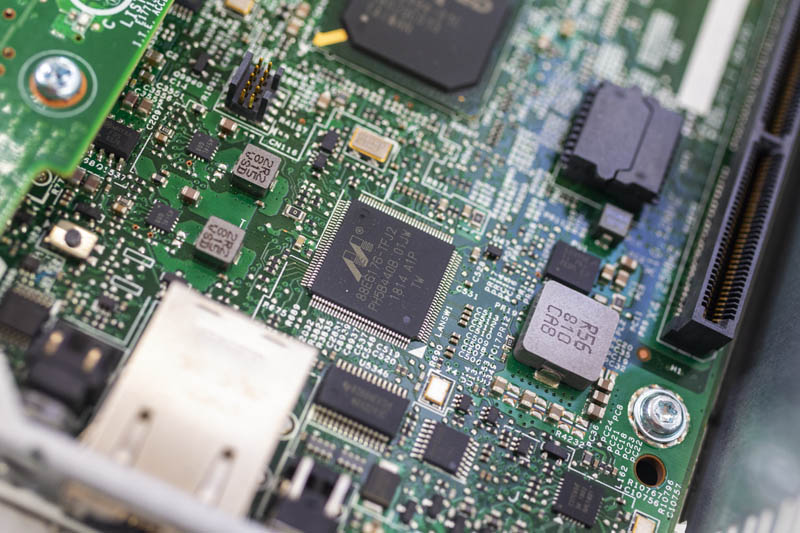

Perhaps the most unusual device on the server is the Marvell 88E6176-TFJ2 chip. The chip has an onboard PHY for the BMC networking, but it is more than a low-end NIC. It is actually a small Ethernet switch chip. If this were not a Cisco server, we would question this. Since it is a Cisco UCS machine, it makes perfect sense here.

The BMC is an ASPEED Pilot 4, not the ASPEED AST2500 popular on white box servers. Cisco’s IMC runs on the Pilot 4. Next, we are going to look at the Cisco UCS C4200 management and the software that runs on this controller.

I’m sure you’re right. Cisco has been doing this so long they’ve got to have a dedicated usability team.

I got one of these to test about 4 months ago and loved it. Used Cisco Intersight for deployment. It was a little rough with the C125’s but updates were literally coming out weekly and it handled my M4 and M5 servers perfectly. I plan on replacing my whole UCS B series with C4200 and Intersight once Rome is released. Really happy to see this review, was the final nail in the coffin for the B series for me.

I knew Supermicro and Gigabyte had 2U4N but never knew Cisco did until I read this.