Cavium ThunderX2 Socket Performance

When looking at Cavium ThunderX2 performance there are a few key concepts that need to be addressed. Although the Arm ecosystem has come a long way, the x86 ecosystem has generations of software optimizations including compilers such as Intel’s icc. The fact is, these compilers exist but on the Linux side, gcc is still the most popular (and free/ open source) compiler out there. Also, like we saw when AMD re-entered the market with EPYC 7000, alternatives to Intel do not necessarily need to be the best across every workload. They need some workloads where they perform well and they need ballpark performance from the remainder of applications. With that context, we are going to look a bit at gcc results versus compiler optimized results. We are also going to look at some comparative gcc results.

SPECrate2017_int_peak

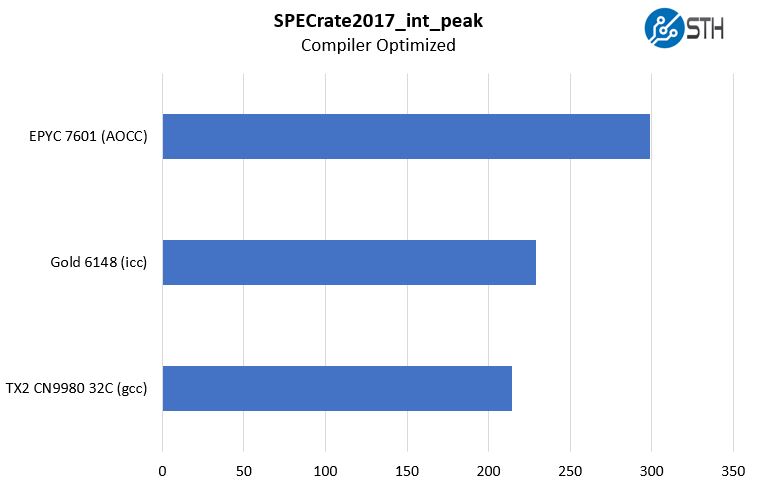

The first workload we wanted to look at is SPECrate2017_int_peak performance. Specifically, we wanted to show the difference between published Intel Xeon icc and AMD EPYC AOCC results and ThunderX2 with gcc. We expect that Cavium will get slightly better results when they actually publish but this should give you an idea regarding dual socket results for the options. First, we have compiler optimized results:

Here you can see, the Intel and AMD results are very strong using custom compilers. Again, the ThunderX2 CN9980 is about half the cost per CPU of the Intel and AMD options so even using highly tuned compilers, ThunderX2 is price competitive.

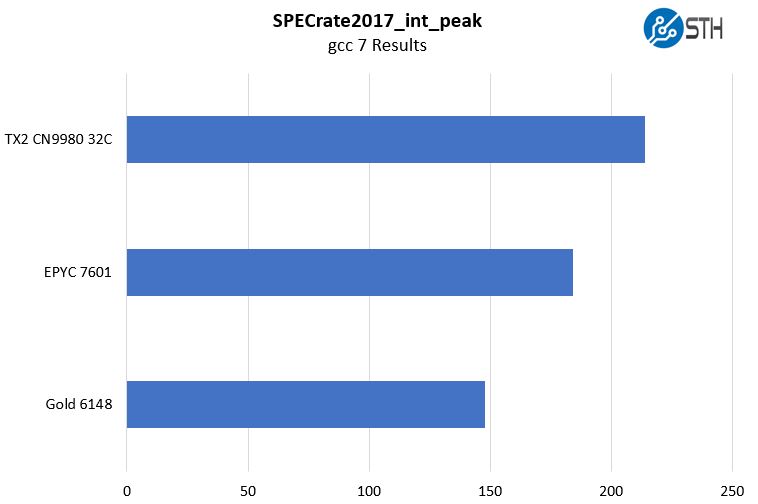

Officially, AMD and Intel do not support our efforts to run SPEC CPU2017 on gcc since using their optimized compilers give vastly superior results. Some say these compilers have benchmark specific optimizations but we are going to leave that for debate elsewhere. Here is what happens when we run the same tests using gcc and using -Ofast optimization levels, not the -O2 that a lot of the Arm vendors like to show:

With gcc, ThunderX2 CN9980 rises to the top. That is impressive because, again, while these are not official published results, they are likely more representative of many workloads that use gcc as their compiler. While it may not be the most optimized, gcc is the most commonly used compiler and is open source. This is a case where it is not the best for performance, but where the wide adoption occurred in the market.

Cray has their own compiler that works with ThunderX2 and provides better results than our gcc numbers. We do not have access to that. We also expect published SPEC CPU2017 results to be slightly higher than what we are getting.

STREAM Triad Memory Bandwidth

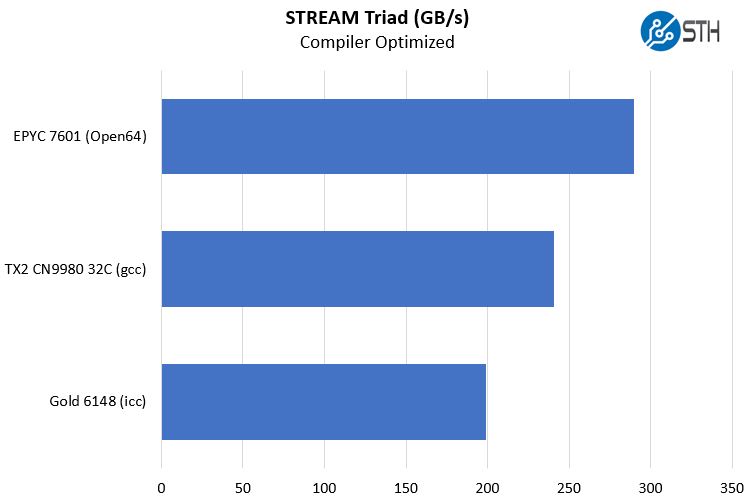

When we look at memory bandwidth, STREAM by John D. McCalpin is what the industry uses (learn more here.) Since it is an industry standard workload, it is one where we see compiler optimizations. Here is what a similar view looks like for compiler optimized STREAM Triad results:

We wanted to take a pause here and show that the results here highlight one important fact: ThunderX2 is an 8-channel memory controller design running at DDR4-2666 speeds. The Intel Xeon Gold is only a 6 channel design so even with compiler optimizations, ThunderX2 remains ahead.

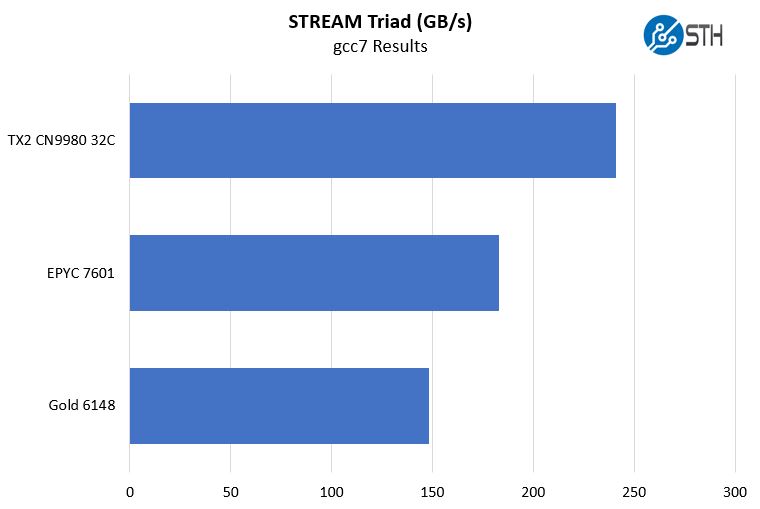

When we look at a baseline from a common compiler, gcc, the picture again changes:

The Cavium ThunderX2 CN9980 now comes out ahead. What is extraordinarily interesting is that the Intel Xeon Gold 6148 results fall by almost exactly 25%. It is almost like icc is finding a way to perform three operations instead of four to run this benchmark, perhaps optimizing how it writes to caches.

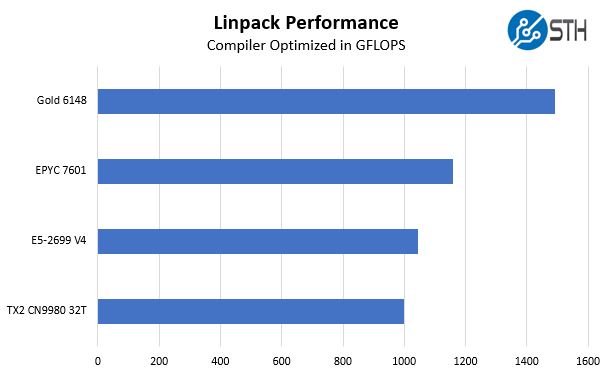

Cavium ThunderX2 Linpack Performance

Linpack is perhaps the most used HPC benchmark. We wanted to give some idea of where the ThunderX2 is. We also want to caveat this on two counts. First, the HPC space is rife with using custom compilers. Here using tools like icc are essentially a must, and we do not have access to Cray’s Arm compiler so we are using gcc for ThunderX2. Second, are using 32 threads per CPU in this benchmark for ThunderX2 since 4-way SMT hurts performance and HPC shops that run these types of workloads run SMT off.

The ThunderX2 performs well. AVX-512 and icc simply are awesome here which help Intel platforms significantly. Cavium does well but the company let us know that we have room for improvement. Our standard is to run with SMT on since that is what most of the non-HPC environments look like. This is a case where having 256 threads simply is too much. We also ran the test with 32 threads, or SMT off which yielded a solid improvement. The flip side to this is that we did not have the same level of optimized binaries that some of the custom Arm compilers, e.g. from Cray, would provide in this test. The HPC sites that focus on Linpack we expect will have access to vendor tools and therefore get better figures than we have here.

I’m through page 3. I’m loving the review so far but I need to run to a meeting.

Looks like a winner. Are you guys doing more with TX2?

It’s crazy Cavium is doing what Qualcomm can’t. All that money only to #failhardcentriq

Cool chart with the 24 28 30 and 32 core models

Cavium needs to fix their dev station pricing. $10k+ for two $1800 cpus in a system is too much. Their price performance is undermined by their system pricing

Read the whole thing, very impressed with the TX2 performance and pricing, think i’m going to try one out. But was a bit bummed out when i found out on page 8, the most important thing, power usage, wasn’t properly covered and compared to the Intel and AMD systems :(

Welcome competition, always good to see that there is pressure on the market leader.

Microsoft is also working on an ARM version for windows, so this can go the right way…

I’m very confused by some of what you wrote and the exact testing setups of these platforms is extremely unclear. To cite just one example of Linpack test where you state:

“Our standard is to run with SMT on since that is what most of the non-HPC environments look like. This is a case where having 256 threads simply is too much. We also ran the test with 32 threads per CPU, or SMT off which yielded a solid improvement. ”

On a 4-way SMT system you get 256 threads by operating 64 cores. You claim that the CPU only has at most 32 cores and in the same statement you re-tested at 32 threads. So…. what exactly did you test? A 32 core CPU that *cannot* have 256 threads? A two-socket 64-core system that can have 256 SMT threads but that was then dropped to a single-socket configuration with only a single 32 core processor?

Please put in a clear and unambiguous table that provides the *real* hardware configurations of all the test systems.

That means:

1. How many sockets were in-use. Were *ALL* the systems dual socket? All single socket? A mixture? I can’t tell based on the article!

2. Full memory configuration. Yes I know about the channel differences, but what are the details.

3. That’s just a start. The article jumps from vague slides about general architecture to out-of-context benchmark results too quickly.

Competition in the server industry great!

Don – 32 threads per cpu means 64 threads total right? 2x 32 isn’t that hard.

I don’t think this convinced me to buy them. But I’ll at least be watching arm servers now. We run a big VMware cluster so I’d have a hard time convincing my team to buy these since we can’t redeploy in a pinch to our other apps.

We’ll be discussing TX 2 at our next staff meeting. Where can we get system pricing to compare to Intel and AMD?

Can you do more about using this as Ceph or ZFS or something more useful? Can you HCI with this?

Love the write-up. You guys have grown so much and it shows in how much you’re covering on this which is still a niche architecture in the market.

Nice write-up, with plenty of details, on the newly launched. Congrats to Cavium.

Cavium Arm server processor launch, suddenly Microsoft shows up and reiterates it still wants >50% of data center capacity to be Arm powered. And it’s loving Cavium’s Thunder X2 Arm64 system. Together designed two-socket Arm servers…

Looks like cavium is taking on Intel with armv8 workstation. Same processor as used by cray. Interesting. Comparing to Xeon ThunderX2 is good in all aspects like performance, band width, No.of cores, sockets, power usage etc.

Competition in silicon is good for the market.

CaviumInc steps up with amazing 2.2GHz 48-core ThunderX2 part, along with @Cray and @HPE Apollo design wins, and @Microsoft and @Oracle SW support. Early days for #ARM server, but compelling story being told.

ThundwrX2 Arm-based chips are gaining more firepower for the cloud.

The Qualcomm Centriq 2400 motherboard had 12 DDR4 DIMM slots and a single >> 48 core CPU.

The company also showed off a dual socket Cavium ThunderX 2. That system had over >> 100 cores and can handle gobs of memory

“With list prices for volume SKUs (32 core 2.2GHz and below) ranging from $1795 to $800, the ThunderX2 family offers 2-4X better performance per dollar compared to Qualcomm Centriq 2400 and Xeon…”

Cavium continues to make inroads with the ThunderX2 @Arm-compatible platform..

Nice Coverage. 40 different versions of the chip that are optimized for a variety of workloads, including compute, storage and networking. They range from 16-core, 1.6GHz SoCs to 32-core, 2.5GHz chips ranging in price from $800 to $1,795. Cavium officials said the chips compete favorably with Intel’s “Skylake” Xeon processors and offer up to three times the single-threaded performance of Cavium’s earlier ThunderX offerings.

The ThunderX2 SoCs provide eight DDR4 memory controllers and 16 DIMMS per socket and up to 4TB of memory in a dual-socket configuration. There also are 56 lanes of integrated PCIe Gen3 interfaces, 14 integrated PCIe controllers and integrated SATAv3, GPIOs and USB interfaces.

Kudos to Cavium…

Those power numbers look horrendous. A comparable intel system would be less than half that draw. In fact, 800W is the realm 2P IBM POWER operates in. I get that it’s unbinned silicon and not latest firmware but I can’t see all that accounting for ~50-75W tops. My guess is Broadcom didn’t finish the job before it was sold to Cavium, and if Cavium had to launch it now lest they come up against the next x86 server designs (likely starting to sample late 2018).

I guess when Patrick gets binned silicon with production firmware, he’ll also have to redo the performance numbers because it’s quite possible that the perf numbers will likely take some hit. 800W! At least it puts paid to the nonsense about ARM ISA being inherently power efficient. Power efficiency is all about implementation.

The performance looks quite good, but yeah the 800W are a show stopper…

The xeons and epyc processors consume way less than that.

I doubt they can get to the power consumption of the xeons and epyc without lowering quite a lot the max frequency and voltages accordingly. If they can do it, then that’s great. But I have some doubts.

For the STREAMS benchmark (“Cavium ThunderX2 Stream Triad Gcc7”) I assume the Intel compiler is leveraging the FMA instructions, giving them the boost in performance.

Where is performance for dolar graph? Without it is this just lost of useless results..

RuThaN – how would you propose performance per dollar? All SKUs used in the performance parts have list prices that are easy to get. Discounts, of course, are a reality in enterprise gear. The ThunderX2 is sub $1800 which is by fart the least expensive.

Beyond the chips, what system/ configuration are you using them in? How do you factor in the additional memory capacity of ThunderX2 versus Skylake-SP, will that mean fewer systems deployed?

What cost for power/ rack/ networking should we use for the TCO analysis?

I do not think that performance/ dollar at the CPU level is a metric those outside of the consumer space look at too heavily versus at least at the system cost. For example, this is a fairly basic TCO model we do: https://www.servethehome.com/deeplearning11-10x-nvidia-gtx-1080-ti-single-root-deep-learning-server-part-1/

Failure to publish measured power during *every* benchmark run is evasive. This is critical data, for the spread of workloads, and allows calculating energy efficiency.

Please be honest and report the data. Caveats are fine but failure to report is not fine.

Richard Altmaier – thank you for sharing your opinion. There are two components for sure, performance and power consumption. Both are certainly important, but for this review, performance seemed ready, power did not due to a variety of noted factors.

As mentioned, the test system we have is fairly far from what we would consider comparable to the AMD/ Intel platforms that have been in our labs for more than a year. We do enough of these that it is fairly to see that power is higher than it should be. We do not want to publish numbers we are not confident in, lest they get used by competitors.

We also mentioned that there will be a follow-up piece to this. The other option was to publish zero power numbers. Despite your opinion, performance alone is a compelling story. Unlike the x86 side, the ARM side has never had a platform that can hit this level of performance which makes the raw performance numbers quite important themselves.

BTW – There was a well-known Intel executive also named Richard Altmaier.

Would love to see the commands used to generate these results, especially on STREAM on the 8180. I’ve not seen more than ~92GB/s with 768GB installed across all 6 channels with OpenMP parallelization across all 56 threads…